What's new around the Internet

| Src | Date (GMT) | Title | Description | Tags | Stories | Notes |

| 2024-02-14 17:30:42 | C can be memory safe, part 2 (direct link) |

This post from last year was posted to a forum, so I thought I'd write up some rebuttals to their comments. The first comment is by David Chisnall, creator of CHERI C/C++, who proposes that we can solve the problem with CPU instruction-set extensions. It's a good idea, but after 14 years, CPUs haven't had their instruction sets upgraded. Even mainstream RISC-V processors haven't been created using these extensions. Chisnall: "If your safety requires you to insert explicit checks, it's not safe." This is true from one point of view, false from another. My proposal includes compilers spitting out warnings whenever bounds information doesn't exist. C is full of problems in theory that don't exist in practice because the compiler spits out warnings telling programmers to fix the problem. Warnings can also note cases where programmers have probably made mistakes. We can't get perfect guarantees, because programmers can still make mistakes, but we can certainly achieve "good enough". Chisnall: "... thread safety ..." I'm not sure I understand the comment. I understand that CHERI can guarantee the atomicity of bounds checking, which would otherwise require (interruptible) instructions. There are some cases where this is a problem, but the C proposal would be no worse than other languages such as Rust. Chisnall: "Temporal safety ..." Many of Rust's "ownership" techniques can be applied to C with these annotations, namely marking which variables own allocated memory and which merely borrow it. I've looked at a lot of famous use-after-free and double-free bugs, and most can be trivially fixed by annotation.
Chisnall: "If you're writing a blog post and have never tried to make a large (million lines or more) C codebase memory safe, you're probably underestimating the difficulty by at least an order of magnitude." I'm both a programmer who has written a million lines of code in my life and a hacker with decades of experience looking for such bugs. The goal isn't to pursue the ideal of a 100% safe language, but to get rid of 99% of safety errors. Being 1% less safe makes the goal an order of magnitude easier to achieve. snej: "This post seems to embody the common engineer trait of seeing any problem you haven't personally worked on as trivial. Sure, bro, you'll add a few patches to Clang and GCC and with these new attributes our C code will be safe. It'll only take a few weeks and then nobody will need Rust anymore." But I've spent decades working on this. The comment embodies the common trait of not realizing how much thought and expertise lies behind the post. A few patches to Clang and GCC will make C safer. The solution is much less safe than Rust. In fact, my proposal makes code more interoperable with, and translatable to, Rust. Right now, translating C to Rust just creates a bunch of 'unsafe' code that has to be cleaned up. With such annotations, added in a refactoring step using existing test frameworks, the result is code that can then be translated automatically and safely to Rust. As for the existing Clang/GCC attributes, there are only a couple that match the macros I'm propo | ★★ | |||

| 2023-02-01 13:30:40 | C can be memory-safe (direct link) | The idea of memory-safe languages is in the news lately. C/C++ is famous for being the world's system language (that runs most things) but also infamous for being unsafe. Many want to solve this by hard-forking the world's system code, either by changing C/C++ into something that's memory-safe, or rewriting everything in Rust. Forking is a foolish idea. The core principle of computer-science is that we need to live with legacy, not abandon it. And there's no need. Modern C compilers already have the ability to be memory-safe, we just need to make minor -- and compatible -- changes to turn it on. Instead of a hard-fork that abandons legacy systems, this would be a soft-fork that enables memory-safety for new systems. Consider the most recent memory-safety flaw in OpenSSL. They fixed it by first adding a memory-bounds, then putting every access to the memory behind a macro `PUSHC()` that checks the memory-bounds. A better (but currently hypothetical) fix would be something like the following: `size_t maxsize CHK_SIZE(outptr) = out ? *outlen : 0;` This would link the memory-bounds `maxsize` with the memory `outptr`. The compiler can then be relied upon to do all the bounds checking to prevent buffer overflows; the rest of the code wouldn't need to be changed. An even better (and hypothetical) fix would be to change the function declaration like the following: `int ossl_a2ulabel(const char *in, char *out, size_t *outlen CHK_INOUT_SIZE(out));` That's the intent anyway, that `*outlen` is the memory-bounds of `out` on input, and receives a shorter bounds on output. This specific feature isn't in compilers. But gcc and clang already have other similar features. They've only been halfway implemented. This feature would be relatively easy to add. I'm currently studying the code to see how I can add it myself. I could just mostly copy what's done for the `alloc_size` attribute.
But there's a considerable learning curve, so I'd rather just persuade an existing developer of gcc or clang to add the new attributes for me. Once you give the programmer the ability to fix memory-safety problems like the solution above, you can then enable warnings for unsafe code. The compiler knew the above code was unsafe, but since there's no practical way to fix it, it's pointless nagging the programmer about it. With this new feature come warnings about failing to use it. In other words, it becomes compiler-guided refactoring. Forking code is hard, refactoring is easy. As the above function shows, the OpenSSL code is already somewhat memory safe, just based upon the flawed principle of relying upon diligent programmers. We need the compi |

★★★ | |||

| 2023-01-25 16:09:34 | I'm still bitter about Slammer (direct link) | Today is the 20th anniversary of the Slammer worm. I'm still angry over it, so I thought I'd write up my anger. This post will be of interest to nobody, it's just me venting my bitterness and get off my lawn!! Back in the day, I wrote "BlackICE", an intrusion detection and prevention system that ran as both a desktop version and a network appliance. Most cybersec people from that time remember it as the desktop version, but the bulk of our sales came from the network appliance. The network appliance competed against other IDSs at the time, such as Snort, an open-source product. For much of the cybersec industry, IDS was Snort -- they had no knowledge of how intrusion-detection would work other than this product, because it was open-source. My intrusion-detection technology was radically different. The thing that makes me angry is that I couldn't explain the differences to the community because they weren't technical enough. When Slammer hit, Snort and Snort-like products failed. Mine succeeded extremely well. Yet, I didn't get the credit for this. The first difference is that I used a custom poll-mode driver instead of interrupts. This is now the norm in the industry, such as with Linux NAPI drivers. The problem with interrupts is that a computer could handle fewer than 50,000 interrupts-per-second. If network traffic arrived faster than this, then the computer would hang, spending all its time in the interrupt handler doing no other useful work. By turning off interrupts and instead polling for packets, this problem is prevented. The cost is that if the computer isn't heavily loaded by network traffic, then polling wastes CPU and electrical power.
Linux NAPI drivers switch between them, using interrupts when traffic is light and polling when traffic is heavy. The consequence is that a typical machine of the time (dual Pentium IIIs) could handle 2-million packets-per-second running my software, far better than the 50,000 packets-per-second of the competitors. When Slammer hit, it filled a 1-gbps Ethernet with 300,000 packets-per-second. As a consequence, pretty much all other IDS products fell over. Those that survived were attached to slower links -- 100-mbps was still common at the time. An industry luminary even gave a presentation at BlackHat saying that my claimed performance (2-million packets-per-second) was impossible, because everyone knew that computers couldn't handle traffic that fast. I couldn't combat that, even by explaining with very small words "but we disable interrupts". Now this is the norm. All network drivers are written with polling in mind. Specialized drivers like PF_RING and DPDK do even better. Network appliances are now written using these things. Now you'd expect something like Snort to keep up and not get overloaded with interrupts. What makes me bitter is that back then, this was inexplicable magic. I wrote an article in PoC||GTFO 0x15 that shows how my portscanner masscan uses this driver, if you want more info. The second difference with my product was how signatures were written. Everyone else used signatures that triggered on pattern-matching. Instead, my technology included protocol-analysis, code that parsed more than 100 protocols. The difference is that when there is an exploit of a buffer-overflow vulnerability, pattern-matching searched for patterns unique to the exploit. In my case, we'd measure the length of the buffer, triggering when it exceeded a certain length, finding any attempt to attack the vulnerability. The reason we could do this was through the use of state-machine parsers. Such analysis was considered heavy-weight and slow, which is why others avoided it.
State-machines are faster than pattern-matching, many times faster. Better and faster. Such parsers are no | Vulnerability Guideline | ★★ | ||
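
The contrast between exploit-specific pattern-matching and measuring a field's length with a state-machine parser can be sketched as follows. This is only an illustration under stated assumptions: the toy protocol (a `0x04` byte, then a name, then a `0x00` terminator), the 16-byte limit, and the names `NameLengthParser` and `pattern_match` are all hypothetical and are not taken from BlackICE or Snort.

```python
# Toy protocol (an assumption for illustration only): a request is the byte
# 0x04, followed by a name, terminated by a 0x00 byte.
MAX_NAME = 16  # hypothetical size of the buffer the vulnerable server copies into


class NameLengthParser:
    """Byte-at-a-time state machine that measures the length of the name field."""

    START, NAME, DONE, SKIP = range(4)

    def __init__(self) -> None:
        self.state = self.START
        self.length = 0
        self.alert = False

    def feed(self, data: bytes) -> None:
        """Consume one fragment; state is kept between calls."""
        for byte in data:
            if self.state == self.START:
                # Only requests of type 0x04 carry the name field.
                self.state = self.NAME if byte == 0x04 else self.SKIP
            elif self.state == self.NAME:
                if byte == 0x00:
                    self.state = self.DONE
                else:
                    self.length += 1
                    if self.length > MAX_NAME:
                        self.alert = True  # too long for the buffer, whatever the bytes are
            # DONE and SKIP ignore the remaining bytes.


def pattern_match(data: bytes, signature: bytes) -> bool:
    """Exploit-specific signature: fires only if these exact bytes appear."""
    return signature in data


# Two attacks on the same (hypothetical) overflow, using different filler bytes.
exploit_a = b"\x04" + b"A" * 100 + b"\x00"
exploit_b = b"\x04" + b"\x90" * 100 + b"\x00"
```

A signature written for the first exploit (say `b"AAAA"`) misses the second, while the length check flags both, and because the parser keeps its state between `feed()` calls it also works when the request arrives in several fragments.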

| 2022-10-23 16:05:58 | The RISC Deprogrammer (direct link) | I should write up a larger technical document on this, but in the meantime, here is this short(-ish) blogpost. Everything you know about RISC is wrong. It's some weird nerd cult. Techies frequently mention RISC in conversation, with other techies nodding their heads in agreement, but it's all wrong. Somehow everyone has been mind controlled to believe in wrong concepts. An example is this recent blogpost which starts out saying that "RISC is a set of design principles". No, it wasn't. Let's start from this sort of viewpoint to discuss this odd cult. What is RISC? Because of the march of Moore's Law, every year, more and more parts of a computer could be included onto a single chip. When chip densities reached the point where we could almost fit an entire computer on a chip, designers made tradeoffs, deciding what needed to be included, what needed to change, and what needed to be discarded to make the fit happen. RISC is a set of creative tradeoffs, meaningful at the time (early 1980s), but which were meaningless by the late 1990s. The interesting parts of CPU evolution are the roughly four decades between 1964, with IBM's System/360 mainframe, and 2007, with Apple's iPhone. The issue was a 32-bit core with memory-protection allowing isolation among different programs with virtual memory. These were real computers, from the modern perspective: real computers have at least 32 bits and an MMU (memory management unit). The mid-1970s saw the release of the Intel 8080 (1974) and the MOS 6502 (1975), but these were 8-bit systems without memory protection. This was at the point of Moore's Law where we could get a useful CPU onto a single chip. In the year 1977 we saw DEC release its VAX minicomputer, having a 32-bit CPU with an MMU. Real computing had moved from insanely expensive mainframes filling entire rooms to less expensive devices that merely filled a rack.
But the VAX was way too big to fit onto a chip at this time. The really interesting evolution of real computing happened in 1980 with Motorola's 68000 (aka 68k) processor, essentially the first microprocessor that supported real computing. But this comes with caveats. Making a microprocessor required creative work to decide what wasn't included. In the case of the 68k, it had only a 16-bit ALU. This meant adding two 32-bit registers required passing them twice through the ALU, adding each half separately. Because of this, many call the 68k a 16-bit rather than 32-bit microprocessor. More importantly, only the lower 24 bits of the registers were valid for memory addresses. Since it's memory addressing that makes a real computer "real", this is the more important measure. But 24 bits allows for 16 megabytes of memory, which is all that anybody could afford to include in a computer anyway. It was more than enough to run a real operating system like Unix. In contrast, 16-bit processors could only address 64 kilobytes of memory, and weren't really practical for real computing. The 68k didn't come with an MMU, but it allowed an extra MMU chip. Thus, the early 1980s saw an explosion of workstations and servers consisting of a 68k and an MMU. The most famous was Sun Microsystems, launched in 1982, with their own custom-designed MMU chip. Sun and its competitors transformed the industry running Unix. Many point to IBM's PC from 1981 as the transformative moment in computer history, but these were non-real 16-bit systems that struggled with more than 64k of memory. IBM PC computers wouldn't become real until 1993 with Microsoft's Windows NT, supporting full 32 bits, memory-protection, and pre-emptive multitasking. But except for Windows itself, the rest of computing is dominated by the Unix heritage. The phone in your hand, whether Android or iPhone, is a Unix compu | Guideline | Heritage | ||
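
The two pieces of arithmetic in this excerpt, a 32-bit add done as two passes through a 16-bit ALU and the memory reachable with 24-bit versus 16-bit addresses, can be checked with a short sketch. The function names are made up for illustration, and the code models only the arithmetic, not the 68k's actual microarchitecture.

```python
MASK16 = 0xFFFF


def add32_with_16bit_alu(a: int, b: int) -> int:
    """Add two 32-bit values using two passes through a 16-bit adder."""
    # First pass: add the low halves and keep the carry out of bit 15.
    low = (a & MASK16) + (b & MASK16)
    carry = low >> 16
    # Second pass: add the high halves plus the carry from the first pass.
    high = ((a >> 16) & MASK16) + ((b >> 16) & MASK16) + carry
    # Recombine; anything above 32 bits is discarded, as in a 32-bit register.
    return ((high & MASK16) << 16) | (low & MASK16)


def addressable_bytes(address_bits: int) -> int:
    """Number of distinct byte addresses for a given address width."""
    return 2 ** address_bits


MIB = 1024 * 1024
KIB = 1024
mem_24bit = addressable_bytes(24) // MIB  # 16 (megabytes)
mem_16bit = addressable_bytes(16) // KIB  # 64 (kilobytes)
```

The carry passed from the first pass to the second is the reason the two halves cannot simply be added independently.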

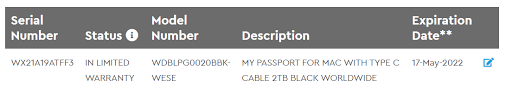

| 2022-07-03 19:02:22 | DS620slim tiny home server (direct link) | In this blogpost, I describe the Synology DS620slim. Mostly these are notes for myself, so when I need to replace something in the future, I can remember how I built the system. It's a "NAS" (network attached storage) server that has six hot-swappable bays for 2.5 inch laptop drives. That's right, laptop 2.5 inch drives. It makes this a tiny server that you can hold in your hand. The purpose of a NAS is reliable storage. All disk drives eventually fail. If you stick a USB external drive on your desktop for backups, it'll eventually crash, losing any data on it. A failure is unlikely tomorrow, but a spinning disk will almost certainly fail some time in the next 10 years. If you want to keep things, like photos, for the rest of your life, you need to do something different. The solution is RAID, an array of redundant disks such that when one fails (or even two), you don't lose any data. You simply buy a new disk to replace the failed one and keep going. With occasional replacements (as failures happen) it can last decades. My older NAS is 10 years old and I've replaced all the disks, one slot replaced twice. This can be expensive. A NAS requires a separate box in addition to lots of drives. In my case, I'm spending $1500 for 18 terabytes of disk space that would cost only $400 as an external USB drive. But amortized for the expected 10+ year lifespan, I'm paying $15/month for this home system. This unit is not just disk drives but also a server. Spending $500 just for a box to hold the drives is a bit expensive, but the advantage is that it's also a server that's powered on all the time.
I can set up tasks to run on a regular basis that would break if I tried to regularly run them on a laptop or desktop computer. There are lots of do-it-yourself solutions (like the Radxa Taco carrier board for a Raspberry Pi 4 CM running Linux), but I'm choosing this solution because I want something that just works without any hassle, that's configured for exactly what I need. For example, eventually a disk will fail and I'll have to replace it, and I know now that this is something that will be effortless when it happens in the future, without having to relearn some arcane Linux commands that I forgot years ago. Despite this, I'm a geek who obsesses about things, so I'm still going to do possibly unnecessary things, like upgrading hardware: memory, network, and fan for an optimized system. Here are all the components of my system: $500 - DS620slim unit; $1000 - 6x Seagate Barracuda 5TB 2.5 inch laptop drive (ST5000LM000); $100 - 2x Crucial 8GB DDR3 SODIMMs (CT2K102464BF186D); $30 - 2.5gbps Ethernet USB (CableCreation B07VNFLTLD); $15 - Noctua NF-A8 ULN ultra silent fan; $360 - WD Elements 18TB USB drive (WDBWLG0180HBK-NESN) |

Ransomware Guideline | |||
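
One way the numbers in this excerpt could fit together (six 5TB drives, surviving up to two failures, 18 terabytes of space) is sketched below. Two things here are assumptions rather than statements from the post: that two drives' worth of capacity goes to redundancy, and that the 18 figure is the capacity expressed in binary units (TiB). Filesystem overhead is ignored, and the function names are hypothetical.

```python
def usable_terabytes(drives: int, drive_tb: float, redundant_drives: int) -> float:
    """Capacity left after setting aside whole drives' worth of redundancy."""
    return (drives - redundant_drives) * drive_tb


def tb_to_tib(terabytes: float) -> float:
    """Convert decimal terabytes (10**12 bytes) to binary tebibytes (2**40 bytes)."""
    return terabytes * 10**12 / 2**40


# Assumed layout: 6 drives of 5 TB each, two drives' worth of redundancy.
usable_tb = usable_terabytes(drives=6, drive_tb=5, redundant_drives=2)
usable_tib = tb_to_tib(usable_tb)
```

Under those assumptions the usable space is 20 decimal terabytes, which an operating system reporting in binary units would show as a little over 18.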

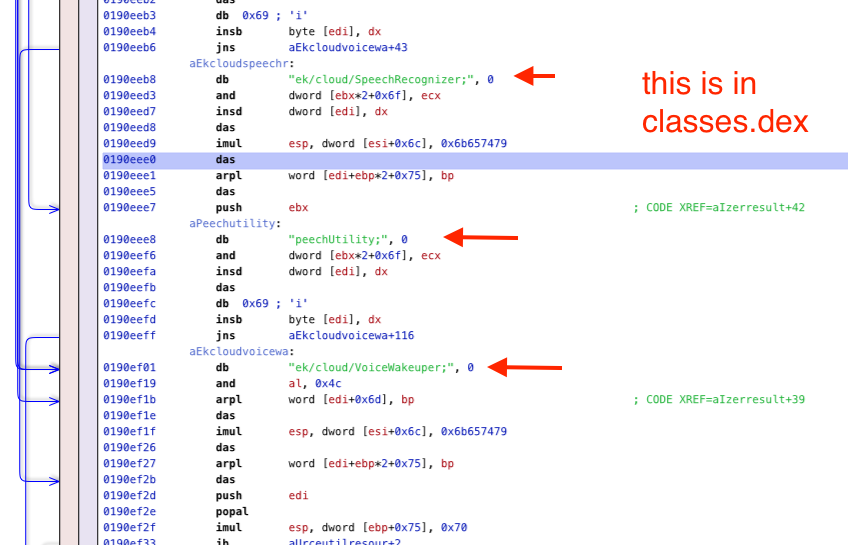

| 2022-01-31 15:33:58 | No, a researcher didn't find Olympics app spying on you (direct link) | For the Beijing 2022 Winter Olympics, the Chinese government requires everyone to download an app onto their phone. It has many security/privacy concerns, as CitizenLab documents. However, another researcher goes further, claiming his analysis proves the app is recording all audio all the time. His analysis is fraudulent. He shows a lot of technical content that looks plausible, but nowhere does he show anything that substantiates his claims. Average techies may not be able to see this. It all looks technical. Therefore, I thought I'd describe one example of the problems with this data -- something the average techie can recognize. His "evidence" consists of screenshots from reverse-engineering tools, with red arrows pointing to the suspicious bits. An example of one of these screenshots is this one: This screenshot is that of a reverse-engineering tool (Hopper, I think) that takes code and "disassembles" it. When you dump something into a reverse-engineering tool, it'll make a few assumptions about what it sees. These assumptions are usually wrong. There's a process where the human user looks at the analyzed output, does a "sniff-test" on whether it looks reasonable, and works with the tool until it gets the assumptions correct. That's the red flag above: the researcher has dumped the results of a reverse-engineering tool without recognizing that something is wrong in the analysis. It fails the sniff test. Different researchers will notice different things first. Famed Google researcher Tavis Ormandy points out one flaw. In this post, I describe what jumps out first to me. That would be the 'imul' (multiplication) instruction shown in the blowup below: It's obviously ASCII. In other words, it's a series of bytes. The tool has tried to interpret these bytes as Intel x86 instructions (like 'and', 'insd', 'das', 'imul', etc.). But it's obviously not Intel x86, because those instructions make no sense. That 'imul' instruction is multiplying something by the (hex) number 0x6b657479. That doesn't look like a number -- it looks like four lower-case ASCII letters. ASCII lower-case letters are in the range 0x61 through 0x7A, so it's not the single 4-byte number 0x6b657479 but the 4 individual bytes 6b 65 74 79, which map to the ASCII letters 'k', 'e', 't |

Tool | |||
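
The sniff test described in this excerpt, checking whether an instruction's immediate operand is really a run of lower-case ASCII letters, can be sketched in a few lines. The helper name `immediate_as_ascii` is hypothetical and the check is a rough heuristic, not a feature of Hopper or any other disassembler.

```python
from typing import Optional


def immediate_as_ascii(value: int, width: int = 4) -> Optional[str]:
    """Return the immediate's bytes as text if every byte is a lower-case
    ASCII letter (0x61 through 0x7A), otherwise None."""
    raw = value.to_bytes(width, "big")  # bytes in the order the hex number is written
    if all(0x61 <= b <= 0x7A for b in raw):
        return raw.decode("ascii")
    return None


suspicious = immediate_as_ascii(0x6B657479)  # every byte falls in the letter range
ordinary = immediate_as_ascii(0x00000010)    # a plausible small constant: not text

# x86 stores immediates little-endian, so the bytes sit in the file in the
# reverse of the order in which the disassembler prints the number.
in_file_order = (0x6B657479).to_bytes(4, "little")
```

When an "instruction" decodes this cleanly into letters, the likelier explanation is that the tool is disassembling a string or other data rather than code.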

| 2021-12-07 20:39:22 | Journalists: stop selling NFTs that you don't understand (direct link) | The reason you don't really understand NFTs is because the journalists describing them to you don't understand them, either. We can see that when they attempt to sell an NFT as part of their stories (e.g. AP and NYTimes). They get important details wrong. The latest is Reason.com magazine selling an NFT. As libertarians, you'd think at least they'd get the technical details right. But they didn't. Instead of selling an NFT of the artwork, it's just an NFT of a URL. The URL points to OpenSea, which is known to remove artwork from its site (such as in response to DMCA takedown requests). If you buy that Reason.com NFT, what you'll actually get is a token pointing to: https://api.opensea.io/api/v1/metadata/0x495f947276749Ce646f68AC8c248420045cb7b5e/0x1F907774A05F9CD08975EBF7BF56BB4FF0A4EAF0000000000000060000000001 This is just the metadata, which in turn contains a link to the claimed artwork: https://lh3.googleusercontent.com/8Q2OGcPuODtCxbTmlf3epFGOqbfCbs4fXZ2RcIMnLpRdTaYHgqKArk7uETRdSZmpRAFsNE8KB4sFJx6czKE5cBKB1pa7ovc4wBUdqQ If either OpenSea or Google removes the linked content, then any connection between the NFT and the artwork disappears. It doesn't have to be this way. The correct way to do NFT artwork is to point to a "hash" instead, which uniquely identifies the work regardless of where it's located. That $69 million Beeple piece was done this correct way. It's completely decentralized. If the entire Internet disappeared except for the Ethereum blockchain, that Beeple NFT would still work. This is an analogy for the entire blockchain, cryptocurrency, and Dapp ecosystem: the hype you hear ignores technical details. They promise an entirely decentralized economy controlled by math and code, rather than any human entities. In practice, almost everything cheats, being tied to humans controlling things.
In this case, the "Reason.com NFT artwork" is under control of OpenSea and not the "owner" of the token. Journalists have a problem. NFTs selling for millions of dollars are newsworthy, and it's the journalists' place to report news rather than making judgements, like whether or not it's a scam. But at the same time, journalists are trying to explain things they don't understand. Instead of standing outside the story, simply quoting sources, they insert themselves into the story, becoming advocates rather than reporters. They can no longer be trusted as objective observers. From a fraud perspective, it may not matter that the Reason.com NFT points to a URL instead of the promised artwork. The entire point of the blockchain is caveat emptor in action. Rules are supposed to be governed by code rather than companies, government, or the courts. There is no undoing of a transaction even if courts were to order it, because it's math. But from a journalistic point of view, this is important. They failed at an honest description of what the NFT actually contains. They've involved themselves in the story, creating a conflict of interest. It's now hard for them to point out NFT scams when they themselves have participated in something that, from a certain point of view, could be viewed as a scam. | ||||
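
The difference between a token that stores a URL and one that stores a hash of the work can be illustrated with a generic content hash. This is a minimal sketch using SHA-256 from Python's standard library; it is an assumption chosen for illustration, not the scheme used by the Beeple NFT, OpenSea, or any particular blockchain, and the function names are hypothetical.

```python
import hashlib


def content_id(artwork: bytes) -> str:
    """Identifier derived from the artwork's bytes, not from where it is hosted."""
    return hashlib.sha256(artwork).hexdigest()


def matches_token(artwork: bytes, token_hash: str) -> bool:
    """Anyone holding a copy of the file can check it against the token."""
    return content_id(artwork) == token_hash


original = b"stand-in bytes for the image file"  # placeholder data
token_hash = content_id(original)                # what the token would record

copy_from_another_host = bytes(original)         # same bytes, different location
altered = original + b"!"                        # any change gives a different hash
```

A URL stops identifying anything once the host removes the file, whereas the hash still identifies the work as long as any copy of the bytes survives somewhere.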

| 2021-11-07 20:09:32 | Example: forensicating the Mesa County system image (direct link) | Tina Peters, the election clerk in Mesa County (Colorado), went rogue and dumped disk images of an election computer on the Internet. They are available on the Internet via BitTorrent [Mesa1][Mesa2]. The Colorado Secretary of State is now suing her over the incident. The lawsuit describes the facts of the case, how she entered the building with an accomplice on Sunday, May 23, 2021. I thought I'd do some forensics on the image to get more details. Specifically, I see from the Mesa1 image that she logged on at 4:24pm and was done acquiring the image by 4:30pm, in and (presumably) out in under 7 minutes. In this blogpost, I go into more detail about how to get that information. The image: To download the Mesa1 image, you need a program that can access BitTorrent, such as the Brave web browser or a BitTorrent client like qBittorrent. Either click on the "magnet" link or copy/paste it into the program you'll use to download. It takes a minute to gather all the "metadata" associated with the link, but it'll soon start the download. What you get is a file named EMSSERVER.E01. This is a container file that contains both the raw disk image as well as some forensics metadata, like the date it was collected, the forensics investigator, and so on. This container is in the well-known "EnCase Expert Witness" format. EnCase is a commercial product, but its container format is a quasi-standard in the industry. Some freeware utilities you can use to open this container and view the disk include "FTK Imager", "Autopsy", and on the Linux command line, "ewf-tools". However you access the E01 file, what you most want to look at is the Windows operating-system logs. These are located in the directory C:\Windows\System32\winevt\Logs.
The standard Windows "Event Viewer" application can load these log files to help you view them. When a USB drive is inserted to create the disk image, these event files are updated and written to disk before the image is taken. Thus, we can see in the event files all the events that happen right before the disk image is taken. Disk image acquisition: Here's what the event logs on the Mesa1 image tell us about the acquisition of the disk image itself. The person taking the disk image logged in at 4:24:16pm, directly to the console (not remotely), on their second attempt after first typing an incorrect password. The account used was "emsadmin". Their NTLM password hash is 9e4ec70af42436e5f0abf0a99e908b7a. This is a "role-based" account rather than an individual's account, but I think Tina Peters is the person responsible for the "emsadmin" role. Then, at 4:26:10pm, they connected via USB a Western Digital "easystore™" portable drive that holds 5 terabytes. This was mounted as the F: drive. The program "Access Data FTK Imager 4.2.0.13" was run from the USB drive (F:\FTK Imager\FTK Imager.exe) in order to image the system. The image was taken around 4:30pm, local Mountain Time (10:30pm GMT). It's impossible to say from this image what happened after it was taken. Presumab |

★★★ | |||
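
The timeline in the Mesa County entry above can be checked with a little arithmetic. This is a minimal sketch, assuming Mountain Daylight Time was a fixed UTC-6 offset on May 23, 2021, and treating "around 4:30pm" as exactly 4:30:00pm (an assumption, since the post gives only an approximate time). The variable and function names are illustrative, not taken from any forensics tool.

```python
from datetime import datetime, timedelta, timezone

# Assumption: Mesa County was on Mountain Daylight Time (UTC-6) on May 23, 2021.
MDT = timezone(timedelta(hours=-6), "MDT")

# Times quoted in the post; the 4:30:00pm value is an approximation.
login = datetime(2021, 5, 23, 16, 24, 16, tzinfo=MDT)
usb_attached = datetime(2021, 5, 23, 16, 26, 10, tzinfo=MDT)
image_taken = datetime(2021, 5, 23, 16, 30, 0, tzinfo=MDT)


def to_utc(ts: datetime) -> datetime:
    """Convert a timezone-aware timestamp to UTC (GMT)."""
    return ts.astimezone(timezone.utc)


def elapsed(start: datetime, end: datetime) -> timedelta:
    """Time between two timezone-aware timestamps."""
    return end - start


if __name__ == "__main__":
    events = [("login", login), ("USB attached", usb_attached), ("image taken", image_taken)]
    for label, ts in events:
        print(f"{label:13s} {ts:%H:%M:%S} MDT = {to_utc(ts):%H:%M:%S} GMT")
    print("login to image:", elapsed(login, image_taken))
```

Under these assumptions the login-to-image interval comes out a little under six minutes, consistent with the "under 7 minutes" estimate in the post.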

| 2021-10-31 01:54:29 | Debunking: that Jones Alfa-Trump report (direct link) | The Alfa-Trump conspiracy-theory has gotten a new life. Among the new things is a report done by Democrat operative Daniel Jones [*]. In this blogpost, I debunk that report. If you'll recall, the conspiracy-theory comes from anomalous DNS traffic captured by cybersecurity researchers. In the summer of 2016, while Trump was denying involvement with Russian banks, the Alfa Bank in Russia was doing lookups on the name "mail1.trump-email.com". During this time, additional lookups were also coming from two other organizations with suspicious ties to Trump, Spectrum Health and Heartland Payments. This is certainly suspicious, but people have taken it further. They have crafted a conspiracy-theory to explain the anomaly, namely that these organizations were secretly connecting to a Trump server. We know this explanation to be false. There is no Trump server, no real server at all, and no connections. Instead, the name was created and controlled by Cendyn. The server the name points to is for transmitting bulk email and isn't really configured to accept connections. It's built for outgoing spam, not incoming connections. The Trump Org had no control over the name or the server. As Cendyn explains, the contract with the Trump Org ended in March 2016, after which they re-used the IP address for other marketing programs, but since they hadn't changed the DNS settings, this caused lookups of the DNS name. This still doesn't answer why Alfa, Spectrum, Heartland, and nobody else were doing the lookups. That's still a question. But the answer isn't secret connections to a Trump server. The evidence is pretty solid on that point. Daniel Jones and Democracy Integrity Project: The report is from Daniel Jones and his Democracy Integrity Project. It's at this point that things get squirrely. All sorts of right-wing sites claim he's a front for George Soros, funds Fusion GPS, and is involved in the Steele Dossier. 
That's right-wing conspiracy theory nonsense. But at the same time, he's clearly not an independent and objective analyst. He was hired to further the interests of Democrats. If the data and analysis held up, then partisan ties wouldn't matter. But they don't hold up. Jones is clearly trying to be deceptive. The deception starts by repeatedly referring to the "Trump server". There is no Trump server. There is a Listrak server operated on behalf of Cendyn. Whether the Trump Org had any control over the name or the server is a key question the report should be trying to prove, not a premise. The report clearly understands this fact, so this can't be considered a mere mistake; it's a deliberate deception. People make assumptions that a domain name like "trump-email.com" would be controlled by the Trump organization. It wasn't. When Trump Hotels hired Cendyn to do marketing for them, Cendyn did what they normally do in such cases: register a domain with their client's name for the sending of bulk emails. They did the same thing with hyatt-email.com, denihan-email.com, mjh-email.com, and so on. What's clear is that the Trump organization had no control over, and no direct ties to, this domain until after the conspiracy-theory hit the press. Finding #1 - Alfa Bank, Spectrum Health, and Heartland account for nearly all of the DNS lookups for mail1.trump-email.com in the May-September timeframe. Yup, that's weird and unexplained. But it concludes from this that there were connections, saying the following: In the DNS environment, if "computer X" does a DNS look-up of "Computer Y," it means that "Computer X" is trying to connect to "Computer Y". This is false. That's certain | ||||
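
The distinction the entry above draws between a DNS lookup and a connection can be sketched in code. This is a minimal illustration rather than anything from the report or the post: the function names `lookup` and `connect` are made up here, and `localhost` is used only so the sketch runs without Internet access. Resolving a name and opening a connection are separate operations, and a program can do the first without ever doing the second.

```python
import socket


def lookup(name: str) -> list[str]:
    """Resolve a name to its addresses. No connection to the target is made."""
    infos = socket.getaddrinfo(name, None, proto=socket.IPPROTO_TCP)
    # Each entry is (family, type, proto, canonname, sockaddr);
    # sockaddr[0] is the textual address.
    return sorted({info[4][0] for info in infos})


def connect(address: str, port: int, timeout: float = 3.0) -> socket.socket:
    """Open a TCP connection. This is a separate step that a lookup never triggers."""
    return socket.create_connection((address, port), timeout=timeout)


if __name__ == "__main__":
    # Only the lookup is performed here; connect() is never called.
    print(lookup("localhost"))
```

Software that resolves names it finds in logs or message headers would show up in DNS data exactly like `lookup` above, with no connection attempt behind it.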

| 2021-10-24 19:46:46 | Review: Dune (2021) (direct link) | One of the most important classic sci-fi stories is the book "Dune" by Frank Herbert. It was recently made into a movie. I thought I'd write a quick review. The summary is this: just read the book. It's a classic for a good reason, and you'll be missing a lot by not reading it. But the movie Dune (2021) is very good. The most important thing to know is to see it in IMAX. IMAX is a huge-screen technology that partly wraps around the viewer, accompanied by huge speakers that overwhelm you with sound. If you watch it in some other format, what was visually stunning becomes merely very pretty. This is Villeneuve's trademark, which you can see in his other works, like his sequel to Bladerunner. The purpose is to marvel at the visuals in every scene. The storytelling is just enough to hold the visuals together. I mean, he also seems to do a good job with the storytelling, but it's just not the reason to go see the movie. (I can't tell -- I've read the book, so I see the story differently than those of you who haven't.) Beyond the story and visuals, many of the actors' performances were phenomenal. Javier Bardem's "Stilgar" character steals his scenes. Stellan Skarsgård exudes evil. The two character actors playing the mentats were each perfect. I found the lead character (Timothée Chalamet) a bit annoying, but simply because he is at this point in the story. Villeneuve splits the book into two parts. This movie is only the first part. This presents a problem, because up until this point, the main character is just responding to events, not yet the hero who drives the events. It doesn't fit into the traditional Hollywood accounting model. I really want to see the second film even if the first part, released in the post-pandemic turmoil of the movie industry, doesn't perform well at the box office. In short, if you haven't read the books, I'm not sure how well you'll follow the storytelling. 
But the visuals (seen at IMAX scale) and the characters are so great that I'm pretty sure most people will enjoy the movie. And go see it on IMAX in order to get the second movie made!! | Guideline | |||

| 2021-10-13 23:33:07 | Fact check: that "forensics" of the Mesa image is crazy (direct link) | Tina Peters, the elections clerk from Mesa County (Colorado), went rogue, creating a "disk-image" of the election server and posting that image to the public Internet. Conspiracy theorists have been analyzing the disk-image trying to find anomalies supporting their conspiracy-theories. A recent example is this "forensics" report. In this blogpost, I debunk that report. I suppose calling somebody a "conspiracy theorist" is insulting, but there are three objective ways we can identify them as such. The first is when they use the logic "everything we can't explain is proof of the conspiracy". In other words, since there's no other rational explanation, the only remaining explanation is the conspiracy-theory. But there can be other possible explanations -- just ones unknown to the person because they aren't smart enough to understand them. We see that here: the person writing this report doesn't understand some basic concepts, like "airgapped" networks. This leads to the second way to recognize a conspiracy-theory: when it demands this one thing that'll clear things up. Here, it's demanding that a manual audit/recount of Mesa County be performed. But it won't satisfy them. The Maricopa audit in neighboring Arizona, whose recount found no fraud, didn't clear anything up -- it just found more anomalies demanding more explanation. It's like Obama's birth certificate. The reason he ignored demands to show it was that first, there was no serious question (even if born in Kenya, he'd still be a natural born citizen -- just like how Cruz was born in Canada and McCain in Panama), and second, showing the birth certificate wouldn't change anything at all, as they'd just claim it was fake. There is no possibility of showing a birth certificate that can be proven not to be fake. The third way to objectively identify a conspiracy theory is when they repeat objectively crazy things. 
In this case, they keep demanding that the 2020 election be "decertified". That's not a thing. There is no regulation or law where that can happen. The most you can hope for is to use this information to prosecute the fraudster, prosecute the elections clerk who didn't follow procedure, or convince legislators to change the rules for the next election. But there's just no way to change the results of the last election even if widespread fraud is now proven. The document makes 6 individual claims. Let's debunk them one-by-one. #1 Data Integrity Violation: The report tracks some logs on how some votes were counted. It concludes: "If the reasons behind these findings cannot be adequately explained, then the county's election results are indeterminate and must be decertified." This neatly demonstrates two conditions I cited above. The analyst can't explain the anomaly not because something bad happened, but because they don't understand how Dominion's voting software works. This demand for an explanation is a common attribute of conspiracy theories -- the ignorant keep finding things they don't understand and demand somebody else explain them. Secondly, there's the claim that the election results must be "decertified". It's something that Trump and his supporters believe is a thing, that somehow the courts will overturn the past election and reinstate Trump. This isn't a rational claim. It's not how the courts, the law, or the Constitution work. #2 Intentional purging of Log Files: This is the issue that convinced Tina Peters to go rogue, that the normal Dominion software update gets rid of all the old system-log files. She leaked two disk-images, before and after the update, to show the disappearance of system-logs. She believes this violates the law demanding the "election records" be preserved. She claims because o | Guideline | |||

| 2021-10-10 20:35:49 | 100 terabyte home NAS (direct link) | So, as a nerd, let's say you need 100 terabytes of home storage. What do you do? My solution would be a commercial NAS RAID, like from Synology, QNAP, or Asustor. I'm a nerd, and I have set up my own Linux systems with RAID, but I'd rather get a commercial product. When a disk fails, and a disk will always eventually fail, then I want something that will loudly beep at me and make it easy to replace the drive and repair the RAID. Some choices you have are: vendor (Synology, QNAP, and Asustor are the vendors I know and trust the most); number of bays (you want 8 to 12); redundancy (you want at least 2 if not 3 disks); filesystem (btrfs or ZFS) [not btrfs-raid builtin, but btrfs on top of RAID]; drives (NAS optimized between $20/tb and $30/tb); networking (at least 2-gbps bonded, but box probably can't use all of 10gbps); backup (big external USB drives). The products I link above all have at least 8 drive bays. When you google "NAS", you'll get a list of smaller products. You don't want them. You want somewhere between 8 and 12 drives. The reason is that you want two-drive redundancy like RAID6 or RAIDZ2, meaning two additional drives. Everyone tells you one-disk redundancy (like RAID5) is enough, but they are wrong. It's just legacy thinking, because it was sufficient in the past when drives were small. Disks are so big nowadays that you really need two-drive redundancy. If you have a 4-bay unit, then half the drives are used for redundancy. If you have a 12-bay unit, then only 2 out of the 12 drives are being used for redundancy. The next decision is the filesystem. There are only two choices, btrfs and ZFS. The reason is that they both support healing and snapshots. Note btrfs means btrfs-on-RAID6, not btrfs-RAID, which is broken. In other words, btrfs contains its own RAID feature that you don't want to use. Over long periods of time, errors creep into the file system. You want to scrub the data occasionally. 
This means reading the entire filesystem, checksumming the files, and repairing them if there's a problem. That requires a filesystem that checksums each block of data. Another thing you want is snapshots to guard against things like ransomware. This means you mark the files you want to keep, and even if a workstation attempts to change or delete the file, it'll still be held on the disk. QNAP uses ZFS while others like Synology and Asustor use btrfs. I really don't know which is better. It's cheaper to buy the NAS diskless then add your own disk drives. If you can't do this, then you'll be helpless when a drive fails and needs to be replaced. Drives cost between $20/tb and $30/tb right now. This recent article has a good buying guide. You probably want to get a NAS optimized hard drive. You probably want to double-check that it's CMR instead of SMR -- SMR is "shingled" vs. "conventional" magnetic recording. SMR is bad. There are only three hard drive makers (Seagate, Western Digital, and Toshiba), so there's not a big selection. Working with such large data sets over 1-gbps is painful. These units allow 802.3ad link aggregation as well as faster Ethernet. Some have 10gbe built-in, others allow a PCIe adapter to be plugged in. However, due to the overhead of spinning disks, you are unlikely to get 10gbps speeds. I mention this because 10gbps copper Ethernet sucks, so is not necessarily a buying criterion. You may prefer multigig/NBASE-T that only does 5gbps with relaxed cabling requirements and lower power consumption. This means that your NAS decision is going to be made with your home networki | ||||
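
The redundancy and cost arithmetic in the NAS entry above can be worked through in a few lines. This is a rough sketch under stated assumptions: two parity drives (RAID6/RAIDZ2 style), a hypothetical 12 TB drive size chosen only for illustration, and no allowance for filesystem overhead or the TB versus TiB difference. The function names are made up here.

```python
import math


def usable_fraction(bays: int, parity: int = 2) -> float:
    """Fraction of drives that hold data in a RAID6/RAIDZ2-style array."""
    if bays <= parity:
        raise ValueError("need more bays than parity drives")
    return (bays - parity) / bays


def drives_needed(target_tb: float, drive_tb: float, parity: int = 2) -> int:
    """Total drives (data plus parity) needed for target_tb of usable space."""
    return math.ceil(target_tb / drive_tb) + parity


def drive_cost(drives: int, drive_tb: float, usd_per_tb: float) -> float:
    """Raw drive cost at a given price per terabyte."""
    return drives * drive_tb * usd_per_tb


if __name__ == "__main__":
    for bays in (4, 8, 12):
        print(f"{bays:2d} bays: {usable_fraction(bays):.0%} of drives hold data")

    # Hypothetical 12 TB drives, 100 TB usable target.
    n = drives_needed(100, 12)
    low, high = drive_cost(n, 12, 20), drive_cost(n, 12, 30)
    print(f"{n} drives, roughly ${low:,.0f} to ${high:,.0f}")
```

With these assumed numbers the 100 TB target needs 11 drives, which fits a 12-bay unit but not an 8-bay one, and that is the practical reason the bay count matters.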

| 2021-09-24 03:51:21 | Check: that Republican audit of Maricopa (direct link) | Author: Robert Graham (@erratarob). Later today (Friday, September 24, 2021), Republican auditors release their final report on what they found with elections in Maricopa county. Draft copies have circulated online. In this blogpost, I write up my comments on the cybersecurity portions of their draft. https://arizonaagenda.substack.com/p/we-got-the-senate-audit-report The three main problems are: (1) They misapply cybersecurity principles that are meaningful for normal networks, but which don't really apply to the air gapped networks we see here. (2) They make some errors about technology, especially networking. (3) They are overstretching themselves to find dirt, claiming the things they don't understand are evidence of something bad. In the parts below, I pick apart individual pieces from that document to demonstrate these criticisms. I focus on section 7, the cybersecurity section, and ignore the other parts of the document, where others are more qualified than I to opine. In short, when corrected, section 7 is nearly empty of any content. 7.5.2.1.1 Software and Patch Management, part 1: They claim Dominion is defective at one of the best-known cyber-security issues: applying patches. It's not true. The systems are “air gapped”, disconnected from the typical sort of threat that exploits unpatched systems. The primary security of the system is physical. This is standard in other industries with hard reliability constraints, like industrial or medical. Patches in those systems can destabilize systems and kill people, so these industries are risk averse. They prefer to mitigate the threat in other ways, such as with firewalls and air gaps. Yes, this approach is controversial. There are some in the cybersecurity community who use lack of patches as a bludgeon with which to bully any who don't apply every patch immediately. But this is because patching is more a political issue than a technical one. 
In the real, non-political world we live in, most things don't get immediately patched all the time. 7.5.2.1.1 Software and Patch Management, part 2: They claim new software executables were applied to the system, despite the rules against new software being applied. This isn't necessarily true. There are many reasons why Windows may create new software executables even when no new software is added. One reason is “Features on Demand” or FOD. You'll see new executables appear in C:\Windows\WinSxS for these. Another reason is .NET, which causes binary x86 executables to be created from bytecode. You'll see this in the C:\Windows\assembly directory. The auditors simply counted the number of new executables, with no indication which category they fell in. Maybe they are right, maybe new software was installed or old software updated. It's just that their mere counting of executable files doesn't show understanding of these differences. 7.5.2.1.2 Log Management: The auditors claim that a central log management system should be used. This obviously wouldn't apply to “air gapped” systems, because it would need a connection to an external network. Dominion already designates their EMSERVER as the central log repository for their little air gapped network. Important files from C: are copied to D:, a RAID10 drive. This is a perfectly adequate solution; adding yet another computer to their little network would be overkill, and add as many security problems as it solved. One could argue more Windows logs need to be preserved, but that would simply mean archiving them from the C: drive onto the D: drive, not that you need to connect to the Internet to centrally log files. 7.5.2.1.3 Credential Management: Like the other sections, this claim is out of place | Threat Patching | |||
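
The point in the entry above about counting executables without categorizing them can be illustrated with a short sketch. It groups file paths by the two directories named in the post and flags everything else for a closer look. The labels, the function name `classify`, and the sample paths are all hypothetical; a real review would need far more categories than these two.

```python
from collections import Counter
from pathlib import PureWindowsPath

# Directories named in the post, mapped to the likely (not certain) origin of
# new executables found there. The label text is illustrative.
KNOWN_SOURCES = {
    PureWindowsPath(r"C:\Windows\WinSxS"): "Features on Demand (Windows-generated)",
    PureWindowsPath(r"C:\Windows\assembly"): ".NET compiled from bytecode",
}


def classify(path: str) -> str:
    """Label an executable by where it lives instead of just counting it."""
    p = PureWindowsPath(path)
    for root, label in KNOWN_SOURCES.items():
        if p.is_relative_to(root):
            return label
    return "unexplained (needs a closer look)"


if __name__ == "__main__":
    # Hypothetical file names, for illustration only.
    new_files = [
        r"C:\Windows\WinSxS\amd64_example\component.exe",
        r"C:\Windows\assembly\NativeImages_example\library.ni.dll",
        r"C:\Program Files\Example\tool.exe",
    ]
    for label, count in Counter(classify(f) for f in new_files).items():
        print(f"{count:3d}  {label}")
```

A breakdown like this is what separates "new executables appeared" from "new software was installed", which a raw count cannot do.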

| 2021-09-21 18:01:25 | That Alfa-Trump Sussman indictment (direct link) | Five years ago, online magazine Slate broke a story about how DNS packets showed secret communications between Alfa Bank in Russia and the Trump Organization, proving a link that Trump denied. I was the only prominent tech expert that debunked this as just a conspiracy-theory[*][*][*]. Last week, I was vindicated by the indictment of a lawyer involved, a Michael Sussman. It tells a story of where this data came from, and some problems with it. But we should first avoid reading too much into this indictment. It cherry picks data supporting its argument while excluding anything that disagrees with it. We see chat messages expressing doubt in the DNS data. If chat messages existed expressing confidence in the data, we wouldn't see them in the indictment. In addition, the indictment tries to make strong ties to the Hillary campaign and the Steele Dossier, but ultimately, it's weak. It looks to me like an outsider trying to ingratiate themselves with the Hillary campaign rather than being part of a grand Clinton-led conspiracy against Trump. With these caveats, we do see some important things about where the data came from. We see how Tech-Executive-1 used his position at cyber-security companies to search private data (namely, private DNS logs) for anything that might link Trump to somebody nefarious, including Russian banks. In other words, a link between Trump and Alfa bank wasn't something they accidentally found; it was one of the many thousands of links they looked for. Such a technique has been long known as a problem in science. If you cast the net wide enough, you are sure to find things that would otherwise be statistically unlikely. 
In other words, if you do hundreds of tests of hydroxychloroquine or ivermectin on Covid-19, you are sure to find results that are so statistically unlikely that they wouldn't happen more than 1% of the time. If you search world-wide DNS logs, you are certain to find weird anomalies that you can't explain. Unexplained computer anomalies happen all the time, as every user of computers can tell you. We've seen from the start that the data was highly manipulated. It's likely that the data is real, that the DNS requests actually happened, but at the same time, it's been stripped of everything that might cast doubt on the data. In this indictment we see why: before the data was found the purpose was to smear Trump. The finders of the data don't want people to come to the best explanation, they want only explanations that hurt Trump. Trump had no control over the domain in question, trump-email.com. Instead, it was created by a hotel marketing firm they hired, Cendyn. It's Cendyn who put Trump's name in the domain. A broader collection of DNS information including Cendyn's other clients would show whether this was normal or not. In other words, a possible explanation of the data, hints of a Trump-Alfa connection, has always been the dishonesty of those who collected the data. The above indictment confirms they were at this level of dishonesty. It doesn't mean the DNS requests didn't happen, but that their anomalous nature can be created by deletion of explanatory data. Lastly, we see in this indictment the problem with "experts". | Guideline | |||
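
The "cast the net wide enough" argument in the entry above has a simple formula behind it. This is a minimal sketch, assuming the tests are independent of each other (real searches through DNS logs or drug trials rarely are, so treat the numbers as illustrative). The function name `chance_of_fluke` is made up here.

```python
def chance_of_fluke(tests: int, alpha: float = 0.01) -> float:
    """Probability that at least one of `tests` independent tests produces a
    result that pure chance would produce with probability `alpha`."""
    if tests < 0 or not 0.0 <= alpha <= 1.0:
        raise ValueError("tests must be >= 0 and alpha must be in [0, 1]")
    # P(no fluke in any test) = (1 - alpha) ** tests
    return 1.0 - (1.0 - alpha) ** tests


if __name__ == "__main__":
    for n in (1, 10, 100, 300, 1000):
        print(f"{n:5d} tests: {chance_of_fluke(n):.1%} chance of a '1% unlikely' result")
```

Under the independence assumption, a hundred tests already make a "1% unlikely" result more likely than not, and a few hundred make it close to certain.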

| 2021-09-14 23:17:40 | How not to get caught in law-enforcement geofence requests (direct link) | I thought I'd write up a response to this question from well-known 4th Amendment and CFAA lawyer Orin Kerr: Question for tech people related to "geofence" warrants served on Google: How easy is it for a cell phone user, either of an Android or an iPhone, to stop Google from generating the detailed location info needed to be responsive to a geofence warrant? What do you need to do? - Orin Kerr (@OrinKerr) September 15, 2021. (FWIW, I'm seeking info from people who actually know the answer based on their expertise, not from those who are just guessing, or are who are now googling around to figure out what the answer may be,) - Orin Kerr (@OrinKerr) September 15, 2021. First, let me address the second part of his tweet, whether I'm technically qualified to answer this. I'm not sure, I have only 80% confidence that I am. Hence, I'm writing this answer as a blogpost hoping people will correct me if I'm wrong. There is a simple answer and it's this: just disable "Location" tracking in the settings on the phone. Both iPhone and Android have a one-click button to tap that disables everything. The trick is knowing which thing to disable. On the iPhone it's called "Location Services". On the Android, it's simply called "Location". If you do start googling around for answers, you'll find articles from people upset that Google is still tracking them. That's because they disabled "Location History" and not "Location". This left "Location Services" and "Web and App Activity" still tracking them. Disabling "Location" on the phone disables all these things [*]. It's that simple: one click and done, and Google won't be able to report your location in a geofence request. I'm pretty confident in this answer, despite what your googling around will tell you about Google's pernicious ways. But I'm only 80% confident in my answer. 
Technology is complex and constantly changing. Note that the answer is very different for mobile phone companies, like AT&T or T-Mobile. They have their own ways of knowing about your phone's location independent of whatever Google or Apple do on the phone itself. Because of modern 4G/LTE, cell towers must estimate both your direction and distance from the tower. I've confirmed that they can know your location to within 50 feet. There are limitations to this, it depends upon whether you are simply in range of the tower or have an active phone call in progress. Thus, I think law enforcement prefers asking Google. Another example is how my car uses Google Maps all the time, and doesn't have privacy settings. I don't know what it reports to Google. So when I rob a bank, my phone won't betray me, but my car will. |

||||

| 2021-07-26 20:52:15 | Of course you can't trust scientists on politics (direct link) | Many people make the same claim as this tweet. It's obviously wrong. Yes, the right-wing has a problem with science, but this isn't it. If you think you don't trust scientists, you're mistaken. You trust scientists in a million different ways every time you step on a plane, or for that matter turn on your tap or open a can of beans. The fact that you're unaware of this doesn't mean it's not so. - Paul Graham (@paulg) July 26, 2021. First of all, people trust airplanes because of their long track record of safety, not because of any claims made by scientists. Secondly, people distrust "scientists" when politics is involved because of course scientists are human and can get corrupted by their political (or religious) beliefs. And thirdly, the concept of "trusting scientific authority" is wrong, since the bedrock principle of science is distrusting authority. What defines science is how often prevailing scientific beliefs are challenged. Carl Sagan has many quotes along these lines that eloquently express this: A central lesson of science is that to understand complex issues (or even simple ones), we must try to free our minds of dogma and to guarantee the freedom to publish, to contradict, and to experiment. Arguments from authority are unacceptable. If you are "arguing from authority", like Paul Graham is doing above, then you are fundamentally misunderstanding both the principles of science and its history. We know where this controversy comes from: politics. The above tweet isn't complaining about the $400 billion U.S. market for alternative medicines, a largely non-political example. It's complaining about political issues like vaccines, global warming, and evolution. The reason those on the right-wing resist these things isn't because they are inherently anti-science, it's because the left-wing is. The left has corrupted and politicized these topics. 
The "Green New Deal" contains very little that is "Green" and much that is "New Deal", for example. The left goes from the fact "carbon dioxide absorbs infrared" to justify "we need to promote labor unions". Take Marjorie Taylor Greene's (MTG) claim that she doesn't believe in the Delta variant because she doesn't believe in evolution. Her argument is laughably stupid, of course, but it starts with the way the left has politicized the term "evolution". The "Delta" variant didn't arise from "evolution", it arose because of "mutation" and "natural selection". We know the "mutation" bit is true, because we can sequence the complete genome and detect that changes happen. We know that "selection" happens, because we see some variants overtake others in how fast they spread. Yes, "evolution" is synonymous with mutation plus selection, but it's also a politically loaded term that means a lot of additional things. The public doesn't understand mutation and natural-selection, because these concepts are not really taught in school. Schools don't teach students to understand these things, they teach students to believe. The focus of science education in school is indoctrinating students into believing in "evolution" rather than teaching the mechanisms of "mutation" and "natural-selection". We see the conflict in things like describing the evolution of the eyeball, which Creationists "reasonably" believe is too complex to have evolved this way. I put "reasonably" in quotes here because it's just the "God of the gaps" argument, which credits God for everything that science can't explain, which isn't very smart. But at the same time, science textbooks go too far, refusing to admit their gaps in knowledge here. The fossil record shows a lot of complexity arising over time through steady change -- it just doesn't show anything about eyeballs. In other words, it's | ||||

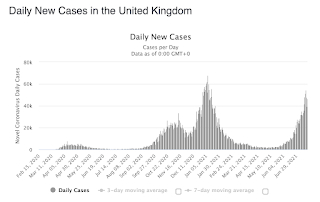

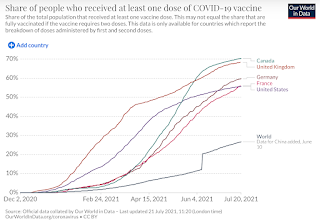

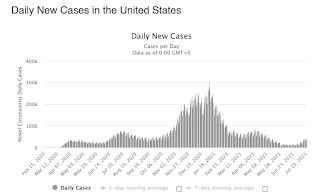

| 2021-07-21 18:11:58 | Risk analysis for DEF CON 2021 (direct link) | It's the second year of the pandemic and the DEF CON hacker conference wasn't canceled. However, the Delta variant is spreading. I thought I'd do a little bit of risk analysis. TL;DR: I'm not canceling my ticket, but I am changing my plans for what I do in Vegas during the convention. First, a note about risk analysis. For many people, "risk" means something to avoid. They work in a binary world, labeling things as either "risky" (to be avoided) or "not risky". But real risk analysis is about shades of gray, trying to quantify things. The Delta variant is a mutation out of India that, at the moment, is particularly affecting the UK. Cases are nearly up to their pre-vaccination peaks in that country. Note that the UK has already vaccinated nearly 70% of their population -- more than the United States. In both the UK and US there are few preventive measures in place (no lockdowns, no masks) other than vaccines. Thus, the UK graph is somewhat predictive of what will happen in the United States. If we time things from when the latest wave hit the same levels as the peak of the first wave, then it looks like the USA is only about 1.5 months behind the UK. It's another interesting lesson about risk analysis. Most people experience these things as sudden changes. One moment, everything seems fine, and cases are decreasing. The next moment, we are experiencing a major new wave of infections. It's especially jarring when the thing we are tracking is exponential. But we can compare the curves and see that things are totally predictable. In about another 1.5 months, the US will experience a wave that looks similar to the UK wave. Sometimes the problem is that the change is inconceivable. We saw that recently with 1-in-100 year floods in Germany. Weather forecasters predicted 1-in-100 level of floods days in advance, but they still surprised many people. |

||||

| 2021-07-14 20:49:05 | Ransomware: Quis custodiet ipsos custodes (direct link) | Many claim that "ransomware" is due to cybersecurity failures. It's not really true. We are adequately protecting users and computers. The failure is in the inability of cybersecurity guardians to protect themselves. Ransomware doesn't make the news when it only accesses the files normal users have access to. The big ransomware news events happened because ransomware elevated itself to that of an "administrator" over the network, giving it access to all files, including online backups. Generic improvements in cybersecurity will help only a little, because they don't specifically address this problem. Likewise, blaming ransomware on how it breached perimeter defenses (phishing, patches, password reuse) will only produce marginal improvements. Ransomware solutions need to instead focus on looking at the typical human-operated ransomware killchain, identifying how attackers typically achieve "administrator" credentials, and fixing those problems. In particular, large organizations need to redesign how they handle Windows "domains" and "segment" networks. I read a lot of lazy op-eds on ransomware. Most of them claim that the problem is due to some sort of moral weakness (laziness, stupidity, greed, slovenliness, lust). They suggest things like "taking cybersecurity more seriously" or "do better at basic cyber hygiene". These are "unfalsifiable" -- things that nobody would disagree with, meaning they are things the speaker doesn't really have to defend. They don't rest upon technical authority but moral authority: anybody, regardless of technical qualifications, can have an opinion on ransomware as long as they phrase it in such terms. Another flaw of these "unfalsifiable" solutions is that they are not measurable. 
There's no standard definition for "best practices" or "basic cyber hygiene", so there's no way to tell if you aren't already doing such things, or the gap you need to overcome to reach this standard. Worse, some people point to the "NIST Cybersecurity Framework" as the "basics" -- but that's a framework for all cybersecurity practices. In other words, anything short of doing everything possible is considered a failure to follow the basics. In this post, I try to focus on specifics, while at the same time, making sure things are broadly applicable. It's detailed enough that people will disagree with my solutions. The thesis of this blogpost is that we are failing to protect "administrative" accounts. The big ransomware attacks happen because the hackers got administrative control over the network, usually the Windows domain admin. It's with administrative control that they are able to cause such devastation, able to reach all the files in the network, while also being able to delete backups. The Kaseya attacks highlight this particularly well. The company produces a product that is in turn used by "Managed Service Providers" (MSPs) to administer the security of small and medium sized businesses. Hackers found and exploited a vulnerability in the product, which gave them administrative control of over 1000 small and medium sized businesses around the world. The underlying problems start with the way their software gives indiscriminate administrative access over computers. Then, this software was written using standard software techniques, meaning, with the standard vulnerabilities that most software has (such as "SQL injection"). It wasn't written in the paranoid, careful way that you'd hope for software that poses this much danger. A good analogy is airplanes. A common joke about the "black box" flight-recorders that survive airplane crashes is that maybe we should make the entire airplane out of that material. 
The reason we can't do this is that airplanes would be too heavy to fly. The same is true of software: airplane software is written with extreme paranoia knowing that bugs can l | Ransomware Vulnerability Guideline | |||

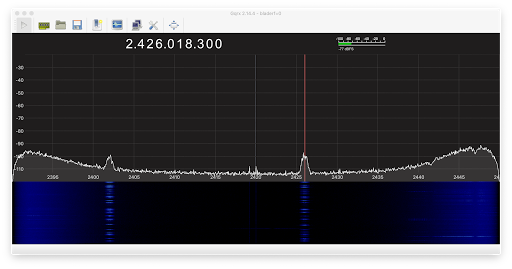

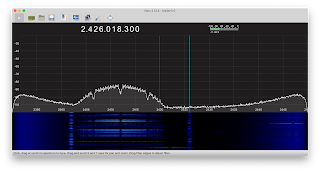

| 2021-07-05 17:15:28 | Some quick notes on SDR (direct link) | I'm trying to create perfect screen captures of SDR to explain the world of radio around us. In this blogpost, I'm going to discuss some of the imperfect captures I'm getting, specifically, some notes about WiFi and Bluetooth. An SDR is a "software defined radio" which digitally samples radio waves and uses number crunching to decode the signal into data. Among the simplest things an SDR can do is look at a chunk of spectrum and see signal strength. This is shown below, where I'm monitoring part of the famous 2.4 GHz spectrum used by WiFi/Bluetooth/microwave-ovens: There are two panes. The top shows the current signal strength as a graph. The bottom pane is the "waterfall" graph showing signal strength over time, displaying strength as colors: black means almost no signal, blue means some, and yellow means a strong signal. The signal strength graph is a bowl shape, because we are actually sampling at a specific frequency of 2.42 GHz, and the further away from this "center", the less accurate the analysis. Thus, the algorithms think there is more signal the further away from the center we are. What we do see here is two peaks, at 2.402 GHz toward the left and 2.426 GHz toward the right (which I've marked with the red line). These are the "Bluetooth beacon" channels. I was able to capture the screen at the moment some packets were sent, showing signal at this point. Below in the waterfall chart, we see packets constantly being sent at these frequencies. We are surrounded by devices giving off packets here: our phones, our watches, "tags" attached to devices, televisions, remote controls, speakers, computers, and so on. This is a picture from my home, showing only my devices and perhaps my neighbors. In a crowded area, these two bands are saturated with traffic. The 2.4 GHz region also includes WiFi. So I connected to a WiFi access-point to watch the signal. WiFi uses more bandwidth than Bluetooth. The term "bandwidth" is used today to mean "faster speeds", but it comes from the world of radio where it quite literally means the width of the band. The width of the Bluetooth transmissions seen above is 2 MHz, the width of the WiFi band shown here is 20 MHz. It took about 50 screenshots before getting these two. I had to hit the "capture" button right at the moment things were being transmitted. An easier way is a setting that graphs the current signal strength compared to the maximum recently seen as a separate line. That's shown below: the instant it was taken, there was no signal, but it shows the maximum of recent signals as a separate line: |
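
A minimal sketch of the "maximum recently seen" trace described in the SDR notes above, in Python. It assumes each spectrum frame is a list of per-bin signal strengths in dB; the `MaxHold` class, the window size, and the sample values are hypothetical and not taken from any particular SDR program.

```python
from collections import deque


class MaxHold:
    """Track the per-bin maximum over the most recent spectrum frames."""

    def __init__(self, window):
        # Only the last `window` frames count as "recent".
        self.recent = deque(maxlen=window)

    def update(self, frame):
        """Add one frame and return the max-hold trace for the window."""
        self.recent.append(list(frame))
        # zip(*frames) groups the values of each frequency bin together.
        return [max(bin_values) for bin_values in zip(*self.recent)]


# Hypothetical strengths (dB) for five frequency bins over four frames.
frames = [
    [-90, -88, -91, -89, -90],  # quiet
    [-90, -45, -91, -89, -90],  # short burst in bin 1
    [-90, -88, -91, -50, -90],  # short burst in bin 3
    [-90, -88, -91, -89, -90],  # quiet again at the instant of capture
]

hold = MaxHold(window=3)
for frame in frames:
    peak = hold.update(frame)

print("current:", frames[-1])
print("max hold:", peak)
```

The current frame shows no signal, while the max-hold trace still shows both bursts, which is why a single screenshot with this setting can replace dozens of carefully timed ones.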


| 2021-06-20 20:34:42 | When we'll get a 128-bit CPU (direct link) | On Hacker News, this article claiming "You won't live to see a 128-bit CPU" is trending. Sadly, it was non-technical, so didn't really contain anything useful. I thought I'd write up some technical notes. The issue isn't the CPU, but memory. It's not about the size of computations, but when CPUs will need more than 64-bits to address all the memory future computers will have. It's a simple question of math and Moore's Law. Today, Intel's server CPUs support 48-bit addresses, which is enough to address 256-terabytes of memory -- in theory. In practice, Amazon's AWS cloud servers are offered with up to 24-terabytes, or 45-bit addresses, in the year 2020. Doing the math, it means we have 19-bits or 38-years left before we exceed the 64-bit registers in modern processors. This means that by the year 2058, we'll exceed the current address size and need to move to 128-bits. Most people reading this blogpost will be alive to see that, though probably retired. There are lots of reasons to suspect that this event could come either sooner or later. It could come sooner if storage merges with memory. We are moving away from rotating platters of rust toward solid-state storage like flash. There are post-flash technologies like Intel's Optane that promise storage that can be accessed at speeds close to that of memory. We already have machines needing petabytes (at least 50-bits worth) of storage. Addresses often contain more than just the memory address, but also some sort of description about the memory. For many applications, 56-bits is the maximum, as they use the remaining 8-bits for tags. Combining those two points, we may be only 12 years away from people starting to argue for 128-bit registers in the CPU. Or, it could come later because few applications need more than 64-bits, other than databases and file-systems. Previous transitions were delayed for this reason, as the x86 history shows. 
The first Intel CPUs were 16-bits addressing 20-bits of memory, and the Pentium Pro was 32-bits addressing 36-bits worth of memory. The few applications that needed the extra memory could deal with the pain of needing to use multiple numbers for addressing. Databases used Intel's address extensions, almost nobody else did. It took 20 years, from the initial release of the MIPS R4000 in 1990 to Intel's average desktop processor shipped in 2010, for mainstream apps to need larger addresses. For the transition beyond 64-bits, it'll likely take even longer, and might never happen. Working with large datasets needing more than 64-bit addresses will be such a specialized discipline that it'll happen behind libraries or operating-systems anyway. So let's look at the internal cost of larger registers, if we expand registers to hold larger addresses. We already have 512-bit CPUs -- with registers that large. My laptop uses one. It supports AVX-512, a form of "SIMD" that packs multiple small numbers in one big register, so that it can perform identical computations on many numbers at once, in parallel, rather than sequentially. Indeed, even very low-end processors have been 128-bit for a long time -- for "SIMD". In other words, we can have a large register file with wide registers, and handle the bandwidth of shipping those registers around the CPU performing computations on them. Today's processors already handle this for certain types of computations. But just because we can do many 64-bit computations at once ("SIMD") still doesn't mean we can do a 128-bit computation ("scalar"). Simple problems like "carry" get difficult as numbers get larger. Just because SIMD can do multiple small computations doesn't tell us what one large computation will cost. This was why it took an extra decade for Intel to make the transition -- they added 64-bit MMX registers for SIMD a decade before they added 64-bit for normal computations. The abo | ||||
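
The address-size arithmetic in the 128-bit CPU post can be checked with a short Python sketch. It assumes, as the post's own figures imply (19 bits in 38 years), that memory doubles about every two years, so each extra address bit takes two years. It also treats a terabyte as 2^40 bytes. The function names are illustrative.

```python
TIB = 2 ** 40          # assumption: "terabyte" means 2**40 bytes
YEARS_PER_BIT = 2      # assumption: memory doubles about every two years


def address_bits(num_bytes):
    """Smallest number of address bits able to index num_bytes bytes."""
    return max(1, (num_bytes - 1).bit_length())


def years_between(bits_now, bits_limit):
    """Years until bits_limit is reached at one bit per YEARS_PER_BIT."""
    return (bits_limit - bits_now) * YEARS_PER_BIT


# 48-bit addresses cover 256 terabytes.
print(2 ** 48 // TIB)

# A 24-terabyte server needs 45 address bits.
bits_2020 = address_bits(24 * TIB)
print(bits_2020)

# 19 bits remain before 64-bit registers are exhausted: 38 years.
print(2020 + years_between(bits_2020, 64))

# With petabyte storage (50 bits) and 8 tag bits (56 usable): 12 years.
print(years_between(address_bits(2 ** 50), 64 - 8))
```

Changing `YEARS_PER_BIT` shows how sensitive the 2058 estimate is to the assumed doubling rate.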