What's new around the internet

| Src | Date (GMT) | Title | Description | Tags | Stories | Notes |

| 2023-06-23 12:03:59 | Supply chain security for Go, Part 2: Compromised dependencies (direct link) |

Julie Qiu, Go Security & Reliability, and Roger Ng, Google Open Source Security Team

“Secure your dependencies”: it's the new supply chain mantra. With attacks targeting software supply chains sharply rising, open source developers need to monitor and judge the risks of the projects they rely on. Our previous installment of the Supply chain security for Go series shared the ecosystem tools available to Go developers to manage their dependencies and vulnerabilities. This second installment describes the ways that Go helps you trust the integrity of a Go package. Go has built-in protections against three major ways packages can be compromised before reaching you:

1. A new, malicious version of your dependency is published

2. A package is withdrawn from the ecosystem

3. A malicious file is substituted for a currently used version of your dependency

In this blog post we look at real-world scenarios of each situation and show how Go helps protect you from similar attac | ★★ | |||
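The third scenario in the entry above (a file substituted for a version already in use) can be illustrated with a trust-on-first-use checksum pin. The following is a minimal Python sketch of the general idea only. It does not reproduce Go's `go.sum` format or its checksum database, and `PinStore`, the module path, and the archive bytes are hypothetical names invented for illustration.

```python
import hashlib


def archive_digest(archive: bytes) -> str:
    """Return the SHA-256 hex digest of a dependency archive."""
    return hashlib.sha256(archive).hexdigest()


class PinStore:
    """Hypothetical in-memory map of (module, version) -> first-seen digest."""

    def __init__(self) -> None:
        self._pins: dict[tuple[str, str], str] = {}

    def verify(self, module: str, version: str, archive: bytes) -> bool:
        """Pin the digest on first use; afterwards accept only identical content."""
        seen = archive_digest(archive)
        pinned = self._pins.setdefault((module, version), seen)
        return pinned == seen


store = PinStore()
# First download of this version: the digest is recorded and accepted.
first_ok = store.verify("example.com/lib", "v1.2.0", b"original archive bytes")
# Same version, different bytes: the pinned digest no longer matches.
tampered_ok = store.verify("example.com/lib", "v1.2.0", b"tampered archive bytes")
```

Because a published version is expected to be immutable, any change in content under the same version string is treated as a failure rather than silently accepted.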

| 2023-06-22 12:05:42 | Google Cloud Awards $313,337 in 2022 VRP Prizes (direct link) |

Anthony Weems, Information Security Engineer

2022 was a successful year for Google's Vulnerability Reward Programs (VRPs), with over 2,900 security issues identified and fixed, and over $12 million in bounty rewards awarded to researchers. A significant number of these vulnerability reports helped improve the security of Google Cloud products, which in turn helps improve security for our users, customers, and the Internet at large.

We first announced the Google Cloud VRP Prize in 2019 to encourage security researchers to focus on the security of Google Cloud and to incentivize sharing knowledge on Cloud vulnerability research with the world. This year, we were excited to see an increase in collaboration between researchers, which often led to more detailed and complex vulnerability reports. After careful evaluation of the submissions, today we are excited to announce the winners of the 2022 Google Cloud VRP Prize.

2022 Google Cloud VRP Prize Winners

1st Prize - $133,337: Yuval Avrahami for the report and write-up Privilege escalations in GKE Autopilot. Yuval's excellent write-up describes several attack paths that would allow an attacker with permission to create pods in an Autopilot cluster to escalate privileges and compromise the underlying node VMs. While thes | Vulnerability Cloud | Uber | ★★ | |

| 2023-06-20 11:58:57 | Protect and manage browser extensions using Chrome Browser Cloud Management (direct link) |

Posted by Anuj Goyal, Product Manager, Chrome Browser

Browser extensions, while offering valuable functionalities, can seem risky to organizations. One major concern is the potential for security vulnerabilities. Poorly designed or malicious extensions could compromise data integrity and expose sensitive information to unauthorized access. Moreover, certain extensions may introduce performance issues or conflicts with other software, leading to system instability. Therefore, many organizations find it crucial to have visibility into the usage of extensions and the ability to control them. Chrome browser offers these extension management capabilities and reporting via Chrome Browser Cloud Management. In this blog post, we will walk you through how to utilize these features to keep your data and users safe.

Visibility into Extensions being used in your environment

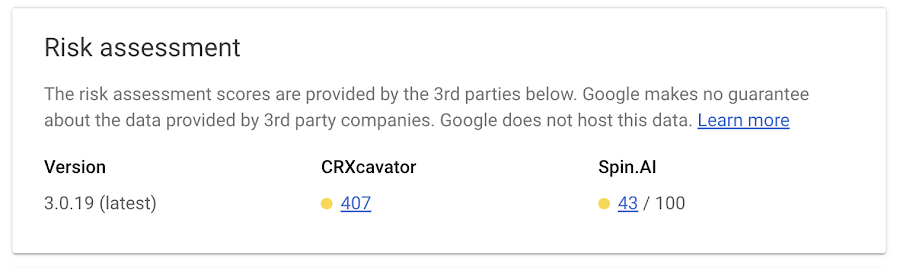

Having visibility into what and how extensions are being used enables IT and security teams to assess potential security implications, ensure compliance with organizational policies, and mitigate potential risks. There are three ways you can get critical information about extensions in your organization:

1. App and extension usage reporting: Organizations can gain visibility into every Chrome extension that is installed across an enterprise's fleet in Chrome App and Extension Usage Reporting.

2. Extension Risk Assessment: CRXcavator and Spin.AI Risk Assessment are tools used to assess the risks of browser extensions and minimize the risks associated with them. We are making extension scores via these two platforms available directly in Chrome Browser Cloud Management, so security teams can have an at-a-glance view of risk scores of the extensions being used in their browser environment.

3. Extension event reporting: Extension install events are now available to alert IT and security teams of new extension usage in their environment. Organizations can send critical browser security events to their chosen solution providers, such as Splunk, Crowdstrike, Palo Alto Networks, and Google solutions, including Chronicle, Cloud PubSub, and Google Workspace, for further analysis. You can also view the event logs directly in Chrome Browser Cloud Management. |

Cloud | ★★★ | ||

| 2023-06-16 13:11:38 | Bringing Transparency to Confidential Computing with SLSA (direct link) |

Asra Ali, Razieh Behjati, Tiziano Santoro, Software Engineers

Every day, personal data, such as location information, images, or text queries are passed between your device and remote, cloud-based services. Your data is encrypted when in transit and at rest, but as potential attack vectors grow more sophisticated, data must also be protected during use by the service, especially for software systems that handle personally identifiable user data.

Toward this goal, Google's Project Oak is a research effort that relies on the confidential computing paradigm to build an infrastructure for processing sensitive user data in a secure and privacy-preserving way: we ensure data is protected during transit, at rest, and while in use. As an assurance that the user data is in fact protected, we've open sourced Project Oak code, and have introduced a transparent release process to provide publicly inspectable evidence that the application was built from that source code.

This blog post introduces Oak's transparent release process, which relies on the SLSA framework to generate cryptographic proof of the origin of Oak's confidential computing stack, and together with Oak's remote attestation process, allows users to cryptographically verify that their personal data was processed by a trustworthy application in a secure environment. | Tool | ★★ | ||
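One small step in verifying that a binary was built from published source is checking that its digest appears as a subject in the provenance statement. The Python sketch below shows only that digest comparison, assuming an in-toto style statement with a `subject` list carrying `sha256` digests. It omits signature verification and remote attestation entirely, is not Oak's actual tooling, and the binary contents and `example_app` name are hypothetical.

```python
import hashlib
import json


def binary_matches_provenance(binary: bytes, statement_json: str) -> bool:
    """Check that the binary's SHA-256 digest is listed as a provenance subject."""
    statement = json.loads(statement_json)
    actual = hashlib.sha256(binary).hexdigest()
    subjects = statement.get("subject", [])
    return any(s.get("digest", {}).get("sha256") == actual for s in subjects)


# Hypothetical binary and a hand-built statement, for illustration only.
binary = b"example application binary"
statement_json = json.dumps({
    "subject": [{
        "name": "example_app",
        "digest": {"sha256": hashlib.sha256(binary).hexdigest()},
    }],
    "predicateType": "https://slsa.dev/provenance/v0.2",
})

matches = binary_matches_provenance(binary, statement_json)
```

A digest match on its own proves nothing unless the statement itself is signed by a trusted builder, so a real verifier would check that signature before trusting the `subject` list.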

| 2023-06-14 11:59:49 | Learnings from kCTF VRP's 42 Linux kernel exploits submissions (direct link) |

Tamás Koczka, Security Engineer

In 2020, we integrated kCTF into Google's Vulnerability Rewards Program (VRP) to support researchers evaluating the security of Google Kubernetes Engine (GKE) and the underlying Linux kernel. As the Linux kernel is a key component not just for Google, but for the Internet, we started heavily investing in this area. We extended the VRP's scope and maximum reward in 2021 (to $50k), then again in February 2022 (to $91k), and finally in August 2022 (to $133k). In 2022, we also summarized our learnings to date in our cookbook, and introduced our experimental mitigations for the most common exploitation techniques.

In this post, we'd like to share our learnings and statistics about the latest Linux kernel exploit submissions, how effective our | Vulnerability | Uber | ★★ | |

| 2023-06-01 11:59:52 | Announcing the Chrome Browser Full Chain Exploit Bonus (direct link) |

Amy Ressler, Chrome Security Team, on behalf of the Chrome VRP

For 13 years, a key pillar of the Chrome security ecosystem has been encouraging security researchers to find security vulnerabilities in Chrome browser and report them to us through the Chrome Vulnerability Rewards Program. Starting today and until 1 December 2023, the first security bug report we receive with a functional full chain exploit, resulting in a Chrome sandbox escape, is eligible for triple the full reward amount. Your full chain exploit could result in a reward of up to $180,000 (potentially more with other bonuses). Any subsequent full chains submitted during this period are eligible for double the full reward amount!

We have historically put a premium on reports with exploits: “high quality reports with a functional exploit” is the highest tier of reward amounts in our Vulnerability Rewards Program. Over the years, the threat model of Chrome browser has evolved as features have matured and new features and new mitigations, such as MiraclePtr, have been introduced. Given these evolutions, we are always interested in explorations of new and novel approaches to fully exploit Chrome browser, and we want to provide opportunities to better incentivize this type of research. These exploits give us valuable insight into the potential attack vectors for exploiting Chrome, and allow us to identify strategies for better hardening specific Chrome features and ideas for future large-scale mitigation strategies.

The full details of this bonus opportunity are available on the Chrome VRP Rules and Rewards page. The summary is as follows: Bug reports may be submitted in advance while exploit development continues during this 180-day window. Functional exploits must be submitted to Chrome by the end of the 180-day window to be eligible for the triple or double reward. The first functional full chain exploit we receive is eligible for the triple reward amount. The full chain exploit must result in a Chrome browser sandbox escape, with a demonstration of attacker control / code execution outside the sandbox. Exploitation must be possible remotely, with no or very limited reliance on user interaction. The exploit must have been functional in an active release channel of Chrome (Dev, Beta, Stable, Extended Stable) at the time of the initial reports of the bugs in that chain. Please do not submit exploits developed from publicly disclosed security bugs or other artifacts in old, past versions of Chrome. As is consistent with our general rewards policy, if the exploit allows for remote code execution (RCE) in the browser or another highly privileged process, such as the network or GPU process, to result in a sandbox escape without the need for a first-stage bug, the reward amount for renderer RCE “high quality report with functional exploit” would be granted and included in the calculation of the bonus reward total. Based on our | Vulnerability Threat | ★★★ | ||

| 2023-05-31 12:00:25 | Adding Chrome Browser Cloud Management remediation actions in Splunk using Alert Actions (direct link) |

Posted by Ashish Pujari, Chrome Security Team

Introduction

Chrome is trusted by millions of business users as a secure enterprise browser. Organizations can use Chrome Browser Cloud Management to help manage Chrome browsers more effectively. Admins can use the Google Admin console to have Chrome report critical security events to third-party service providers such as Splunk® and create custom enterprise security remediation workflows.

Security remediation is the process of responding to security events that have been triggered by a system or a user. Remediation can be done manually or automatically, and it is an important part of an enterprise security program.

Why is Automated Security Remediation Important?

When a security event is identified, it is imperative to respond as soon as possible to prevent data exfiltration and to prevent the attacker from gaining a foothold in the enterprise. Organizations with mature security processes use automated remediation to improve their security posture by reducing the time it takes to respond to security events. This helps the usually overburdened Security Operations Center (SOC) teams avoid alert fatigue.

Automated Security Remediation using Chrome Browser Cloud Management and Splunk

Chrome integrates with Chrome Enterprise Recommended partners such as Splunk® using Chrome Enterprise Connectors to report security events such as malware transfer, unsafe site visits, and password reuse. Other supported events can be found on our support page.

The Splunk integration with Chrome browser allows organizations to collect, analyze, and extract insights from security events. The extended security insights into managed browsers will enable SOC teams to perform better informed automated security remediations using Splunk® Alert Actions.

Splunk Alert Actions are a great capability for automating security remediation tasks. By creating alert actions, enterprises can automate the process of identifying, prioritizing, and remediating security threats.

In Splunk®, SOC teams can use alerts to monitor for and respond to specific Chrome Browser Cloud Management events. Alerts use a saved search to look for events in real time or on a schedule and can trigger an Alert Action when search results meet specific conditions as outlined in the diagram below.

Use Case

If a user downloads a malicious file after bypassing a Chrome “Dangerous File” message, their managed browser or managed CrOS device should be quarantined.

Prerequisites

Create a Chrome Browser Cloud Management account at no additional cost

|

Malware Cloud | ★★ | ||
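The use case in the Splunk entry above (quarantine a device after a bypassed “Dangerous File” warning) could be handled by a custom alert action script. The Python sketch below assumes Splunk's convention of invoking such scripts with `--execute` and passing the alert payload as JSON on standard input. The field names `event`, `event_result`, and `device_id`, their values, and the `quarantine_device` helper are assumptions made for illustration, not confirmed Chrome or Splunk identifiers.

```python
import json
import sys

# Assumed field values; the real Chrome event fields in Splunk may differ.
DANGEROUS_DOWNLOAD = "dangerousDownloadEvent"
BYPASS_RESULT = "EVENT_RESULT_BYPASSED"


def should_quarantine(result: dict) -> bool:
    """Return True when the user bypassed a dangerous-file warning."""
    return (
        result.get("event") == DANGEROUS_DOWNLOAD
        and result.get("event_result") == BYPASS_RESULT
    )


def quarantine_device(device_id: str) -> None:
    """Placeholder: a real action would call a device management API here."""
    print(f"would quarantine device {device_id}", file=sys.stderr)


def handle_payload(payload_text: str) -> bool:
    """Parse the alert payload and quarantine if the triggering result qualifies."""
    payload = json.loads(payload_text)
    result = payload.get("result", {})
    if should_quarantine(result):
        quarantine_device(result.get("device_id", "unknown"))
        return True
    return False


# Only act when started the way an alert action script is expected to be started.
if __name__ == "__main__" and sys.argv[1:2] == ["--execute"]:
    handle_payload(sys.stdin.read())
```

Keeping the decision in `should_quarantine` separate from the side effect makes the rule easy to test against sample events before wiring it to a real remediation API.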

| 2023-05-26 14:06:55 | Time to challenge yourself in the 2023 Google CTF! (direct link) | Vincent Winstead, Technical Program Manager. It's Google CTF time! Get your hacking toolbox ready and prepare your caffeine for rapid intake. The competition kicks off on June 23 2023 6:00 PM UTC and runs through June 25 2023 6:00 PM UTC. Registration is now open at g.co/ctf. Google CTF gives you a chance to challenge your skillz, show off your hacktastic abilities, and learn some new tricks along the way. It consists of a set of computer security puzzles (or challenges) involving reverse-engineering, memory corruption, cryptography, web technologies, and more. Use obscure security knowledge to find exploits through bugs and creative misuse. With each completed challenge your team will earn points and move up through the ranks. The top 8 teams will qualify for our Hackceler8 competition taking place in Tokyo later this year. Hackceler8 is our experimental esport-style hacking game, custom-made to mix CTF and speedrunning. In the competition |

★★ | |||

| 2023-05-26 12:02:28 | Time to challenge yourself in the 2023 Google CTF! (direct link) |

Vincent Winstead, Technical Program Manager

It's Google CTF time! Get your hacking toolbox ready and prepare your caffeine for rapid intake. The competition kicks off on June 23 2023 6:00 PM UTC and runs through June 25 2023 6:00 PM UTC. Registration is now open at g.co/ctf.

Google CTF gives you a chance to challenge your skillz, show off your hacktastic abilities, and learn some new tricks along the way. It consists of a set of computer security puzzles (or challenges) involving reverse-engineering, memory corruption, cryptography, web technologies, and more. Use obscure security knowledge to find exploits through bugs and creative misuse. With each completed challenge your team will earn points and move up through the ranks. The top 8 teams will qualify for our Hackceler8 competition taking place in Tokyo later this year. Hackceler8 is our experimental esport-style hacking game, custom-made to mix CTF and speedrunning. In the competition, teams need to find clever ways to abuse the game features to capture flags as quickly as possible. See the 2022 highlight reel to get a sense of what it's like. The prize pool for this year's event stands at more than $32,000! |

Conference | ★★★★ | ||

| 2023-05-25 12:00:55 | Google Trust Services ACME API available to all users at no cost (direct link) |

David Kluge, Technical Program Manager, and Andy Warner, Product Manager

Nobody likes preventable site errors, but they happen disappointingly often. The last thing you want your customers to see is a dreaded 'Your connection is not private' error instead of the service they expected to reach. Most certificate errors are preventable and one of the best ways to help prevent issues is by automating your certificate lifecycle using the ACME standard. Google Trust Services now offers our ACME API to all users with a Google Cloud account (referred to as “users” here), allowing them to automatically acquire and renew publicly-trusted TLS certificates for free. The ACME API has been available as a preview and over 200 million certificates have been issued already, offering the same compatibility as major Google services like google.com or youtube.com. | Tool Cloud | ★★★ | ||
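Automating the certificate lifecycle starts with deciding when a certificate is due for renewal. The Python sketch below shows only that decision, using the standard library's `ssl.cert_time_to_seconds` to parse a `notAfter` timestamp in the format returned by `ssl.SSLSocket.getpeercert()`. The 30-day threshold is an assumption, and the actual issuance would be left to an ACME client; nothing here calls the Google Trust Services API.

```python
import ssl

SECONDS_PER_DAY = 86400


def days_until_expiry(not_after: str, now: float) -> float:
    """Days between `now` (epoch seconds) and the certificate's notAfter time."""
    expires_at = ssl.cert_time_to_seconds(not_after)
    return (expires_at - now) / SECONDS_PER_DAY


def should_renew(not_after: str, now: float, threshold_days: int = 30) -> bool:
    """Renew once the remaining lifetime drops to the (assumed) threshold."""
    return days_until_expiry(not_after, now) <= threshold_days


# Illustrative timestamp; `now` is passed in so the check stays deterministic.
not_after = "Jan  5 09:34:43 2018 GMT"
expiry = ssl.cert_time_to_seconds(not_after)
ten_days_before = expiry - 10 * SECONDS_PER_DAY
sixty_days_before = expiry - 60 * SECONDS_PER_DAY

renew_soon = should_renew(not_after, ten_days_before)
renew_early = should_renew(not_after, sixty_days_before)
```

Running a check like this on a schedule and handing off to an ACME client when it returns true is what removes the manual step that leads to expired-certificate errors.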

| 2023-05-24 12:49:28 | Announcing the launch of GUAC v0.1 (direct link) |

Brandon Lum and Mihai Maruseac, Google Open Source Security Team

Today, we are announcing the launch of the v0.1 version of Graph for Understanding Artifact Composition (GUAC). Introduced at Kubecon 2022 in October, GUAC targets a critical need in the software industry to understand the software supply chain. In collaboration with Kusari, Purdue University, Citi, and community members, we have incorporated feedback from our early testers to improve GUAC and make it more useful for security professionals. This improved version is now available as an API for you to start developing on top of, and integrating into, your systems.

The need for GUAC

High-profile incidents such as SolarWinds, and the recent 3CX supply chain double-exposure, are evidence that supply chain attacks are getting more sophisticated. As highlighted by the | Tool Vulnerability Threat | Yahoo | ★★ | |

| 2023-05-23 12:01:36 | How the Chrome Root Program Keeps Users Safe (direct link) |

Posted by Chrome Root Program, Chrome Security Team

What is the Chrome Root Program?

A root program is one of the foundations for securing connections to websites. The Chrome Root Program was announced in September 2022. If you missed it, don't worry - we'll give you a quick summary below!

Chrome Root Program: TL;DR

Chrome uses digital certificates (often referred to as “certificates,” “HTTPS certificates,” or “server authentication certificates”) to ensure the connections it makes for its users are secure and private. Certificates are issued by trusted entities called “Certification Authorities” (CAs). The collection of digital certificates, CA systems, and other related online services is the foundation of HTTPS and is often referred to as the “Web PKI.”

Before issuing a certificate to a website, the CA must verify that the certificate requestor legitimately controls the domain whose name will be represented in the certificate. This process is often referred to as “domain validation” and there are several methods that can be used. For example, a CA can specify a random value to be placed on a website, and then perform a check to verify the value's presence. Typically, domain validation practices must conform with a set of security requirements described in both industry-wide and browser-specific policies, like the CA/Browser Forum “Baseline Requirements” and the Chrome Root Program policy.

Upon connecting to a website, Chrome verifies that a recognized (i.e., trusted) CA issued its certificate, while also performing additional evaluations of the connection's security properties (e.g., validating data from Certificate Transparency logs). Once Chrome determines that the certificate is valid, Chrome can use it to establish an encrypted connection to the website. Encrypted connections prevent attackers from being able to intercept (i.e., eavesdrop) or modify communication. In security speak, this is known as confidentiality and integrity.

The Chrome Root Program, led by members of the Chrome Security team, provides governance and security review to determine the set of CAs trusted by default in Chrome. This set of so-called "root certificates" is known as the Chrome Root Store.

How does the Chrome Root Program keep users safe?

The Chrome Root Program keeps users safe by ensuring the CAs Chrome trusts to validate domains are worthy of that trust. We do that by: administering policy and governance activities to manage the set of CAs trusted by default in Chrome, evaluating impact and corresponding security implications related to public security incident disclosures by participating CAs, and leading positive change to make the ecosystem more resilient.

Policy and Governance

The Chrome Root Program policy defines the minimum requirements a CA owner must meet for inclusion in the Chrome Root Store. It incorporates the industry-wide CA/Browser Forum Baseline Requirements and further adds security controls to improve Chrome user security. The CA | ★★ | |||
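The domain validation example in the entry above (a CA specifies a random value, the site owner publishes it, and the CA checks for its presence) can be sketched as follows. This is a simplified Python illustration, not any CA's real procedure: `CHALLENGE_PATH` is a hypothetical location, and `fake_fetch` stands in for an HTTP request so the example runs without network access.

```python
import hmac
import secrets
from typing import Callable, Optional

# Hypothetical path; real validation methods define their own locations.
CHALLENGE_PATH = "/.well-known/example-validation"

Fetcher = Callable[[str, str], Optional[str]]


def issue_challenge() -> str:
    """CA side: generate an unguessable random value for the requestor."""
    return secrets.token_urlsafe(32)


def domain_is_validated(domain: str, expected: str, fetch: Fetcher) -> bool:
    """CA side: fetch the challenge location and compare it with the issued value."""
    served = fetch(domain, CHALLENGE_PATH)
    if served is None:
        return False
    return hmac.compare_digest(served.strip().encode(), expected.encode())


# Simulated website content: only the real owner of example.com could place this.
token = issue_challenge()
site_files = {("example.com", CHALLENGE_PATH): token}


def fake_fetch(domain: str, path: str) -> Optional[str]:
    """Stand-in for an HTTP GET, returning None when nothing is served."""
    return site_files.get((domain, path))


owner_ok = domain_is_validated("example.com", token, fake_fetch)
other_ok = domain_is_validated("attacker.example", token, fake_fetch)
```

The check works because the value is random and issued per request: serving it at the expected location demonstrates control of the domain's content at that moment.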

| 2023-05-17 11:59:38 | New Android & Google Device Vulnerability Reward Program Initiatives (direct link) |

Posted by Sarah Jacobus, Vulnerability Rewards Team

As technology continues to advance, so do the efforts of cybercriminals who look to exploit vulnerabilities in software and devices. This is why at Google and Android, security is a top priority, and we are constantly working to make our products more secure. One way we do this is through our Vulnerability Reward Programs (VRP), which incentivize security researchers to find and report vulnerabilities in our operating system and devices.

We are pleased to announce that we are implementing a new quality rating system for security vulnerability reports to encourage more security research in higher impact areas of our products and ensure the security of our users. This system will rate vulnerability reports as High, Medium, or Low quality based on the level of detail provided in the report. We believe that this new system will encourage researchers to provide more detailed reports, which will help us address reported issues more quickly and enable researchers to receive higher bounty rewards. The highest quality and most critical vulnerabilities are now eligible for larger rewards of up to $15,000!

There are a few key elements we are looking for:

Accurate and detailed description: A report should clearly and accurately describe the vulnerability, including the device name and version. The description should be detailed enough to easily understand the issue and begin working on a fix.

Root cause analysis: A report should include a full root cause analysis that describes why the issue is occurring and what Android source code should be patched to fix it. This analysis should be thorough and provide enough information to understand the underlying cause of the vulnerability.

Proof of concept: A report should include a proof of concept that effectively demonstrates the vulnerability. This can include video recordings, debugger output, or other relevant information. The proof of concept should be of high quality and include the minimum amount of code possible to demonstrate the issue.

Reproducibility: A report should include a step-by-step explanation of how to reproduce the vulnerability on an eligible device running the latest version. This information should be clear and concise and should allow our engineers to easily reproduce the issue and begin working on a fix.

Evidence of reachability: Finally, a report should include evidence or analysis that demonstrates the type of issue and the level of access or execution achieved.

* Note: these criteria may change over time. For the most up-to-date information, please refer to our public rules page.

Additionally, starting March 15, 2023, Android will no longer assign Common Vulnerabilities and Exposures (CVEs) to most moderate severity issues. CVEs will continue to be assigned to critical and high severity vulnerabilities. We believe that incentivizing researchers to provide high-quality reports will benefit both the broader security community and our | Vulnerability | ★★ | ||

| 2023-05-15 13:35:50 | $22k awarded to SBFT '23 fuzzing competition winners (direct link) |

Dongge Liu, Jonathan Metzman and Oliver Chang, Google Open Source Security Team

Google's Open Source Security Team recently sponsored a fuzzing competition as part of ICSE's Search-Based and Fuzz Testing (SBFT) Workshop. Our goal was to encourage the development of new fuzzing techniques, which can lead to the discovery of software vulnerabilities and ultimately a safer open source ecosystem. The competitors' fuzzers were judged on code coverage and their ability to discover bugs: HasteFuzz took the $11,337 prize for code coverage, while PASTIS and AFLrustrust tied for bug discovery and split the $11,337 prize. Competitors were evaluated using | Conference | ★★ | ||

| 2023-05-11 12:44:52 | Introducing a new way to buzz for eBPF vulnerabilities (direct link) |

Juan José López Jaimez, Security Researcher and Meador Inge, Security Engineer

Today, we are announcing Buzzer, a new eBPF Fuzzing framework that aims to help harden the Linux Kernel.

What is eBPF and how does it verify safety?

eBPF is a technology that allows developers and sysadmins to easily run programs in a privileged context, like an operating system kernel. Recently, its popularity has increased, with more products adopting it as, for example, a network filtering solution. At the same time, it has maintained its relevance in the security research community, since it provides a powerful attack surface into the operating system.

While there are many solutions for fuzzing vulnerabilities in the Linux Kernel, they are not necessarily tailored to the unique features of eBPF. In particular, eBPF has many complex security rules that programs must follow to be considered valid and safe. These rules are enforced by a component of eBPF referred to as the "verifier". The correctness properties of the verifier implementation have proven difficult to understand by reading the source code alone. That's why our security team at Google decided to create a new fuzzer framework that aims to test the limits of the eBPF verifier through generating eBPF programs.

The eBPF verifier's main goal is to make sure that a program satisfies a certain set of safety rules, for example: programs should not be able to write outside designated memory regions, certain arithmetic operations should be restricted on pointers, and so on. However, like all pieces of software, there can be holes in the logic of these checks. This could potentially cause unsafe behavior of an eBPF program and have security implications. | ★★ | |||
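The idea of testing a verifier by generating programs can be shown with a toy model. The Python sketch below is not Buzzer's design and does not use real eBPF instructions: a "program" is just a list of hypothetical `(offset, size)` memory writes, `buggy_verifier` is a deliberately flawed static check, and `is_safe_at_runtime` is the ground truth. The fuzzer looks for a program the verifier accepts even though it writes outside the designated region.

```python
import random
from typing import Optional

REGION_SIZE = 16  # bytes a toy program is allowed to write to

Store = tuple[int, int]  # (offset, size) of one memory write


def is_safe_at_runtime(program: list[Store]) -> bool:
    """Ground truth: every write stays inside [0, REGION_SIZE)."""
    return all(0 <= off and off + size <= REGION_SIZE for off, size in program)


def buggy_verifier(program: list[Store]) -> bool:
    """Deliberately flawed check: it ignores the size of each write."""
    return all(0 <= off < REGION_SIZE for off, _size in program)


def fuzz(iterations: int, seed: int = 0) -> Optional[list[Store]]:
    """Generate random programs; return one the verifier accepts but is unsafe."""
    rng = random.Random(seed)
    for _ in range(iterations):
        program = [
            (rng.randint(0, REGION_SIZE + 4), rng.randint(1, 8))
            for _ in range(rng.randint(1, 3))
        ]
        if buggy_verifier(program) and not is_safe_at_runtime(program):
            return program
    return None


# Any returned program is evidence of a hole in the verifier's logic.
counterexample = fuzz(iterations=1000)
```

The useful signal is the disagreement between the two checks: a program that is both accepted and unsafe points at a specific rule the verifier fails to enforce.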

| 2023-05-10 14:59:36 | I/O 2023: What's new in Android security and privacy (direct link) |

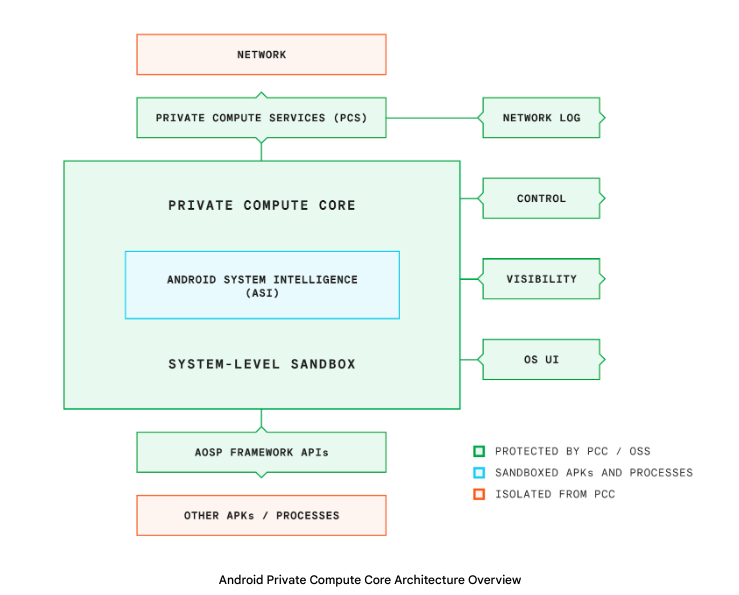

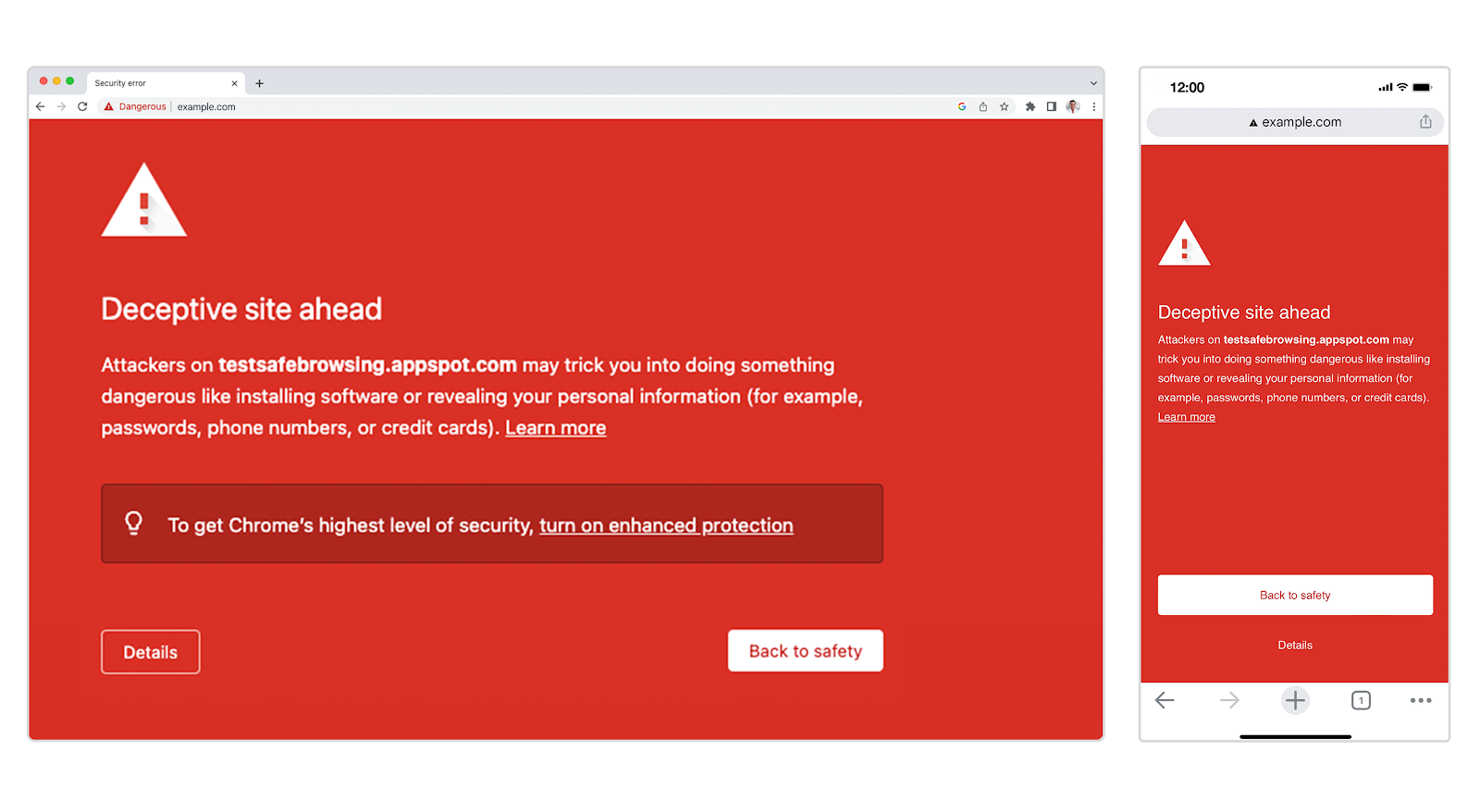

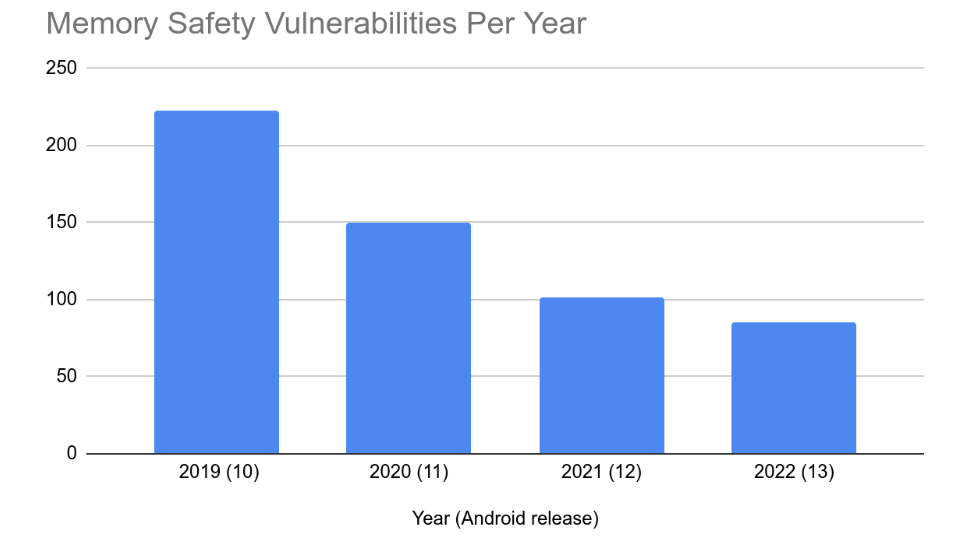

Posted by Ronnie Falcon, Product Manager

Android is built with multiple layers of security and privacy protections to help keep you, your devices, and your data safe. Most importantly, we are committed to transparency, so you can see your device safety status and know how your data is being used. Android uses the best of Google's AI and machine learning expertise to proactively protect you and help keep you out of harm's way. We also empower you with tools that help you take control of your privacy. I/O is a great moment to show how we bring these features and protections all together to help you stay safe from threats like phishing attacks and password theft, while remaining in charge of your personal data.

Safe Browsing: faster, more intelligent protection

Android uses Safe Browsing to protect billions of users from web-based threats, like deceptive phishing sites. This happens in the Chrome default browser and also in Android WebView, when you open web content from apps. Safe Browsing is getting a big upgrade with a new real-time API that helps ensure you're warned about fast-emerging malicious sites. With the newest version of Safe Browsing, devices will do real-time blocklist checks for low reputation sites. Our internal analysis has found that a significant number of phishing sites exist for less than ten minutes to try and stay ahead of blocklists. With this real-time detection, we expect we'll be able to block an additional 25 percent of phishing attempts every month in Chrome and Android¹.

Safe Browsing isn't just getting faster at warning users. We've also been building in more intelligence, leveraging Google's advances in AI. Last year, Chrome browser on Android and desktop started utilizing a new image-based phishing detection machine learning model to visually inspect fake sites that try to pass themselves off as legitimate log-in pages. By leveraging a TensorFlow Lite model, we're able to find 3x more² phishing sites compared to previous machine learning models and help warn you before you get tricked into signing in. This year, we're expanding the coverage of the model to detect hundreds more phishing campaigns and leverage new ML technologies. This is just one example of how we use our AI expertise to keep your data safe. Last year, Android used AI to protect users from 100 billion suspected spam messages and calls.³

Passkeys help move users beyond passwords

For many, passwords are the primary protection for their online life. In reality, they are frustrating to create and remember, and are easily hacked. But hackers can't phish a password that doesn't exist. That is why we are excited to share another major step forward in our passwordless journey: Passkeys. | Spam Malware Tool | ★★★ | ||

| 2023-05-05 12:00:43 | Making authentication faster than ever: passkeys vs. passwords (direct link) |

Silvia Convento, Senior UX Researcher and Court Jacinic, Senior UX Content Designer

In recognition of World Password Day 2023, Google announced its next step toward a passwordless future: passkeys. Passkeys are a new, passwordless authentication method that offers a convenient authentication experience for sites and apps, using just a fingerprint, face scan or other screen lock. They are designed to enhance online security for users. Because they are based on the public key cryptographic protocols that underpin security keys, they are resistant to phishing and other online attacks, making them more secure than SMS, app-based one-time passwords and other forms of multi-factor authentication (MFA). And since passkeys are standardized, a single implementation enables a passwordless experience across browsers and operating systems.

Passkeys can be used in two different ways: on the same device or from a different device. For example, if you need to sign in to a website on an Android device and you have a passkey stored on that same device, then using it only involves unlocking the phone. On the other hand, if you need to sign in to that website on the Chrome browser on your computer, you simply scan a QR code to connect the phone and computer to use the passkey.

The technology behind the former (“same device passkey”) is not new: it was originally developed within the FIDO Alliance and first implemented by Google in August 2019 in select flows. Google and other FIDO members have been working together on enhancing the underlying technology of passkeys over the last few years to improve their usability and convenience. This technology behind passkeys allows users to log in to their account using any form of device-based user verification, such as biometrics or a PIN code. A credential is only registered once on a user's personal device, and then the device proves possession of the registered credential to the remote server by asking the user to use their device's screen lock. The user's biometric, or other screen lock data, is never sent to Google's servers - it stays securely stored on the device, and only cryptographic proof that the user has correctly provided it is sent to Google. Passkeys are also created and stored on your devices and are not sent to websites or apps. If you create a passkey on one device, the Google Password Manager can make it available on your other devices that are signed into the same system account.

Learn more on how passkey works under the hoo | APT 38 APT 15 APT 10 Guam | ★★ | ||

| 2023-05-05 11:02:09 | Introducing rules_oci (direct link) |

Appu Goundan, Google Open Source Security Team

Today, we are announcing the General Availability 1.0 version of rules_oci, an open-sourced Bazel plugin (“ruleset”) that makes it simpler and more secure to build container images with Bazel. This effort was a collaboration we had with Aspect and the Rules Authors Special Interest Group. In this post, we'll explain how rules_oci differs from its predecessor, rules_docker, and describe the benefits it offers for both container image security and the container community.

Bazel and Distroless for supply chain security

Google's popular build and test tool, known as Bazel, is gaining fast adoption within enterprises thanks to its ability to scale to the largest codebases and handle builds in almost any language. Because Bazel manages and caches dependencies by their integrity hash, it is uniquely suited to make assurances about the supply chain based on the Trust-on-First-Use principle. One way Google uses Bazel is to build widely used Distroless base images for Docker. Distroless is a series of minimal base images which improve supply-chain security. They restrict what's in your runtime container to precisely what's necessary for your app, which is a best practice employed by Google and other tech companies that have used containers in production for many years. Using minimal base images reduces the burden of managing risks associated with security vulnerabilities, licensing, and governance issues in the supply chain for building applications.

rules_oci vs rules_docker | ★★ | |||
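The Trust-on-First-Use idea mentioned in the rules_oci entry can be illustrated with a minimal sketch: record a dependency's SHA-256 digest the first time it is seen, and reject any later content whose digest differs. This is not how Bazel or rules_oci is implemented; `PinStore` and the dependency name are hypothetical and exist only for illustration.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the hex-encoded SHA-256 digest of some bytes."""
    return hashlib.sha256(data).hexdigest()


class PinStore:
    """Hypothetical in-memory pin store: dependency name -> first-seen digest."""

    def __init__(self) -> None:
        self._pins: dict[str, str] = {}

    def check(self, name: str, content: bytes) -> bool:
        """Pin the digest on first use; afterwards accept only identical content."""
        digest = sha256_hex(content)
        pinned = self._pins.setdefault(name, digest)  # first use records the pin
        return pinned == digest


store = PinStore()
first = store.check("base-image", b"layer-v1")              # first use: pinned
again = store.check("base-image", b"layer-v1")              # same bytes: accepted
tampered = store.check("base-image", b"layer-v1-modified")  # different bytes: rejected
```

A real build system would persist the pins (for example in a lockfile) so that the check survives across machines and runs.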

| 2023-05-03 08:20:21 | So long passwords, thanks for all the phish (direct link) |

By: Arnar Birgisson and Diana K Smetters, Identity Ecosystems and Google Account Security and Safety teams

Starting today, you can create and use passkeys on your personal Google Account. When you do, Google will not ask for your password or 2-Step Verification (2SV) when you sign in.

Passkeys are a more convenient and safer alternative to passwords. They work on all major platforms and browsers, and allow users to sign in by unlocking their computer or mobile device with their fingerprint, face recognition or a local PIN.

Using passwords puts a lot of responsibility on users. Choosing strong passwords and remembering them across various accounts can be hard. In addition, even the most savvy users are often misled into giving them up during phishing attempts. 2SV (2FA/MFA) helps, but again puts strain on the user with additional, unwanted friction and still doesn't fully protect against phishing attacks and targeted attacks like "SIM swaps" for SMS verification. Passkeys help address all these issues.

Creating passkeys on your Google Account

When you add a passkey to your Google Account, we will start asking for it when you sign in or perform sensitive actions on your account. The passkey itself is stored on your local computer or mobile device, which will ask for your screen lock biometrics or PIN to confirm it's really you. Biometric data is never shared with Google or any other third party – the screen lock only unlocks the passkey locally.

Unlike passwords, passkeys can only exist on your devices. They cannot be written down or accidentally given to a bad actor. When you use a passkey to sign | ★★★ | |||

| 2023-05-02 09:01:05 | Google and Apple lead initiative for an industry specification to address unwanted tracking (direct link) |

Companies welcome input from industry participants and advocacy groups on a draft specification to alert users in the event of suspected unwanted tracking

Location-tracking devices help users find personal items like their keys, purse, luggage, and more through crowdsourced finding networks. However, they can also be misused for unwanted tracking of individuals.

Today Google and Apple jointly submitted a proposed industry specification to help combat the misuse of Bluetooth location-tracking devices for unwanted tracking. The first-of-its-kind specification will allow Bluetooth location-tracking devices to be compatible with unauthorized tracking detection and alerts across Android and iOS platforms. Samsung, Tile, Chipolo, eufy Security, and Pebblebee have expressed support for the draft specification, which offers best practices and instructions for manufacturers, should they choose to build these capabilities into their products.

“Bluetooth trackers have created tremendous user benefits but also bring the potential of unwanted tracking, which requires industry-wide action to solve,” said Dave Burke, Google's vice president of Engineering for Android. “Android has an unwavering commitment to protecting users and will continue to develop strong safeguards and collaborate with the industry to help combat the misuse of Bluetooth tracking devices.”

“Apple launched AirTag to give users the peace of mind knowing where to find their most important items,” said Ron Huang, Apple's vice president of Sensing and Connectivity. “We built AirTag and the Find My network with a set of proactive features to discourage unwanted tracking, a first in the industry, and we continue to make improvements to help ensure the technology is being used as intended. This new industry specification builds upon the AirTag protections, and through collaboration with Google, results in a critical step forward to help combat unwanted tracking across iOS and Android.”

In addition to incorporating feedback from device manufacturers, input from various safety and advocacy groups has been integrated into the development of the specification.

“The National Network to End Domestic Violence has been advocating for universal standards to protect survivors – and all people – from the misuse of Bluetooth tracking devices. This collaboration and the resulting standards are a significant step forward. NNEDV is encouraged by this progress,” said Erica Olsen, the National Network to End Domestic Violence's senior director of its Safety Net Project. “These new standards will minimize opportunities for abuse of this technology and decrease burdens on survivors in detecting unwanted trackers. We are grateful for these efforts and look forward to continuing to work together to address unwanted tracking and misuse.”

“Today's release of a draft specification is a welcome step to confront harmful misuses of Bluetooth location trackers,” said Alexandra Reeve Givens, the Center for Democracy & Technology's president and CEO. “CDT continues to focus on ways to make these devices more detectable and reduce the likelihood that they will be used to track people. A key |

★★ | |||

| 2023-04-28 11:59:39 | Secure mobile payment transactions enabled by Android Protected Confirmation (direct link) |

Posted by Rae Wang, Director of Product Management (Android Security and Privacy Team)

Unlike other mobile OSes, Android is built with a transparent, open-source architecture. We firmly believe that our users and the mobile ecosystem at large should be able to verify Android's security and safety, and not just take our word for it. We have demonstrated our deep belief in security transparency by investing in features that let users confirm that what they expect is happening on their device.

The assurance of Android Protected Confirmation

One of these features is Android Protected Confirmation, an API that lets developers use Android hardware to give users even more assurance that a critical action has been executed securely. Using a hardware-protected user interface, Android Protected Confirmation can help developers verify a user's action intent with a very high degree of confidence. This can be especially useful in a number of user moments, such as mobile payment transactions, that benefit greatly from additional verification and security.

We are excited to see that Android Protected Confirmation is now gaining ecosystem attention as a leading method for confirming critical user actions via hardware. Recently, UBS Group AG and the Bern University of Applied Sciences, co-financed by Innosuisse and UBS Next, announced that they are working with Google on a pilot project to establish Protected Confirmation as a common application programmable interface standard. In a pilot planned for 2023, UBS online banking customers with Pixel 6 or 7 devices can use Android Protected Confirmation backed by StrongBox, a certified vault with physical attack protection, to confirm payments and verify online purchases through hardware-based confirmation in their UBS Access App.

Demonstrating real-world use for Android Protected Confirmation

We have worked closely with UBS to bring this pilot to life and make sure they can test it on Google Pixel devices. Demonstrating real-world use cases that are enabled by Android Protected Confirmation unlocks the promise of this tech | ★★★ | |||

| 2023-04-27 11:01:43 | How we fought bad apps and bad actors in 2022 (direct link) |

Posted by Anu Yamunan and Khawaja Shams (Android Security and Privacy Team), and Mohet Saxena (Compute Trust and Safety)

Keeping Google Play safe for users and developers remains a top priority for Google. Google Play Protect continues to scan billions of installed apps each day across billions of Android devices to keep users safe from threats like malware and unwanted software.

In 2022, we prevented 1.43 million policy-violating apps from being published on Google Play in part due to new and improved security features and policy enhancements - in combination with our continuous investments in machine learning systems and app review processes. We also continued to combat malicious developers and fraud rings, banning 173K bad accounts, and preventing over $2 billion in fraudulent and abusive transactions. We've raised the bar for new developers to join the Play ecosystem with phone, email, and other identity verification methods, which contributed to a reduction in accounts used to publish violative apps. We continued to partner with SDK providers to limit sensitive data access and sharing, enhancing the privacy posture for over one million apps on Google Play.

With strengthened Android platform protections and policies, and developer outreach and education, we prevented about 500K submitted apps from unnecessarily accessing sensitive permissions over the past 3 years.

Developer Support and Collaboration to Help Keep Apps Safe

As the Android ecosystem expands, it's critical for us to work closely with the developer community to ensure they have the tools, knowledge, and support to build secure and trustworthy apps that respect user data security and privacy.

In 2022, the App Security Improvements program helped developers fix ~500K security weaknesses affecting ~300K apps with a combined install base of approximately 250B installs. We also launched the Google Play SDK Index to help developers evaluate an SDK's reliability and safety and make informed decisions about whether an SDK is right for their business and their users. We will keep working closely with SDK providers to improve app and SDK safety, limit how user data is shared, and improve lines of communication with app developers.

We also recently launched new features and resources to give developers a better policy experience. We've expanded our Helpline pilot to give more developers direct policy phone support. And we piloted the Google Play Developer Community so more developers can discuss policy questions and exchange best practices on how to build |

Malware Prediction | Uber | ★★★★ | |

| 2023-04-26 11:00:21 | Celebrating SLSA v1.0: securing the software supply chain for everyone (direct link) |

Bob Callaway, Staff Security Engineer, Google Open Source Security team

Last week the Open Source Security Foundation (OpenSSF) announced the release of SLSA v1.0, a framework that helps secure the software supply chain. Ten years of using an internal version of SLSA at Google has shown that it's crucial to warding off tampering and keeping software secure. It's especially gratifying to see SLSA reaching v1.0 as an open source project: contributors have come together to produce solutions that will benefit everyone.

SLSA for safer supply chains

Developers and organizations that adopt SLSA will be protecting themselves against a variety of supply chain attacks, which have continued rising since Google first donated SLSA to OpenSSF in 2021. In that time, the industry has also seen a U.S. Executive Order on Cybersecurity and the associated NIST Secure Software Development Framework (SSDF) to guide national standards for software used by the U.S. government, as well as the Network and Information Security (NIS2) Directive in the European Union. SLSA offers not only an onramp to meeting these standards, but also a way to prepare for a climate of increased scrutiny on software development practices. As organizations benefit from using SLSA, it's also up to them to shoulder part of the burden of spreading these benefits to open source projects. Many maintainers of the critical open source projects that underpin the internet are volunteers; they cannot be expected to do all the work when so many of the rewards of adopting SLSA roll out across the supply chain to benefit everyone.

Supply chain security for all

That's why beyond contributing to SLSA, we've also been laying the foundation to integrate supply chain solutions directly into the ecosystems and platforms used to create open source projects. We're also directly supporting open source maintainers, who often cite lack of time or resources as limiting factors when making security improvements to their projects. Our Open Source Security Upstream Team consists of developers who spend 100% of their time contributing to critical open source projects to make security improvements. For open source developers who choose to adopt SLSA on their own, we've funded the Secure Open Source Rewards Program, which pays developers directly for these types of security improvements. Currently, open source developers who want to secure their builds can use the free SLSA L3 GitHub Builder, which requires only a one-time adjustment to the traditional build process implemented through GitHub Actions. There's also the SLSA Verifier tool for software consumers. Users of npm (Node Package Manager, the world's largest software repository) can take advantage of their recently released beta SLSA integration, which streamlines the process of creating and verifying SLSA provenance through the npm command line interface. We're also supporting the integration of Sigstore into many major | Tool Patching | ★★ | ||

| 2023-04-24 12:00:03 | Google Authenticator now supports Google Account synchronization (direct link) |

Christiaan Brand, Group Product Manager | ★★★ | |||

| 2023-04-18 12:00:25 | Securely Hosting User Data in Modern Web Applications (direct link) |

Posted by David Dworken, Information Security Engineer, Google Security Team

Many web applications need to display user-controlled content. This can be as simple as serving user-uploaded images (e.g. profile photos), or as complex as rendering user-controlled HTML (e.g. a web development tutorial). This has always been difficult to do securely, so we've worked to find easy, but secure solutions that can be applied to most types of web applications.

Classical Solutions for Isolating Untrusted Content

The classic solution for securely serving user-controlled content is to use what are known as “sandbox domains”. The basic idea is that if your application's main domain is example.com, you could serve all untrusted content on exampleusercontent.com. Since these two domains are cross-site, any malicious content on exampleusercontent.com can't impact example.com. This approach can be used to safely serve all kinds of untrusted content including images, downloads, and HTML. While it may not seem necessary to use this for images or downloads, doing so helps avoid risks from content sniffing, especially in legacy browsers. Sandbox domains are widely used across the industry and have worked well for a long time. But they have two major downsides. First, applications often need to restrict content access to a single user, which requires implementing authentication and authorization. Since sandbox domains purposefully do not share cookies with the main application domain, this is very difficult to do securely. To support authentication, sites either have to rely on capability URLs, or they have to set separate authentication cookies for the sandbox domain. This second method is especially problematic in the modern web where many browsers restrict cross-site cookies by default. Second, while user content is isolated from the main site, it isn't isolated from other user content. This creates the risk of malicious user content attacking other data on the sandbox domain (e.g. via reading same-origin data). It is also worth noting that sandbox domains help mitigate phishing risks since resources are clearly segmented onto an isolated domain.

Modern Solutions for Serving User Content

Over time the web has evolved, and there are now easier, more secure ways to serve untrusted content. There are many different approaches here, so we will outline two solutions that are currently in wide use at Google.

Approach 1: Serving Inactive User Content

If a site only needs to serve inactive user content (i.e. content that is not HTML/JS, for example images and downloads), this can now be safely done without an isolated sandbox domain. There are two key steps: Always set the Content-Type header to a well-known MIME type that is supported by all browsers and guaranteed not to contain active content (when in doubt, application/octet-stream is a safe choice). In addition, always set the below response headers to ensure that the browser fully isolates the response. | Threat | ★★ | ||
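The first step of Approach 1 can be sketched as a small helper that picks a safe `Content-Type` and falls back to `application/octet-stream` when in doubt. The excerpt is cut off before it lists the additional response headers, so that list is not reproduced here. The `X-Content-Type-Options: nosniff` header below is an assumption added for illustration (it is a standard header that disables MIME sniffing, the risk mentioned earlier in the excerpt), and the `INACTIVE_TYPES` allowlist and function name are hypothetical.

```python
# Hypothetical allowlist of MIME types treated as inactive content.
INACTIVE_TYPES = {"image/png", "image/jpeg", "image/gif", "image/webp"}


def inactive_content_headers(declared_type: str) -> dict[str, str]:
    """Build response headers for user content that must never run as HTML/JS."""
    # Normalize "Image/PNG; charset=x" to "image/png" before checking the allowlist.
    base_type = declared_type.split(";")[0].strip().lower()
    if base_type not in INACTIVE_TYPES:
        # When in doubt, serve the bytes as an opaque download type.
        base_type = "application/octet-stream"
    return {
        "Content-Type": base_type,
        # Assumption: not taken from the (truncated) header list in the excerpt.
        "X-Content-Type-Options": "nosniff",
    }


png_headers = inactive_content_headers("image/png")
html_headers = inactive_content_headers("text/html")
```

Here a declared `text/html` upload is served as `application/octet-stream`, so the browser has no reason to render it as a page.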

| 2023-04-13 12:04:31 | Supply chain security for Go, Part 1: Vulnerability management (direct link) |

Posted by Julie Qiu, Go Security & Reliability and Oliver Chang, Google Open Source Security Team

High profile open source vulnerabilities have made it clear that securing the supply chains underpinning modern software is an urgent, yet enormous, undertaking. As supply chains get more complicated, enterprise developers need to manage the tidal wave of vulnerabilities that propagate up through dependency trees. Open source maintainers need streamlined ways to vet proposed dependencies and protect their projects. A rise in attacks coupled with increasingly complex supply chains means that supply chain security problems need solutions on the ecosystem level. One way developers can manage this enormous risk is by choosing a more secure language. As part of Google's commitment to advancing cybersecurity and securing the software supply chain, Go maintainers are focused this year on hardening supply chain security, streamlining security information to our users, and making it easier than ever to make good security choices in Go. This is the first in a series of blog posts about how developers and enterprises can secure their supply chains with Go. Today's post covers how Go helps teams with the tricky problem of managing vulnerabilities in their open source packages.

Extensive Package Insights

Before adopting a dependency, it's important to have high-quality information about the package. Seamless access to comprehensive information can be the difference between an informed choice and a future security incident from a vulnerability in your supply chain. Along with providing package documentation and version history, the Go package discovery site links to Open Source Insights. The Open Source Insights page includes vulnerability information, a dependency tree, and a security score provided by the OpenSSF Scorecard project. Scorecard evaluates projects on more than a dozen security metrics, each backed up with supporting information, and assigns the project an overall score out of ten to help users quickly judge its security stance. The Go package discovery site puts all these resources at developers' fingertips when they need them most: before taking on a potentially risky dependency.

Curated Vulnerability Information

Large consumers of open source software must manage many packages and a high volume of vulnerabilities. For enterprise teams, filtering out noisy, low quality advisories and false positives from critical vulnerabilities is often the most important task in vulnerability management. If it is difficult to tell which vulnerabilities are important, it is impossible to properly prioritize their remediation. With granular advisory details, the Go vulnerability database removes barriers to vulnerability prioritization and remediation. All vulnerability database entries are reviewed and curated by the Go security team. As a result, entries are accurate and include detailed metadata to improve the quality of vulnerability scans and to make vulnerability information more actionable. This metadata includes information on affected functions, operating systems, and architectures. With this information, vulnerability scanners can reduce the number of false po | Tool Vulnerability | ★★ | ||
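To see why metadata on affected functions, operating systems, and architectures helps, consider a minimal sketch of a scanner that only reports an advisory when the build target matches and the program actually calls an affected symbol. This is an illustration, not the Go vulnerability database format or the logic of any real scanner; the `Advisory` fields, IDs, and package names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Advisory:
    """Hypothetical advisory record; an empty set means 'applies to all'."""
    id: str
    package: str
    symbols: frozenset = frozenset()  # affected functions or methods
    goos: frozenset = frozenset()     # affected operating systems
    goarch: frozenset = frozenset()   # affected architectures


def is_relevant(adv: Advisory, called: dict, goos: str, goarch: str) -> bool:
    """Return True only if the advisory can affect this particular build."""
    if adv.package not in called:
        return False  # the package is not used at all
    if adv.goos and goos not in adv.goos:
        return False  # a different operating system is affected
    if adv.goarch and goarch not in adv.goarch:
        return False  # a different architecture is affected
    if adv.symbols and not (adv.symbols & called[adv.package]):
        return False  # none of the affected functions are called
    return True


advisories = [
    Advisory("EXAMPLE-1", "example.com/zip", symbols=frozenset({"Reader.Open"})),
    Advisory("EXAMPLE-2", "example.com/net", goos=frozenset({"windows"})),
    Advisory("EXAMPLE-3", "example.com/unused"),
]
# Symbols the program calls, grouped by package (hypothetical call-graph output).
called = {
    "example.com/zip": {"Reader.Open", "NewWriter"},
    "example.com/net": {"Dial"},
}
relevant_ids = [a.id for a in advisories if is_relevant(a, called, "linux", "amd64")]
```

Of the three advisories, only `EXAMPLE-1` is reported for a linux/amd64 build; a scanner matching on package names alone would have flagged two.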

| 2023-04-11 12:11:33 | Announcing the deps.dev API: critical dependency data for secure supply chains (direct link) |

Posted by Jesper Sarnesjo and Nicky Ringland, Google Open Source Security Team Today, we are excited to announce the deps.dev API, which provides free access to the deps.dev dataset of security metadata, including dependencies, licenses, advisories, and other critical health and security signals for more than 50 million open source package versions. Software supply chain attacks are increasingly common and harmful, with high profile incidents such as Log4Shell, Codecov, and the recent 3CX hack. The overwhelming complexity of the software ecosystem causes trouble for even the most diligent and well-resourced developers. We hope the deps.dev API will help the community make sense of complex dependency data that allows them to respond to-or even prevent-these types of attacks. By integrating this data into tools, workflows, and analyses, developers can more easily understand the risks in their software supply chains. The power of dependency data As part of Google\'s ongoing efforts to improve open source security, the Open Source Insights team has built a reliable view of software metadata across 5 packaging ecosystems. The deps.dev data set is continuously updated from a range of sources: package registries, the Open Source Vulnerability database, code hosts such as GitHub and GitLab, and the software artifacts themselves. This includes 5 million packages, more than 50 million versions, from the Go, Maven, PyPI, npm, and Cargo ecosystems-and you\'d better believe we\'re counting them! We collect and aggregate this data and derive transitive dependency graphs, advisory impact reports, OpenSSF Security Scorecard information, and more. Where the deps.dev website allows human exploration and examination, and the BigQuery dataset supports large-scale bulk data analysis, this new API enables programmatic, real-time access to the corpus for integration into tools, workflows, and analyses. 
The API is used by a number of teams internally at Google to support the security of our own products. One of the first publicly visible uses is the GUAC integration, which uses the deps.dev data to enrich SBOMs. We have more exciting integrations in the works, but we're most excited to see what the greater open source community builds! We see the API as being useful for tool builders, researchers, and tinkerers who want to answer questions like: What versions are available for this package? What are the licenses that cover this version of a package, or all the packages in my codebase? How many dependencies does this package have? What are they? Does the latest version of this package include changes to dependencies or licenses? What versions of what packages correspond to this file? Taken together, this information can help answer the most important overarching question: how much risk would this dependency add to my project? The API can help surface critical security information where and when developers can act. This data can be integrated into: IDE Plugins, to make dependency and security information immediately available. CI | Tool Vulnerability | ★★ | ||
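
As an illustration of the "what licenses and advisories cover this version?" question above, here is a minimal Python sketch of a version lookup. The base URL, the `/systems/{system}/packages/{name}/versions/{version}` path layout, and the `licenses` / `advisoryKeys` response fields are assumptions drawn from the public deps.dev API documentation, not from this post; check the current API version before relying on them. The helper names are hypothetical.

```python
import json
import urllib.request
from urllib.parse import quote

# Assumed base URL; the API was announced as an alpha, so the version
# segment may differ from what is shown here.
BASE = "https://api.deps.dev/v3"


def version_url(system: str, name: str, version: str) -> str:
    """Build the lookup URL for one package version.

    Every path segment is percent-encoded, because package names such as
    "@scope/name" contain characters that are not valid inside a segment.
    """
    return (
        f"{BASE}/systems/{quote(system, safe='')}"
        f"/packages/{quote(name, safe='')}"
        f"/versions/{quote(version, safe='')}"
    )


def fetch_json(url: str, timeout: float = 10.0) -> dict:
    """Perform a GET request and decode the JSON body (network access needed)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def summarize_version(doc: dict) -> dict:
    """Reduce a version document to its licenses and advisory IDs.

    The field names are assumptions; missing fields yield empty lists.
    """
    return {
        "licenses": doc.get("licenses", []),
        "advisories": [key["id"] for key in doc.get("advisoryKeys", [])],
    }
```

A caller with network access would chain the helpers, for example `summarize_version(fetch_json(version_url("npm", "react", "18.2.0")))`. Keeping the URL builder and the summarizer free of I/O makes them easy to test offline.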

| 2023-03-08 12:04:53 | OSV and the Vulnerability Life Cycle (direct link) | Posted by Oliver Chang and Andrew Pollock, Google Open Source Security Team It is an interesting time for everyone concerned with open source vulnerabilities. The U.S. Executive Order on Improving the Nation's Cybersecurity requirements for vulnerability disclosure programs and assurances for software used by the US government will go into effect later this year. Finding and fixing security vulnerabilities has never been more important, yet with increasing interest in the area, the vulnerability management space has become fragmented: there are a lot of new tools and competing standards. In 2021, we announced the launch of OSV, a database of open source vulnerabilities built partially from vulnerabilities found through Google's OSS-Fuzz program. OSV has grown since then and now includes a widely adopted OpenSSF schema and a vulnerability scanner. In this blog post, we'll cover how these tools help maintainers track vulnerabilities from discovery to remediation, and how to use OSV together with other SBOM and VEX standards. Vulnerability Databases The lifecycle of a known vulnerability begins when it is discovered. To reach developers, the vulnerability needs to be added to a database. CVEs are the industry standard for describing vulnerabilities across all software, but there was a lack of an open source centric database. As a result, several independent vulnerability databases exist across different ecosystems. To address this, we announced the OSV Schema to unify open source vulnerability databases. The schema is machine readable, and is designed so dependencies can be easily matched to vulnerabilities using automation. The OSV Schema remains the only widely adopted schema that treats open source as a first class citizen. 
Since becoming a part of OpenSSF, the OSV Schema has seen adoption from services like GitHub, ecosystems such as Rust and Python, and Linux distributions such as Rocky Linux. Thanks to such wide community adoption of the OSV Schema, OSV.dev is able to provide a distributed vulnerability database and service that pulls from language specific authoritative sources. In total, the OSV.dev database now includes 43,302 vulnerabilities from 16 ecosystems as of March 2023. Users can check OSV for a comprehensive view of all known vulnerabilities in open source. Every vulnerability in OSV.dev contains package manager versions and git commit hashes, so open source users can easily determine if their packages are impacted because of the familiar style of versioning. Maintainers are also familiar with OSV's community driven and distributed collaboration on the development of OSV's database, tools, and schema. Matching The next step in managing vulnerabilities is to determine project dependencies and their associated vulnerabilities. Last December we released OSV-Scanner, a free, open source tool which scans software projects' lockfiles, SBOMs, or git repositories to identify vulnerabilities found in the | Tool Vulnerability | ★★★★ | ||
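
The matching step described above can also be tried against the OSV.dev service directly. The following Python sketch assumes the `POST https://api.osv.dev/v1/query` endpoint and its `package` / `version` request shape from the public OSV documentation; neither is spelled out in this excerpt, and the helper names are hypothetical.

```python
import json
import urllib.request

# Assumed query endpoint of the OSV.dev service.
OSV_QUERY_URL = "https://api.osv.dev/v1/query"


def build_query(name: str, ecosystem: str, version: str) -> dict:
    """Build the request body for one package version in one ecosystem."""
    return {"version": version, "package": {"name": name, "ecosystem": ecosystem}}


def query_osv(payload: dict, timeout: float = 10.0) -> dict:
    """POST the query and decode the JSON reply (network access needed)."""
    request = urllib.request.Request(
        OSV_QUERY_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.load(resp)


def vuln_ids(response: dict) -> list:
    """Extract vulnerability IDs; a reply without a "vulns" key means no matches."""
    return [vuln["id"] for vuln in response.get("vulns", [])]
```

With network access, `vuln_ids(query_osv(build_query("jinja2", "PyPI", "2.4.1")))` would list the IDs OSV.dev knows for that version. For whole projects, OSV-Scanner performs the same kind of matching over lockfiles and SBOMs.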

| 2023-03-08 11:59:13 | Thank you and goodbye to the Chrome Cleanup Tool (direct link) | Posted by Jasika Bawa, Chrome Security Team Starting in Chrome 111 we will begin to turn down the Chrome Cleanup Tool, an application distributed to Chrome users on Windows to help find and remove unwanted software (UwS). Origin story The Chrome Cleanup Tool was introduced in 2015 to help users recover from unexpected settings changes, and to detect and remove unwanted software. To date, it has performed more than 80 million cleanups, helping to pave the way for a cleaner, safer web. A changing landscape In recent years, several factors have led us to reevaluate the need for this application to keep Chrome users on Windows safe. First, the user perspective – Chrome user complaints about UwS have continued to fall over the years, averaging out to around 3% of total complaints in the past year. Commensurate with this, we have observed a steady decline in UwS findings on users' machines. For example, last month just 0.06% of Chrome Cleanup Tool scans run by users detected known UwS. Next, several positive changes in the platform ecosystem have contributed to a more proactive safety stance than a reactive one. For example, Google Safe Browsing as well as antivirus software both block file-based UwS more effectively now, which was originally the goal of the Chrome Cleanup Tool. Where file-based UwS migrated over to extensions, our substantial investments in the Chrome Web Store review process have helped catch malicious extensions that violate the Chrome Web Store's policies. Finally, we've observed changing trends in the malware space with techniques such as Cookie Theft on the rise – as such, we've doubled down on defenses against such malware via a variety of improvements including hardened authentication workflows and advanced heuristics for blocking phishing and social engineering emails, malware landing pages, and downloads. 
What to expect Starting in Chrome 111, users will no longer be able to request a Chrome Cleanup Tool scan through Safety Check or leverage the "Reset settings and cleanup" option offered in chrome://settings on Windows. Chrome will also remove the component that periodically scans Windows machines and prompts users for cleanup should it find anything suspicious. Even without the Chrome Cleanup Tool, users are automatically protected by Safe Browsing in Chrome. Users also have the option to turn on Enhanced protection by navigating to chrome://settings/security – this mode substantially increases protection from dangerous websites and downloads by sharing real-time data with Safe Browsing. While we'll miss the Chrome Cleanup Tool, we wanted to take this opportunity to acknowledge its role in combating UwS for the past 8 years. We'll continue to monitor user feedback and trends in the malware ecosystem, and when adversaries adapt their techniques again – which they will – we'll be at the ready. As always, please feel free to send us feedback or find us on Twitter @googlechrome. | Malware Tool | ★★★ | ||

| 2023-03-02 12:42:15 | Google Trust Services now offers TLS certificates for Google Domains customers (direct link) | Andy Warner, Google Trust Services, and Carl Krauss, Product Manager, Google Domains We're excited to announce changes that make getting Google Trust Services TLS certificates easier for Google Domains customers. With this integration, all Google Domains customers will be able to acquire public certificates for their websites at no additional cost, whether the site runs on a Google service or uses another provider. Additionally, Google Domains is now making an API available to allow for DNS-01 challenges with Google Domains DNS servers to issue and renew certificates automatically. Like the existing Google Cloud integration, Automatic Certificate Management Environment (ACME) protocol is used to enable seamless automatic lifecycle management of TLS certificates. These certificates are issued by the same Certificate Authority (CA) Google uses for its own sites, so they are widely supported across the entire spectrum of devices used to access your services. How do I use it? Using ACME ensures your certificates are renewed automatically and many hosting services already support ACME. If you're running your own web servers / services, there are ACME clients that integrate easily with common servers. To use this feature, you will need an API key called an External Account Binding key. This enables your certificate requests to be associated with your Google Domains account. You can get an API key by visiting Google Domains and navigating to the Security page for your domain. There you'll see a section for Google Trust Services where you can get your EAB Key. |

★★ | |||
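
To show what an ACME client does with the External Account Binding key mentioned above, here is a minimal Python sketch of the binding object defined in RFC 8555, section 7.3.4: a JWS over the account's public key, authenticated with an HMAC keyed by the EAB secret. This is an illustration of the protocol mechanism, not Google's implementation. The new-account URL and the JWK used in the usage note are hypothetical placeholders, and in practice an ACME client builds this object for you.

```python
import base64
import hashlib
import hmac
import json


def b64url(data: bytes) -> str:
    """Base64url-encode without padding, as JWS requires."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(text: str) -> bytes:
    """Decode unpadded base64url by restoring the stripped padding."""
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def eab_jws(kid: str, hmac_key_b64url: str, account_jwk: dict, new_account_url: str) -> dict:
    """Build the externalAccountBinding JWS for an ACME newAccount request.

    kid and hmac_key_b64url are the two halves of the EAB credential issued
    by the CA; account_jwk is the public key of the ACME account.
    """
    # Protected header: MAC algorithm, the CA-issued key ID, and the same
    # URL as the outer newAccount request. No nonce is included here.
    header = {"alg": "HS256", "kid": kid, "url": new_account_url}
    protected = b64url(json.dumps(header, separators=(",", ":")).encode("utf-8"))
    # Payload: the account public key as a JWK.
    payload = b64url(
        json.dumps(account_jwk, separators=(",", ":"), sort_keys=True).encode("utf-8")
    )
    # HMAC-SHA256 over "protected.payload", keyed with the decoded EAB secret.
    mac = hmac.new(
        b64url_decode(hmac_key_b64url),
        f"{protected}.{payload}".encode("ascii"),
        hashlib.sha256,
    ).digest()
    return {"protected": protected, "payload": payload, "signature": b64url(mac)}
```

For example, `eab_jws("kid-1", key, {"kty": "EC", "crv": "P-256", "x": "...", "y": "..."}, "https://acme.example/new-account")` returns the object a client would place in the `externalAccountBinding` field of its account request. The binding is what lets the CA tie the new ACME account to an existing customer account.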

| 2023-03-01 11:59:44 | 8 ways to secure Chrome browser for Google Workspace users (direct link) | Posted by Kiran Nair, Product Manager, Chrome Browser Your journey towards keeping your Google Workspace users and data safe starts with bringing your Chrome browsers under Cloud Management at no additional cost. Chrome Browser Cloud Management is a single destination for applying Chrome Browser policies and security controls across Windows, Mac, Linux, iOS and Android. You also get deep visibility into your browser fleet, including which browsers are out of date and which extensions your users are using, along with insight into potential security blindspots in your enterprise. Managing Chrome from the cloud allows Google Workspace admins to enforce enterprise protections and policies across the whole browser on fully managed devices, without requiring a user to sign into Chrome to have policies enforced. You can also enforce policies that apply when your managed users sign in to Chrome browser on any Windows, Mac, or Linux computer (via Chrome Browser user-level management), not just on corporate managed devices. This enables you to keep your corporate data and users safe, whether they are accessing work resources from fully managed, personal, or unmanaged devices used by your vendors. Getting started is easy. If your organization hasn't already, check out this guide for steps on how to enroll your devices. 2. Enforce built-in protections against Phishing, Ransomware & Malware Chrome uses Google's Safe Browsing technology to help protect billions of devices every day by showing warnings to users when they attempt to navigate to dangerous sites or download dangerous files. Safe Browsing is enabled by default for all users when they download Chrome. As an administrator, you can prevent your users from disabling Safe Browsing by enforcing the SafeBrowsingProtectionLevel policy. Over the past few years, we've seen threats on the web becoming increasingly sophisticated. Turning on Enhanced Safe Browsing will substantially increase protection |

Ransomware Malware Tool Threat Guideline Cloud | ★★★ | ||
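
As a rough illustration of enforcing the SafeBrowsingProtectionLevel policy named above, the Python sketch below renders a managed-policy JSON document. The value mapping (0 for off, 1 for standard, 2 for enhanced) and the Linux managed-policy directory mentioned in the usage note are assumptions taken from Chrome Enterprise policy documentation, not from this post; the helper names are hypothetical. Cloud-managed fleets would set the same policy in the Admin console instead of writing a file.

```python
import json

# Assumed value mapping for the SafeBrowsingProtectionLevel policy.
SAFE_BROWSING_LEVELS = {"off": 0, "standard": 1, "enhanced": 2}


def managed_policy(level: str = "enhanced") -> dict:
    """Return a policy document that pins the Safe Browsing level.

    Raises KeyError for an unknown level name, so a typo cannot silently
    produce a weaker policy.
    """
    return {"SafeBrowsingProtectionLevel": SAFE_BROWSING_LEVELS[level]}


def render_policy(level: str = "enhanced") -> str:
    """Serialize the policy as JSON text suitable for a managed-policy file."""
    return json.dumps(managed_policy(level), indent=2, sort_keys=True)
```

On a Linux machine, the text from `render_policy()` could be saved as a `.json` file under `/etc/opt/chrome/policies/managed/` (an assumed location for Google Chrome); once a policy is applied as mandatory, users can no longer change the setting themselves.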