What's new around the internet

| Src | Date (GMT) | Title | Description | Tags | Stories | Notes |

| 2024-05-15 12:59:21 | I/O 2024: What's new in Android security and privacy (direct link) |

Posted by Dave Kleidermacher, VP Engineering, Android Security and Privacy

Our commitment to user safety is a top priority for Android. We've been consistently working to stay ahead of the world's scammers, fraudsters and bad actors. And as their tactics evolve in sophistication and scale, we continually adapt and enhance our advanced security features and AI-powered protections to help keep Android users safe.

In addition to our new suite of advanced theft protection features to help keep your device and data safe in the case of theft, we're also focusing increasingly on providing additional protections against mobile financial fraud and scams.

Today, we're announcing more new fraud and scam protection features coming in Android 15 and Google Play services updates later this year to help better protect users around the world. We're also sharing new tools and policies to help developers build safer apps and keep their users safe.

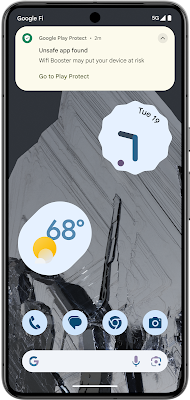

Google Play Protect live threat detection

Google Play Protect now scans 200 billion Android apps daily, helping keep more than 3 billion users safe from malware. We are expanding Play Protect's on-device AI capabilities with Google Play Protect live threat detection to improve fraud and abuse detection against apps that try to cloak their actions.

With live threat detection, Google Play Protect's on-device AI will analyze additional behavioral signals related to the use of sensitive permissions and interactions with other apps and services. If suspicious behavior is discovered, Google Play Protect can send the app to Google for additional review and then warn users or disable the app if malicious behavior is confirmed. The detection of suspicious behavior is done on device in a privacy-preserving way through Private Compute Core, which allows us to protect users without collecting data. Google Pixel, Honor, Lenovo, Nothing, OnePlus, Oppo, Sharp, Transsion, and other manufacturers are deploying live threat detection later this year.

Stronger protections against fraud and scams

We're also bringing additional protections to fight fraud and scams in Android 15 with two key enhancements to safeguard your information and privacy from bad apps:

Protecting One-time Passwords from Malware: With the exception of a few types of apps, such as wearable companion apps, one-time passwords are now hidden from notifications, closing a common attack vector for fraud and spyware.

Expanded Restricted Settings: To help protect more sensitive permissions that are commonly abused by fraudsters, we're expanding Android 13's restricted settings, which require additional user approval to enable permissions when installing an app from an Internet-sideloading source (web browsers, messaging apps or file managers).

We are continuing to develop new, AI-powered protections, |

Malware Tool Threat Mobile Cloud | |||

| 2024-04-30 12:14:48 | Detecting browser data theft using Windows Event Logs (direct link) |

Posted by Will Harris, Chrome Security Team

Chrome's sandboxed process model defends well against malicious web content, but there are limits to how well the application can protect itself from malware already on the computer. Cookies and other credentials remain a high-value target for attackers, and we are trying to tackle this ongoing threat in multiple ways, including working on web standards like DBSC that will help disrupt the cookie theft industry, since exfiltrating these cookies will no longer have any value.

Where it is not possible to avoid the theft of credentials and cookies by malware, the next best thing is making the attack more observable by antivirus, endpoint detection agents, or enterprise administrators with basic log analysis tools.

This blog describes one set of signals for use by system administrators or endpoint detection agents that should reliably flag any access to the browser's protected data from another application on the system. By increasing the likelihood of an attack being detected, this changes the calculus for attackers who might have a strong desire to remain stealthy, and might cause them to rethink carrying out these types of attacks against our users.

Background

Chromium-based browsers on Windows use the DPAPI (Data Protection API) to secure local secrets such as cookies, passwords, etc. DPAPI protection is based on a key derived from the user's login credential and is designed to protect against unauthorized access to secrets from other users on the system, or when the system is powered off. Because the DPAPI secret is bound to the logged-in user, it cannot protect against local malware attacks: malware executing as the user or at a higher privilege level can just call the same APIs as the browser to obtain the DPAPI secret.

Since 2013, Chromium has been applying the CRYPTPROTECT_AUDIT flag to DPAPI calls to request that an audit log be generated when decryption occurs, as well as tagging the data as being owned by the browser. Because all of Chromium's encrypted data storage is backed by a DPAPI-secured key, any application that wishes to decrypt this data, including malware, should always reliably generate a clearly observable event log, which can be used to detect these types of attacks.

There are three main steps involved in taking advantage of this log:

Enable logging on the computer running Google Chrome, or any other Chromium-based browser.

Export the event logs to your backend system.

Create detection logic to detect theft.

This blog will also show how the logging works in practice by testing it against a Python password stealer.
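The third step, detection logic, might look roughly like the Python sketch below. It is only an illustration under stated assumptions, not the logic the post goes on to describe: the `DecryptionEvent` record, its field names, and the `TRUSTED_EXECUTABLES` allow-list are hypothetical stand-ins for whatever your log pipeline actually exports.

```python
import ntpath
from dataclasses import dataclass


@dataclass(frozen=True)
class DecryptionEvent:
    """Hypothetical, simplified record of one audited DPAPI decryption."""
    data_description: str  # label the owning browser attached to the data
    process_path: str      # executable that requested the decryption


# Assumed allow-list: data label -> executables expected to decrypt it.
TRUSTED_EXECUTABLES = {
    "Google Chrome": {"chrome.exe"},
}


def is_suspicious(event: DecryptionEvent) -> bool:
    """Flag decryption of browser-owned data by an unexpected process."""
    trusted = TRUSTED_EXECUTABLES.get(event.data_description)
    if trusted is None:
        return False  # not data this sketch is monitoring
    # ntpath parses Windows-style paths regardless of the host OS.
    exe_name = ntpath.basename(event.process_path).lower()
    return exe_name not in trusted


# Invented sample events for illustration only.
events = [
    DecryptionEvent("Google Chrome", r"C:\Program Files\Google\Chrome\Application\chrome.exe"),
    DecryptionEvent("Google Chrome", r"C:\Python3\python.exe"),
    DecryptionEvent("Some Other App", r"C:\Tools\other.exe"),
]
alerts = [e for e in events if is_suspicious(e)]
```

Matching on the executable name alone is a deliberate simplification; a real rule would likely also consider the full path or signer, since malware can choose its own file name.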
Step 1: Enable logging on the system

DPAPI events are logged in two places in the system. First, there is the 4693 event, which can be logged to the Security Log. This event can be enabled by turning on "Audit DPAPI Activity", and the steps to do this are d | Malware Tool Threat | ★★ | ||

| 2024-04-29 11:59:47 | How we fought bad apps and bad actors in 2023 (direct link) |

Posted by Steve Kafka and Khawaja Shams (Android Security and Privacy Team), and Mohet Saxena (Play Trust and Safety)

A safe and trusted Google Play experience is our top priority. We leverage our SAFE (see below) principles to provide the framework to create that experience for both users and developers. Here's what these principles mean in practice:

(S)afeguard our Users. Help them discover quality apps that they can trust.

(A)dvocate for Developer Protection. Build platform safeguards to enable developers to focus on growth.

(F)oster Responsible Innovation. Thoughtfully unlock value for all without compromising on user safety.

(E)volve Platform Defenses. Stay ahead of emerging threats by evolving our policies, tools and technology.

With those principles in mind, we've made recent improvements and introduced new measures to continue to keep Google Play's users safe, even as the threat landscape continues to evolve. In 2023, we prevented 2.28 million policy-violating apps from being published on Google Play, in part thanks to our investment in new and improved security features, policy updates, and advanced machine learning and app review processes. We have also strengthened our developer onboarding and review processes, requiring more identity information when developers first establish their Play accounts. Together with investments in our review tooling and processes, we identified bad actors and fraud rings more effectively and banned 333K bad accounts from Play for violations like confirmed malware and repeated severe policy violations.

Additionally, almost 200K app submissions were rejected or remediated to ensure proper use of sensitive permissions such as background location or SMS access. To help safeguard user privacy at scale, we partnered with SDK providers to limit sensitive data access and sharing, enhancing the privacy posture for over 31 SDKs impacting 790K+ apps.

We also significantly expanded the Google Play SDK Index, which now covers the SDKs used in almost 6 million apps across the Android ecosystem. This valuable resource helps developers make better SDK choices, boosts app quality and minimizes integration risks.

Protecting the Android Ecosystem

Building on our success with the App Defense Alliance (ADA), we partnered with Microsoft and Meta as steering committee members in the newly restructured ADA under the Joint Development Foundation, part of the Linux Foundation family. The Alliance will support industry-wide adoption of app security best practices and guidelines, as well as countermeasures against emerging security risks.

Additionally, we announced new Play Store transparency labeling to highlight VPN apps that have completed an independent security review through App Defense Alliance's Mobile App Security Assessment (MASA). When a user searches for VPN apps, they will now see a banner at the top of Google Play that educates them about the “Independent security review” badge in the Data safety section. This helps users see at-a-glance that a developer has prioritized security and privacy best practices and is committed to user safety. | Malware Tool Threat Mobile | ★★★ | ||

| 2024-04-26 18:33:10 | Accelerating incident response using generative AI (direct link) |

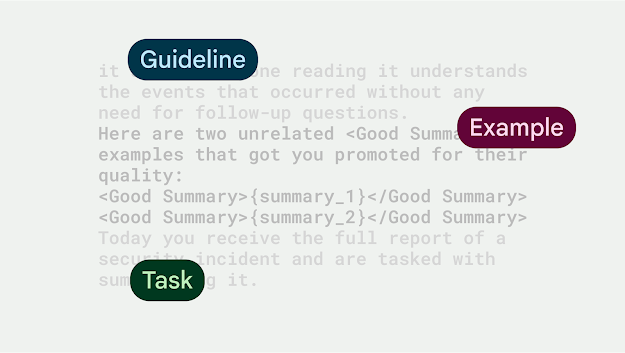

Lambert Rosique and Jan Keller, Security Workflow Automation, and Diana Kramer, Alexandra Bowen and Andrew Cho, Privacy and Security Incident Response

Introduction

As security professionals, we're constantly looking for ways to reduce risk and improve our workflow's efficiency. We've made great strides in using AI to identify malicious content, block threats, and discover and fix vulnerabilities. We also published the Secure AI Framework (SAIF), a conceptual framework for secure AI systems to ensure we are deploying AI in a responsible manner. Today we are highlighting another way we use generative AI to help the defenders gain the advantage: leveraging LLMs (Large Language Models) to speed up our security and privacy incident workflows. |

Tool Threat Industrial Cloud | ★★★ | ||

| 2024-04-23 13:15:47 | Uncovering potential threats to your web application by leveraging security reports (direct link) |

Posted by Yoshi Yamaguchi, Santiago Díaz, Maud Nalpas, Eiji Kitamura, DevRel team

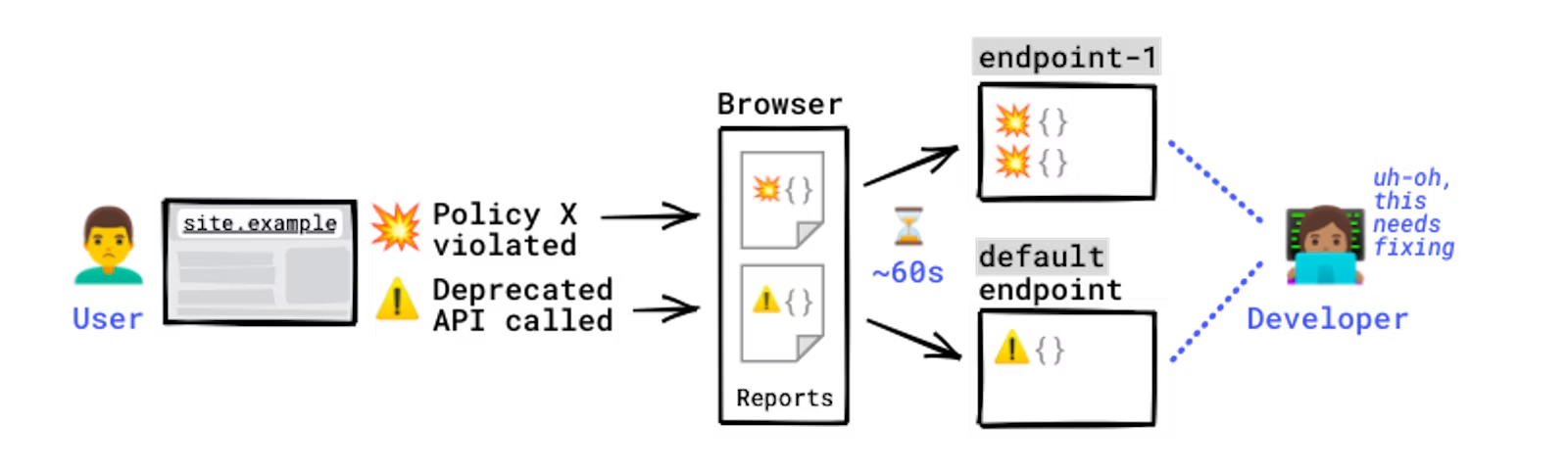

The Reporting API is an emerging web standard that provides a generic reporting mechanism for issues occurring on the browsers visiting your production website. The reports you receive detail issues such as security violations or soon-to-be-deprecated APIs, from users' browsers from all over the world.

Collecting reports is often as simple as specifying an endpoint URL in the HTTP header; the browser will automatically start forwarding reports covering the issues you are interested in to those endpoints. However, processing and analyzing these reports is not that simple. For example, you may receive a massive number of reports on your endpoint, and it is possible that not all of them will be helpful in identifying the underlying problem. In such circumstances, distilling and fixing issues can be quite a challenge.
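One way to tame that volume is to group reports by what triggered them and look at the most frequent groups first. The Python sketch below is a minimal illustration, not the Google pipeline the post goes on to introduce: the sample reports are invented, and treating reports with the same `type` plus one or two body fields as "the same issue" is an assumption made for the example.

```python
from collections import Counter

# Invented sample reports, shaped like Reporting API entries.
reports = [
    {"type": "csp-violation", "url": "https://example.com/a",
     "body": {"blockedURL": "https://cdn.example/x.js",
              "effectiveDirective": "script-src-elem"}},
    {"type": "csp-violation", "url": "https://example.com/b",
     "body": {"blockedURL": "https://cdn.example/x.js",
              "effectiveDirective": "script-src-elem"}},
    {"type": "csp-violation", "url": "https://example.com/c",
     "body": {"blockedURL": "https://cdn.example/x.js",
              "effectiveDirective": "script-src-elem"}},
    {"type": "csp-violation", "url": "https://example.com/a",
     "body": {"blockedURL": "inline",
              "effectiveDirective": "style-src-attr"}},
    {"type": "deprecation", "url": "https://example.com/a",
     "body": {"id": "ExampleDeprecatedFeature"}},
]


def group_key(report):
    """Reduce a report to the fields that identify its likely root cause."""
    body = report.get("body", {})
    if report["type"] == "csp-violation":
        return (report["type"], body.get("effectiveDirective"), body.get("blockedURL"))
    if report["type"] == "deprecation":
        return (report["type"], body.get("id"), None)
    return (report["type"], None, None)


counts = Counter(group_key(r) for r in reports)
most_frequent_key, most_frequent_count = counts.most_common(1)[0]
```

Here five raw reports collapse into three distinct issues, with the blocked script accounting for most of the volume.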

In this blog post, we'll share how the Google security team uses the Reporting API to detect potential issues and identify the actual problems causing them. We'll also introduce an open source solution, so you can easily replicate Google's approach to processing reports and acting on them.

How does the Reporting API work?

Some errors only occur in production, on users' browsers to which you have no access. You won't see these errors locally or during development because there could be unexpected conditions real users, real networks, and real devices are in. With the Reporting API, you directly leverage the browser to monitor these errors: the browser catches these errors for you, generates an error report, and sends this report to an endpoint you've specified.

How reports are generated and sent.

Errors you can monitor with the Reporting API include:

Security violations: Content-Security-Policy (CSP), Cross-Origin-Opener-Policy (COOP), Cross-Origin-Embedder-Policy (COEP)

Deprecated and soon-to-be-deprecated API calls

Browser interventions

Permissions policy

And more

For a full list of error types you can monitor, see use cases and report types.

The Reporting API is activated and configured using HTTP response headers: you need to declare the endpoint(s) you want the browser to send reports to, and which error types you want to monitor. The browser then sends reports to your endpoint in POST requests whose payload is a list of reports.
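As a rough sketch of that flow in Python: the first function builds response headers that declare an endpoint and ask for CSP violations to be reported to it, and the second parses a POST body shaped like what the browser sends. The endpoint name `main-endpoint`, the URL `https://reports.example/main`, the example policy, and the sample payload are all placeholders chosen for illustration.

```python
import json

ENDPOINT_NAME = "main-endpoint"                # placeholder name
ENDPOINT_URL = "https://reports.example/main"  # placeholder URL


def reporting_headers():
    """Response headers declaring an endpoint and a policy that reports to it."""
    return {
        "Reporting-Endpoints": f'{ENDPOINT_NAME}="{ENDPOINT_URL}"',
        "Content-Security-Policy": f"script-src 'self'; report-to {ENDPOINT_NAME}",
    }


def parse_report_batch(raw_body: bytes):
    """Parse one POST body (a JSON list of reports) into (type, url) pairs."""
    reports = json.loads(raw_body)
    if not isinstance(reports, list):
        raise ValueError("expected a JSON list of reports")
    return [(r.get("type"), r.get("url")) for r in reports]


# Hand-written payload for illustration only.
sample_body = json.dumps([
    {"type": "csp-violation", "age": 10, "url": "https://example.com/page",
     "user_agent": "ExampleBrowser/1.0",
     "body": {"blockedURL": "https://cdn.example/x.js"}},
    {"type": "deprecation", "age": 25, "url": "https://example.com/other",
     "user_agent": "ExampleBrowser/1.0",
     "body": {"id": "ExampleDeprecatedFeature"}},
]).encode("utf-8")

headers = reporting_headers()
summary = parse_report_batch(sample_body)
```

A real endpoint would also need to accept the `application/reports+json` content type and tolerate fields it does not recognize.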

Example setup: |

Malware Tool Vulnerability Mobile Cloud | ★★★ | ||

| 2024-04-18 13:40:42 | Prevent Generative AI Data Leaks with Chrome Enterprise DLP (direct link) |

Posted by Kaleigh Rosenblat, Chrome Enterprise Senior Staff Engineer, Security Lead

Generative AI has emerged as a powerful and popular tool to automate content creation and simple tasks. From customized content creation to source code generation, it can increase both our productivity and our creative potential.

Businesses want to leverage the power of LLMs, like Gemini, but many may have security concerns and want more control over how employees make use of these new tools. For example, companies may want to ensure that various forms of sensitive data, such as personally identifiable information (PII), financial records and internal intellectual property, are not shared publicly on generative AI platforms. Security leaders face the challenge of finding the right balance: enabling employees to leverage AI to boost efficiency, while also safeguarding corporate data.

In this blog post, we'll explore reporting and enforcement policies that enterprise security teams can implement within Chrome Enterprise Premium for data loss prevention (DLP).

1. View login events to understand usage of generative AI services within the organization. With Chrome Enterprise's Reporting Connector, security and IT teams can see when a user successfully signs into a specific domain, including generative AI websites. Security operations teams can further leverage this telemetry to detect anomalies and threats by streaming the data into Chronicle or other third-party SIEMs at no additional cost.

2. Enable URL filtering to warn users about sensitive data policies and let them decide whether or not they want to navigate to the URL, or to block users from navigating to certain groups of sites. For example, with Chrome Enterprise URL filtering, IT admins can create rules that warn developers not to submit source code to specific generative AI apps or tools, or block them.

3. Warn, block or monitor sensitive data actions within generative AI websites with dynamic content-based rules for actions like paste, file uploads/downloads, and print. Chrome Enterprise DLP rules give IT admins granular control over browser activities, such as entering financial information in gen AI websites. Admins can customize DLP rules to restr | Tool Legislation | ★★★ | ||

| 2024-03-28 18:16:18 | Address Sanitizer for Bare-metal Firmware (direct link) |

Posted by Eugene Rodionov and Ivan Lozano, Android Team

With steady improvements to Android userspace and kernel security, we have noticed an increasing interest from security researchers directed towards lower level firmware. This area has traditionally received less scrutiny, but is critical to device security. We have previously discussed how we have been prioritizing firmware security, and how to apply mitigations in a firmware environment to mitigate unknown vulnerabilities.

In this post we will show how the Kernel Address Sanitizer (KASan) can be used to proactively discover vulnerabilities earlier in the development lifecycle. Despite the narrow application implied by its name, KASan is applicable to a wide range of firmware targets. Using KASan-enabled builds during testing and/or fuzzing can help catch memory corruption vulnerabilities and stability issues before they land on user devices. We've already used KASan in some firmware targets to proactively find and fix 40+ memory safety bugs and vulnerabilities, including some of critical severity.

Along with this blog post we are releasing a small project which demonstrates an implementation of KASan for bare-metal targets leveraging the QEMU system emulator. Readers can refer to this implementation for technical details while following the blog post.

Address Sanitizer (ASan) overview

Address sanitizer is a compiler-based instrumentation tool used to identify invalid memory access operations during runtime. It is capable of detecting the following classes of temporal and spatial memory safety bugs:

out-of-bounds memory access

use-after-free

double/invalid free

use-after-return

ASan relies on the compiler to instrument code with dynamic checks for virtual addresses used in load/store operations. A separate runtime library defines the instrumentation hooks for the heap memory and error reporting.
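To make the instrumentation idea concrete, here is a toy Python model. It is not how ASan is implemented; it only mimics the division of labor described above. The hypothetical `ToyHeap` class tracks which addresses are currently valid, its `malloc` and `free` methods play the role of the runtime's heap hooks, and `check` stands in for the test the compiler would insert before each load or store.

```python
class InvalidAccess(Exception):
    """Raised where a real sanitizer would print an error report."""


class ToyHeap:
    def __init__(self):
        self._next_addr = 0x1000
        self._live = {}      # base address -> size of each live allocation
        self._freed = set()  # base addresses that have already been freed

    def malloc(self, size):
        base = self._next_addr
        # Leave a gap after each block so small overflows land on invalid memory.
        self._next_addr += size + 16
        self._live[base] = size
        return base

    def free(self, base):
        if base in self._freed:
            raise InvalidAccess("double free")
        if base not in self._live:
            raise InvalidAccess("invalid free")
        del self._live[base]
        self._freed.add(base)

    def check(self, addr):
        """Stand-in for the check inserted before each load/store."""
        for base, size in self._live.items():
            if base <= addr < base + size:
                return
        raise InvalidAccess(f"invalid access at {addr:#x}")


def expect_error(action):
    """Run action and return the error message, or None if it succeeded."""
    try:
        action()
    except InvalidAccess as exc:
        return str(exc)
    return None


heap = ToyHeap()
buf = heap.malloc(8)
in_bounds = expect_error(lambda: heap.check(buf + 7))   # last valid byte
out_of_bounds = expect_error(lambda: heap.check(buf + 8))
heap.free(buf)
use_after_free = expect_error(lambda: heap.check(buf))
double_free = expect_error(lambda: heap.free(buf))
```

The model covers three of the listed bug classes (out-of-bounds, use-after-free, double/invalid free); use-after-return would additionally require tracking stack frames, which this sketch leaves out.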
For most user-space targets (such as aarch64-linux-android) ASan can be enabled as simply as using the -fsanitize=address compiler option for Clang due to existing support of this target both in the toolchain and in the libclang_rt runtime. However, the situation is rather different for bare-metal code which is frequently built with the none system targets, such as arm-none-eabi. Unlike traditional user-space programs, bare-metal code running inside an embedded system often doesn't have a common runtime implementation. As such, LLVM can't provide a default runtime for these environments.

To provide custom implementations for the necessary runtime routines, the Clang toolchain exposes an interface for address sanitization through the -fsanitize=kernel-address compiler option. The KASan runtime routines implemented in the Linux kernel serve as a great example of how to define a KASan runtime for targets which aren't supported by default with -fsanitize=address. We'll demonstrate how to use the version of address sanitizer originally built for the kernel on other bare-metal targets.

KASan 101

Let's take a look at the KASan major building blocks from a high-level perspective (a thorough explanation of how ASan works under-the-hood is provided in this whitepaper). The main idea behind KASan is that every memory access operation, such as load/store instructions and memory copy functions (for example, memm | Tool Vulnerability Mobile Technical | ★★ | ||

| 2024-02-05 11:59:31 | Improving Interoperability Between Rust and C++ (direct link) |

Posted by Lars Bergstrom, Director, Android Platform Tools & Libraries and Chair of the Rust Foundation Board

Back in 2021, we announced that Google was joining the Rust Foundation. At the time, Rust was already in wide use across Android and other Google products. Our announcement emphasized our commitment to improving the security reviews of Rust code and its interoperability with C++ code. Rust is one of the strongest tools we have to address memory safety security issues. Since that announcement, industry leaders and government agencies have echoed that sentiment.

We are delighted to announce that Google has provided a grant of $1 million to the Rust Foundation to support efforts that will improve the ability of Rust code to interoperate with existing legacy C++ codebases. We are also furthering our existing commitment to the open-source Rust community by aggregating and publishing audits for the Rust crates that we use in open-source Google projects. These contributions, along with our previous interoperability contributions, have us excited about the future of Rust.

"Based on historical vulnerability density statistics, Rust has proactively prevented hundreds of vulnerabilities from impacting the Android ecosystem. This investment aims to expand the adoption of Rust across various components of the platform." - Dave Kleidermacher, Vice President of Engineering, Android Security & Privacy

While Google has seen the most significant growth in the use of Rust in Android, we are continuing to grow its use across more applications, including clients and server hardware.

"While Rust may not be suitable for all product applications, prioritizing seamless interoperability with C++ will accelerate wider community adoption, thereby aligning with the industry goals of improving memory safety." - Royal Hansen, Google Vice President of Safety & Security

The Rust tooling and ecosystem already support interoperability with Android, and with continued investment in tools like cxx, autocxx, bindgen, cbindgen, diplomat, and crubit, we are seeing regular improvements in the state of Rust interoperability with C++. As these improvements have continued, we have seen a reduction in the barriers to adoption and accelerated adoption of Rust. While that progress across the many tools continues, it is often only developed incrementally to support the particular needs of a given project or company.

In order to accelerate both the adoption of Rust | Tool Vulnerability Mobile | ★★★ | ||

| 2024-01-11 14:18:14 | MiraclePtr: protecting users from use-after-free vulnerabilities on more platforms (direct link) |

Posted by Keishi Hattori, Sergei Glazunov, Bartek Nowierski on behalf of the MiraclePtr team

Welcome back to our latest update on MiraclePtr, our project to protect against use-after-free vulnerabilities in Google Chrome. If you need a refresher, you can read our previous blog post detailing MiraclePtr and its objectives.

More platforms

We are thrilled to announce that since our last update, we have successfully enabled MiraclePtr for more platforms and processes:

In June 2022, we enabled MiraclePtr for the browser process on Windows and Android.

In September 2022, we expanded its coverage to include all processes except renderer processes.

In June 2023, we enabled MiraclePtr for ChromeOS, macOS, and Linux.

Furthermore, we have changed security guidelines to downgrade MiraclePtr-protected issues by one severity level!

Evaluating Security Impact

First let's focus on its security impact. Our analysis is based on two primary information sources: incoming vulnerability reports and crash reports from user devices. Let's take a closer look at each of these sources and how they inform our understanding of MiraclePtr's effectiveness.

Bug reports

Chrome vulnerability reports come from various sources, such as:

Chrome Vulnerability Reward Program participants,

our fuzzing infrastructure,

internal and external teams investigating security incidents.

For the purposes of this analysis, we focus on vulnerabilities that affect platforms where MiraclePtr was enabled at the time the issues were reported. We also exclude bugs that occur inside a sandboxed renderer process. Since the initial launch of MiraclePtr in 2022, we have received 168 use-after-free reports matching our criteria.

What does the data tell us? MiraclePtr effectively mitigated 57% of these use-after-free vulnerabilities in privileged processes, exceeding our initial estimate of 50%. Reaching this level of effectiveness, however, required additional work. For instance, we not only rewrote class fields to use MiraclePtr, as discussed in the previous post, but also added MiraclePtr support for bound function arguments, such as Unretained pointers. These pointers have been a significant source of use-after-frees in Chrome, and the additional protection allowed us to mitigate 39 more issues.

Moreover, these vulnerability reports enable us to pinpoint areas needing improvement. We're actively working on adding support for select third-party libraries that have been a source of use-after-free bugs, as well as developing a more advanced rewriter tool that can handle transformations like converting std::vector<T*> into std::vector<raw_ptr<T>>. We've also made sever |

Tool Vulnerability Threat Mobile | ★★★ | ||

| 2023-12-12 12:00:09 | Hardening cellular basebands in Android (direct link) |

Posted by Ivan Lozano and Roger Piqueras Jover

Android's defense-in-depth strategy applies not only to the Android OS running on the Application Processor (AP) but also the firmware that runs on devices. We particularly prioritize hardening the cellular baseband given its unique combination of running in an elevated privilege and parsing untrusted inputs that are remotely delivered into the device.

This post covers how to use two high-value sanitizers which can prevent specific classes of vulnerabilities found within the baseband. They are architecture agnostic, suitable for bare-metal deployment, and should be enabled in existing C/C++ code bases to mitigate unknown vulnerabilities. Beyond security, addressing the issues uncovered by these sanitizers improves code health and overall stability, reducing resources spent addressing bugs in the future.

An increasingly popular attack surface

As we outlined previously, security research focused on the baseband has highlighted a consistent lack of exploit mitigations in firmware. Baseband Remote Code Execution (RCE) exploits have their own categorization in well-known third-party marketplaces with a relatively low payout. This suggests baseband bugs may potentially be abundant and/or not too complex to find and exploit, and their prominent inclusion in the marketplace demonstrates that they are useful.

Baseband security and exploitation has been a recurring theme in security conferences for the last decade. Researchers have also made a dent in this area in well-known exploitation contests. Most recently, this area has become prominent enough that it is common to find practical baseband exploitation trainings in top security conferences.

Acknowledging this trend, combined with the severity and apparent abundance of these vulnerabilities, last year we introduced updates to the severity guidelines of Android's Vulnerability Rewards Program (VRP). For example, we consider vulnerabilities allowing Remote Code Execution (RCE) in the cellular baseband to be of CRITICAL severity.

Mitigating Vulnerability Root Causes with Sanitizers

Common classes of vulnerabilities can be mitigated through the use of sanitizers provided by Clang-based toolchains. These sanitizers insert runtime checks against common classes of vulnerabilities. GCC-based toolchains may also provide some level of support for these flags, but will not be considered further in this post. We encourage you to check your toolchain's documentation.

Two sanitizers included in Undefine | Tool Vulnerability Threat Mobile Prediction Conference | ★★★ | ||

| 2023-11-02 12:00:24 | More ways for users to identify independently security tested apps on Google Play (direct link) |

Posted by Nataliya Stanetsky, Android Security and Privacy Team

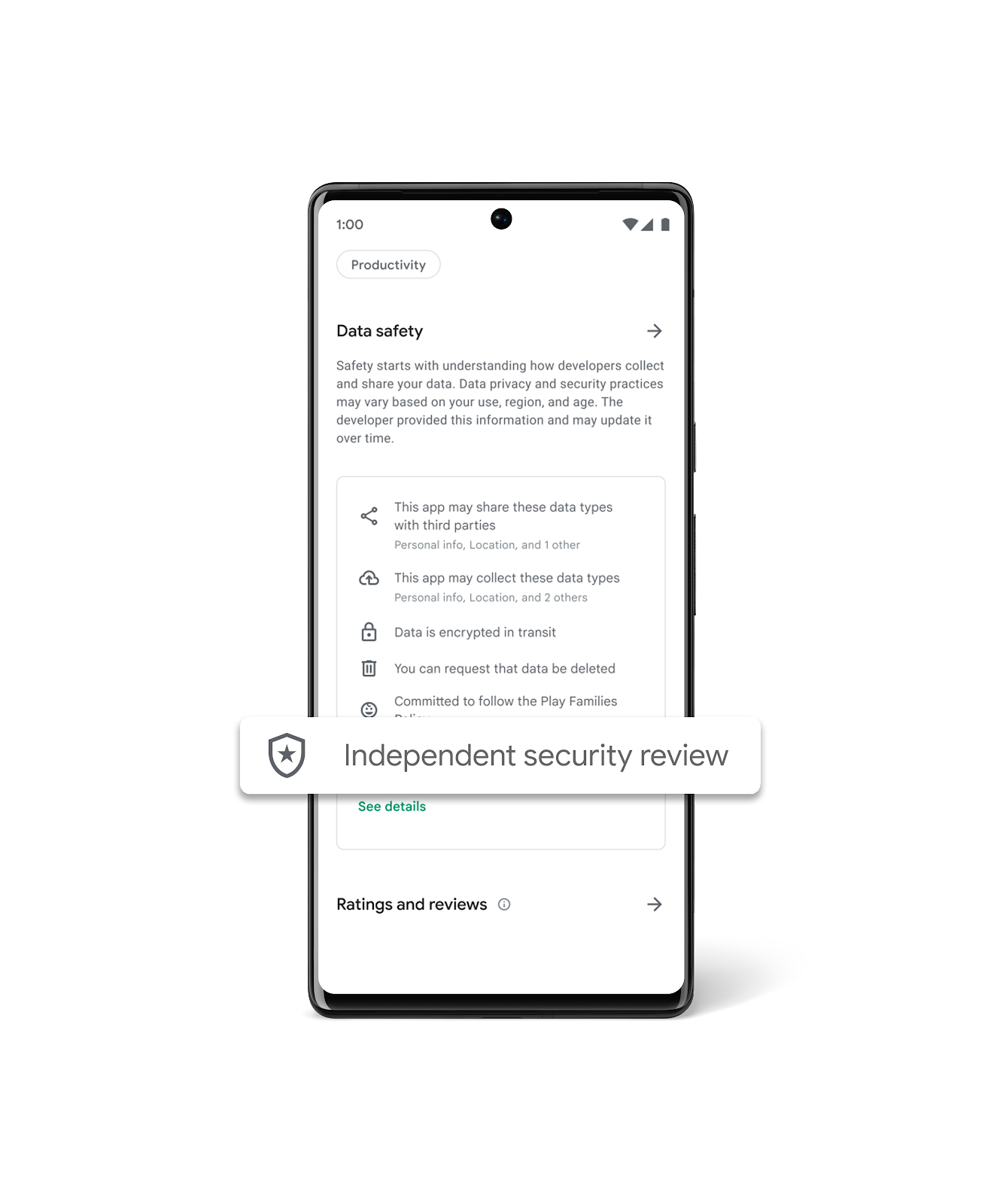

Keeping Google Play safe for users and developers remains a top priority for Google. As users increasingly prioritize their digital privacy and security, we continue to invest in our Data Safety section and transparency labeling efforts to help users make more informed choices about the apps they use.

Research shows that transparent security labeling plays a crucial role in consumer risk perception, building trust, and influencing product purchasing decisions. We believe the same principles apply for labeling and badging in the Google Play store. The transparency of an app's data security and privacy plays a key role in a user's decision to download, trust, and use an app.

Highlighting Independently Security Tested VPN Apps

Last year, App Defense Alliance (ADA) introduced MASA (Mobile App Security Assessment), which allows developers to have their apps independently validated against a global security standard. This signals to users that an independent third party has validated that the developers designed their apps to meet these industry mobile security and privacy minimum best practices and the developers are going the extra mile to identify and mitigate vulnerabilities. This, in turn, makes it harder for attackers to reach users' devices and improves app quality across the ecosystem. Upon completion of the successful validation, Google Play gives developers the option to declare an “Independent security review” badge in its Data Safety section, as shown in the image below. While certification to baseline security standards does not imply that a product is free of vulnerabilities, the badge associated with these validated apps helps users see at a glance that a developer has prioritized security and privacy practices and committed to user safety.

To help give users a simplified view of which apps have undergone an independent security validation, we're introducing a new Google Play store banner for specific app types, starting with VPN apps. We've launched this banner beginning with VPN apps due to the sensitive and significant amount of user data these apps handle. When a user searches for VPN apps, they will now see a banner at the top of Google Play that educates them about the “Independent security review” badge in the Data Safety section. Users also have the ability to “Learn More”, which redirects them to the App Validation Directory, a centralized place to view all VPN apps that have been independently security reviewed. Users can also discover additional technical assessment details in the App Validation Directory, helping them to make more informed decisions about what VPN apps to download, use, and trust with their data.

|

Tool Vulnerability Mobile Technical | ★★ | ||

| 2023-10-26 08:49:41 | Increasing transparency in AI security (direct link) | Mihai Maruseac, Sarah Meiklejohn, Mark Lodato, Google Open Source Security Team (GOSST). New AI innovations and applications are reaching consumers and businesses on an almost-daily basis. Building AI securely is a paramount concern, and we believe that Google's Secure AI Framework (SAIF) can help chart a path for creating AI applications that users can trust. Today, we're highlighting two new ways to make information about AI supply chain security universally discoverable and verifiable, so that AI can be created and used responsibly. The first principle of SAIF is to ensure that the AI ecosystem has strong security foundations. In particular, the software supply chains for components specific to AI development, such as machine learning models, need to be secured against threats including model tampering, data poisoning, and the production of harmful content. Even as machine learning and artificial intelligence continue to evolve rapidly, some solutions are now within reach of ML creators. We're building on our prior work with the Open Source Security Foundation to show how ML model creators can and should protect against ML supply chain attacks by using | Malware Tool Vulnerability Threat Cloud | ★★ | ||

| 2023-10-26 08:00:33 | Google's reward criteria for reporting bugs in AI products (direct link) | Eduardo Vela, Jan Keller and Ryan Rinaldi, Google Engineering. In September, we shared how we are implementing the voluntary AI commitments that we and others in industry made at the White House in July. One of the most important developments involves expanding our existing Bug Hunter Program to foster third-party discovery and reporting of issues and vulnerabilities specific to our AI systems. Today, we're publishing more details on these new reward program elements for the first time. Last year we issued over $12 million in rewards to security researchers who tested our products for vulnerabilities, and we expect today's announcement to fuel even greater collaboration for years to come. What's in scope for rewards: In our recent AI Red Team report, we identified common tactics, techniques, and procedures (TTPs) that we consider most relevant and realistic for real-world adversaries to use against AI systems. The following table incorporates shared learnings from | Tool Vulnerability | ★★ | ||

| 2023-10-10 15:39:40 | Scaling BeyondCorp with AI-Assisted Access Control Policies (direct link) |

Ayush Khandelwal, Software Engineer, Michael Torres, Security Engineer, Hemil Patel, Technical Product Expert, Sameer Ladiwala, Software Engineer

In July 2023, four Googlers from the Enterprise Security and Access Security organizations developed a tool aimed at revolutionizing the way Googlers interact with Access Control Lists: SpeakACL. This tool, awarded the Gold Prize during Google's internal Security & AI Hackathon, allows developers to create or modify security policies using simple English instructions rather than having to learn system-specific syntax or complex security principles. This can save security and product teams hours of time and effort, while helping to protect the information of their users by encouraging the reduction of permitted access in line with the principle of least privilege.

Access Control Policies in BeyondCorp

Google requires developers and owners of enterprise applications to define their own access control policies, as described in BeyondCorp: The Access Proxy. We have invested in reducing the difficulty of self-service ACL and ACL test creation to encourage these service owners to define least privilege access control policies. However, it is still challenging to concisely transform their intent into the language acceptable to the access control engine. Additional complexity is added by the variety of engines, and corresponding policy definition languages, that target different access control domains (e.g. websites, networks, RPC servers).

To adequately implement an access control policy, service developers are expected to learn various policy definition languages and their associated syntax, in addition to sufficiently understanding security concepts. As this takes time away from core developer work, it is not the most efficient use of developer time. A solution was required to remove these challenges so developers can focus on building innovative tools and products.

Making it Work

We built a prototype interface for interactively defining and modifying access control policies for the | Tool Vulnerability | ★★★ | ||

| 2023-10-09 12:30:13 | Bare-metal Rust in Android (direct link) |

Posted by Andrew Walbran, Android Rust Team

Last year we wrote about how moving native code in Android from C++ to Rust has resulted in fewer security vulnerabilities. Most of the components we mentioned then were system services in userspace (running under Linux), but these are not the only components typically written in memory-unsafe languages. Many security-critical components of an Android system run in a “bare-metal” environment, outside of the Linux kernel, and these are historically written in C. As part of our efforts to harden firmware on Android devices, we are increasingly using Rust in these bare-metal environments too.

To that end, we have rewritten the Android Virtualization Framework's protected VM (pVM) firmware in Rust to provide a memory safe foundation for the pVM root of trust. This firmware performs a similar function to a bootloader, and was initially built on top of U-Boot, a widely used open source bootloader. However, U-Boot was not designed with security in a hostile environment in mind, and there have been numerous security vulnerabilities found in it due to out-of-bounds memory access, integer underflow and memory corruption. Its VirtIO drivers in particular had a number of missing or problematic bounds checks. We fixed the specific issues we found in U-Boot, but by leveraging Rust we can avoid these sorts of memory-safety vulnerabilities in future. The new Rust pVM firmware was released in Android 14.

As part of this effort, we contributed back to the Rust community by using and contributing to existing crates where possible, and publishing a number of new crates as well. For example, for VirtIO in pVM firmware we've spent time fixing bugs and soundness issues in the existing virtio-drivers crate, as well as adding new functionality, and are now helping maintain this crate. We've published crates for making PSCI and other Arm SMCCC calls, and for managing page tables. These are just a start; we plan to release more Rust crates to support bare-metal programming on a range of platforms. These crates are also being used outside of Android, such as in Project Oak and the bare-metal section of our Comprehensive Rust course.

Training engineers

Many engineers have been positively surprised by how p |

Tool Vulnerability | ★★ | ||

| 2023-09-21 12:00:57 | Scaling Rust Adoption Through Training (direct link) |

Posted by Martin Geisler, Android team

Android 14 is the third major Android release with Rust support. We are already seeing a number of benefits:

Productivity: Developers quickly feel productive writing Rust. They report important indicators of development velocity, such as confidence in the code quality and ease of code review.

Security: There has been a reduction in memory safety vulnerabilities as we shift more development to memory safe languages.

These positive early results provided an enticing motivation to increase the speed and scope of Rust adoption. We hoped to accomplish this by investing heavily in training to expand from the early adopters.

Scaling up from Early Adopters

Early adopters are often willing to accept more risk to try out a new technology. They know there will be some inconveniences and a steep learning curve but are willing to learn, often on their own time. Scaling up Rust adoption required moving beyond early adopters. For that we need to ensure a baseline level of comfort and productivity within a set period of time. An important part of our strategy for accomplishing this was training. Unfortunately, the type of training we wanted to provide simply didn't exist. We made the decision to write and implement our own Rust training.

Training Engineers

Our goals for the training were to:

Quickly ramp up engineers: It is hard to take people away from their regular work for a long period of time, so we aimed to provide a solid foundation for using Rust in days, not weeks. We could not make anybody a Rust expert in so little time, but we could give people the tools and foundation needed to be productive while they continued to grow. The goal is to enable people to use Rust to be productive members of their teams. The time constraints meant we couldn't teach people programming from scratch; we also decided not to teach macros or unsafe Rust in detail.

Make it engaging (and fun!): We wanted people to see a lot of Rust while also getting hands-on experience. Given the scope and time constraints mentioned above, the training was necessarily information-dense. This called for an interactive setting where people could quickly ask the instructor questions. Research shows that retention improves when people can quickly verify assumptions and practice new concepts.

Make it relevant for Android: The Android-specific tooling for Rust was already documented, but we wanted to show engineers how to use it via worked examples. We also wanted to document emerging standards, such as using thiserror and anyhow crates for error handling. Finally, because Rust is a new language in the Android Platform (AOSP), we needed to show how to interoperate with existing languages such as Java and C++.

With those three goals as a starting point, we looked at the existing material and available tools.

Existing Material

Documentation is a key value of the Rust community and there are many great resources available for learning Rust. First, there is the freely available Rust Book, which covers almost all of the language. Second, the standard library is extensively documented. Because we knew our target audience, we could make stronger assumptions than most material found online. We created the course for engineers with at least 2–3 years of coding experience in either C, C++, or Java. This allowed us to move | Tool | ★★ | ||

| 2023-09-15 14:11:38 | Capslock: What is your code really capable of? (direct link) |

Jess McClintock and John Dethridge, Google Open Source Security Team, and Damien Miller, Enterprise Infrastructure Protection Team

When you import a third party library, do you review every line of code? Most software packages depend on external libraries, trusting that those packages aren't doing anything unexpected. If that trust is violated, the consequences can be huge, regardless of whether the package is malicious, or well-intended but using overly broad permissions, such as with Log4j in 2021. Supply chain security is a growing issue, and we hope that greater transparency into package capabilities will help make secure coding easier for everyone.

Avoiding bad dependencies can be hard without appropriate information on what the dependency's code actually does, and reviewing every line of that code is an immense task. Every dependency also brings its own dependencies, compounding the need for review across an expanding web of transitive dependencies. But what if there was an easy way to know the capabilities (the privileged operations accessed by the code) of your dependencies?

Capslock is a capability analysis CLI tool that informs users of privileged operations (like network access and arbitrary code execution) in a given package and its dependencies. Last month we published the alpha version of Capslock for the Go language, which can analyze and report on the capabilities that are used beneath the surface of open source software. | Tool Vulnerability | ★★ | ||
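
The point about transitive dependencies in the Capslock entry above can be illustrated with a small sketch. This is not Capslock itself (which is a CLI tool for Go); it only shows the idea of collecting capabilities across a dependency graph. The package names, capability labels, and the `transitive_capabilities` helper are all hypothetical.

```python
# Hypothetical data: capabilities each package uses directly,
# and the packages each one depends on directly.
DIRECT_CAPABILITIES = {
    "app": set(),
    "logging-lib": {"FILES"},
    "http-client": {"NETWORK"},
    "plugin-loader": {"ARBITRARY_EXECUTION"},
}
DEPENDENCIES = {
    "app": ["logging-lib", "http-client"],
    "logging-lib": ["plugin-loader"],
    "http-client": [],
    "plugin-loader": [],
}


def transitive_capabilities(package, deps=DEPENDENCIES, caps=DIRECT_CAPABILITIES):
    """Return every capability reachable from `package` via its dependencies."""
    seen = set()
    result = set()
    stack = [package]
    while stack:
        current = stack.pop()
        if current in seen:
            continue  # already visited; also guards against dependency cycles
        seen.add(current)
        result |= caps.get(current, set())
        stack.extend(deps.get(current, []))
    return result


if __name__ == "__main__":
    # "app" uses no privileged operation itself, but inherits three of them.
    print(sorted(transitive_capabilities("app")))
```

In this made-up graph, `app` declares no capabilities of its own, yet it ends up with file, network, and arbitrary-execution capabilities through its dependencies, which is the kind of hidden exposure a capability report is meant to surface.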

| 2023-08-10 12:01:36 | Making Chrome more secure by bringing Key Pinning to Android (direct link) |

Posted by David Adrian, Joe DeBlasio and Carlos Joan Rafael Ibarra Lopez, Chrome Security

Chrome 106 added support for enforcing key pins on Android by default, bringing Android to parity with Chrome on desktop platforms. But what is key pinning anyway?

One of the reasons Chrome implements key pinning is the “rule of two”. This rule is part of Chrome's holistic secure development process. It says that when you are writing code for Chrome, you can pick no more than two of: code written in an unsafe language, processing untrustworthy inputs, and running without a sandbox. This blog post explains how key pinning and the rule of two are related.

The Rule of Two

Chrome is primarily written in the C and C++ languages, which are vulnerable to memory safety bugs. Mistakes with pointers in these languages can lead to memory being misinterpreted. Chrome invests in an ever-stronger multi-process architecture built on sandboxing and site isolation to help defend against memory safety problems. Android-specific features can be written in Java or Kotlin. These languages are memory-safe in the common case. Similarly, we're working on adding support to write Chrome code in Rust, which is also memory-safe.

Much of Chrome is sandboxed, but the sandbox still requires a core high-privilege “broker” process to coordinate communication and launch sandboxed processes. In Chrome, the broker is the browser process. The browser process is the source of truth that allows the rest of Chrome to be sandboxed and coordinates communication between the rest of the processes.

If an attacker is able to craft a malicious input to the browser process that exploits a bug and allows the attacker to achieve remote code execution (RCE) in the browser process, that would effectively give the attacker full control of the victim's Chrome browser and potentially the rest of the device. Conversely, if an attacker achieves RCE in a sandboxed process, such as a renderer, the attacker's capabilities are extremely limited. The attacker cannot reach outside of the sandbox unless they can additionally exploit the sandbox itself.

Without sandboxing, which limits the actions an attacker can take, and without memory safety, which removes the ability of a bug to disrupt the intended control flow of the program, the rule of two requires that the browser process does not handle untrustworthy inputs. The relative risks between sandboxed processes and the browser process are why the browser process is only allowed to parse trustworthy inputs and specific IPC messages.

Trustworthy inputs are defined extremely strictly: A “trustworthy source” means that Chrome can prove that the data comes from Google. Effectively, this means that in situations where the browser process needs access to data from e |

Tool | ★★ | ||
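
The rule of two described in the key pinning entry above can be written down as a simple check: a component may combine at most two of the three risk factors. The sketch below is only an illustration of that rule. The `Component` type, its field names, and the example components are hypothetical and are not taken from Chrome's code base.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    """Hypothetical description of a piece of code, for illustration only."""
    name: str
    unsafe_language: bool      # e.g. written in C or C++
    untrustworthy_input: bool  # handles data that is not from a trustworthy source
    no_sandbox: bool           # runs without a sandbox (high privilege)


def risk_count(component):
    """Count how many of the three risk factors apply."""
    return sum([
        component.unsafe_language,
        component.untrustworthy_input,
        component.no_sandbox,
    ])


def satisfies_rule_of_two(component):
    """The rule allows at most two of the three risk factors together."""
    return risk_count(component) <= 2


# A sandboxed renderer: unsafe language and untrustworthy input, but sandboxed.
renderer = Component("renderer", True, True, False)
# The browser process restricted to trustworthy inputs: unsafe language, no sandbox.
browser_trusted = Component("browser (trustworthy inputs)", True, False, True)
# The browser process parsing arbitrary input would combine all three factors.
browser_untrusted = Component("browser (untrustworthy inputs)", True, True, True)

if __name__ == "__main__":
    for c in (renderer, browser_trusted, browser_untrusted):
        verdict = "ok" if satisfies_rule_of_two(c) else "violates rule of two"
        print(f"{c.name}: {verdict}")
```

The third example shows why the entry says the browser process may only parse trustworthy inputs: it already uses an unsafe language and runs unsandboxed, so adding untrustworthy input would be the third factor.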

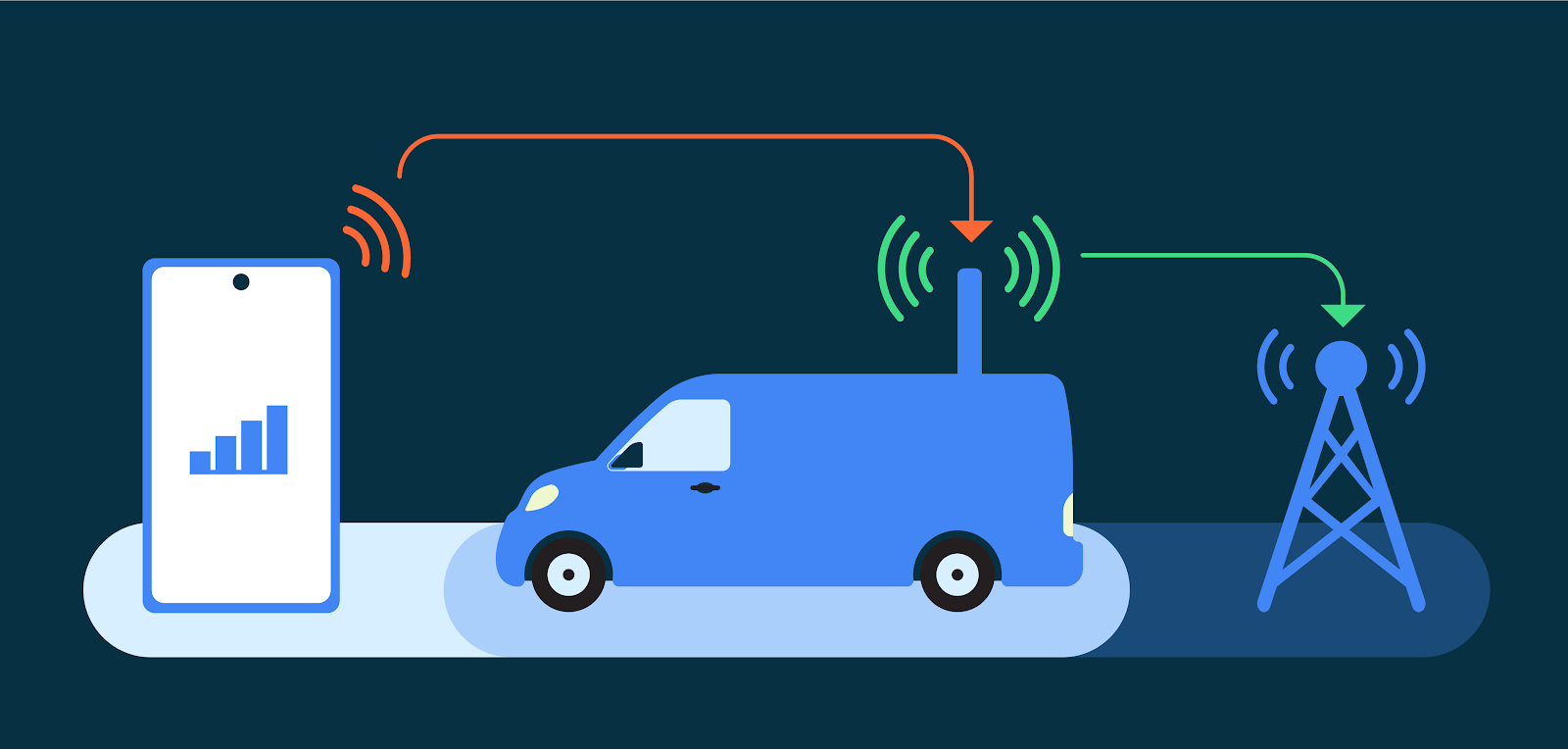

| 2023-08-08 11:59:13 | Android 14 introduces first-of-its-kind cellular connectivity security features (direct link) |

Posted by Roger Piqueras Jover, Yomna Nasser, and Sudhi Herle

Android is the first mobile operating system to introduce advanced cellular security mitigations for both consumers and enterprises. Android 14 introduces support for IT administrators to disable 2G support in their managed device fleet. Android 14 also introduces a feature that disables support for null-ciphered cellular connectivity.

Hardening network security on Android

The Android Security Model assumes that all networks are hostile to keep users safe from network packet injection, tampering, or eavesdropping on user traffic. Android does not rely on link-layer encryption to address this threat model. Instead, Android establishes that all network traffic should be end-to-end encrypted (E2EE).

When a user connects to cellular networks for their communications (data, voice, or SMS), due to the distinctive nature of cellular telephony, the link layer presents unique security and privacy challenges. False Base Stations (FBS) and Stingrays exploit weaknesses in cellular telephony standards to cause harm to users. Additionally, a smartphone cannot reliably know the legitimacy of the cellular base station before attempting to connect to it. Attackers exploit this in a number of ways, ranging from traffic interception and malware sideloading, to sophisticated dragnet surveillance.

Recognizing the far reaching implications of these attack vectors, especially for at-risk users, Android has prioritized hardening cellular telephony. We are tackling well-known insecurities such as the risk presented by 2G networks, the risk presented by null ciphers, other false base station (FBS) threats, and baseband hardening with our ecosystem partners.

2G and a history of inherent security risk

The mobile ecosystem is rapidly adopting 5G, the latest wireless standard for mobile, and many carriers have started to turn down 2G service. In the United States, for example, most major carriers have shut down 2G networks. However, all existing mobile devices still have support for 2G. As a result, when available, any mobile device will connect to a 2G network. This occurs automatically when 2G is the only network available, but this can also be remotely triggered in a malicious attack, silently inducing devices to downgrade to 2G-only connectivity and thus, ignoring any non-2G network. This behavior happens regardless of whether local operators have already sunset their 2G infrastructure.

2G networks, first implemented in 1991, do not provide the same level of security as subsequent mobile generat |

Malware Tool Threat Conference | ★★★ | ||
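
The effect of the two Android 14 controls described in the entry above (disabling 2G and refusing null-ciphered connections) can be sketched as a filter over candidate cells. This is a conceptual illustration only: the `Cell` type, its fields, and `allowed_cells` are hypothetical and do not correspond to any Android or modem API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cell:
    """Hypothetical candidate base station, for illustration only."""
    name: str
    generation: str    # "2G", "3G", "4G" or "5G"
    null_cipher: bool  # True if the link would be unencrypted


def allowed_cells(cells, allow_2g, allow_null_cipher):
    """Keep only the cells that the configured policy permits."""
    return [
        cell for cell in cells
        if (allow_2g or cell.generation != "2G")
        and (allow_null_cipher or not cell.null_cipher)
    ]


CANDIDATES = [
    Cell("carrier-5g", "5G", False),
    Cell("carrier-4g", "4G", False),
    Cell("downgrade-2g", "2G", False),   # e.g. a false base station forcing 2G
    Cell("unencrypted-4g", "4G", True),  # offers only a null cipher
]

if __name__ == "__main__":
    hardened = allowed_cells(CANDIDATES, allow_2g=False, allow_null_cipher=False)
    print([cell.name for cell in hardened])
```

With both options disabled, the 2G cell and the null-ciphered cell are never considered, so a downgrade attempt has nothing to fall back to in this simplified model.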

| 2023-07-27 12:01:55 | The Ups and Downs of 0-days: A Year in Review of 0-days Exploited In-the-Wild in 2022 (direct link) |

Maddie Stone, Security Researcher, Threat Analysis Group (TAG)

This is Google's fourth annual year-in-review of 0-days exploited in-the-wild [2021, 2020, 2019] and builds off of the mid-year 2022 review. The goal of this report is not to detail each individual exploit, but instead to analyze the exploits from the year as a whole, looking for trends, gaps, lessons learned, and successes.

Executive Summary

41 in-the-wild 0-days were detected and disclosed in 2022, the second-most ever recorded since we began tracking in mid-2014, but down from the 69 detected in 2021. Although a 40% drop might seem like a clear-cut win for improving security, the reality is more complicated. Some of our key takeaways from 2022 include:

N-days function like 0-days on Android due to long patching times. Across the Android ecosystem there were multiple cases where patches were not available to users for a significant time. Attackers didn't need 0-day exploits and instead were able to use n-days that functioned as 0-days. | Tool Vulnerability Threat Prediction Conference | ★★★ | ||
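
The "40% drop" quoted in the entry above follows directly from the two counts it gives (69 in 2021, 41 in 2022). A minimal check, where `percent_drop` is a helper name introduced here for illustration:

```python
def percent_drop(before, after):
    """Relative decrease from `before` to `after`, in percent."""
    return (before - after) / before * 100


if __name__ == "__main__":
    drop = percent_drop(69, 41)  # 0-days detected in 2021 vs. 2022
    print(f"{drop:.1f}%")        # prints 40.6%
```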

| 2023-07-20 16:03:33 | Supply chain security for Go, Part 3: Shifting left (direct link) |

Julie Qiu, Go Security & Reliability and Jonathan Metzman, Google Open Source Security Team

Previously in our Supply chain security for Go series, we covered dependency and vulnerability management tools and how Go ensures package integrity and availability as part of the commitment to countering the rise in supply chain attacks in recent years. In this final installment, we'll discuss how “shift left” security can help make sure you have the security information you need, when you need it, to avoid unwelcome surprises.

Shifting left

The software development life cycle (SDLC) refers to the series of steps that a software project goes through, from planning all the way through operation. It's a cycle because once code has been released, the process continues and repeats through actions like coding new features, addressing bugs, and more. |

Tool Vulnerability | ★★ | ||

| 2023-06-16 13:11:38 | Bringing Transparency to Confidential Computing with SLSA (direct link) |

Asra Ali, Razieh Behjati, Tiziano Santoro, Software Engineers

Every day, personal data, such as location information, images, or text queries are passed between your device and remote, cloud-based services. Your data is encrypted when in transit and at rest, but as potential attack vectors grow more sophisticated, data must also be protected during use by the service, especially for software systems that handle personally identifiable user data.

Toward this goal, Google's Project Oak is a research effort that relies on the confidential computing paradigm to build an infrastructure for processing sensitive user data in a secure and privacy-preserving way: we ensure data is protected during transit, at rest, and while in use. As an assurance that the user data is in fact protected, we've open sourced Project Oak code, and have introduced a transparent release process to provide publicly inspectable evidence that the application was built from that source code.

This blog post introduces Oak's transparent release process, which relies on the SLSA framework to generate cryptographic proof of the origin of Oak's confidential computing stack, and together with Oak's remote attestation process, allows users to cryptographically verify that their personal data was processed by a trustworthy application in a secure environment. | Tool | ★★ | ||

| 2023-05-25 12:00:55 | Google Trust Services ACME API available to all users at no cost (direct link) |

David Kluge, Technical Program Manager, and Andy Warner, Product Manager

Nobody likes preventable site errors, but they happen disappointingly often. The last thing you want your customers to see is a dreaded 'Your connection is not private' error instead of the service they expected to reach. Most certificate errors are preventable and one of the best ways to help prevent issues is by automating your certificate lifecycle using the ACME standard. Google Trust Services now offers our ACME API to all users with a Google Cloud account (referred to as “users” here), allowing them to automatically acquire and renew publicly-trusted TLS certificates for free. The ACME API has been available as a preview and over 200 million certificates have been issued already, offering the same compatibility as major Google services like google.com or youtube.com. | Tool Cloud | ★★★ | ||

| 2023-05-24 12:49:28 | Announcing the launch of GUAC v0.1 (direct link) |

Brandon Lum and Mihai Maruseac, Google Open Source Security Team

Today, we are announcing the launch of the v0.1 version of Graph for Understanding Artifact Composition (GUAC). Introduced at KubeCon 2022 in October, GUAC targets a critical need in the software industry to understand the software supply chain. In collaboration with Kusari, Purdue University, Citi, and community members, we have incorporated feedback from our early testers to improve GUAC and make it more useful for security professionals. This improved version is now available as an API for you to start developing on top of, and integrating into, your systems.

The need for GUAC

High-profile incidents such as SolarWinds, and the recent 3CX supply chain double-exposure, are evidence that supply chain attacks are getting more sophisticated. As highlighted by the | Tool Vulnerability Threat | Yahoo | ★★ | |

| 2023-05-10 14:59:36 | I/O 2023: What's new in Android security and privacy (direct link) |

Posted by Ronnie Falcon, Product Manager

Android is built with multiple layers of security and privacy protections to help keep you, your devices, and your data safe. Most importantly, we are committed to transparency, so you can see your device safety status and know how your data is being used. Android uses the best of Google's AI and machine learning expertise to proactively protect you and help keep you out of harm's way. We also empower you with tools that help you take control of your privacy. I/O is a great moment to show how we bring these features and protections all together to help you stay safe from threats like phishing attacks and password theft, while remaining in charge of your personal data.

Safe Browsing: faster, more intelligent protection

Android uses Safe Browsing to protect billions of users from web-based threats, like deceptive phishing sites. This happens in the Chrome default browser and also in Android WebView, when you open web content from apps. Safe Browsing is getting a big upgrade with a new real-time API that helps ensure you're warned about fast-emerging malicious sites. With the newest version of Safe Browsing, devices will do real-time blocklist checks for low reputation sites. Our internal analysis has found that a significant number of phishing sites exist for less than ten minutes to try to stay ahead of blocklists. With this real-time detection, we expect we'll be able to block an additional 25 percent of phishing attempts every month in Chrome and Android¹.

Safe Browsing isn't just getting faster at warning users. We've also been building in more intelligence, leveraging Google's advances in AI. Last year, Chrome browser on Android and desktop started utilizing a new image-based phishing detection machine learning model to visually inspect fake sites that try to pass themselves off as legitimate log-in pages. By leveraging a TensorFlow Lite model, we're able to find 3x more² phishing sites compared to previous machine learning models and help warn you before you get tricked into signing in. This year, we're expanding the coverage of the model to detect hundreds more phishing campaigns and leverage new ML technologies. This is just one example of how we use our AI expertise to keep your data safe. Last year, Android used AI to protect users from 100 billion suspected spam messages and calls.³

Passkeys help move users beyond passwords

For many, passwords are the primary protection for their online life. In reality, they are frustrating to create and remember, and are easily hacked. But hackers can't phish a password that doesn't exist. Which is why we are excited to share another major step forward in our passwordless journey: Passkeys. | Spam Malware Tool | ★★★ | ||

| 2023-04-26 11:00:21 | Celebrating SLSA v1.0: securing the software supply chain for everyone (direct link) |

Bob Callaway, Staff Security Engineer, Google Open Source Security team

Last week the Open Source Security Foundation (OpenSSF) announced the release of SLSA v1.0, a framework that helps secure the software supply chain. Ten years of using an internal version of SLSA at Google has shown that it's crucial to warding off tampering and keeping software secure. It's especially gratifying to see SLSA reaching v1.0 as an open source project: contributors have come together to produce solutions that will benefit everyone.

SLSA for safer supply chains

Developers and organizations that adopt SLSA will be protecting themselves against a variety of supply chain attacks, which have continued rising since Google first donated SLSA to OpenSSF in 2021. In that time, the industry has also seen a U.S. Executive Order on Cybersecurity and the associated NIST Secure Software Development Framework (SSDF) to guide national standards for software used by the U.S. government, as well as the Network and Information Security (NIS2) Directive in the European Union. SLSA offers not only an onramp to meeting these standards, but also a way to prepare for a climate of increased scrutiny on software development practices.

As organizations benefit from using SLSA, it's also up to them to shoulder part of the burden of spreading these benefits to open source projects. Many maintainers of the critical open source projects that underpin the internet are volunteers; they cannot be expected to do all the work when so many of the rewards of adopting SLSA roll out across the supply chain to benefit everyone.

Supply chain security for all

That's why beyond contributing to SLSA, we've also been laying the foundation to integrate supply chain solutions directly into the ecosystems and platforms used to create open source projects. We're also directly supporting open source maintainers, who often cite lack of time or resources as limiting factors when making security improvements to their projects. Our Open Source Security Upstream Team consists of developers who spend 100% of their time contributing to critical open source projects to make security improvements. For open source developers who choose to adopt SLSA on their own, we've funded the Secure Open Source Rewards Program, which pays developers directly for these types of security improvements.

Currently, open source developers who want to secure their builds can use the free SLSA L3 GitHub Builder, which requires only a one-time adjustment to the traditional build process implemented through GitHub Actions. There's also the SLSA Verifier tool for software consumers. Users of npm (Node Package Manager, the world's largest software repository) can take advantage of their recently released beta SLSA integration, which streamlines the process of creating and verifying SLSA provenance through the npm command line interface. We're also supporting the integration of Sigstore into many major | Tool Patching | ★★ | ||
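To make "verifying SLSA provenance" a little more concrete, here is a minimal sketch of one step a consumer-side check performs: confirming that an artifact's SHA-256 digest is listed among the subjects of a provenance statement. The `subject` / `digest` layout mirrors the in-toto statement shape that SLSA provenance uses. The function name `artifact_matches_provenance` and the sample data are hypothetical. Signature and builder-identity checks, which a real verifier also performs, are deliberately omitted, so this is not a substitute for the SLSA Verifier tool.

```python
import hashlib


def artifact_matches_provenance(artifact: bytes, statement: dict) -> bool:
    """Check that the artifact's SHA-256 appears among the statement's subjects.

    Only the digest comparison is shown. Signature and builder checks, which
    a real verifier also needs, are left out of this sketch.
    """
    digest = hashlib.sha256(artifact).hexdigest()
    return any(
        subject.get("digest", {}).get("sha256") == digest
        for subject in statement.get("subject", [])
    )


# Illustrative data only: a made-up artifact and a statement that matches it.
artifact = b"example release tarball contents"
statement = {
    "subject": [
        {"name": "example.tar.gz",
         "digest": {"sha256": hashlib.sha256(artifact).hexdigest()}}
    ]
}

genuine_ok = artifact_matches_provenance(artifact, statement)
tampered_ok = artifact_matches_provenance(b"tampered contents", statement)
print(genuine_ok, tampered_ok)
```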

| 2023-04-13 12:04:31 | Supply chain security for Go, Part 1: Vulnerability management (direct link) |

Posted by Julie Qiu, Go Security & Reliability and Oliver Chang, Google Open Source Security Team

High profile open source vulnerabilities have made it clear that securing the supply chains underpinning modern software is an urgent, yet enormous, undertaking. As supply chains get more complicated, enterprise developers need to manage the tidal wave of vulnerabilities that propagate up through dependency trees. Open source maintainers need streamlined ways to vet proposed dependencies and protect their projects. A rise in attacks coupled with increasingly complex supply chains means that supply chain security problems need solutions on the ecosystem level.

One way developers can manage this enormous risk is by choosing a more secure language. As part of Google's commitment to advancing cybersecurity and securing the software supply chain, Go maintainers are focused this year on hardening supply chain security, streamlining security information to our users, and making it easier than ever to make good security choices in Go.

This is the first in a series of blog posts about how developers and enterprises can secure their supply chains with Go. Today's post covers how Go helps teams with the tricky problem of managing vulnerabilities in their open source packages.

Extensive Package Insights

Before adopting a dependency, it's important to have high-quality information about the package. Seamless access to comprehensive information can be the difference between an informed choice and a future security incident from a vulnerability in your supply chain. Along with providing package documentation and version history, the Go package discovery site links to Open Source Insights. The Open Source Insights page includes vulnerability information, a dependency tree, and a security score provided by the OpenSSF Scorecard project. Scorecard evaluates projects on more than a dozen security metrics, each backed up with supporting information, and assigns the project an overall score out of ten to help users quickly judge its security stance. The Go package discovery site puts all these resources at developers' fingertips when they need them most: before taking on a potentially risky dependency.

Curated Vulnerability Information

Large consumers of open source software must manage many packages and a high volume of vulnerabilities. For enterprise teams, separating critical vulnerabilities from noisy, low quality advisories and false positives is often the most important task in vulnerability management. If it is difficult to tell which vulnerabilities are important, it is impossible to properly prioritize their remediation.

With granular advisory details, the Go vulnerability database removes barriers to vulnerability prioritization and remediation. All vulnerability database entries are reviewed and curated by the Go security team. As a result, entries are accurate and include detailed metadata to improve the quality of vulnerability scans and to make vulnerability information more actionable. This metadata includes information on affected functions, operating systems, and architectures. With this information, vulnerability scanners can reduce the number of false po | Tool Vulnerability | ★★ | ||
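The point about function-level metadata can be sketched in a few lines: if an advisory lists the affected functions and operating systems, a scanner can drop advisories for code the program never calls or platforms it does not target. The `Advisory` record, the `relevant` function, and the `EXAMPLE-…` identifiers below are hypothetical simplifications for illustration. They are not the real schema of the Go vulnerability database or the logic of any particular scanner.

```python
from dataclasses import dataclass, field


@dataclass
class Advisory:
    """Simplified, hypothetical advisory record (not the real schema)."""
    id: str
    package: str
    symbols: set = field(default_factory=set)  # affected functions
    goos: set = field(default_factory=set)     # empty set means "any OS"


def relevant(advisories, called, target_os):
    """Keep advisories whose affected functions are called on this OS."""
    hits = []
    for adv in advisories:
        if adv.goos and target_os not in adv.goos:
            continue  # advisory only applies to other operating systems
        if adv.package not in called:
            continue  # package is not used at all
        used = called[adv.package]
        # An empty symbol set is treated as "the whole package is affected".
        if not adv.symbols or adv.symbols & used:
            hits.append(adv.id)
    return hits


advisories = [
    Advisory("EXAMPLE-0001", "example.com/a", {"Parse"}),
    Advisory("EXAMPLE-0002", "example.com/a", {"Render"}),
    Advisory("EXAMPLE-0003", "example.com/b", {"Open"}, {"windows"}),
]
# Functions the (hypothetical) program actually calls, per package.
called = {"example.com/a": {"Parse"}, "example.com/b": {"Open"}}

result = relevant(advisories, called, target_os="linux")
print(result)
```

Of the three advisories, only the one whose affected function is actually called on the target platform survives the filter. This is the kind of noise reduction the metadata makes possible.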

| 2023-04-11 12:11:33 | Announcing the deps.dev API: critical dependency data for secure supply chains (direct link) |

Posted by Jesper Sarnesjo and Nicky Ringland, Google Open Source Security Team

Today, we are excited to announce the deps.dev API, which provides free access to the deps.dev dataset of security metadata, including dependencies, licenses, advisories, and other critical health and security signals for more than 50 million open source package versions.

Software supply chain attacks are increasingly common and harmful, with high profile incidents such as Log4Shell, Codecov, and the recent 3CX hack. The overwhelming complexity of the software ecosystem causes trouble for even the most diligent and well-resourced developers. We hope the deps.dev API will help the community make sense of complex dependency data that allows them to respond to, or even prevent, these types of attacks. By integrating this data into tools, workflows, and analyses, developers can more easily understand the risks in their software supply chains.

The power of dependency data

As part of Google's ongoing efforts to improve open source security, the Open Source Insights team has built a reliable view of software metadata across 5 packaging ecosystems. The deps.dev data set is continuously updated from a range of sources: package registries, the Open Source Vulnerability database, code hosts such as GitHub and GitLab, and the software artifacts themselves. This includes 5 million packages, more than 50 million versions, from the Go, Maven, PyPI, npm, and Cargo ecosystems, and you'd better believe we're counting them! We collect and aggregate this data and derive transitive dependency graphs, advisory impact reports, OpenSSF Security Scorecard information, and more.

Where the deps.dev website allows human exploration and examination, and the BigQuery dataset supports large-scale bulk data analysis, this new API enables programmatic, real-time access to the corpus for integration into tools, workflows, and analyses. The API is used by a number of teams internally at Google to support the security of our own products. One of the first publicly visible uses is the GUAC integration, which uses the deps.dev data to enrich SBOMs. We have more exciting integrations in the works, but we're most excited to see what the greater open source community builds!

We see the API as being useful for tool builders, researchers, and tinkerers who want to answer questions like: What versions are available for this package? What are the licenses that cover this version of a package, or all the packages in my codebase? How many dependencies does this package have? What are they? Does the latest version of this package include changes to dependencies or licenses? What versions of what packages correspond to this file? Taken together, this information can help answer the most important overarching question: how much risk would this dependency add to my project?

The API can help surface critical security information where and when developers can act. This data can be integrated into: IDE Plugins, to make dependency and security information immediately available. CI | Tool Vulnerability | ★★ | ||
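As a sketch of how a tool might answer the first question above ("What versions are available for this package?"), the snippet below builds a request URL and extracts version strings from a response. The base URL, the path layout, and the `versions[].versionKey.version` response shape are assumptions based on the public API at the time of writing and should be confirmed against the deps.dev API documentation. The helper names and the canned sample response are hypothetical, and no network call is made.

```python
import json
from urllib.parse import quote

# Assumed base URL and path layout; confirm against the deps.dev API docs.
BASE_URL = "https://api.deps.dev/v3"


def package_url(system: str, name: str) -> str:
    """Build the (assumed) URL that lists a package's versions."""
    return (f"{BASE_URL}/systems/{quote(system, safe='')}"
            f"/packages/{quote(name, safe='')}")


def version_list(response_body: str) -> list:
    """Extract version strings from an (assumed) JSON response shape."""
    data = json.loads(response_body)
    return [v["versionKey"]["version"] for v in data.get("versions", [])]


# Canned sample so the sketch runs offline; this is not real API output.
sample = json.dumps({
    "packageKey": {"system": "NPM", "name": "@example/pkg"},
    "versions": [
        {"versionKey": {"system": "NPM", "name": "@example/pkg",
                        "version": "1.0.0"}},
        {"versionKey": {"system": "NPM", "name": "@example/pkg",
                        "version": "1.1.0"}},
    ],
})

url = package_url("npm", "@example/pkg")
versions = version_list(sample)
print(url)
print(versions)
```

Percent-encoding the package name matters for scoped or path-like names, which would otherwise be read as extra URL path segments.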