What's new around the internet

| Src | Date (GMT) | Title | Description | Tags | Stories | Notes |

| 2024-04-23 13:15:47 | Uncovering potential threats to your web application by leveraging security reports (direct link) |

Posted by Yoshi Yamaguchi, Santiago Díaz, Maud Nalpas, Eiji Kitamura, DevRel team

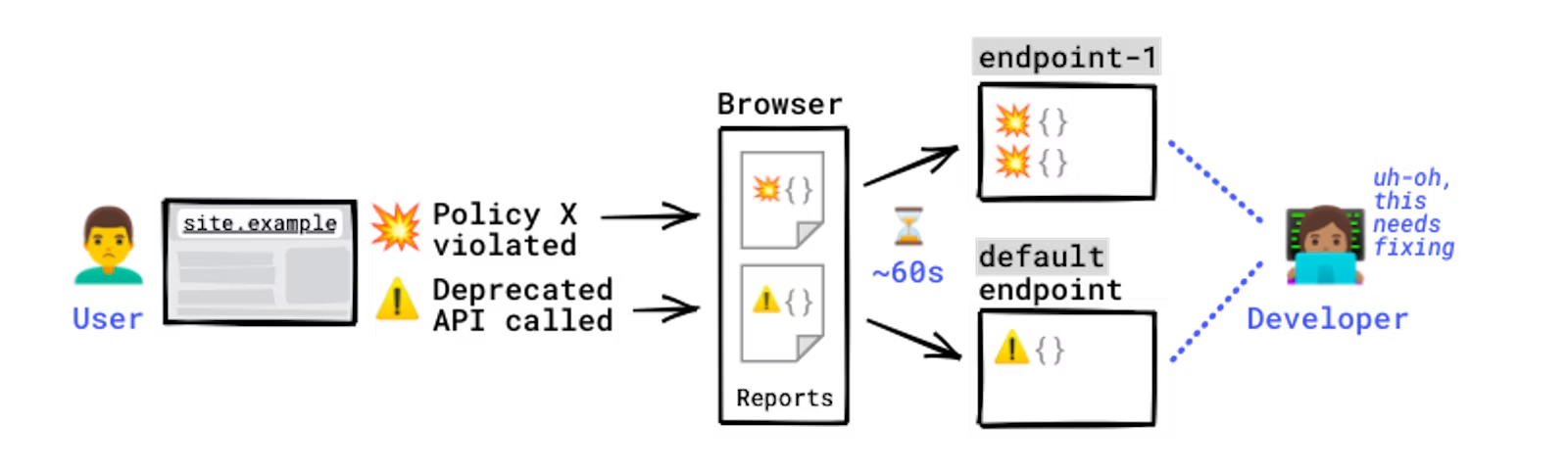

The Reporting API is an emerging web standard that provides a generic reporting mechanism for issues occurring in the browsers visiting your production website. The reports you receive detail issues such as security violations or soon-to-be-deprecated APIs, from users' browsers all over the world.

Collecting reports is often as simple as specifying an endpoint URL in the HTTP header; the browser will automatically start forwarding reports covering the issues you are interested in to those endpoints. However, processing and analyzing these reports is not that simple. For example, you may receive a massive number of reports on your endpoint, and it is possible that not all of them will be helpful in identifying the underlying problem. In such circumstances, distilling and fixing issues can be quite a challenge.

In this blog post, we'll share how the Google security team uses the Reporting API to detect potential issues and identify the actual problems causing them. We'll also introduce an open source solution, so you can easily replicate Google's approach to processing reports and acting on them.

How does the Reporting API work?

Some errors only occur in production, on users' browsers to which you have no access. You won't see these errors locally or during development because real users, real networks, and real devices can be in conditions you did not anticipate. With the Reporting API, you directly leverage the browser to monitor these errors: the browser catches these errors for you, generates an error report, and sends this report to an endpoint you've specified.

How reports are generated and sent.

Errors you can monitor with the Reporting API include:

Security violations: Content-Security-Policy (CSP), Cross-Origin-Opener-Policy (COOP), Cross-Origin-Embedder-Policy (COEP)

Deprecated and soon-to-be-deprecated API calls

Browser interventions

Permissions policy

And more

For a full list of error types you can monitor, see use cases and report types.

The Reporting API is activated and configured using HTTP response headers: you need to declare the endpoint(s) you want the browser to send reports to, and which error types you want to monitor. The browser then sends reports to your endpoint in POST requests whose payload is a list of reports.
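The header-based setup described above can be sketched in a few lines of Python. This is a minimal illustration, not the setup from the original post: the helper names `reporting_headers` and `count_report_types`, the endpoint name `main-endpoint`, and the `reports.example` URL are all hypothetical. It assumes the `Reporting-Endpoints` response header, the CSP `report-to` directive, and a POST body that is a JSON list of report objects, each carrying a `type` field.

```python
import json
from collections import Counter


def reporting_headers(endpoints: dict, csp: str, csp_endpoint: str) -> dict:
    """Build response headers that declare report endpoints and route
    CSP violation reports to one of them (hypothetical helper)."""
    if csp_endpoint not in endpoints:
        raise ValueError(f"unknown endpoint name: {csp_endpoint}")
    # Reporting-Endpoints is a comma-separated list of name="url" pairs.
    declared = ", ".join(f'{name}="{url}"' for name, url in endpoints.items())
    return {
        "Reporting-Endpoints": declared,
        # The report-to directive names the endpoint that receives CSP reports.
        "Content-Security-Policy": f"{csp}; report-to {csp_endpoint}",
    }


def count_report_types(payload: bytes) -> Counter:
    """Tally the reports in one POST body by their `type` field."""
    reports = json.loads(payload)
    return Counter(report.get("type", "unknown") for report in reports)


headers = reporting_headers(
    {"main-endpoint": "https://reports.example/main"},  # placeholder URL
    "script-src 'self'",
    "main-endpoint",
)
sample_body = json.dumps(
    [
        {"type": "csp-violation", "url": "https://site.example/", "body": {}},
        {"type": "deprecation", "url": "https://site.example/", "body": {}},
        {"type": "csp-violation", "url": "https://site.example/a", "body": {}},
    ]
).encode()
summary = count_report_types(sample_body)
```

Counting by type is only a first step; because a single endpoint can receive a very large number of reports, some form of grouping is usually needed before any of them can be acted on.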

Example setup:

|

Malware Tool Vulnerability Mobile Cloud | ★★★ | ||

| 2024-04-08 14:12:48 | How we built the new Find My Device network with user security and privacy in mind (direct link) |

Posted by Dave Kleidermacher, VP Engineering, Android Security and Privacy

Keeping people safe and their data secure and private is a top priority for Android. That is why we took our time when designing the new Find My Device, which uses a crowdsourced device-locating network to help you find your lost or misplaced devices and belongings quickly – even when they're offline. We gave careful consideration to the potential user security and privacy challenges that come with device finding services. During development, it was important for us to ensure the new Find My Device was secure by default and private by design. To build a private, crowdsourced device-locating network, we first conducted user research and gathered feedback from privacy and advocacy groups. Next, we developed multi-layered protections across three main areas: data safeguards, safety-first protections, and user controls. This approach provides defense-in-depth for Find My Device users.

How location crowdsourcing works on the Find My Device network

The Find My Device network locates devices by harnessing the Bluetooth proximity of surrounding Android devices. Imagine you drop your keys at a cafe. The keys themselves have no location capabilities, but they may have a Bluetooth tag attached. Nearby Android devices participating in the Find My Device network report the location of the Bluetooth tag. When the owner realizes they have lost their keys and logs into the Find My Device mobile app, they will be able to see the aggregated location contributed by nearby Android devices and locate their keys.

|

Vulnerability Threat Mobile | ★★ | ||

| 2024-03-28 18:16:18 | Address Sanitizer for Bare-metal Firmware (direct link) |

Posted by Eugene Rodionov and Ivan Lozano, Android Team

With steady improvements to Android userspace and kernel security, we have noticed an increasing interest from security researchers directed towards lower level firmware. This area has traditionally received less scrutiny, but is critical to device security. We have previously discussed how we have been prioritizing firmware security, and how to apply mitigations in a firmware environment to mitigate unknown vulnerabilities.

In this post we will show how the Kernel Address Sanitizer (KASan) can be used to proactively discover vulnerabilities earlier in the development lifecycle. Despite the narrow application implied by its name, KASan is applicable to a wide-range of firmware targets. Using KASan enabled builds during testing and/or fuzzing can help catch memory corruption vulnerabilities and stability issues before they land on user devices. We've already used KASan in some firmware targets to proactively find and fix 40+ memory safety bugs and vulnerabilities, including some of critical severity.

Along with this blog post we are releasing a small project which demonstrates an implementation of KASan for bare-metal targets leveraging the QEMU system emulator. Readers can refer to this implementation for technical details while following the blog post.

Address Sanitizer (ASan) overview

Address sanitizer is a compiler-based instrumentation tool used to identify invalid memory access operations during runtime. It is capable of detecting the following classes of temporal and spatial memory safety bugs:

out-of-bounds memory access

use-after-free

double/invalid free

use-after-return

ASan relies on the compiler to instrument code with dynamic checks for virtual addresses used in load/store operations. A separate runtime library defines the instrumentation hooks for the heap memory and error reporting.

For most user-space targets (such as aarch64-linux-android) ASan can be enabled as simply as using the -fsanitize=address compiler option for Clang due to existing support of this target both in the toolchain and in the libclang_rt runtime. However, the situation is rather different for bare-metal code which is frequently built with the none system targets, such as arm-none-eabi. Unlike traditional user-space programs, bare-metal code running inside an embedded system often doesn't have a common runtime implementation. As such, LLVM can't provide a default runtime for these environments.

To provide custom implementations for the necessary runtime routines, the Clang toolchain exposes an interface for address sanitization through the -fsanitize=kernel-address compiler option. The KASan runtime routines implemented in the Linux kernel serve as a great example of how to define a KASan runtime for targets which aren't supported by default with -fsanitize=address. We'll demonstrate how to use the version of address sanitizer originally built for the kernel on other bare-metal targets.

KASan 101

Let's take a look at the KASan major building blocks from a high-level perspective (a thorough explanation of how ASan works under-the-hood is provided in this whitepaper). The main idea behind KASan is that every memory access operation, such as load/store instructions and memory copy functions (for example, memm | Tool Vulnerability Mobile Technical | ★★ | ||
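The idea summarized in the entry above, that every load and store is checked and that a runtime tracks which heap bytes are valid, can be illustrated with a toy model in Python. This is a conceptual sketch only and not the KASan implementation from the post: real address sanitizers rely on compiler-inserted checks and a runtime written for the target, whereas here the checks are ordinary method calls. The names `ToyHeap`, `ToyAsanError`, and the 8-byte `REDZONE` are hypothetical choices made for the illustration.

```python
class ToyAsanError(Exception):
    """Raised when the toy heap detects an invalid memory operation."""


class ToyHeap:
    REDZONE = 8  # poisoned padding around each allocation (arbitrary toy value)

    def __init__(self, size: int = 256):
        self.memory = bytearray(size)
        self.poisoned = [True] * size  # every byte starts out inaccessible
        self.live = {}                 # base address -> size of live allocation
        self.freed = set()             # base addresses that were already freed
        self.next_free = 0

    def malloc(self, size: int) -> int:
        base = self.next_free + self.REDZONE
        end = base + size
        if end + self.REDZONE > len(self.memory):
            raise MemoryError("toy heap exhausted")
        for addr in range(base, end):
            self.poisoned[addr] = False  # only the payload becomes accessible
        self.live[base] = size
        self.next_free = end             # freed memory is never reused here
        return base

    def free(self, base: int) -> None:
        if base in self.freed:
            raise ToyAsanError(f"double free of address {base}")
        if base not in self.live:
            raise ToyAsanError(f"invalid free of address {base}")
        size = self.live.pop(base)
        for addr in range(base, base + size):
            self.poisoned[addr] = True   # later accesses are use-after-free
        self.freed.add(base)

    def _check(self, addr: int) -> None:
        # Stand-in for the check an instrumenting compiler would insert.
        if not 0 <= addr < len(self.memory) or self.poisoned[addr]:
            raise ToyAsanError(f"invalid access at address {addr}")

    def store(self, addr: int, value: int) -> None:
        self._check(addr)
        self.memory[addr] = value

    def load(self, addr: int) -> int:
        self._check(addr)
        return self.memory[addr]


heap = ToyHeap()
p = heap.malloc(4)
heap.store(p, 7)
```

In this model an access at `p + 4` lands in the poisoned padding and is reported as out-of-bounds, and any access to `p` after `heap.free(p)` is reported as use-after-free.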

| 2024-03-12 11:59:14 | Vulnerability Reward Program: 2023 Year in Review (direct link) |

Posted by Sarah Jacobus, Vulnerability Rewards Team

Last year, we again witnessed the power of community-driven security efforts as researchers from around the world contributed to help us identify and address thousands of vulnerabilities in our products and services. Working with our dedicated bug hunter community, we awarded $10 million to our 600+ researchers based in 68 countries.

New Resources and Improvements

Just like every year, 2023 brought a series of changes and improvements to our vulnerability reward programs:

Through our new Bonus Awards program, we now periodically offer time-limited, extra rewards for reports to specific VRP targets.

We expanded our exploit reward program to Chrome and Cloud through the launch of v8CTF, a CTF focused on V8, the JavaScript engine that powers Chrome.

We launched Mobile VRP which focuses on first-party Android applications.

Our new Bughunters blog shared ways in which we make the internet, as a whole, safer, and what that journey entails. Take a look at our ever-growing repository of posts!

To further our engagement with top security researchers, we also hosted our yearly security conference ESCAL8 in Tokyo. It included live hacking events and competitions, student training with our init.g workshops, and talks from researchers and Googlers. Stay tuned for details on ESCAL8 2024.

As in past years, we are sharing our 2023 Year in Review statistics across all of our programs. We would like to give a special thank you to all of our dedicated researchers for their continued work with our programs - we look forward to more collaboration in the future!

Android and Google Devices

In 2023, the Android VRP achieved significant milestones, reflecting our dedication to securing the Android ecosystem. We awarded over $3.4 million in rewards to researchers who uncovered remarkable vulnerabilities within Android

|

Vulnerability Threat Mobile Cloud Conference | ★★★ | ||

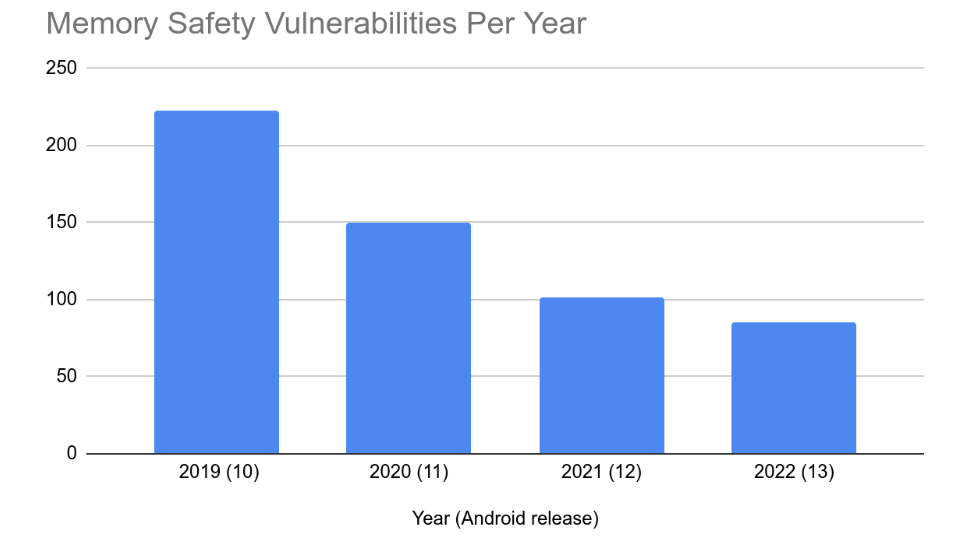

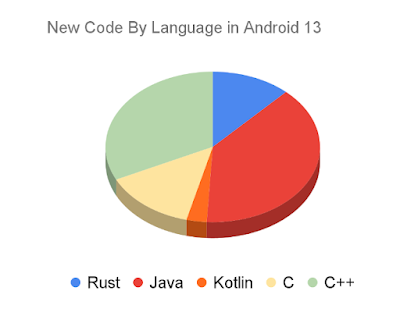

| 2024-03-04 14:00:38 | Secure by Design: Google's Perspective on Memory Safety (direct link) |

Alex Rebert, Software Engineer, Christoph Kern, Principal Engineer, Security Foundations

Google's Project Zero reports that memory safety vulnerabilities (security defects caused by subtle coding errors related to how a program accesses memory) have been "the standard for attacking software for the last few decades and it's still how attackers are having success". Their analysis shows two thirds of 0-day exploits detected in the wild used memory corruption vulnerabilities. Despite substantial investments to improve memory-unsafe languages, those vulnerabilities continue to top the most commonly exploited vulnerability classes.

In this post, we share our perspective on memory safety in a comprehensive whitepaper. This paper delves into the data and the challenges of tackling memory unsafety, and discusses possible approaches for achieving memory safety and their tradeoffs. We'll also highlight our commitments towards implementing several of the solutions outlined in the whitepaper, most recently with a $1,000,000 grant to the Rust Foundation, thereby advancing the development of a robust memory-safe ecosystem.

Why we're publishing this now

2022 marked the 50th anniversary of memory safety vulnerabilities. Since then, memo | Vulnerability Mobile | ★★ | ||

| 2024-02-05 11:59:31 | Improving Interoperability Between Rust and C++ (direct link) |

Posted by Lars Bergstrom – Director, Android Platform Tools & Libraries and Chair of the Rust Foundation Board

In 2021, we announced that Google was joining the Rust Foundation. At the time, Rust was already widely used in Android and other Google products. Our announcement emphasized our commitment to improving the security reviews of Rust code and its interoperability with C++ code. Rust is one of the strongest tools we have to address memory safety security issues. Since that announcement, industry leaders and government agencies have echoed that sentiment.

We are delighted to announce that Google has provided a $1 million grant to the Rust Foundation to support efforts that will improve the ability of Rust code to interoperate with existing legacy C++ codebases. We are also reaffirming our existing commitment to the open-source Rust community by aggregating and publishing audits for the Rust crates that we use in open-source Google projects. These contributions, along with our previous contributions to interoperability, have us excited about the future of Rust.

"Based on historical vulnerability density statistics, Rust has proactively prevented hundreds of vulnerabilities from impacting the Android ecosystem. This investment aims to expand the adoption of Rust across various components of the platform." – Dave Kleidermacher, Vice President of Engineering, Android Security & Privacy

While Google has seen the most significant growth in the use of Rust in Android, we are continuing to grow its use across more applications, including clients and server hardware.

"While Rust may not be suitable for all product applications, prioritizing seamless interoperability with C++ will accelerate wider community adoption, thereby aligning with the industry goals of improving memory safety." – Royal Hansen, Google Vice President of Safety & Security

The Rust tooling and ecosystem already support interoperability with Android, and with continued investment in tools like cxx, autocxx, bindgen, cbindgen, diplomat, and crubit, we are seeing regular improvements in the state of Rust interoperability with C++. As these improvements have continued, we have seen a reduction in the barriers to adoption and accelerated adoption of Rust. While that progress across the many tools continues, it is often only developed incrementally to meet the particular needs of a given project or company.

In order to accelerate both the adoption of Rust | Tool Vulnerability Mobile | ★★★ | ||

| 2024-01-31 13:07:18 | Scaling security with AI: from detection to solution (direct link) |

Dongge Liu and Oliver Chang, Google Open Source Security Team, Jan Nowakowski and Jan Keller, Machine Learning for Security Team

The AI world moves fast, so we've been hard at work keeping security apace with recent advancements. One of our approaches, in alignment with Google's Secure AI Framework (SAIF), is using AI itself to automate and streamline routine and manual security tasks, including fixing security bugs. Last year we wrote about our experiences using LLMs to expand vulnerability testing coverage, and we're excited to share some updates.

Today, we're releasing our fuzzing framework as a free, open source resource that researchers and developers can use to improve fuzzing's bug-finding abilities. We'll also show you how we're using AI to speed up the bug patching process. By sharing these experiences, we hope to spark new ideas and drive innovation for stronger ecosystem security.

Update: AI-powered vulnerability discovery

Last August, we announced our framework to automate manual aspects of fuzz testing (“fuzzing”) that often hindered open source maintainers from fuzzing their projects effectively. We used LLMs to write project-specific code to boost fuzzing coverage and find more vulnerabilities. Our initial results on a subset of projects in our free OSS-Fuzz service | Vulnerability Patching Cloud | ★★ | ||

| 2024-01-11 14:18:14 | MiraclePtr: protecting users from use-after-free vulnerabilities on more platforms (direct link) |

Posted by Keishi Hattori, Sergei Glazunov, Bartek Nowierski on behalf of the MiraclePtr team

Welcome back to our latest update on MiraclePtr, our project to protect against use-after-free vulnerabilities in Google Chrome. If you need a refresher, you can read our previous blog post detailing MiraclePtr and its objectives.

More platforms

We are thrilled to announce that since our last update, we have successfully enabled MiraclePtr for more platforms and processes:

In June 2022, we enabled MiraclePtr for the browser process on Windows and Android.

In September 2022, we expanded its coverage to include all processes except renderer processes.

In June 2023, we enabled MiraclePtr for ChromeOS, macOS, and Linux.

Furthermore, we have changed security guidelines to downgrade MiraclePtr-protected issues by one severity level!

Evaluating Security Impact

First, let's focus on its security impact. Our analysis is based on two primary information sources: incoming vulnerability reports and crash reports from user devices. Let's take a closer look at each of these sources and how they inform our understanding of MiraclePtr's effectiveness.

Bug reports

Chrome vulnerability reports come from various sources, such as:

Chrome Vulnerability Reward Program participants,

our fuzzing infrastructure,

internal and external teams investigating security incidents.

For the purposes of this analysis, we focus on vulnerabilities that affect platforms where MiraclePtr was enabled at the time the issues were reported. We also exclude bugs that occur inside a sandboxed renderer process. Since the initial launch of MiraclePtr in 2022, we have received 168 use-after-free reports matching our criteria.

What does the data tell us? MiraclePtr effectively mitigated 57% of these use-after-free vulnerabilities in privileged processes, exceeding our initial estimate of 50%. Reaching this level of effectiveness, however, required additional work. For instance, we not only rewrote class fields to use MiraclePtr, as discussed in the previous post, but also added MiraclePtr support for bound function arguments, such as Unretained pointers. These pointers have been a significant source of use-after-frees in Chrome, and the additional protection allowed us to mitigate 39 more issues.

Moreover, these vulnerability reports enable us to pinpoint areas needing improvement. We're actively working on adding support for select third-party libraries that have been a source of use-after-free bugs, as well as developing a more advanced rewriter tool that can handle transformations like converting std::vector<T*> into std::vector<raw_ptr<T>>. We've also made sever

|

Tool Vulnerability Threat Mobile | ★★★ | ||

| 2023-12-12 12:00:09 | Hardening cellular basebands in Android (direct link) |

Posted by Ivan Lozano and Roger Piqueras Jover

Android's defense-in-depth strategy applies not only to the Android OS running on the Application Processor (AP) but also the firmware that runs on devices. We particularly prioritize hardening the cellular baseband given its unique combination of running in an elevated privilege and parsing untrusted inputs that are remotely delivered into the device.

This post covers how to use two high-value sanitizers which can prevent specific classes of vulnerabilities found within the baseband. They are architecture agnostic, suitable for bare-metal deployment, and should be enabled in existing C/C++ code bases to mitigate unknown vulnerabilities. Beyond security, addressing the issues uncovered by these sanitizers improves code health and overall stability, reducing resources spent addressing bugs in the future.

An increasingly popular attack surface

As we outlined previously, security research focused on the baseband has highlighted a consistent lack of exploit mitigations in firmware. Baseband Remote Code Execution (RCE) exploits have their own categorization in well-known third-party marketplaces with a relatively low payout. This suggests baseband bugs may be abundant and/or not too complex to find and exploit, and their prominent inclusion in the marketplace demonstrates that they are useful.

Baseband security and exploitation has been a recurring theme in security conferences for the last decade. Researchers have also made a dent in this area in well-known exploitation contests. Most recently, this area has become prominent enough that it is common to find practical baseband exploitation trainings in top security conferences.

Acknowledging this trend, combined with the severity and apparent abundance of these vulnerabilities, last year we introduced updates to the severity guidelines of Android's Vulnerability Rewards Program (VRP). For example, we consider vulnerabilities allowing Remote Code Execution (RCE) in the cellular baseband to be of CRITICAL severity.

Mitigating Vulnerability Root Causes with Sanitizers

Common classes of vulnerabilities can be mitigated through the use of sanitizers provided by Clang-based toolchains. These sanitizers insert runtime checks against common classes of vulnerabilities. GCC-based toolchains may also provide some level of support for these flags, but will not be considered further in this post. We encourage you to check your toolchain's documentation.

Two sanitizers included in Undefine | Tool Vulnerability Threat Mobile Prediction Conference | ★★★ | ||

| 2023-11-20 11:49:31 | Two years later: a baseline that drives up security for the industry (direct link) |

Royal Hansen, Vice President of Privacy, Safety and Security Engineering, Google

Nearly half of third-parties fail to meet two or more of the Minimum Viable Secure Product controls. Why is this a problem? Because "98% of organizations have a relationship with at least one third-party that has experienced a breach in the last 2 years."

In this post, we're excited to share the latest improvements to the Minimum Viable Secure Product (MVSP) controls. We'll also shed light on how adoption of MVSP has helped Google improve its security processes, and hope this example will help motivate third-parties to increase their adoption of MVSP controls and thus improve product security across the industry.

About MVSP

In October 2021, Google publicly launched MVSP alongside launch partners. Our original goal remains unchanged: to provide a vendor-neutral application security baseline, designed to eliminate overhead, complexity, and confusion in the end-to-end process of onboarding third-party products and services. It covers themes such as procurement, security assessment, and contract negotiation.

Improvements since launch

As part of MVSP's annual control review, and our core philosophy of evolution over revolution |

Vulnerability Conference | ★★ | ||

| 2023-11-07 14:06:03 | MTE - The promising path forward for memory safety (direct link) |

Posted by Andy Qin, Irene Ang, Kostya Serebryany, Evgenii Stepanov

Since 2018, Google has partnered with ARM and collaborated with many ecosystem partners (SoC vendors, mobile phone OEMs, etc.) to develop Memory Tagging Extension (MTE) technology. We are now happy to share the growing adoption in the ecosystem. MTE is now available on some OEM devices (as noted in a recent blog post by Project Zero) with Android 14 as a developer option, enabling developers to use MTE to discover memory safety issues in their application easily.

The security landscape is changing dynamically, and new attacks are becoming more complex and costly to mitigate. It's becoming increasingly important to detect and prevent security vulnerabilities early in the software development cycle and also have the capability to mitigate the security attacks at the first moment of exploitation in production.

Memory-safety-related defects are the biggest contributor to security vulnerabilities, and Google has invested in a set of technologies to help mitigate memory safety risks. These include but are not limited to:

Shifting to memory safe languages such as Rust as a proactive solution to prevent the new memory safety bugs from being introduced in the first place.

Tools for detecting memory safety defects in the development stages and production environment, such as widely used sanitizer technologies (ASAN, HWASAN, GWP-ASAN, etc.) as well as fuzzing (with sanitizers enabled).

Foundational technologies like MTE, which many experts believe is the most promising path forward for improving C/C++ software security and which can be deployed both in development and production at reasonably low cost.

MTE is a hardware based capability that can detect unknown memory safety vulnerabilities in testing and/or mitigate them in production. It works by tagging the pointers and memory regions and comparing the tags to identify mismatches (details). In addition to the security benefits, MTE can also help ensure integrity because memory safety bugs remain one of the major contributors to silent data corruption that not only impact customer trust, but also cause lost productivity for software developers.

At the moment, MTE is supported on some of the latest chipsets:

Focusing on security for Android devices, the MediaTek Dimensity 9300 integrates support for MTE via ARM's latest v9 architecture (which is what Cortex-X4 and Cortex-A720 processors are based on). This feature can be switched on and off in the bootloader by users and developers instead of having it always on or always off.

Tensor G3 integrates support for MTE only within the developer mode toggle. The feature can be activated by developers.

For both chipsets, this feature can be switched on and off by developers, making it easier to find memory-related bugs during development and after deployment. MTE can help users stay safe while also improving time to market for OEMs.

Application develope | Vulnerability Mobile | ★★★ | ||
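The tag-and-compare mechanism described in the MTE entry above can be modelled in a few lines of Python. This is a toy simulation, not how hardware or any allocator implements MTE: the class `ToyTaggedMemory`, its method names, and the policy of always picking a tag that differs from neighbouring granules are assumptions made so that the example is deterministic. It does assume the commonly documented MTE parameters of 4-bit tags, 16-byte granules, and the tag held in pointer bits 59:56.

```python
import random

TAG_BITS = 4     # assumed tag width
GRANULE = 16     # assumed bytes of memory covered by one tag
TAG_SHIFT = 56   # assumed position of the tag inside a 64-bit pointer
TAG_MASK = (1 << TAG_BITS) - 1


class TagMismatch(Exception):
    """Raised when a pointer's tag differs from the memory granule's tag."""


class ToyTaggedMemory:
    def __init__(self, size: int, seed: int = 0):
        self.data = bytearray(size)
        self.granule_tags = [0] * (size // GRANULE)
        self.rng = random.Random(seed)

    def tag_region(self, addr: int, length: int) -> int:
        """Give a region a fresh tag and return a pointer carrying that tag."""
        first = addr // GRANULE
        last = (addr + length - 1) // GRANULE
        # Toy policy: avoid tags currently used by this region or its
        # neighbours, so adjacent overflows and stale pointers always mismatch.
        in_use = set(self.granule_tags[max(first - 1, 0):last + 2])
        choices = [t for t in range(1, 1 << TAG_BITS) if t not in in_use]
        tag = self.rng.choice(choices)
        for granule in range(first, last + 1):
            self.granule_tags[granule] = tag
        return (tag << TAG_SHIFT) | addr

    def load(self, pointer: int) -> int:
        tag = (pointer >> TAG_SHIFT) & TAG_MASK
        addr = pointer & ((1 << TAG_SHIFT) - 1)
        if self.granule_tags[addr // GRANULE] != tag:
            raise TagMismatch(f"pointer tag {tag} does not match memory tag")
        return self.data[addr]


mem = ToyTaggedMemory(64)
ptr = mem.tag_region(0, 16)   # "allocate" the first granule
in_bounds = mem.load(ptr + 15)
```

Reading `ptr + 16` crosses into a granule with a different tag and raises `TagMismatch`, and retagging the region (as an allocator might on free) makes the old `ptr` stale in the same way. With randomly chosen tags, as opposed to this toy policy, such detection would be probabilistic.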

| 2023-11-02 12:00:24 | More ways for users to identify independently security tested apps on Google Play (direct link) |

Posted by Nataliya Stanetsky, Android Security and Privacy Team

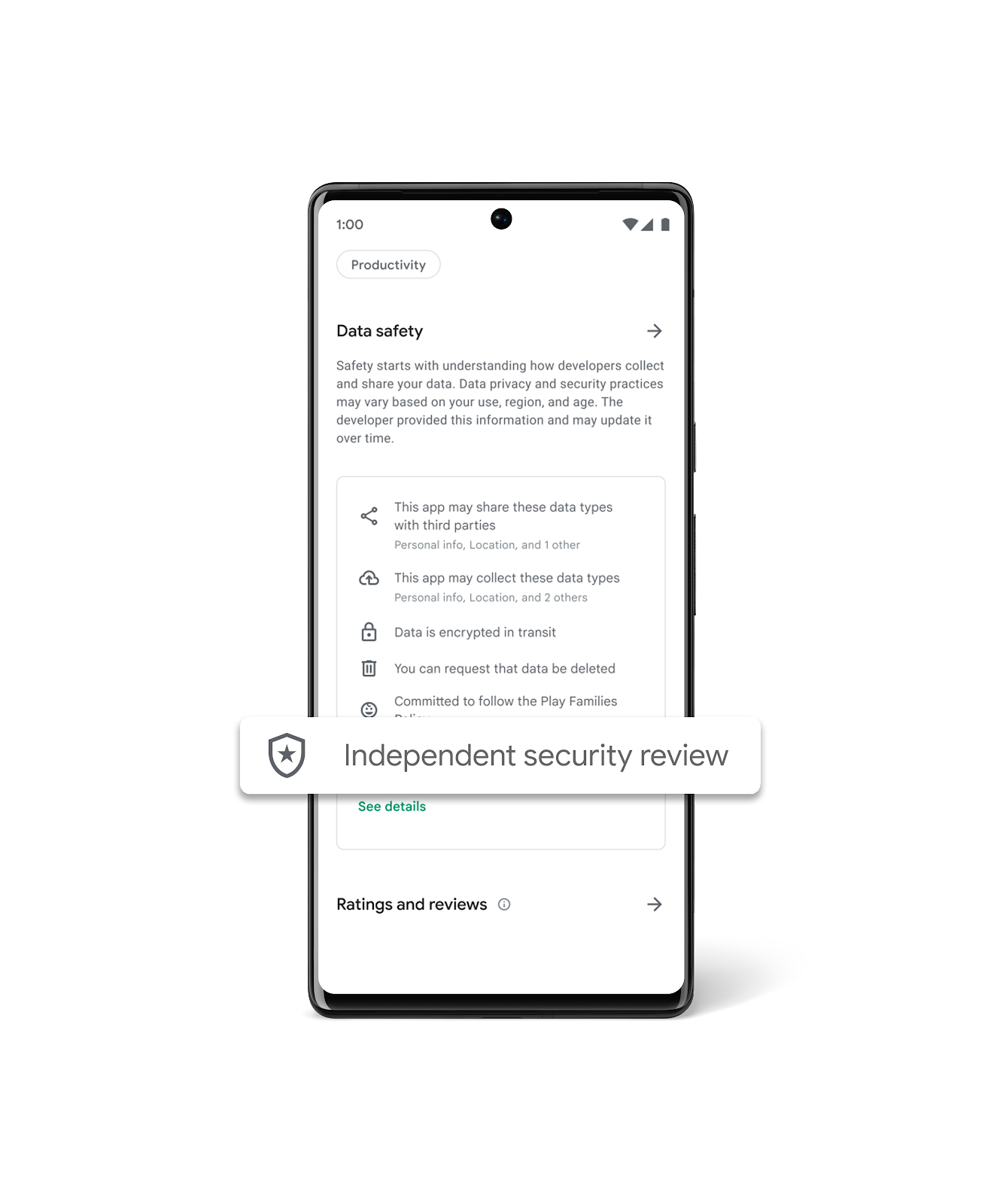

Keeping Google Play safe for users and developers remains a top priority for Google. As users increasingly prioritize their digital privacy and security, we continue to invest in our Data Safety section and transparency labeling efforts to help users make more informed choices about the apps they use.

Research shows that transparent security labeling plays a crucial role in consumer risk perception, building trust, and influencing product purchasing decisions. We believe the same principles apply for labeling and badging in the Google Play store. The transparency of an app's data security and privacy plays a key role in a user's decision to download, trust, and use an app.

Highlighting Independently Security Tested VPN Apps

Last year, App Defense Alliance (ADA) introduced MASA (Mobile App Security Assessment), which allows developers to have their apps independently validated against a global security standard. This signals to users that an independent third-party has validated that the developers designed their apps to meet these industry mobile security and privacy minimum best practices and the developers are going the extra mile to identify and mitigate vulnerabilities. This, in turn, makes it harder for attackers to reach users' devices and improves app quality across the ecosystem. Upon completion of the successful validation, Google Play gives developers the option to declare an “Independent security review” badge in its Data Safety section, as shown in the image below. While certification to baseline security standards does not imply that a product is free of vulnerabilities, the badge associated with these validated apps helps users see at-a-glance that a developer has prioritized security and privacy practices and committed to user safety.

To help give users a simplified view of which apps have undergone an independent security validation, we're introducing a new Google Play store banner for specific app types, starting with VPN apps. We've launched this banner beginning with VPN apps due to the sensitive and significant amount of user data these apps handle. When a user searches for VPN apps, they will now see a banner at the top of Google Play that educates them about the “Independent security review” badge in the Data Safety section. Users also have the ability to “Learn More”, which redirects them to the App Validation Directory, a centralized place to view all VPN apps that have been independently security reviewed. Users can also discover additional technical assessment details in the App Validation Directory, helping them to make more informed decisions about which VPN apps to download, use, and trust with their data.


|

Tool Vulnerability Mobile Technical | ★★ | ||

| 2023-10-26 08:49:41 | Increasing transparency in AI security (direct link) | Mihai Maruseac, Sarah Meiklejohn, Mark Lodato, Google Open Source Security Team (GOSST) New AI innovations and applications are reaching consumers and businesses on an almost-daily basis. Building AI securely is a paramount concern, and we believe that Google's Secure AI Framework (SAIF) can help chart a path for creating AI applications that users can trust. Today, we're highlighting two new ways to make information about AI supply chain security universally discoverable and verifiable, so that AI can be created and used responsibly. The first principle of SAIF is to ensure that the AI ecosystem has strong security foundations. In particular, the software supply chains for components specific to AI development, such as machine learning models, need to be secured against threats including model tampering, data poisoning, and the production of harmful content. Even as machine learning and artificial intelligence continue to evolve rapidly, some solutions are now within reach of ML creators. We're building on our prior work with the Open Source Security Foundation to show how ML model creators can and should protect against ML supply chain attacks by using | Malware Tool Vulnerability Threat Cloud | ★★ | ||

| 2023-10-26 08:00:33 | Google's reward criteria for reporting bugs in AI products (direct link) | Eduardo Vela, Jan Keller and Ryan Rinaldi, Google Engineering In September, we shared how we are implementing the voluntary AI commitments that we and others in industry made at the White House in July. One of the most important developments involves expanding our existing Bug Hunter Program to foster third-party discovery and reporting of issues and vulnerabilities specific to our AI systems. Today, we're publishing more details on these new reward program elements for the first time. Last year we issued over $12 million in rewards to security researchers who tested our products for vulnerabilities, and we expect today's announcement to fuel even greater collaboration for years to come. What's in scope for rewards In our recent AI Red Team report, we identified common tactics, techniques, and procedures (TTPs) that we consider most relevant and realistic for real-world adversaries to use against AI systems. The following table incorporates shared learnings from | Tool Vulnerability | ★★ | ||

| 2023-10-10 15:39:40 | Scaling BeyondCorp with AI-Assisted Access Control Policies (direct link) |

Ayush Khandelwal, Software Engineer, Michael Torres, Security Engineer, Hemil Patel, Technical Product Expert, Sameer Ladiwala, Software Engineer

In July 2023, four Googlers from the Enterprise Security and Access Security organizations developed SpeakACL, a tool aimed at revolutionizing the way Googlers interact with Access Control Lists. This tool, awarded the Gold Prize during Google's internal Security & AI Hackathon, allows developers to create or modify security policies using simple English instructions rather than having to learn system-specific syntax or complex security principles. This can save security and product teams hours of time and effort, while helping to protect the information of their users by encouraging the reduction of permitted access by adhering to the principle of least privilege.

Access Control Policies in BeyondCorp

Google requires developers and owners of enterprise applications to define their own access control policies, as described in BeyondCorp: The Access Proxy. We have invested in reducing the difficulty of self-service ACL and ACL test creation to encourage these service owners to define least privilege access control policies. However, it is still challenging to concisely transform their intent into the language acceptable to the access control engine. Additional complexity is added by the variety of engines, and corresponding policy definition languages that target different access control domains (e.g. websites, networks, RPC servers).

To adequately implement an access control policy, service developers are expected to learn various policy definition languages and their associated syntax, in addition to sufficiently understanding security concepts. As this takes time away from core developer work, it is not the most efficient use of developer time. A solution was required to remove these challenges so developers can focus on building innovative tools and products.

Making it Work

We built a prototype interface for interactively defining and modifying access control policies for the | Tool Vulnerability | ★★★ | ||

| 2023-10-09 12:30:13 | Bare-metal Rust in Android (direct link) |

Posted by Andrew Walbran, Android Rust Team

Last year we wrote about how moving native code in Android from C++ to Rust has resulted in fewer security vulnerabilities. Most of the components we mentioned then were system services in userspace (running under Linux), but these are not the only components typically written in memory-unsafe languages. Many security-critical components of an Android system run in a “bare-metal” environment, outside of the Linux kernel, and these are historically written in C. As part of our efforts to harden firmware on Android devices, we are increasingly using Rust in these bare-metal environments too.

To that end, we have rewritten the Android Virtualization Framework's protected VM (pVM) firmware in Rust to provide a memory-safe foundation for the pVM root of trust. This firmware performs a similar function to a bootloader, and was initially built on top of U-Boot, a widely used open source bootloader. However, U-Boot was not designed with security in a hostile environment in mind, and there have been numerous security vulnerabilities found in it due to out-of-bounds memory access, integer underflow and memory corruption. Its VirtIO drivers in particular had a number of missing or problematic bounds checks. We fixed the specific issues we found in U-Boot, but by leveraging Rust we can avoid these sorts of memory-safety vulnerabilities in future. The new Rust pVM firmware was released in Android 14.

As part of this effort, we contributed back to the Rust community by using and contributing to existing crates where possible, and publishing a number of new crates as well. For example, for VirtIO in pVM firmware we've spent time fixing bugs and soundness issues in the existing virtio-drivers crate, as well as adding new functionality, and are now helping maintain this crate. We've published crates for making PSCI and other Arm SMCCC calls, and for managing page tables. These are just a start; we plan to release more Rust crates to support bare-metal programming on a range of platforms. These crates are also being used outside of Android, such as in Project Oak and the bare-metal section of our Comprehensive Rust course.

Training engineers

Many engineers have been positively surprised by how p |

Tool Vulnerability | ★★ | ||

| 2023-10-06 10:21:05 | Expanding our exploit reward program to Chrome and Cloud (direct link) |

Stephen Roettger and Marios Pomonis, Google Software Engineers

In 2020, we launched a novel format for our vulnerability reward program (VRP) with the kCTF VRP and its continuation kernelCTF. For the first time, security researchers could get bounties for n-day exploits even if they didn't find the vulnerability themselves. This format proved valuable in improving our understanding of the most widely exploited parts of the Linux kernel. Its success motivated us to expand it to new areas and we're now excited to announce that we're extending it to two new targets: v8CTF and kvmCTF.

Today, we're launching v8CTF, a CTF focused on V8, the JavaScript engine that powers Chrome. kvmCTF is an upcoming CTF focused on Kernel-based Virtual Machine (KVM) that will be released later in the year.

As with kernelCTF, we will be paying bounties for successful exploits against these platforms, n-days included. This is on top of any existing rewards for the vulnerabilities themselves. For example, if you find a vulnerability in V8 and then write an exploit for it, it can be eligible under both the Chrome VRP and the v8CTF.

We're always looking for ways to improve the security posture of our products, and we want to learn from the security community to understand how they will approach this challenge. If you're successful, you'll not only earn a reward, but you'll also help us make our products more secure for everyone. This is also a good opportunity to learn about technologies and gain hands-on experience exploiting them.

Besides learning about exploitation techniques, we'll also leverage this program to experiment with new mitigation ideas and see how they perform against real-world exploits. For mitigations, it's crucial to assess their effectiveness early on in the process, and you can help us battle test them.

How do I participate? | Vulnerability Cloud | ★★ | ||

| 2023-09-27 12:51:29 | SMS Security & Privacy Gaps Make It Clear Users Need a Messaging Upgrade (direct link) |

Posted by Eugene Liderman and Roger Piqueras Jover

SMS texting is frozen in time.

People still use and rely on trillions of SMS texts each year to exchange messages with friends, share family photos, and copy two-factor authentication codes to access sensitive data in their bank accounts. It's hard to believe that at a time when technologies like AI are transforming our world, a forty-year-old mobile messaging standard is still so prevalent.

Like any forty-year-old technology, SMS is antiquated compared to its modern counterparts. That's especially concerning when it comes to security.

The World Has Changed, But SMS Hasn't Changed With It

According to a recent whitepaper from Dekra, a safety certifications and testing lab, the security shortcomings of SMS can notably lead to:

SMS Interception: Attackers can intercept SMS messages by exploiting vulnerabilities in mobile carrier networks. Because SMS offers no encryption, this can allow them to read the contents of SMS messages, including sensitive information such as two-factor authentication codes, passwords, and credit card numbers.

SMS Spoofing: Attackers can spoof SMS messages so that they appear to come from a legitimate sender, and use them to launch phishing attacks. This can be used to trick users into clicking on malicious links or revealing sensitive information. And because carrier networks have independently developed their approaches to deploying SMS texts over the years, the inability of carriers to exchange reputation signals to help identify fraudulent messages has made it tough to detect spoofed senders distributing potentially malicious messages.

These findings add to the well-established facts about SMS's weaknesses, lack of encryption chief among them.

Dekra also compared SMS against a modern secure messaging protocol and found it lacked any built-in security functionality. According to Dekra, SMS users can't answer 'yes' to any of the following basic security questions:

Confidentiality: Can I trust that no one else can read my SMSs?

Integrity: Can I trust that the content of the SMS that I receive is not modified?

Authentication: Can I trust the identity of the sender of the SMS that I receive?

But this isn't just theoretical: cybercriminals have also caught on to the lack of security protections SMS provides and have repeatedly exploited its weakness. Both novice hackers and advanced threat actor groups (such as UNC3944 / Scattered Spider and APT41 investigated by Mandiant, part of Google Cloud) leverage the security deficiencies in SMS to launch different |

Vulnerability Threat Studies | APT 41 | ★★★ | |

| 2023-09-15 14:11:38 | Capslock: What is your code really capable of? (direct link) |

Jess McClintock and John Dethridge, Google Open Source Security Team, and Damien Miller, Enterprise Infrastructure Protection Team

When you import a third party library, do you review every line of code? Most software packages depend on external libraries, trusting that those packages aren't doing anything unexpected. If that trust is violated, the consequences can be huge, regardless of whether the package is malicious, or well-intended but using overly broad permissions, such as with Log4j in 2021. Supply chain security is a growing issue, and we hope that greater transparency into package capabilities will help make secure coding easier for everyone.

Avoiding bad dependencies can be hard without appropriate information on what the dependency's code actually does, and reviewing every line of that code is an immense task. Every dependency also brings its own dependencies, compounding the need for review across an expanding web of transitive dependencies. But what if there was an easy way to know the capabilities (the privileged operations accessed by the code) of your dependencies? Capslock is a capability analysis CLI tool that informs users of privileged operations (like network access and arbitrary code execution) in a given package and its dependencies. Last month we published the alpha version of Capslock for the Go language, which can analyze and report on the capabilities that are used beneath the surface of open source software. | Tool Vulnerability | ★★ | ||

| 2023-08-29 12:06:35 | Android Goes All-in on Fuzzing (direct link) |

Posted by Jon Bottarini and Hamzeh Zawawy, Android Security

Fuzzing is an effective technique for finding software vulnerabilities. Over the past few years Android has been focused on improving the effectiveness, scope, and convenience of fuzzing across the organization. This effort has directly resulted in improved test coverage, fewer security/stability bugs, and higher code quality. Our implementation of continuous fuzzing allows software teams to find new bugs/vulnerabilities, and prevent regressions automatically without having to manually initiate fuzzing runs themselves. This post recounts a brief history of fuzzing on Android, shares how Google performs fuzzing at scale, and documents our experience, challenges, and success in building an infrastructure for automating fuzzing across Android. If you're interested in contributing to fuzzing on Android, we've included instructions on how to get started, and information on how Android's VRP rewards fuzzing contributions that find vulnerabilities.

A Brief History of Android Fuzzing

Fuzzing has been around for many years, and Android was among the early large software projects to automate fuzzing and prioritize it similarly to unit testing as part of the broader goal to make Android the most secure and stable operating system. In 2019 Android kicked off the fuzzing project, with the goal to help institutionalize fuzzing by making it seamless and part of code submission. The Android fuzzing project resulted in an infrastructure consisting of Pixel phones and Google Cloud-based virtual devices that enabled scalable fuzzing capabilities across the entire Android ecosystem. This project has since grown to become the official internal fuzzing infrastructure for Android and performs thousands of fuzzing hours per day across hundreds of fuzzers.

Under the Hood: How Is Android Fuzzed

Step 1: Define and find all the fuzzers in Android repo

The first step is to integrate fuzzing into the Android build system (Soong) so that it can build fuzzer binaries. While developers are busy adding features to their codebase, they can include a fuzzer to fuzz their code and submit the fuzzer alongside the code they have developed. Android Fuzzing uses a build rule called cc_fuzz (see example below). cc_fuzz (we also support rust_fuzz and java_fuzz) defines a Soong module with source file(s) and dependencies that can be built into a binary.

    cc_fuzz {
        name: "fuzzer_foo",
        srcs: [
            "fuzzer_foo.cpp",
        ],
        static_libs: [
            "libfoo",
        ],
        host_supported: true,
    }

A packaging rule in Soong finds all of these cc_fuzz definitions and builds them automatically. The actual fuzzer structure itself is very simple and consists of one main method (LLVMFuzzerTestOneInput):

    #include <stddef.h>
    #include <stdint.h>

    extern "C" int LLVMFuzzerTestOneInput(
        const uint8_t *data,
        size_t size) {
      // Here you invoke the code to be fuzzed.
      return 0;
    }
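To make the harness shape above more concrete, here is a minimal sketch of a fuzz target that exercises a small parser. `ParseRecord` and its length-prefixed record format are hypothetical, invented purely for illustration and not taken from Android or the original post; only the `LLVMFuzzerTestOneInput` entry point comes from the text. The assertion encodes an invariant that the fuzzing engine can try to break.

```cpp
#include <cassert>
#include <stddef.h>
#include <stdint.h>

// Hypothetical code under test (an assumption for this sketch):
// byte 0 holds a payload length, followed by that many payload bytes.
// Returns the number of bytes consumed, or 0 if the input is malformed.
static size_t ParseRecord(const uint8_t *data, size_t size) {
  if (size < 1) return 0;                // need at least the length byte
  const size_t payload_len = data[0];
  if (payload_len > size - 1) return 0;  // reject truncated payloads
  return 1 + payload_len;
}

// Fuzz target entry point: the engine calls this with each generated input.
extern "C" int LLVMFuzzerTestOneInput(const uint8_t *data, size_t size) {
  const size_t consumed = ParseRecord(data, size);
  // Invariant: the parser must never claim more bytes than it was given.
  assert(consumed <= size);
  return 0;
}
```

When this is built as a libFuzzer target, the engine supplies `main` and calls the entry point repeatedly with mutated inputs; a failed assertion or a sanitizer finding is reported as a crash together with the input that triggered it.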

This fuzzer gets automatically built into a binary and along with its static/dynamic dependencies (as specified in the Android build file) are pack |

Vulnerability Cloud | ★★ | ||

| 2023-08-16 13:03:58 | AI-Powered Fuzzing: Breaking the Bug Hunting Barrier (direct link) |

Dongge Liu, Jonathan Metzman, Oliver Chang, Google Open Source Security Team

Since 2016, OSS-Fuzz has been at the forefront of automated vulnerability discovery for open source projects. Vulnerability discovery is an important part of keeping software supply chains secure, so our team is constantly working to improve OSS-Fuzz. For the last few months, we've tested whether we could boost OSS-Fuzz's performance using Google's Large Language Models (LLMs).

This blog post shares our experience of successfully applying the generative power of LLMs to improve the automated vulnerability detection technique known as fuzz testing (“fuzzing”). By using LLMs, we're able to increase the code coverage for critical projects using our OSS-Fuzz service without manually writing additional code. Using LLMs is a promising new way to scale security improvements across the over 1,000 projects currently fuzzed by OSS-Fuzz and to remove barriers to future projects adopting fuzzing.

LLM-aided fuzzing

We created the OSS-Fuzz service to help open source developers find bugs in their code at scale, especially bugs that indicate security vulnerabilities. After more than six years of running OSS-Fuzz, we now support over 1,000 open source projects with continuous fuzzing, free of charge. As the Heartbleed vulnerability showed us, bugs that could be easily found with automated fuzzing can have devastating effects. For most open source developers, setting up their own fuzzing solution could cost time and resources. With OSS-Fuzz, developers are able to integrate their project for free, automated bug discovery at scale. | Vulnerability Cloud | ★★ | ||

| 2023-08-08 13:33:00 | Downfall and Zenbleed: Googlers helping secure the ecosystem (direct link) |

Tavis Ormandy, Software Engineer and Daniel Moghimi, Senior Research Scientist

Finding and mitigating security vulnerabilities is critical to keeping Internet users safe. However, the more complex a system becomes, the harder it is to secure, and that is also the case with computing hardware and processors, which have developed highly advanced capabilities over the years. This post will detail this trend by exploring Downfall and Zenbleed, two new security vulnerabilities (one of which was disclosed today) that prior to mitigation had the potential to affect billions of personal and cloud computers, signifying the importance of vulnerability research and cross-industry collaboration. Had these vulnerabilities not been discovered by Google researchers, and instead by adversaries, they would have enabled attackers to compromise Internet users. For both vulnerabilities, Google worked closely with our partners in the industry to develop fixes, deploy mitigations and gather details to share widely and better secure the ecosystem.

What are Downfall and Zenbleed?

Downfall (CVE-2022-40982) and Zenbleed (CVE-2023-20593) are two different vulnerabilities affecting CPUs - Intel Core (6th - 11th generation) and AMD Zen2, respectively. They allow an attacker to violate the software-hardware boundary established in modern processors. This could allow an attacker to access data in internal hardware registers that hold information belonging to other users of the system (both across different virtual machines and different processes). These vulnerabilities arise from complex optimizations in modern CPUs tha | Vulnerability Prediction Cloud | ★★ | ||

| 2023-07-27 12:01:55 | The Ups and Downs of 0-days: A Year in Review of 0-days Exploited In-the-Wild in 2022 (direct link) |

Maddie Stone, Security Researcher, Threat Analysis Group (TAG)

This is Google's fourth annual year-in-review of 0-days exploited in-the-wild [2021, 2020, 2019] and builds off of the mid-year 2022 review. The goal of this report is not to detail each individual exploit, but instead to analyze the exploits from the year as a whole, looking for trends, gaps, lessons learned, and successes.

Executive Summary

41 in-the-wild 0-days were detected and disclosed in 2022, the second-most ever recorded since we began tracking in mid-2014, but down from the 69 detected in 2021. Although a 40% drop might seem like a clear-cut win for improving security, the reality is more complicated. Some of our key takeaways from 2022 include:

N-days function like 0-days on Android due to long patching times. Across the Android ecosystem there were multiple cases where patches were not available to users for a significant time. Attackers didn't need 0-day exploits and instead were able to use n-days that functioned as 0-days. | Tool Vulnerability Threat Prediction Conference | ★★★ | ||

| 2023-07-20 16:03:33 | Supply chain security for Go, Part 3: Shifting left (direct link) |

Julie Qiu, Go Security & Reliability and Jonathan Metzman, Google Open Source Security Team

Previously in our Supply chain security for Go series, we covered dependency and vulnerability management tools and how Go ensures package integrity and availability as part of the commitment to countering the rise in supply chain attacks in recent years. In this final installment, we'll discuss how “shift left” security can help make sure you have the security information you need, when you need it, to avoid unwelcome surprises.

Shifting left

The software development life cycle (SDLC) refers to the series of steps that a software project goes through, from planning all the way through operation. It's a cycle because once code has been released, the process continues and repeats through actions like coding new features, addressing bugs, and more. |

Tool Vulnerability | ★★ | ||

| 2023-06-22 12:05:42 | Google Cloud Awards $313,337 in 2022 VRP Prizes (direct link) |

Anthony Weems, Information Security Engineer

2022 was a successful year for Google's Vulnerability Reward Programs (VRPs), with over 2,900 security issues identified and fixed, and over $12 million in bounty rewards awarded to researchers. A significant number of these vulnerability reports helped improve the security of Google Cloud products, which in turn helps improve security for our users, customers, and the Internet at large.

We first announced the Google Cloud VRP Prize in 2019 to encourage security researchers to focus on the security of Google Cloud and to incentivize sharing knowledge on Cloud vulnerability research with the world. This year, we were excited to see an increase in collaboration between researchers, which often led to more detailed and complex vulnerability reports. After careful evaluation of the submissions, today we are excited to announce the winners of the 2022 Google Cloud VRP Prize.

2022 Google Cloud VRP Prize Winners

1st Prize - $133,337: Yuval Avrahami for the report and write-up Privilege escalations in GKE Autopilot. Yuval's excellent write-up describes several attack paths that would allow an attacker with permission to create pods in an Autopilot cluster to escalate privileges and compromise the underlying node VMs. While thes | Vulnerability Cloud | Uber | ★★ | |

| 2023-06-14 11:59:49 | Learnings from kCTF VRP's 42 Linux kernel exploits submissions (direct link) |

Tamás Koczka, Security Engineer

In 2020, we integrated kCTF into Google's Vulnerability Rewards Program (VRP) to support researchers evaluating the security of Google Kubernetes Engine (GKE) and the underlying Linux kernel. As the Linux kernel is a key component not just for Google, but for the Internet, we started heavily investing in this area. We extended the VRP's scope and maximum reward in 2021 (to $50k), then again in February 2022 (to $91k), and finally in August 2022 (to $133k). In 2022, we also summarized our learnings to date in our cookbook, and introduced our experimental mitigations for the most common exploitation techniques.

In this post, we'd like to share our learnings and statistics about the latest Linux kernel exploit submissions, how effective our | Vulnerability | Uber | ★★ | |

| 2023-06-01 11:59:52 | Annonce du bonus d'exploitation en pleine chaîne du navigateur Chrome Announcing the Chrome Browser Full Chain Exploit Bonus (lien direct) |

Amy Ressler, Chrome Security Team au nom du Chrome VRP Depuis 13 ans, un pilier clé de l'écosystème de sécurité chromée a inclus des chercheurs en sécurité à trouver des vulnérabilités de sécurité dans Chrome Browser et à nous les signaler, à travers le Programme de récompenses de vulnérabilité Chrome . À partir d'aujourd'hui et jusqu'au 1er décembre 2023, le premier rapport de bogue de sécurité que nous recevons avec un exploit fonctionnel de la chaîne complète, résultant en une évasion chromée de sable, est éligible à triple le montant de la récompense complet .Votre exploit en pleine chaîne pourrait entraîner une récompense pouvant atteindre 180 000 $ (potentiellement plus avec d'autres bonus). Toutes les chaînes complètes ultérieures soumises pendant cette période sont éligibles pour doubler le montant de récompense complet ! Nous avons historiquement mis une prime sur les rapports avec les exploits & # 8211;«Des rapports de haute qualité avec un exploit fonctionnel» est le niveau le plus élevé de montants de récompense dans notre programme de récompenses de vulnérabilité.Au fil des ans, le modèle de menace de Chrome Browser a évolué à mesure que les fonctionnalités ont mûri et de nouvelles fonctionnalités et de nouvelles atténuations, tels a miracleptr , ont été introduits.Compte tenu de ces évolutions, nous sommes toujours intéressés par les explorations d'approches nouvelles et nouvelles pour exploiter pleinement le navigateur Chrome et nous voulons offrir des opportunités pour mieux inciter ce type de recherche.Ces exploits nous fournissent un aperçu précieux des vecteurs d'attaque potentiels pour exploiter Chrome et nous permettent d'identifier des stratégies pour un meilleur durcissement des caractéristiques et des idées de chrome spécifiques pour de futures stratégies d'atténuation à grande échelle. 
The full details of this bonus opportunity are available on the Chrome VRP Rules and Rewards page. The summary is as follows: Bug reports may be submitted in advance while exploit development continues during this 180-day window. Functional exploits must be submitted to Chrome by the end of the 180-day window to be eligible for the triple or double reward. The first functional full chain exploit we receive is eligible for the triple reward amount. The full chain exploit must result in a Chrome browser sandbox escape, with a demonstration of attacker control / code execution outside of the sandbox. Exploitation must be possible remotely, with no or very limited reliance on user interaction. The exploit must have been functional in an active release channel of Chrome (Dev, Beta, Stable, Extended Stable) at the time of the initial reports of the bugs in that chain. Please do not submit exploits developed from publicly disclosed security bugs or other artifacts in old, past versions of Chrome. As is consistent with our general rewards policy, if the exploit allows for remote code execution (RCE) in the browser or another highly privileged process, such as the network or GPU process, resulting in a sandbox escape without the need for a first-stage bug, the reward amount for renderer RCE "high quality report with functional exploit" would be granted and included in the calculation of the bonus reward total. Based on our | Vulnerability Threat | ★★★ | ||
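
The bonus arithmetic summarized above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name, the inclusive treatment of the 1 December 2023 deadline, and the $60,000 base amount are not from the post (the base is simply the value that makes a triple reward equal the quoted $180,000 ceiling); the Chrome VRP rules page remains the authority.

```python
from datetime import date

# End of the bonus period named in the post; treating the day itself as
# inside the window is an assumption of this sketch.
BONUS_DEADLINE = date(2023, 12, 1)


def bonus_reward(base_reward: int, is_first_full_chain: bool, submitted: date) -> int:
    """Apply the full chain bonus multiplier to a base reward amount."""
    if submitted > BONUS_DEADLINE:
        return base_reward  # outside the bonus window: no multiplier
    # First functional full chain gets triple; later ones get double.
    multiplier = 3 if is_first_full_chain else 2
    return base_reward * multiplier


# Hypothetical base amount, chosen so that tripling it gives $180,000.
ASSUMED_BASE = 60_000

first = bonus_reward(ASSUMED_BASE, True, date(2023, 7, 1))
later = bonus_reward(ASSUMED_BASE, False, date(2023, 7, 1))
print(first, later)
```

Other bonuses mentioned in the post ("potentially more with other bonuses") are deliberately left out, since the excerpt does not describe how they combine.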

| 2023-05-24 12:49:28 | Announcing the launch of GUAC v0.1 (direct link) |

Brandon Lum and Mihai Maruseac, Google Open Source Security Team. Today, we are announcing the launch of the v0.1 version of Graph for Understanding Artifact Composition (GUAC). Introduced at KubeCon 2022 in October, GUAC targets a critical need in the software industry to understand the software supply chain. In collaboration with Kusari, Purdue University, Citi, and community members, we have incorporated feedback from our early testers to improve GUAC and make it more useful for security professionals. This improved version is now available as an API for you to start developing on top of, and integrating into, your systems. The need for GUAC: High-profile incidents such as SolarWinds, and the recent 3CX supply chain double-exposure, are evidence that supply chain attacks are getting more sophisticated. As highlighted by the | Tool Vulnerability Threat | Yahoo | ★★ | |

| 2023-05-17 11:59:38 | New Android & Google Device Vulnerability Reward Program Initiatives (direct link) |

Posted by Sarah Jacobus, Vulnerability Rewards Team. As technology continues to advance, so do the efforts of cybercriminals who look to exploit vulnerabilities in software and devices. This is why at Google and Android, security is a top priority, and we are constantly working to make our products more secure. One way we do this is through our Vulnerability Reward Programs (VRP), which incentivize security researchers to find and report vulnerabilities in our operating system and devices. We are pleased to announce that we are implementing a new quality rating system for security vulnerability reports, to encourage more security research in the higher-impact areas of our products and to keep our users secure. This system will rate vulnerability reports as High, Medium, or Low quality based on the level of detail provided in the report. We believe this new system will encourage researchers to provide more detailed reports, which will help us address reported issues more quickly and enable researchers to receive higher bounty rewards. The highest-quality and most critical vulnerabilities are now eligible for larger rewards of up to $15,000! There are a few key elements we are looking for: Accurate and detailed description: A report should clearly and accurately describe the vulnerability, including the device name and version. The description should be detailed enough to easily understand the issue and begin working on a fix.
Root cause analysis: A report should include a full root cause analysis that describes why the issue is occurring and what Android source code should be patched to fix it. This analysis should be thorough and provide enough information to understand the underlying cause of the vulnerability. Proof of concept: A report should include a proof of concept that effectively demonstrates the vulnerability. This can include video recordings, debugger output, or other relevant information. The proof of concept should be of high quality and include the minimum amount of code possible to demonstrate the issue. Reproducibility: A report should include a step-by-step explanation of how to reproduce the vulnerability on an eligible device running the latest version. This information should be clear and concise, and should allow our engineers to easily reproduce the issue and begin working on a fix. Evidence of reachability: Finally, a report should include evidence or analysis that demonstrates the type of issue and the level of access or execution achieved. * Note: these criteria may change over time. For the most up-to-date information, please refer to our public rules page. Additionally, starting March 15, 2023, Android will no longer assign Common Vulnerabilities and Exposures (CVE) identifiers to most moderate severity issues. CVEs will continue to be assigned to critical and high severity vulnerabilities. We believe that incentivizing researchers to provide high-quality reports will benefit both the broader security community and our | Vulnerability | ★★ | ||

| 2023-04-13 12:04:31 | Supply chain security for Go, Part 1: Vulnerability management (direct link) |

Posted by Julie Qiu, Go Security & Reliability, and Oliver Chang, Google Open Source Security Team. High profile open source vulnerabilities have made it clear that securing the supply chains underpinning modern software is an urgent, yet enormous, undertaking. As supply chains get more complicated, enterprise developers need to manage the tidal wave of vulnerabilities that propagate up through dependency trees. Open source maintainers need streamlined ways to vet proposed dependencies and protect their projects. A rise in attacks coupled with increasingly complex supply chains means that supply chain security problems need solutions on the ecosystem level. One way developers can manage this enormous risk is by choosing a more secure language. As part of Google's commitment to advancing cybersecurity and securing the software supply chain, Go maintainers are focused this year on hardening supply chain security, streamlining security information to our users, and making it easier than ever to make good security choices in Go. This is the first in a series of blog posts about how developers and enterprises can secure their supply chains with Go. Today's post covers how Go helps teams with the tricky problem of managing vulnerabilities in their open source packages. Extensive Package Insights: Before adopting a dependency, it's important to have high-quality information about the package. Seamless access to comprehensive information can be the difference between an informed choice and a future security incident from a vulnerability in your supply chain. Along with providing package documentation and version history, the Go package discovery site links to Open Source Insights. The Open Source Insights page includes vulnerability information, a dependency tree, and a security score provided by the OpenSSF Scorecard project.
Scorecard evaluates projects on more than a dozen security metrics, each backed up with supporting information, and assigns the project an overall score out of ten to help users quickly judge its security stance (example). The Go package discovery site puts all these resources at developers' fingertips when they need them most: before taking on a potentially risky dependency. Curated Vulnerability Information: Large consumers of open source software must manage many packages and a high volume of vulnerabilities. For enterprise teams, filtering out noisy, low quality advisories and false positives from critical vulnerabilities is often the most important task in vulnerability management. If it is difficult to tell which vulnerabilities are important, it is impossible to properly prioritize their remediation. With granular advisory details, the Go vulnerability database removes barriers to vulnerability prioritization and remediation. All vulnerability database entries are reviewed and curated by the Go security team. As a result, entries are accurate and include detailed metadata to improve the quality of vulnerability scans and to make vulnerability information more actionable. This metadata includes information on affected functions, operating systems, and architectures. With this information, vulnerability scanners can reduce the number of false po | Tool Vulnerability | ★★ | ||
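
To make the idea of metadata-driven filtering concrete, here is a minimal Python sketch of how a scanner might use affected functions, operating systems, and architectures to discard advisories that cannot apply to a given build. Everything in it is hypothetical: the `Advisory` fields, the `is_relevant` helper, and the `EXAMPLE-…` identifiers are invented for illustration and do not reflect the Go vulnerability database schema or any real scanner.

```python
from dataclasses import dataclass, field


@dataclass
class Advisory:
    """Hypothetical advisory record; not the real database schema."""
    advisory_id: str
    package: str
    affected_symbols: set
    goos: set = field(default_factory=set)    # empty set = every OS
    goarch: set = field(default_factory=set)  # empty set = every architecture


def is_relevant(adv: Advisory, called_symbols: set, goos: str, goarch: str) -> bool:
    """Keep an advisory only if the platform matches and an affected symbol is called."""
    if adv.goos and goos not in adv.goos:
        return False
    if adv.goarch and goarch not in adv.goarch:
        return False
    return bool(adv.affected_symbols & called_symbols)


advisories = [
    Advisory("EXAMPLE-0001", "example.com/archive", {"Reader.Open"}, goos={"windows"}),
    Advisory("EXAMPLE-0002", "example.com/parser", {"Parse"}),
    Advisory("EXAMPLE-0003", "example.com/parser", {"Decode"}),
]

# Symbols the (hypothetical) program actually calls, and its build target.
called = {"Parse", "Reader.Open"}
hits = [a.advisory_id for a in advisories if is_relevant(a, called, "linux", "amd64")]
print(hits)
```

A package-level match alone would flag all three advisories here; checking the platform and the called symbols narrows that to the one the build can actually reach.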

| 2023-04-11 12:11:33 | Announcing the deps.dev API: critical dependency data for secure supply chains (direct link) |

Posted by Jesper Sarnesjo and Nicky Ringland, Google Open Source Security Team. Today, we are excited to announce the deps.dev API, which provides free access to the deps.dev dataset of security metadata, including dependencies, licenses, advisories, and other critical health and security signals for more than 50 million open source package versions. Software supply chain attacks are increasingly common and harmful, with high profile incidents such as Log4Shell, Codecov, and the recent 3CX hack. The overwhelming complexity of the software ecosystem causes trouble for even the most diligent and well-resourced developers. We hope the deps.dev API will help the community make sense of complex dependency data that allows them to respond to, or even prevent, these types of attacks. By integrating this data into tools, workflows, and analyses, developers can more easily understand the risks in their software supply chains. The power of dependency data: As part of Google's ongoing efforts to improve open source security, the Open Source Insights team has built a reliable view of software metadata across 5 packaging ecosystems. The deps.dev data set is continuously updated from a range of sources: package registries, the Open Source Vulnerability database, code hosts such as GitHub and GitLab, and the software artifacts themselves. This includes 5 million packages, more than 50 million versions, from the Go, Maven, PyPI, npm, and Cargo ecosystems, and you'd better believe we're counting them! We collect and aggregate this data and derive transitive dependency graphs, advisory impact reports, OpenSSF Security Scorecard information, and more. Where the deps.dev website allows human exploration and examination, and the BigQuery dataset supports large-scale bulk data analysis, this new API enables programmatic, real-time access to the corpus for integration into tools, workflows, and analyses.
The API is used by a number of teams internally at Google to support the security of our own products. One of the first publicly visible uses is the GUAC integration, which uses the deps.dev data to enrich SBOMs. We have more exciting integrations in the works, but we're most excited to see what the greater open source community builds! We see the API as being useful for tool builders, researchers, and tinkerers who want to answer questions like: What versions are available for this package? What are the licenses that cover this version of a package, or all the packages in my codebase? How many dependencies does this package have? What are they? Does the latest version of this package include changes to dependencies or licenses? What versions of what packages correspond to this file? Taken together, this information can help answer the most important overarching question: how much risk would this dependency add to my project? The API can help surface critical security information where and when developers can act. This data can be integrated into: IDE Plugins, to make dependency and security information immediately available. CI | Tool Vulnerability | ★★ | ||
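
As a rough illustration of the first question above ("What versions are available for this package?"), the following Python sketch builds a package lookup URL and extracts version strings from a response. It rests on assumptions not stated in the post: the `https://api.deps.dev/v3` root, the `/systems/{system}/packages/{name}` path layout, and the `versions[].versionKey.version` response shape should all be checked against the current deps.dev API documentation. The helper names and the sample payload are invented for this example.

```python
import json
from urllib.parse import quote
from urllib.request import urlopen

# Assumed API root and path layout; verify against the deps.dev documentation.
API_ROOT = "https://api.deps.dev/v3"


def package_url(system: str, name: str) -> str:
    """Build a package lookup URL, percent-encoding '/' and '@' in the name."""
    return f"{API_ROOT}/systems/{quote(system, safe='')}/packages/{quote(name, safe='')}"


def version_strings(payload: dict) -> list:
    """Pull version strings out of a response with the assumed shape."""
    return [v["versionKey"]["version"] for v in payload.get("versions", [])]


def fetch_versions(system: str, name: str) -> list:
    """Perform the network request (not exercised by the offline demo below)."""
    with urlopen(package_url(system, name)) as resp:
        return version_strings(json.load(resp))


# Invented sample payload in the assumed response shape, for an offline demo.
sample = {
    "versions": [
        {"versionKey": {"system": "NPM", "name": "example-pkg", "version": "1.0.0"}},
        {"versionKey": {"system": "NPM", "name": "example-pkg", "version": "1.1.0"}},
    ]
}

url = package_url("npm", "@types/node")
versions = version_strings(sample)
print(url)
print(versions)
```

Percent-encoding the package name matters for ecosystems where names contain slashes, such as Go module paths or scoped npm packages; without it the name would be split across path segments.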