What's new around the Internet

| Src | Date (GMT) | Title | Description | Tags | Stories | Notes |

| 2021-09-24 03:51:21 | Check: that Republican audit of Maricopa (direct link) | Author: Robert Graham (@erratarob). Later today (Friday, September 24, 2021), Republican auditors release their final report on what they found with elections in Maricopa County. Draft copies have circulated online. In this blogpost, I write up my comments on the cybersecurity portions of their draft. https://arizonaagenda.substack.com/p/we-got-the-senate-audit-report The three main problems are: (1) They misapply cybersecurity principles that are meaningful for normal networks, but which don't really apply to the air-gapped networks we see here. (2) They make some errors about technology, especially networking. (3) They are overstretching themselves to find dirt, claiming the things they don't understand are evidence of something bad. In the parts below, I pick apart individual pieces from that document to demonstrate these criticisms. I focus on section 7, the cybersecurity section, and ignore the other parts of the document, where others are more qualified than I to opine. In short, when corrected, section 7 is nearly empty of any content. 7.5.2.1.1 Software and Patch Management, part 1: They claim Dominion is defective at one of the best-known cybersecurity issues: applying patches. It's not true. The systems are “air gapped”, disconnected from the typical sort of threat that exploits unpatched systems. The primary security of the system is physical. This is standard in other industries with hard reliability constraints, like industrial or medical. Patches in those systems can destabilize systems and kill people, so these industries are risk averse. They prefer to mitigate the threat in other ways, such as with firewalls and air gaps. Yes, this approach is controversial. There are some in the cybersecurity community who use lack of patches as a bludgeon with which to bully any who don't apply every patch immediately. But this is because patching is more a political issue than a technical one. 
In the real, non-political world we live in, most things don't get immediately patched all the time. 7.5.2.1.1 Software and Patch Management, part 2: They claim new software executables were applied to the system, despite the rules against new software being applied. This isn't necessarily true. There are many reasons why Windows may create new software executables even when no new software is added. One reason is “Features on Demand” or FOD. You'll see new executables appear in C:\Windows\WinSxS for these. Another reason is .NET, which causes binary x86 executables to be created from bytecode. You'll see this in the C:\Windows\assembly directory. The auditors simply counted the number of new executables, with no indication which category they fell in. Maybe they are right, maybe new software was installed or old software updated. It's just that their mere counting of executable files doesn't show understanding of these differences. 7.5.2.1.2 Log Management: The auditors claim that a central log management system should be used. This obviously wouldn't apply to “air gapped” systems, because it would need a connection to an external network. Dominion already designates their EMSERVER as the central log repository for their little air-gapped network. Important files from C: are copied to D:, a RAID10 drive. This is a perfectly adequate solution; adding yet another computer to their little network would be overkill, and would add as many security problems as it solved. One could argue more Windows logs need to be preserved, but that would simply mean archiving them from the C: drive onto the D: drive, not that you need to connect to the Internet to centrally log files. 7.5.2.1.3 Credential Management: Like the other sections, this claim is out of place | Threat Patching | |||
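
The excerpt's point about counting executables without categorizing them can be illustrated with a small sketch. It is not the auditors' method or the author's tooling, just a minimal example that assumes you already have a list of file paths; the function names and the sample paths are hypothetical, and the two directories are the ones named in the excerpt.

```python
from collections import Counter
from pathlib import PureWindowsPath

# Directories named in the excerpt where Windows itself may create executables.
# The labels are illustrative, not official Microsoft terminology.
SYSTEM_GENERATED = {
    PureWindowsPath(r"C:\Windows\WinSxS"): "features-on-demand (WinSxS)",
    PureWindowsPath(r"C:\Windows\assembly"): ".NET native images (assembly)",
}


def classify(path: str) -> str:
    """Return a category label for one executable path (hypothetical helper)."""
    p = PureWindowsPath(path)
    for root, label in SYSTEM_GENERATED.items():
        # PureWindowsPath comparison is case-insensitive, like Windows itself.
        if root in p.parents:
            return label
    return "other (needs a closer look)"


def summarize(paths):
    """Count executables per category instead of reporting one raw total."""
    return Counter(classify(p) for p in paths)


if __name__ == "__main__":
    sample = [
        r"C:\Windows\WinSxS\amd64_example\tool.exe",
        r"c:\windows\assembly\NativeImages\lib.ni.dll",
        r"C:\Program Files\Vendor\app.exe",
    ]
    for label, count in summarize(sample).items():
        print(f"{count:3d}  {label}")
```

Only the files in the last category would suggest that new software was actually installed; the other two are consistent with Windows housekeeping.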

| 2020-05-19 18:03:23 | Securing work-at-home apps (direct link) | In today's post, I answer the following question: Our customer's employees are now using our corporate application while working from home. They are concerned about security, protecting their trade secrets. What security feature can we add for these customers? The tl;dr answer is this: don't add gimmicky features, but instead, take this opportunity to do security things you should already be doing, starting with a "vulnerability disclosure program" or "vuln program". Gimmicks: First of all, I'd like to discourage you from adding security gimmicks to your product. You are no more likely to come up with an exciting new security feature on your own than you are to come up with a miracle cure for COVID-19. Your sales and marketing people may get excited about the feature, and they may get the customer excited about it too, but the excitement won't last. Eventually, the customer's IT and cybersecurity teams will be brought in. They'll quickly identify your gimmick as snake oil, and you'll have made an enemy of them. They are already involved in securing the server side, the work-at-home desktop, the VPN, and all the other network essentials. You don't want them as your enemy, you want them as your friend. You don't want to send your salesperson into the maw of a technical meeting at the customer's site trying to defend the gimmick. You want to take the opposite approach: do something that the decision maker on the customer side won't necessarily understand, but which their IT/cybersecurity people will get excited about. You want them in the background as your champion rather than as your opposition. Vulnerability disclosure program: To accomplish the goal described above, the thing you want is known as a vulnerability disclosure program. 
If there's one thing that the entire cybersecurity industry agrees on (other than hating the term cybersecurity, preferring "infosec" instead), it is that you need this vulnerability disclosure program. Everything else you might want to do to add security features to your product comes after you have this thing. Your product has security bugs, known as vulnerabilities. This is true of everyone, no matter how good you are. Apple, Microsoft, and Google employ the brightest minds in cybersecurity and they have vulnerabilities. Every month you update their products with the latest fixes for these vulnerabilities. I just bought a new MacBook Air and it's already telling me I need to update the operating system to fix the bugs found after it shipped. These bugs come mostly from outsiders. These companies have internal people searching for such bugs, as well as consultants, and do a good job quietly fixing what they find. But this goes only so far. Outsiders have a wider set of skills and perspectives than the companies could ever hope to control themselves, so they find things that the companies miss. These outsiders are often not customers. This has been a chronic problem throughout the history of computers. Somebody calls up your support line and tells you there's an obvious bug that hackers can easily exploit. The customer support representative then ignores this because the caller isn't a customer. It's foolish wasting time adding features to a product that no customer is asking for. But then this bug leaks out to the public, hackers widely exploit it, damaging customers, and angry customers now demand to know why you did nothing to fix the bug despite having been notified about it. The problem here is that nobody has the job of responding to such problems. The reason your company dropped the ball was that nobody was assigned to pick it up. 
All a vulnerability disclosure program means is that at least one person within the company has the responsibility of dealing with it. How to set up a vulnerability disclosure program | Spam Vulnerability Threat Guideline | ★★★ | ||

| 2020-04-02 01:23:55 | About them Zoom vulns... (direct link) | Today a couple of vulnerabilities were announced in Zoom, the popular work-from-home conferencing app. Hackers can possibly exploit these to do evil things to you, such as steal your password. Because of COVID-19, these vulns have hit the mainstream media. This means my non-techy friends and relatives have been asking about it. I thought I'd write up a blogpost answering their questions. The short answer is that you don't need to worry about it. Unless you do bad things, like using the same password everywhere, it's unlikely to affect you. You should worry more about wearing pants on your Zoom video conferences in case you forget and stand up. Now is a good time to remind people to stop using the same password everywhere and to visit https://haveibeenpwned.com to view all the accounts where they've had their password stolen. Using the same password everywhere is the #1 vulnerability the average person is exposed to, and is a possible problem here. For critical accounts (Windows login, bank, email), use a different password for each. (Sure, for accounts you don't care about, use the same password everywhere; I use 'Foobar1234'.) Write these passwords down on paper and put that paper in a secure location. Don't print them, don't store them in a file on your computer. Writing it on a Post-It note taped under your keyboard is adequate security if you trust everyone in your household. If hackers use this Zoom method to steal your Windows password, then you aren't in much danger. They can't log into your computer because it's almost certainly behind a firewall. And they can't use the password on your other accounts, because it's not the same. Why you shouldn't worry: The reason you shouldn't worry about this password stealing problem is that it's everywhere, not just Zoom. It's also here in this browser you are using. 
If you click on file://hackme.robertgraham.com/foo/bar.html, then I can grab your password in exactly the same way as if you clicked on that vulnerable link in Zoom chat. That's how the Zoom bug works: hackers post these evil links in the chat window during a Zoom conference. It's hard to say Zoom has a vulnerability when so many other applications have the same issue. Many home ISPs, such as Comcast, AT&T, Cox, Verizon Wireless, and others, block such connections to the Internet. If this is the case, when you click on the above link, nothing will happen. Your computer will try to contact hackme.robertgraham.com, and fail. You may be protected from clicking on the above link without doing anything. If your ISP doesn't block such connections, you can configure your home router to do this. Go into the firewall settings and block "TCP port 445 outbound". Alternatively, you can configure Windows to only follow such links internal to your home network, but not to the Internet. If hackers (like me if you click on the above link) get your password, then they probably can't use it. That's because while your home Internet router allows outbound connections, it (almost always) blocks inbound connections. Thus, if I steal your Windows password, I can't use it to log into your home computer unless I also break physically into your house. But if I can break into your computer physically, I can hack it without knowing your password. The same arguments apply to corporate desktops. Corporations should block such outbound connections. They | Hack Vulnerability Threat | |||
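
What makes the link in the excerpt dangerous is that a `file://` URL can name a remote host rather than a local file. A minimal sketch of how a chat client or mail filter might flag such links follows; the function name is hypothetical, and the assumption that Windows resolves these links over SMB (the "TCP port 445" mentioned above) is stated here rather than checked by the code.

```python
from urllib.parse import urlparse


def is_remote_file_link(url: str) -> bool:
    """Return True for file:// URLs that point at another machine.

    Hypothetical helper: a file URL with an empty host (file:///C:/...) or
    "localhost" refers to the local disk; any other host would make the
    client reach out over the network.
    """
    parts = urlparse(url)
    host = parts.netloc.lower()
    return parts.scheme == "file" and host not in ("", "localhost")


if __name__ == "__main__":
    for link in (
        "file://hackme.robertgraham.com/foo/bar.html",  # link quoted in the excerpt
        "file:///C:/Users/me/notes.txt",                # local file, no host part
        "https://haveibeenpwned.com",                   # ordinary web link
    ):
        print(f"{is_remote_file_link(link)!s:5}  {link}")
```

A check like this only flags the link; the excerpt's actual mitigations (ISP or router blocking of outbound TCP port 445) work at the network level and do not depend on it.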

| 2020-03-06 15:57:01 | Huawei backdoors explanation, explained (direct link) | Today Huawei published a video explaining the concept of "backdoors" in telco equipment. Many are criticizing the video for being tone deaf. I don't understand this concept of "tone deafness". Instead, I want to explore the facts. Does the word “#backdoor” seem frightening? That's because it's often used incorrectly – sometimes to deliberately create fear. Watch to learn the truth about backdoors and other types of network access. #cybersecurity pic.twitter.com/NEUXbZbcqw - Huawei (@Huawei) March 4, 2020 This video seems to be in response to last month's story from the Wall Street Journal about Huawei misusing law enforcement backdoors. All telco equipment has backdoors usable only by law enforcement; the accusation is that Huawei has a backdoor into this backdoor, so that Chinese intelligence can use it. That story was bogus. Sure, Huawei is probably guilty of providing backdoor access to the Chinese government, but something is deeply flawed with this particular story. We know something is wrong with the story because the U.S. officials cited are anonymous. We don't know who they are or what position they have in the government. If everything they said was true, they wouldn't insist on being anonymous, but would stand up and declare it in a press conference so that every newspaper could report it. When something is not true or spun, then they anonymously "leak" it to a corrupt journalist to report it their way. This is objectively bad journalism. The Society of Professional Journalists calls this the "Washington Game". They also discuss this on their Code of Ethics page. Yes, it's really common in Washington D.C. reporting, you see it all the time, especially with the NYTimes, Wall Street Journal, and Washington Post. But it happens because what the government says is news, regardless of whether it's false or propaganda, giving government officials the ability to influence journalists. 
Exclusive access to corrupt journalists is how they influence stories. We know the reporter is being especially shady because of the one quote in the story that is attributed to a named official: “We have evidence that Huawei has the capability secretly to access sensitive and personal information in systems it maintains and sells around the world,” said national security adviser Robert O'Brien. This quote is deceptive because O'Brien doesn't say any of the things that readers assume he's saying. He doesn't actually confirm any of the allegations in the rest of the story. It doesn't say: that Huawei has used that capability; that Huawei intentionally put that capability there; that this is special to Huawei (rather than everywhere in the industry). In fact, this quote applies to every telco equipment maker. They all have law enforcement backdoors. These backdoors always have "controls" to prevent them from being misused. But these controls are always flawed, either in design or how they are used in the real world. Moreover, all telcos have maintenance/service contracts with the equipment makers. When there are ways around such controls, it's the company's own support engineers who will know them. I absolutely believe Huawei that it has don | Hack Threat | |||

| 2019-08-04 18:52:45 | Securing devices for DEFCON (direct link) | There's been much debate whether you should get burner devices for hacking conventions like DEF CON (phones or laptops). A better discussion would be to list those things you should do to secure yourself before going, just in case. These are the things I worry about: backup before you go; update before you go; correctly locking your devices with full disk encryption; correctly configuring WiFi; Bluetooth devices; mobile phone vs. Stingrays; USB. Backup: Traveling means a higher chance of losing your device. In my review of crime statistics, theft seems less of a threat than in whatever city you are coming from. My guess is that while thieves may want to target tourists, the police want even more to target gangs of thieves, to protect the cash cow that is the tourist industry. But you are still more likely to accidentally leave a phone in a taxi or have your laptop crushed in the overhead bin. If you haven't recently backed up your device, now would be an extra useful time to do this. Anything I want backed up on my laptop is already in Microsoft's OneDrive, so I don't pay attention to this. However, I have a lot of pictures on my iPhone that I don't have in iCloud, so I copy those off before I go. Update: Like most of you, I put off updates unless they are really important, updating every few months rather than every month. Now is a great time to make sure you have the latest updates. Back up before you update, but then, I already mentioned that above. Full disk encryption: This is enabled by default on phones, but not the default for laptops. It means that if you lose your device, adversaries can't read any data from it. You are at risk if you have a simple unlock code, like a predictable pattern or a 4-digit code. The longer and less predictable your unlock code, the more secure you are. I use iPhone's "face id" on my phone so that people looking over my shoulder can't figure out my passcode when I need to unlock the phone. 
However, because this enables the police to easily unlock my phone, by putting it in front of my face, I also remember how to quickly disable face id (by holding the buttons on both sides for 2 seconds). As for laptops, it's usually easy to enable full disk encryption. However, there are some gotchas. Microsoft requires a TPM for its BitLocker full disk encryption, which your laptop might not support. I don't know why all laptops don't just have TPMs, but they don't. You may be able to use some tricks to get around this. There are also third party full disk encryption products that use simple passwords. If you don't have a TPM, then hackers can brute-force crack your password, trying billions per second. This applies to my MacBook Air, which is the 2017 model before Apple started adding their "T2" chip to all their laptops. Therefore, I need a strong login password. I deal with this on my MacBook by having two accounts. When I power on the device, I log into an account using a long/complicated password. I then switch to an account with a simpler password for going in/out of sleep mode. This second account can't be used to decrypt the drive. On Linux, my password to decrypt the drive is similarly long, while the user account password is pretty short. I ignore the "evil maid" threat, because my devices are always with me rather than in | Hack Threat Guideline | |||

| 2019-05-29 20:16:09 | Your threat model is wrong (direct link) | Several subjects have come up in the past week that all come down to the same thing: your threat model is wrong. Instead of addressing the threat that exists, you've morphed the threat into something else that you'd rather deal with, or which is easier to understand. Phishing: An example is this question that misunderstands the threat of "phishing": Should failing multiple phishing tests be grounds for firing? I ran into a guy at a recent conference, said his employer fired people for repeatedly falling for (simulated) phishing attacks. I talked to experts, who weren't wild about this disincentive. https://t.co/eRYPZ9qkzB pic.twitter.com/Q1aqCmkrWL - briankrebs (@briankrebs) May 29, 2019 The (wrong) threat model here is that phishing is an email that smart users with training can identify and avoid. This isn't true. Good phishing messages are indistinguishable from legitimate messages. Said another way, a lot of legitimate messages are in fact phishing messages, such as when HR sends out a message saying "log into this website with your organization username/password". Recently, my university sent me an email for mandatory Title IX training, not digitally signed, with an external link to the training, that requested my university login creds for access, that was sent from an external address but from the Title IX coordinator. - Tyler Pieron (@tyler_pieron) May 29, 2019 Yes, it's amazing how easily stupid employees are tricked by the most obvious of phishing messages, and you want to point and laugh at them. But frankly, you want the idiot employees doing this. The more obvious phishing attempts are the least harmful and a good test of the rest of your security -- which should be based on the assumption that users will frequently fall for phishing. In other words, if you paid attention to the threat model, you'd be mitigating the threat in other ways and not even bother training employees. 
You'd be firing HR idiots for phishing employees, not punishing employees for getting tricked. Your systems would be resilient against successful phishes, such as using two-factor authentication. IoT security: After the Mirai worm, government types pushed for laws to secure IoT devices, as billions of insecure devices like TVs, cars, security cameras, and toasters are added to the Internet. Everyone is afraid of the next Mirai-type worm. For example, they are pushing for devices to be auto-updated. But auto-updates are a bigger threat than worms. Since Mirai, roughly 10-billion new IoT devices have been added to the Internet, yet there hasn't been a Mirai-sized worm. Why is that? After 10-billion new IoT devices, it's still Windows and not IoT that is the main problem. The answer is that number, 10-billion. Internet worms work by guessing IPv4 addresses, of which there are only 4-billion. You can't have 10-billion new devices on the public IPv4 addresses because there simply aren't enough addresses. Instead, those 10-billion devices are almost entirely being put on private ne | Ransomware Tool Vulnerability Threat Guideline | FedEx NotPetya | ||
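
The address arithmetic behind the excerpt's argument can be checked in a few lines. This is a minimal sketch: the 10-billion figure is the one quoted in the excerpt, while the list of private address blocks (RFC 1918) is an assumption added here to illustrate what "private" address space usually means.

```python
import ipaddress

TOTAL_IPV4 = 2 ** 32              # every possible 32-bit IPv4 address
NEW_IOT_DEVICES = 10_000_000_000  # "roughly 10-billion", as quoted in the excerpt

# Assumption: the private ranges meant are the RFC 1918 blocks, which are
# reused behind every home or office router that translates addresses.
PRIVATE_BLOCKS = ["10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16"]

private_total = sum(ipaddress.ip_network(b).num_addresses for b in PRIVATE_BLOCKS)
shortfall = NEW_IOT_DEVICES - TOTAL_IPV4

if __name__ == "__main__":
    print(f"All IPv4 addresses:        {TOTAL_IPV4:>14,}")
    print(f"New IoT devices (quoted):  {NEW_IOT_DEVICES:>14,}")
    print(f"Devices that cannot fit:   {shortfall:>14,}")
    print(f"Private addresses per LAN: {private_total:>14,}")
```

Even if every public address were free, more than half of the quoted devices could not have one, which is why a worm that guesses public IPv4 addresses never reaches most of them.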

| 2019-05-28 06:20:06 | Almost One Million Vulnerable to BlueKeep Vuln (CVE-2019-0708) (direct link) | Microsoft announced a vulnerability in its "Remote Desktop" product that can lead to robust, wormable exploits. I scanned the Internet to assess the danger. I find nearly 1-million devices on the public Internet that are vulnerable to the bug. That means when the worm hits, it'll likely compromise those million devices. This will likely lead to an event as damaging as WannaCry and notPetya from 2017 -- potentially worse, as hackers have since honed their skills exploiting these things for ransomware and other nastiness. To scan the Internet, I started with masscan, my Internet-scale port scanner, looking for port 3389, the one used by Remote Desktop. This takes a couple hours, and lists all the devices running Remote Desktop -- in theory. This returned 7,629,102 results (over 7-million). However, there is a lot of junk out there that'll respond on this port. Only about half are actually Remote Desktop. Masscan only finds the open ports, but is not complex enough to check for the vulnerability. Remote Desktop is a complicated protocol. A project was posted that could connect to an address and test it, to see if it was patched or vulnerable. I took that project and optimized it a bit, rdpscan, then used it to scan the results from masscan. It's a thousand times slower, but it's only scanning the results from masscan instead of the entire Internet. The table of results is as follows: 1447579 UNKNOWN - receive timeout; 1414793 SAFE - Target appears patched; 1294719 UNKNOWN - connection reset by peer; 1235448 SAFE - CredSSP/NLA required; 923671 VULNERABLE -- got appid; 651545 UNKNOWN - FIN received; 438480 UNKNOWN - connect timeout; 105721 UNKNOWN - connect failed 9; 82836 SAFE - not RDP but HTTP; 24833 UNKNOWN - connection reset on connect; 3098 UNKNOWN - network error; 2576 UNKNOWN - connection terminated. The various UNKNOWN things fail for various reasons. 
A lot of them are because the protocol isn't actually Remote Desktop and responds weirdly when we try to talk Remote Desktop. A lot of others are Windows machines, sometimes vulnerable and sometimes not, but for some reason return errors sometimes. The important results are those marked VULNERABLE. There are 923,671 vulnerable machines in this result. That means we've confirmed the vulnerability really does exist, though it's possible a small number of these are "honeypots" deliberately pretending to be vulnerable in order to monitor hacker activity on the Internet. The next results are those marked SAFE due to probably being "patched". Actually, it doesn't necessarily mean they are patched Windows boxes. They could instead be non-Windows systems that appear the same as patched Windows boxes. But either way, they are safe from this vulnerability. There are 1,414,793 of them. The next results to look at are those marked SAFE due to CredSSP/NLA failures, of which there are 1,235,448. This doesn't mean they are patched, but only that we can't exploit them. They require "network level authentication" first before we can talk Remote Desktop to them. That means we can't test whether they are patched or vulnerable -- but neither can the hackers. They may still be exploitable via an insider threat who knows a valid username/password, but they aren't exploitable by anonymous hackers or worms. The next category is marked as SAFE because they aren't Remote Desktop at all, but HTTP servers. In other words, in response to o | Ransomware Vulnerability Threat Patching Guideline | NotPetya Wannacry | ||
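
The result table quoted in the excerpt is easier to read once it is grouped by status. The sketch below simply re-adds the published numbers; it does not run masscan or rdpscan, and the parsing helper is a hypothetical convenience that assumes one "count STATUS - reason" entry per line.

```python
from collections import Counter

# Result lines exactly as quoted in the excerpt (count, status, reason).
RESULTS = """\
1447579 UNKNOWN - receive timeout
1414793 SAFE - Target appears patched
1294719 UNKNOWN - connection reset by peer
1235448 SAFE - CredSSP/NLA required
923671 VULNERABLE -- got appid
651545 UNKNOWN - FIN received
438480 UNKNOWN - connect timeout
105721 UNKNOWN - connect failed 9
82836 SAFE - not RDP but HTTP
24833 UNKNOWN - connection reset on connect
3098 UNKNOWN - network error
2576 UNKNOWN - connection terminated
"""


def tally(text: str) -> Counter:
    """Sum the host counts per status word (SAFE, VULNERABLE, UNKNOWN)."""
    totals = Counter()
    for line in text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        count, status = line.split()[:2]
        totals[status] += int(count)
    return totals


if __name__ == "__main__":
    totals = tally(RESULTS)
    listed = sum(totals.values())
    for status, count in totals.most_common():
        print(f"{status:<10} {count:>9,}  ({count / listed:.1%} of listed results)")
```

Grouped this way, the UNKNOWN bucket is the largest, which matches the excerpt's remark that a lot of what answers on port 3389 is not actually Remote Desktop.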

| 2018-11-04 18:22:46 | Brian Kemp is bad on cybersecurity (direct link) | I'd prefer a Republican governor, but as a cybersecurity expert, I have to point out how bad Brian Kemp (candidate for Georgia governor) is on cybersecurity. When notified about vulnerabilities in election systems, his response has been to shoot the messenger rather than fix the vulnerabilities. This was the premise behind the cybercrime bill earlier this year that was ultimately vetoed by the current governor after vocal opposition from cybersecurity companies. More recently, he just announced that he's investigating the Georgia State Democratic Party for a "failed hacking attempt". According to news stories, state elections websites are full of common vulnerabilities, those documented by the OWASP Top 10, such as "direct object references" that would allow any election registration information to be read or changed, such as allowing a hacker to cancel registrations of those of the other party. Testing for such weaknesses is not a crime. Indeed, it's desirable that people can test for security weaknesses. Systems that aren't open to test are insecure. This concept is the basis for many policy initiatives at the federal level, to not only protect researchers probing for weaknesses from prosecution, but to even provide bounties encouraging them to do so. The DoD has a "Hack the Pentagon" initiative encouraging exactly this. But the State of Georgia is stereotypically backwards and thuggish. Earlier this year, the legislature passed SB 315, which criminalized this activity of merely attempting to access a computer without permission, to probe for possible vulnerabilities. To the ignorant and backwards person, this seems reasonable; of course this bad activity should be outlawed. But as we in the cybersecurity community have learned over the last decades, this only outlaws your friends from finding security vulnerabilities, and does nothing to discourage your enemies. 
Russian election meddling hackers are not deterred by such laws, only Georgia residents concerned about whether their government websites are secure. It's your own users, and well-meaning security researchers, who are the primary source for improving security. Unless you live under a rock (like Brian Kemp, apparently), you'll have noticed that every month you have your Windows desktop or iPhone nagging you about updating the software to fix security issues. If you look behind the scenes, you'll find that most of these security fixes come from outsiders. They come from technical experts who accidentally come across vulnerabilities. They come from security researchers who specifically look for vulnerabilities. It's because of this "research" that systems are mostly secure today. A few days ago was the 30th anniversary of the "Morris Worm" that took down the nascent Internet in 1988. The net of that time was hostile to security research, with major companies ignoring vulnerabilities. Systems then were laughably insecure, but vendors tried to address the problem by suppressing research. The Morris Worm exploited several vulnerabilities that were well-known at the time, but ignored by the vendor (in this case, primarily Sun Microsystems). Since then, with a culture of outsiders disclosing vulnerabilities, vendors have been pressured into fixing them. This has led to vast improvements in security. I'm posting this from a public WiFi hotspot in a bar, for example, because computers are secure enough for this to be safe. 10 years ago, such activity wasn't safe. The Georgia Democrats obviously have concerns about the integrity of election systems. They have every reason to thoroughly probe an elections website looking for vulnerabilities. This sort of activity should be encouraged, not supp | Vulnerability Threat Guideline | |||

| 2018-09-10 17:33:17 | California's bad IoT law (direct link) | California has passed an IoT security bill, awaiting the governor's signature/veto. It's a typically bad bill based on a superficial understanding of cybersecurity/hacking that will do little to improve security, while doing a lot to impose costs and harm innovation. It's based on the misconception of adding security features. It's like dieting, where people insist you should eat more kale, which does little to address the problem that you are pigging out on potato chips. The key to dieting is not eating more but eating less. The same is true of cybersecurity, where the point is not to add “security features” but to remove “insecure features”. For IoT devices, that means removing listening ports and cross-site/injection issues in web management. Adding features is typical “magic pill” or “silver bullet” thinking that we spend much of our time in infosec fighting against. We don't want arbitrary features like firewall and anti-virus added to these products. It'll just increase the attack surface, making things worse. The one possible exception to this is “patchability”: some IoT devices can't be patched, and that is a problem. But even here, it's complicated. Even if IoT devices are patchable in theory, there is no guarantee vendors will supply such patches, or worse, that users will apply them. Users overwhelmingly forget about devices once they are installed. These devices aren't like phones/laptops which notify users about patching. You might think a good solution to this is automated patching, but only if you ignore history. Many rate “NotPetya” as the worst, most costly, cyberattack ever. That was launched by subverting an automated patch. Most IoT devices exist behind firewalls, and are thus very difficult to hack. Automated patching gets beyond firewalls; it makes it much more likely mass infections will result from hackers targeting the vendor. The Mirai worm infected fewer than 200,000 devices. 
A hack of a tiny IoT vendor can gain control of more devices than that in one fell swoop. The bill does target one insecure feature that should be removed: hardcoded passwords. But they get the language wrong. A device doesn't have a single password, but many things that may or may not be called passwords. A typical IoT device has one system for creating accounts on the web management interface, a wholly separate authentication system for services like Telnet (based on /etc/passwd), and yet a wholly separate system for things like debugging interfaces. Just because a device does the proscribed thing of using a unique or user generated password in the user interface doesn't mean it doesn't also have a bug in Telnet. That was the problem with devices infected by Mirai. The description that these were hardcoded passwords is only a superficial understanding of the problem. The real problem was that there were different authentication systems in the web interface and in other services like Telnet. Most of the devices vulnerable to Mirai did the right thing on the web interfaces (meeting the language of this law) requiring the user to create new passwords before operating. They just did the wrong thing elsewhere. People aren't really paying attention to what happened with Mirai. They look at the 20 billion new IoT devices that are going to be connected to the Internet by 2020 and believe Mirai is just the tip of the iceberg. But it isn't. The IPv4 Internet has only 4 billion addresses, which are pretty much already used up. This means those 20 billion won't be exposed to the public Internet like Mirai devices, but hidden behind firewalls that translate addresses. Thus, rather than Mirai presaging the future, it represents the last gasp of the past that is unlikely to come again. This law is backwards looking rather than forward looking. Forward looking, by far the most important t | Hack Threat Patching Guideline | NotPetya Tesla | ||

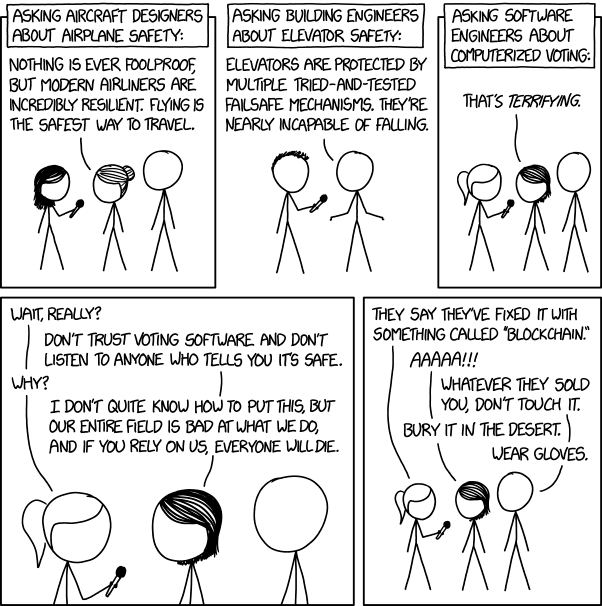

| 2018-08-08 20:09:17 | That XKCD on voting machine software is wrong (direct link) | The latest XKCD comic on voting machine software is wrong, profoundly so. It's the sort of thing that appeals to our prejudices, but mistakes the details. Accidents vs. attack: The biggest flaw is that the comic confuses accidents with intentional attack. Airplanes and elevators are designed to avoid accidental failures. If that's the measure, then voting machine software is fine and perfectly trustworthy. Such machines are no more likely to accidentally record a wrong vote than the paper voting systems they replaced -- indeed less likely. The reason we have electronic voting machines in the first place is the "hanging chad" problem in the Bush v. Gore election of the year 2000. After that election, a wave of new, software-based voting machines replaced the older, inaccurate paper machines. The question is whether software voting machines can be attacked. Well, if that's the measure, then airplanes aren't safe at all. Security against human attack consists of the entire infrastructure outside the plane, from the TSA forcing us to take off our shoes to trade restrictions that prevent the proliferation of Stinger missiles. Confusing the two, accidents vs. attack, is used here because it makes the reader feel superior. We get to mock and feel superior to those stupid software engineers for not living up to what's essentially a fictional standard of reliability. To repeat: software-based machines are better than the mechanical machines they replaced, which is why there are so many software-based machines in the United States. The issue isn't normal accuracy, but their robustness against a different standard, against attack -- a standard which airplanes and elevators suck at. The problems are as much hardware as software: Last year at the DEF CON hacking conference they had an "Election Hacking Village" where they hacked a number of electronic voting machines. Most of those "hacks" were against the hardware, such as soldering on a JTAG device or accessing USB ports. Other errors have been voting machines being sold on eBay whose data wasn't wiped, allowing voter records to be recovered. What we want to see is hardware designed more like an iPhone, where the FBI can't decrypt a phone even when they really really want to. This requires special chips, such as secure enclaves, signed boot loaders, and so on. Only once we get the hardware right can we complain about the software being deficient. To be fair, software problems were also found at DEF CON, like an exploit over WiFi. Though for a lot of problems, it's questionable whether the fault lies in the software design or the hardware design, since they are fixable in either one. The situation is better described as the entire design being flawed, from the "requirements", to the high-level system "architecture", and lastly to the actual "software" code. It's lack of accountability/fail-safes: We imagine the threat is that votes can be changed in the voting machine, but it's more profound than that. The problem is that votes can be changed invisibly. The first change experts want to see is adding a paper trail, rather than fixing bugs. Consider "recounts". With many of today's electronic voting machines, this is meaningless, with nothing to recount. The machine produces a number, and we have nothing else to test against whether that number is correct or fa | Hack Threat |


We have: 10 articles.

