What's new around the Internet

| Src | Date (GMT) | Title | Description | Tags | Stories | Notes |

| 2021-09-24 03:51:21 | Check: that Republican audit of Maricopa (direct link) | Author: Robert Graham (@erratarob) Later today (Friday, September 24, 2021), Republican auditors release their final report on what they found with elections in Maricopa County. Draft copies have circulated online. In this blogpost, I write up my comments on the cybersecurity portions of their draft. https://arizonaagenda.substack.com/p/we-got-the-senate-audit-report The three main problems are: (1) They misapply cybersecurity principles that are meaningful for normal networks, but which don't really apply to the air gapped networks we see here. (2) They make some errors about technology, especially networking. (3) They are overstretching themselves to find dirt, claiming the things they don't understand are evidence of something bad. In the parts below, I pick apart individual pieces from that document to demonstrate these criticisms. I focus on section 7, the cybersecurity section, and ignore the other parts of the document, where others are more qualified than I to opine. In short, when corrected, section 7 is nearly empty of any content. 7.5.2.1.1 Software and Patch Management, part 1: They claim Dominion is defective at one of the best-known cyber-security issues: applying patches. It's not true. The systems are “air gapped”, disconnected from the typical sort of threat that exploits unpatched systems. The primary security of the system is physical. This is standard in other industries with hard reliability constraints, like industrial or medical. Patches in those systems can destabilize systems and kill people, so these industries are risk averse. They prefer to mitigate the threat in other ways, such as with firewalls and air gaps. Yes, this approach is controversial. There are some in the cybersecurity community who use lack of patches as a bludgeon with which to bully any who don't apply every patch immediately. But this is because patching is more a political issue than a technical one. 
In the real, non-political world we live in, most things don't get immediately patched all the time. 7.5.2.1.1 Software and Patch Management, part 2: They claim new software executables were applied to the system, despite the rules against new software being applied. This isn't necessarily true. There are many reasons why Windows may create new software executables even when no new software is added. One reason is “Features on Demand” or FOD. You'll see new executables appear in C:\Windows\WinSxS for these. Another reason is their .NET language, which causes binary x86 executables to be created from bytecode. You'll see this in the C:\Windows\assembly directory. The auditors simply counted the number of new executables, with no indication which category they fell in. Maybe they are right, maybe new software was installed or old software updated. It's just that their mere counting of executable files doesn't show understanding of these differences. 7.5.2.1.2 Log Management: The auditors claim that a central log management system should be used. This obviously wouldn't apply to “air gapped” systems, because it would need a connection to an external network. Dominion already designates their EMSERVER as the central log repository for their little air gapped network. Important files from C: are copied to D:, a RAID10 drive. This is a perfectly adequate solution; adding yet another computer to their little network would be overkill, and add as many security problems as it solved. One could argue more Windows logs need to be preserved, but that would simply mean archiving them from the C: drive onto the D: drive, not that you need to connect to the Internet to centrally log files. 7.5.2.1.3 Credential Management: Like the other sections, this claim is out of place | Threat Patching | |||
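The distinction drawn in that excerpt, between executables Windows generates on its own and executables that need an explanation, can be sketched in a few lines. This is only an illustration: the two directories are the ones the excerpt names, while the category labels and the `classify` and `tally` helpers are hypothetical names invented here, not part of any audit tooling.

```python
from collections import Counter
from pathlib import PureWindowsPath

# Directories named in the excerpt; the labels are hypothetical.
SYSTEM_GENERATED = {
    PureWindowsPath(r"C:\Windows\WinSxS"): "features_on_demand",
    PureWindowsPath(r"C:\Windows\assembly"): "dotnet_native_image",
}


def classify(path: str) -> str:
    """Return a category label for one executable path."""
    p = PureWindowsPath(path)
    for root, label in SYSTEM_GENERATED.items():
        # PureWindowsPath comparisons ignore case, as Windows does.
        if root in p.parents:
            return label
    return "unexplained"


def tally(paths) -> Counter:
    """Count executables per category instead of producing one raw total."""
    return Counter(classify(p) for p in paths)
```

Only the "unexplained" bucket would merit a closer look; a bare count of new files cannot separate it from the other two.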

| 2019-05-28 06:20:06 | Almost One Million Vulnerable to BlueKeep Vuln (CVE-2019-0708) (direct link) | Microsoft announced a vulnerability in its "Remote Desktop" product that can lead to robust, wormable exploits. I scanned the Internet to assess the danger. I find nearly 1 million devices on the public Internet that are vulnerable to the bug. That means when the worm hits, it'll likely compromise those million devices. This will likely lead to an event as damaging as WannaCry and notPetya from 2017 -- potentially worse, as hackers have since honed their skills exploiting these things for ransomware and other nastiness. To scan the Internet, I started with masscan, my Internet-scale port scanner, looking for port 3389, the one used by Remote Desktop. This takes a couple hours, and lists all the devices running Remote Desktop -- in theory. This returned 7,629,102 results (over 7 million). However, there is a lot of junk out there that'll respond on this port. Only about half are actually Remote Desktop. Masscan only finds the open ports, but is not complex enough to check for the vulnerability. Remote Desktop is a complicated protocol. A project was posted that could connect to an address and test it, to see if it was patched or vulnerable. I took that project and optimized it a bit (rdpscan), then used it to scan the results from masscan. It's a thousand times slower, but it's only scanning the results from masscan instead of the entire Internet. The table of results is as follows: 1447579 UNKNOWN - receive timeout; 1414793 SAFE - Target appears patched; 1294719 UNKNOWN - connection reset by peer; 1235448 SAFE - CredSSP/NLA required; 923671 VULNERABLE -- got appid; 651545 UNKNOWN - FIN received; 438480 UNKNOWN - connect timeout; 105721 UNKNOWN - connect failed 9; 82836 SAFE - not RDP but HTTP; 24833 UNKNOWN - connection reset on connect; 3098 UNKNOWN - network error; 2576 UNKNOWN - connection terminated. The various UNKNOWN things fail for various reasons. 
A lot of them occur because the service isn't actually Remote Desktop and responds weirdly when we try to talk Remote Desktop. A lot of others are Windows machines, sometimes vulnerable and sometimes not, that for some reason sometimes return errors. The important results are those marked VULNERABLE. There are 923,671 vulnerable machines in this result. That means we've confirmed the vulnerability really does exist, though it's possible a small number of these are "honeypots" deliberately pretending to be vulnerable in order to monitor hacker activity on the Internet. The next results are those marked SAFE due to probably being "patched". Actually, it doesn't necessarily mean they are patched Windows boxes. They could instead be non-Windows systems that appear the same as patched Windows boxes. But either way, they are safe from this vulnerability. There are 1,414,793 of them. The next results to look at are those marked SAFE due to CredSSP/NLA failures, of which there are 1,235,448. This doesn't mean they are patched, but only that we can't exploit them. They require "network level authentication" first before we can talk Remote Desktop to them. That means we can't test whether they are patched or vulnerable -- but neither can the hackers. They may still be exploitable via an insider threat who knows a valid username/password, but they aren't exploitable by anonymous hackers or worms. The next category is marked as SAFE because they aren't Remote Desktop at all, but HTTP servers. In other words, in response to o | Ransomware Vulnerability Threat Patching Guideline | NotPetya Wannacry | ||
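To make the result table in that excerpt easier to reason about, here is a minimal sketch that groups the per-reason counts by status. The numbers are copied from the excerpt; the "count STATUS - reason" line format, the regular expression, and the `totals_by_status` helper are assumptions made for illustration, not the actual output format or code of rdpscan.

```python
import re
from collections import Counter

# Counts as quoted in the excerpt above.
RESULTS = """\
1447579 UNKNOWN - receive timeout
1414793 SAFE - Target appears patched
1294719 UNKNOWN - connection reset by peer
1235448 SAFE - CredSSP/NLA required
923671 VULNERABLE -- got appid
651545 UNKNOWN - FIN received
438480 UNKNOWN - connect timeout
105721 UNKNOWN - connect failed 9
82836 SAFE - not RDP but HTTP
24833 UNKNOWN - connection reset on connect
3098 UNKNOWN - network error
2576 UNKNOWN - connection terminated
"""

# Assumed line shape: "<count> <STATUS> -[-] <reason>"
LINE = re.compile(r"^\s*(\d+)\s+(\w+)\s+-+\s+(.*)$")


def totals_by_status(text: str) -> Counter:
    """Sum the per-reason counts into one total per status."""
    totals = Counter()
    for line in text.splitlines():
        match = LINE.match(line)
        if match:
            count, status, _reason = match.groups()
            totals[status] += int(count)
    return totals


if __name__ == "__main__":
    for status, count in totals_by_status(RESULTS).most_common():
        print(f"{status:<12}{count:>10,}")
```

Grouping this way separates the three SAFE reasons, which the excerpt treats differently, from the single VULNERABLE line that matters most.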

| 2019-05-27 19:59:38 | A lesson in journalism vs. cybersecurity (direct link) | A recent NYTimes article blaming the NSA for a ransomware attack on Baltimore is typical bad journalism. It's an op-ed masquerading as a news article. It cites many sources to support the conclusion that the NSA is to be blamed, but only a single quote, from the NSA director, from the opposing side. Yet many experts oppose this conclusion, such as @dave_maynor, @beauwoods, @daveaitel, @riskybusiness, @shpantzer, @todb, @hrbrmst, ... It's not as if these people are hard to find; it's that the story's authors didn't look. The main reason experts disagree is that the NSA's EternalBlue isn't actually responsible for most ransomware infections. It's almost never used to start the initial infection -- that's almost always phishing or website vulns. Once inside, it's almost never used to spread laterally -- that's almost always done with Windows networking and stolen credentials. Yes, ransomware increasingly includes EternalBlue as part of its arsenal of attacks, but this doesn't mean EternalBlue is responsible for ransomware. The NYTimes story takes extraordinary effort to jump around this fact, deliberately misleading the reader to conflate one with the other. A good example is this paragraph: That link is a warning from last July about the "Emotet" ransomware and makes no mention of EternalBlue. Instead, the story is citing anonymous researchers claiming that EternalBlue has been added to Emotet since that DHS warning. Who are these anonymous researchers? The NYTimes article doesn't say. This is bad journalism. The principles of journalism are that you are supposed to attribute where you got such information, so that the reader can verify for themselves whether the information is true or false, or at least, credible. And in this case, it's probably false. The likely source for that claim is this article from Malwarebytes about Emotet. 
They have since retracted this claim, as the latest version of their article points out. In any event, the NYTimes article claims that Emotet is now "relying" on the NSA's EternalBlue to spread. That's not the same thing as "using", not even close. Yes, lots of ransomware has been updated to also use EternalBlue to spread. However, what ransomware is relying upon is still the Wind |

Ransomware Malware Patching Guideline | NotPetya Wannacry | ||

| 2018-09-10 17:33:17 | California's bad IoT law (direct link) | California has passed an IoT security bill, awaiting the governor's signature/veto. It's a typically bad bill based on a superficial understanding of cybersecurity/hacking that will do little to improve security, while doing a lot to impose costs and harm innovation. It's based on the misconception of adding security features. It's like dieting, where people insist you should eat more kale, which does little to address the problem that you are pigging out on potato chips. The key to dieting is not eating more but eating less. The same is true of cybersecurity, where the point is not to add “security features” but to remove “insecure features”. For IoT devices, that means removing listening ports and cross-site/injection issues in web management. Adding features is typical “magic pill” or “silver bullet” thinking that we spend much of our time in infosec fighting against. We don't want arbitrary features like firewall and anti-virus added to these products. It'll just increase the attack surface, making things worse. The one possible exception to this is “patchability”: some IoT devices can't be patched, and that is a problem. But even here, it's complicated. Even if IoT devices are patchable in theory, there is no guarantee vendors will supply such patches, or worse, that users will apply them. Users overwhelmingly forget about devices once they are installed. These devices aren't like phones/laptops which notify users about patching. You might think a good solution to this is automated patching, but only if you ignore history. Many rate “NotPetya” as the worst, most costly, cyberattack ever. That was launched by subverting an automated patch. Most IoT devices exist behind firewalls, and are thus very difficult to hack. Automated patching gets beyond firewalls; it makes it much more likely mass infections will result from hackers targeting the vendor. The Mirai worm infected fewer than 200,000 devices. 
A hack of a tiny IoT vendor can gain control of more devices than that in one fell swoop. The bill does target one insecure feature that should be removed: hardcoded passwords. But they get the language wrong. A device doesn't have a single password, but many things that may or may not be called passwords. A typical IoT device has one system for creating accounts on the web management interface, a wholly separate authentication system for services like Telnet (based on /etc/passwd), and yet another wholly separate system for things like debugging interfaces. Just because a device does the prescribed thing of using a unique or user generated password in the user interface doesn't mean it doesn't also have a bug in Telnet. That was the problem with devices infected by Mirai. The description that these were hardcoded passwords is only a superficial understanding of the problem. The real problem was that there were different authentication systems in the web interface and in other services like Telnet. Most of the devices vulnerable to Mirai did the right thing on the web interfaces (meeting the language of this law), requiring the user to create new passwords before operating. They just did the wrong thing elsewhere. People aren't really paying attention to what happened with Mirai. They look at the 20 billion new IoT devices that are going to be connected to the Internet by 2020 and believe Mirai is just the tip of the iceberg. But it isn't. The IPv4 Internet has only 4 billion addresses, which are pretty much already used up. This means those 20 billion won't be exposed to the public Internet like Mirai devices, but hidden behind firewalls that translate addresses. Thus, rather than Mirai presaging the future, it represents the last gasp of the past that is unlikely to come again. This law is backwards looking rather than forward looking. Forward looking, by far the most important t | Hack Threat Patching Guideline | NotPetya Tesla | ||
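The address arithmetic behind that excerpt's IPv4 argument is easy to check. A rough sketch, assuming the 20 billion device figure quoted above; the constant and function names are hypothetical, and the sample address is only an example of a device behind address translation.

```python
import ipaddress

# Every possible IPv4 address: 2**32, roughly 4.3 billion.
IPV4_TOTAL = ipaddress.ip_network("0.0.0.0/0").num_addresses

# Device projection quoted in the excerpt.
PROJECTED_DEVICES = 20_000_000_000


def devices_per_public_address(devices: int = PROJECTED_DEVICES) -> float:
    """How many devices would have to share each IPv4 address."""
    return devices / IPV4_TOTAL


if __name__ == "__main__":
    print(f"IPv4 addresses: {IPV4_TOTAL:,}")
    print(f"Devices per address: {devices_per_public_address():.2f}")
    # A device behind NAT holds a private address that is not
    # directly reachable from the public Internet.
    print(ipaddress.ip_address("192.168.1.20").is_private)
```

Even in the best case, where every address were usable, several devices would have to share each one, which is the address-translation scenario the excerpt describes.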

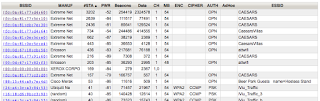

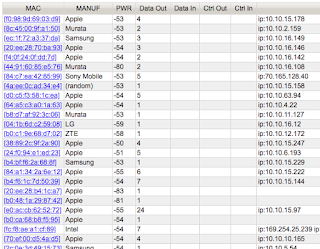

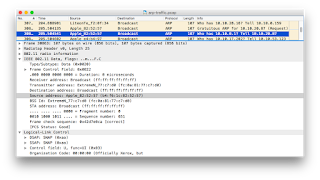

| 2018-08-07 23:18:45 | What the Caesars (@DefCon) WiFi situation looks like (direct link) | So I took a survey of WiFi at Caesar's Palace and thought I'd write up some results. When we go to DEF CON in Vegas, hundreds of us bring our WiFi tools to look at the world. Actually, no special hardware is necessary, as modern laptops/phones have WiFi built-in, while the operating system (Windows, macOS, Linux) enables “monitor mode”. Software is widely available and free. We still love our specialized WiFi dongles and directional antennas, but they aren't really needed anymore. It's also legal, as long as you are just grabbing header information and broadcasts. Which is about all that's useful anymore as encryption has become the norm -- we can pretty much only see what we are allowed to see. The days of grabbing somebody's session-cookie and hijacking their web email are long gone (though that was a fun period). There are still a few targets around if you want to WiFi hack, but most are gone. So naturally I wanted to do a survey of what Caesar's Palace has for WiFi during the DEF CON hacker conference located there. Here is a list of access-points (on channel 1 only) sorted by popularity, the number of stations using them. These have mind-blowing high numbers in the ~3000 range for “CAESARS”. I think something is wrong with the data. I click on the first one to drill down, and I find a source of the problem. I'm seeing only “Data Out” packets from these devices, not “Data In”. These are almost entirely ARP packets from devices, associated with other access-points, not actually associated with this access-point. The hotel has bridged (via Ethernet) all the access-points together. 
We can see this in the raw ARP packets, such as the one shown below: WiFi packets have three MAC addresses, the source and destination (as expected) and also the address of the access-point involved. The access point is the actual transmitter, but it's bridging the packet from some other location on the local Ethernet network. Apparently, CAESARS dumps all the guests into the address range 10.10.x.x, all going out through the router 10.10.0.1. We can see this from the ARP traffic, as everyone seems to be ARPing that router. I'm probably seeing all the devices on the CAESARS WiFi. In ot |

Patching | |||

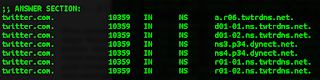

| 2018-07-12 19:54:20 | Your IoT security concerns are stupid (direct link) | Lots of government people are focused on IoT security, such as this recent effort. They are usually wrong. It's a typical cybersecurity policy effort which knows the answer without paying attention to the question. Patching has little to do with IoT security. For one thing, consumers will not patch vulns, because unlike your phone/laptop computer which is all "in your face", IoT devices, once installed, are quickly forgotten. For another thing, the average lifespan of a device on your network is at least twice the duration of support from the vendor making patches available. Naive solutions to the manual patching problem, like forcing autoupdates from vendors, increase rather than decrease the danger. Manual patches that don't get applied cause a small, but manageable constant hacking problem. Automatic patching causes rarer, but more catastrophic events when hackers hack the vendor and push out a bad patch. People are afraid of Mirai, a comparatively minor event that led to a quick cleansing of vulnerable devices from the Internet. They should be more afraid of notPetya, the most catastrophic event yet on the Internet that was launched by subverting an automated patch of accounting software. Vulns aren't even the problem. Mirai didn't happen because of accidental bugs, but because of conscious design decisions. Security cameras have unique requirements of being exposed to the Internet and needing a remote factory reset, leading to the worm. While notPetya did exploit a Microsoft vuln, its primary vector of spreading (after the subverted update) was via misconfigured Windows networking, not that vuln. In other words, while Mirai and notPetya are the most important events people cite supporting their vuln/patching policy, neither was really about vuln/patching. Such technical analysis of events like Mirai and notPetya is ignored. 
Policymakers are only cherrypicking the superficial conclusions supporting their goals. They assiduously ignore in-depth analysis of such things because it inevitably fails to support their positions, or directly contradicts them. IoT security is going to be solved regardless of what government does. All this policy talk is premised on things being static unless government takes action. This is wrong. Government is still waffling on its response to Mirai, but the market quickly adapted. Those off-brand, poorly engineered security cameras you buy for $19 from Amazon.com shipped directly from Shenzhen now look very different, having less Internet exposure, than the ones used in Mirai. Major Internet sites like Twitter now use multiple DNS providers so that a DDoS attack on one won't take down their services. In addition, technology is fundamentally changing. 
Mirai attacked IPv4 addresses outside the firewall. The 100-billion IoT devices going on the network in the next decade will not work this way, cannot work this way, because there are only 4-billion IPv4 addresses. Instead, they'll be behind NATs or accessed via IPv6, both of which prevent Mirai-style worms from functioning. Your fridge and toaster won't connect via your home WiFi anyway, but via a 5G chip unrelated to your home. Lastly, focusing on the ven |

Hack Patching Guideline | NotPetya | ||

| 2018-06-27 15:49:15 | Lessons from nPetya one year later (direct link) | This is the one year anniversary of NotPetya. It was probably the most expensive single hacker attack in history (so far), with FedEx estimating it cost them $300 million. Shipping giant Maersk and drug giant Merck suffered losses on a similar scale. Many are discussing lessons we should learn from this, but they are the wrong lessons. An example is this quote in a recent article: "One year on from NotPetya, it seems lessons still haven't been learned. A lack of regular patching of outdated systems because of the issues of downtime and disruption to organisations was the path through which both NotPetya and WannaCry spread, and this fundamental problem remains." This is an attractive claim. It describes the problem in terms of people being "weak" and that the solution is to be "strong". If only organizations were strong enough, willing to deal with downtime and disruption, then problems like this wouldn't happen. But this is wrong, at least in the case of NotPetya. NotPetya's spread was initiated through the Ukrainian company MeDoc, which provided tax accounting software. It had an auto-update process for keeping its software up-to-date. This was subverted in order to deliver the initial NotPetya infection. Patching had nothing to do with this. Other common security controls like firewalls were also bypassed. Auto-updates and cloud-management of software and IoT devices are becoming the norm. This creates a danger for such "supply chain" attacks, where the supplier of the product gets compromised, spreading an infection to all their customers. The lesson organizations need to learn about this is how such infections can be contained. One way is to firewall such products away from the core network. Another solution is port-isolation/microsegmentation, which limits the spread after an initial infection. Once NotPetya got into an organization, it spread laterally. 
The chief way it did this was through Mimikatz/PsExec, reusing Windows credentials. It stole whatever login information it could get from the infected machine and used it to try to log on to other Windows machines. If it got lucky getting domain administrator credentials, it then spread to the entire Windows domain. This was the primary method of spreading, not the unpatched ETERNALBLUE vulnerability. This is why it was so devastating to companies like Maersk: it wasn't a matter of a few unpatched systems getting infected, it was a matter of losing entire domains, including the backup systems. Such spreading through Windows credentials continues to plague organizations. A good example is the recent ransomware infection of the City of Atlanta that spread much the same way. The limits of the worm were the limits of domain trust relationships. For example, it didn't infect the city airport because that Windows domain is separate from the city's domains. This is the most pressing lesson organizations need to learn, the one they are ignoring. They need to do more to prevent desktops from infecting each other, such as through port-isolation/microsegmentation. They need to control the spread of administrative credentials within the organization. A lot of organizations put the same local admin account on every workstation, which makes the spread of NotPetya style worms trivial. They need to reevaluate trust relationships between domains, so that the admin of one can't infect the others. These solutions are difficult, which is why news articles don't mention them. You don't have to know anything about security to proclaim "the problem is lack of patches". It's moral authority, chastising the weak, rather than a prescription of what to do. Solving supply chain hacks and Windows credential sharing, though, is hard. 
I don't know any universal solution to this -- I'd have to thoroughly analyze your network and business in order to | Ransomware Malware Patching | FedEx NotPetya Wannacry |

We have: 7 articles.
