What's new around the internet

| Src | Date (GMT) | Title | Description | Tags | Stories | Notes |

| 2021-04-21 17:27:21 | Ethics: University of Minnesota's hostile patches (direct link) | The University of Minnesota (UMN) got into trouble this week for doing a study where they submitted deliberately vulnerable patches to open-source projects, in order to test whether hostile actors can do this to hack things. After a UMN researcher submitted a crappy patch to the Linux kernel, kernel maintainers decided to rip out all recent UMN patches. Both things can be true: (1) their study was an important contribution to the field of cybersecurity; (2) their study was unethical. It's like Nazi medical research on victims in concentration camps, or U.S. military research on unwitting soldiers. The research can simultaneously be wildly unethical but at the same time produce useful knowledge. I'd agree that their paper is useful. I would not be able to immediately recognize their patches as adding a vulnerability -- and I'm an expert at such things. In addition, the sorts of bugs it exploits show a way forward in the evolution of programming languages. It's not clear that a "safe" language like Rust would be the answer. Linux kernel programming requires tracking resources in ways that Rust would consider inherently "unsafe". Instead, the C language needs to evolve with better safety features and better static analysis. Specifically, we need to be able to annotate the parameters and return values of functions. For example, if a pointer can't be NULL, then it needs to be documented as a non-nullable pointer. (Imagine if pointers could be signed and unsigned, meaning, can sometimes be NULL or never be NULL). So I'm glad this paper exists. As a researcher, I'll likely cite it in the future. As a programmer, I'll be more vigilant in the future. 
In my own open-source projects, I should probably review some previous pull requests that I've accepted, since many of them have been of the same crappy quality, simply adding a (probably) unnecessary NULL-pointer check. The next question is whether this is ethical. Well, the paper claims to have sign-off from their university's IRB -- their Institutional Review Board that reviews the ethics of experiments. Universities created IRBs to deal with the fact that many medical experiments were done on either unwilling or unwitting subjects, such as the Tuskegee Syphilis Study. All medical research must have IRB sign-off these days. However, I think IRB sign-off for computer security research is stupid. Things like masscanning of the entire Internet are undecidable with traditional ethics. I regularly scan every device on the IPv4 Internet, including your own home router. If you paid attention to the packets your firewall drops, some of them would be from me. Some consider this a gross violation of basic ethics and get very upset that I'm scanning their computer. Others consider this to be the expected consequence of the end-to-end nature of the public Internet, that there's an inherent social contract that you must be prepared to receive any packet from anywhere. Kerckhoffs's Principle from the 1800s suggests that the core ethic of cybersecurity is exposure to such things rather than trying to cover them up. The point isn't to argue whether masscanning is ethical. The point is to argue that it's undecided, and that your IRB isn't going to be able to answer the question better than anybody else. But here's the thing about masscanning: I'm honest and transparent about it. My very first scan of the entire Internet came with a tweet "BTW, this is me scanning the entire Internet". A lot of ethical questions in other fields come down to honesty. If you have to lie about it or cover it up, then th | Hack Vulnerability | |||

| 2021-02-25 20:31:46 | No, 1,000 engineers were not needed for SolarWinds (direct link) | Microsoft estimates it would take 1,000 engineers to carry out the famous SolarWinds hacker attacks. This means in reality that it was probably fewer than 100 skilled engineers. I base this claim on the following Tweet: "When asked why they think it was 1,000 devs, Brad Smith says they saw an elaborate and persistent set of work. Made an estimate of how much work went into each of these attacks, and asked their own engineers. 1,000 was their estimate." (Joseph Cox, @josephfcox, February 23, 2021) Yes, it would take Microsoft 1,000 engineers to replicate the attacks. But it takes a large company like Microsoft 10 times the effort to replicate anything. This is partly because Microsoft is a big, stodgy corporation. But this is mostly because this is a fundamental property of software engineering, where replicating something takes 10 times the effort of creating the original thing. It's like painting. The effort to produce a work is often less than the effort to reproduce it. I can throw some random paint strokes on canvas with almost no effort. It would take you an immense amount of work to replicate those same strokes -- even to figure out the exact color of paint that I randomly mixed together. Software engineering: The process of software engineering is about creating software that meets a certain set of requirements, or a specification. It is an extremely costly process to verify that the specification is correct. It's like if you build a bridge but forget a piece and the entire bridge collapses. But code slinging by hackers and open-source programmers works differently. They aren't building toward a spec. They are building whatever they can and whatever they want. It takes a tenth, or even a hundredth, of the effort of software engineering. Yes, it usually builds things that few people (other than the original programmer) want to use. 
But sometimes it produces gems that lots of people use. Take my most popular code slinging effort, masscan. I have spent about 6 months of total effort writing it at this point. But if you run code analysis tools on it, they'll tell you that it would take several million dollars to replicate the amount of code I've written. And that's just measuring the bulk code, not the numerous clever capabilities and innovations in the code. According to these metrics, I'm either a 100x engineer (a hundred times better than the average engineer) or my claim is true that "code slinging" is a fraction of the effort of "software engineering". The same is true of everything the SolarWinds hackers produced. They didn't have to software engineer code according to Microsoft's processes. They only had to sling code to satisfy their own needs. They don't have to train/hire engineers with the skills necessary to meet a specification; they can write the specification according to what their own engineers can produce. They can do whatever they want with the code because they don't have to satisfy somebody else's needs. Hacking: Something similar is true of hacking. Hacking a specific target, a specific way, is very hard. Hacking any target, any way, is easy. Like most well-known hackers, I regularly get those emails asking me to hack somebody's Facebook account. This is very hard. I can try a lot of things, and in the end, chances are I cannot succeed. On the other hand, if you ask me to hack anybody's Facebook account, I can do that in seconds. I can download one of the many ha | Hack | |||

| 2020-10-14 19:34:25 | Yes, we can validate leaked emails (direct link) | When emails leak, we can know whether they are authentic or forged. It's the first question we should ask of today's leak of emails of Hunter Biden. It has a definitive answer. Today's emails have "cryptographic signatures" inside the metadata. Such signatures have been common for the past decade as one way of controlling spam, to verify the sender is who they claim to be. These signatures verify not only the sender, but also that the contents have not been altered. In other words, it authenticates the document, who sent it, and when it was sent. Crypto works. The only way to bypass these signatures is to hack into the servers. In other words, when we see a 6 year old message with a valid Gmail signature, we know either (a) it's valid or (b) they hacked into Gmail to steal the signing key. Since (b) is extremely unlikely, and if they could hack Google, they could do a ton more important stuff with the information, we have to assume (a). Your email client normally hides this metadata from you, because it's boring and humans rarely want to see it. But it's still there in the original email document. An email message is simply a text document consisting of metadata followed by the message contents. It takes no special skills to see metadata. If the person has enough skill to export the email to a PDF document, they have enough skill to export the email source. If they can upload the PDF to Scribd (as in the story), they can upload the email source. I show how to below. To show how this works, I send an email using Gmail to my private email server (from gmail.com to robertgraham.com). The NYPost story shows the email printed as a PDF document. Thus, I do the same thing when the email arrives on my MacBook, using the Apple "Mail" app. 
It looks like the following: The "raw" form originally sent from my Gmail account is simply a text document that looked like the following: This is rather simple. Clients insert details like a "Message-ID" that humans don't care about. There are also internal formatting details, like the fact that this is a "plain text" message rather than an "HTML" email. But this raw document was the one sent by the Gmail web client. It then passed through Gmail's servers, then was passed across the Internet to my private server, where I finally retrieved it using my MacBook. As email messages pass through servers, the servers add their own metadata. When it arrived, the "raw" document looked like the following. None of the important bits changed, but a lot more metadata was added: | Hack Guideline | |||
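The signature metadata described in the entry above can be inspected with Python's standard `email` package. What follows is a minimal sketch under loud assumptions: the raw message is made up, and every header value in it (domain, selector, body hash, signature) is a placeholder rather than anything from the real emails. It only splits the `DKIM-Signature` header into its tags (`d` is the signing domain, `s` the key selector, `h` the list of signed headers, `bh` the body hash, `b` the signature). It does not verify anything, since verification needs the signer's public key from DNS.

```python
from email import message_from_string

# Hypothetical raw message: every header value here is a placeholder.
RAW_EMAIL = (
    "DKIM-Signature: v=1; a=rsa-sha256; c=relaxed/relaxed;\r\n"
    "        d=example.com; s=selector1;\r\n"
    "        h=from:to:subject:date;\r\n"
    "        bh=PLACEHOLDERBODYHASH=;\r\n"
    "        b=PLACEHOLDERSIGNATURE=\r\n"
    "From: sender@example.com\r\n"
    "To: recipient@example.org\r\n"
    "Subject: test\r\n"
    "\r\n"
    "Hello.\r\n"
)


def dkim_tags(raw: str) -> dict:
    """Return the tag=value pairs of the first DKIM-Signature header."""
    msg = message_from_string(raw)
    header = msg.get("DKIM-Signature")
    if header is None:
        return {}  # the message carries no signature metadata
    tags = {}
    for part in header.split(";"):
        if "=" not in part:
            continue
        name, value = part.split("=", 1)
        # Values may be folded across lines, so drop all whitespace.
        tags[name.strip()] = "".join(value.split())
    return tags


tags = dkim_tags(RAW_EMAIL)
print(tags["d"], tags["s"], tags["a"])
```

A real check would go one step further and validate the `b` signature against the public key published in DNS for the `d` domain and `s` selector; that step is left out of this sketch.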

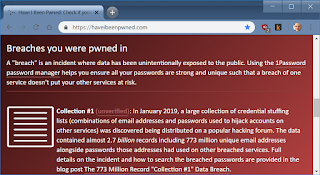

| 2020-04-02 01:23:55 | About them Zoom vulns... (direct link) | Today a couple of vulnerabilities were announced in Zoom, the popular work-from-home conferencing app. Hackers can possibly exploit these to do evil things to you, such as steal your password. Because of COVID-19, these vulns have hit the mainstream media. This means my non-techy friends and relatives have been asking about it. I thought I'd write up a blogpost answering their questions. The short answer is that you don't need to worry about it. Unless you do bad things, like using the same password everywhere, it's unlikely to affect you. You should worry more about wearing pants on your Zoom video conferences in case you forget and stand up. Now is a good time to remind people to stop using the same password everywhere and to visit https://haveibeenpwned.com to view all the accounts where they've had their password stolen. Using the same password everywhere is the #1 vulnerability the average person is exposed to, and is a possible problem here. For critical accounts (Windows login, bank, email), use a different password for each. (Sure, for accounts you don't care about, use the same password everywhere; I use 'Foobar1234'). Write these passwords down on paper and put that paper in a secure location. Don't print them, don't store them in a file on your computer. Writing it on a Post-It note taped under your keyboard is adequate security if you trust everyone in your household. If hackers use this Zoom method to steal your Windows password, then you aren't in much danger. They can't log into your computer because it's almost certainly behind a firewall. And they can't use the password on your other accounts, because it's not the same. Why you shouldn't worry: The reason you shouldn't worry about this password stealing problem is that it's everywhere, not just Zoom. It's also here in this browser you are using. 
If you click on file://hackme.robertgraham.com/foo/bar.html, then I can grab your password in exactly the same way as if you clicked on that vulnerable link in Zoom chat. That's how the Zoom bug works: hackers post these evil links in the chat window during a Zoom conference. It's hard to say Zoom has a vulnerability when so many other applications have the same issue. Many home ISPs, such as Comcast, AT&T, Cox, Verizon Wireless, and others, block such connections to the Internet. If this is the case, when you click on the above link, nothing will happen. Your computer will try to contact hackme.robertgraham.com, and fail. You may be protected from clicking on the above link without doing anything. If your ISP doesn't block such connections, you can configure your home router to do this. Go into the firewall settings and block "TCP port 445 outbound". Alternatively, you can configure Windows to only follow such links internal to your home network, but not to the Internet. If hackers (like me if you click on the above link) get your password, then they probably can't use it. That's because while your home Internet router allows outbound connections, it (almost always) blocks inbound connections. Thus, if I steal your Windows password, I can't use it to log into your home computer unless I also break physically into your house. But if I can break into your computer physically, I can hack it without knowing your password. The same arguments apply to corporate desktops. Corporations should block such outbound connections. They | Hack Vulnerability Threat | |||

| 2020-03-06 15:57:01 | Huawei backdoors explanation, explained (direct link) | Today Huawei published a video explaining the concept of "backdoors" in telco equipment. Many are criticizing the video for being tone deaf. I don't understand this concept of "tone deafness". Instead, I want to explore the facts. "Does the word “#backdoor” seem frightening? That's because it's often used incorrectly – sometimes to deliberately create fear. Watch to learn the truth about backdoors and other types of network access. #cybersecurity pic.twitter.com/NEUXbZbcqw" (Huawei, @Huawei, March 4, 2020) This video seems to be a response to last month's story from the Wall Street Journal about Huawei misusing law enforcement backdoors. All telco equipment has backdoors usable only by law enforcement; the accusation is that Huawei has a backdoor into this backdoor, so that Chinese intelligence can use it. That story was bogus. Sure, Huawei is probably guilty of providing backdoor access to the Chinese government, but something is deeply flawed with this particular story. We know something is wrong with the story because the U.S. officials cited are anonymous. We don't know who they are or what position they have in the government. If everything they said was true, they wouldn't insist on being anonymous, but would stand up and declare it in a press conference so that every newspaper could report it. When something is not true or spun, then they anonymously "leak" it to a corrupt journalist to report it their way. This is objectively bad journalism. The Society of Professional Journalists calls this the "Washington Game". They also discuss this on their Code of Ethics page. Yes, it's really common in Washington D.C. reporting, you see it all the time, especially with the NYTimes, Wall Street Journal, and Washington Post. But it happens because what the government says is news, regardless of whether it's false or propaganda, giving government officials the ability to influence journalists. 
Exclusive access to corrupt journalists is how they influence stories. We know the reporter is being especially shady because of the one quote in the story that is attributed to a named official: “We have evidence that Huawei has the capability secretly to access sensitive and personal information in systems it maintains and sells around the world,” said national security adviser Robert O'Brien. This quote is deceptive because O'Brien doesn't say any of the things that readers assume he's saying. He doesn't actually confirm any of the allegations in the rest of the story. It doesn't say: that Huawei has used that capability; that Huawei intentionally put that capability there; that this is special to Huawei (rather than everywhere in the industry). In fact, this quote applies to every telco equipment maker. They all have law enforcement backdoors. These backdoors always have "controls" to prevent them from being misused. But these controls are always flawed, either in design or how they are used in the real world. Moreover, all telcos have maintenance/service contracts with the equipment makers. When there are ways around such controls, it's the company's own support engineers who will know them. I absolutely believe Huawei that it has don | Hack Threat | |||

| 2020-01-28 16:53:00 | There's no evidence the Saudis hacked Jeff Bezos's iPhone (direct link) | There's no evidence the Saudis hacked Jeff Bezos's iPhone. This is the conclusion of all the independent experts who have reviewed the public report behind the U.N.'s accusations. That report failed to find evidence proving the theory, but instead simply found unknown things it couldn't explain, which it pretended was evidence. This is a common flaw in such forensics reports. When there's evidence, it's usually found and reported. When there's no evidence, investigators keep looking. Today's devices are complex, so if you keep looking, you always find anomalies you can't explain. There are only two results from such investigations: proof of bad things or anomalies that suggest bad things. There's never any proof that no bad things exist (at least, not in my experience). Bizarre and inexplicable behavior doesn't mean a hacker attack. Engineers trying to debug problems, and support technicians helping customers, find such behavior all the time. Pretty much every user of technology experiences this. Paranoid users often think there's a conspiracy against them when electronics behave strangely, but "behaving strangely" is perfectly normal. When you start with the theory that hackers are involved, then you have an explanation for all that's unexplainable. It's all consistent with the theory, thus proving it. This is called "confirmation bias". It's the same thing that props up conspiracy theories like UFOs: space aliens can do anything, thus, anything unexplainable is proof of space aliens. Alternate explanations, like skunkworks testing a new jet, never seem as plausible. The investigators were hired to confirm bias. 
Their job wasn't to do an unbiased investigation of the phone, but instead, to find evidence confirming the suspicion that the Saudis hacked Bezos. Remember the story started in February of 2019 when the National Enquirer tried to extort Jeff Bezos with sexts between him and his paramour Lauren Sanchez. Bezos immediately accused the Saudis of being involved. Even after it was revealed that the sexts came from Michael Sanchez, the paramour's brother, Bezos's team doubled down on their accusations that the Saudis hacked Bezos's phone. The FTI report tells a story beginning with the Saudi Crown Prince sending Bezos a message using WhatsApp containing a video. The story goes: "The downloader that delivered the 4.22MB video was encrypted, delaying or preventing further study of the code delivered along with the video. It should be noted that the encrypted WhatsApp file sent from MBS' account was slightly larger than the video itself." This story is invalid. Such messages use end-to-end encryption, which means that while nobody in between can decrypt them (not even WhatsApp), anybody with possession of the ends can. That's how the technology is supposed to work. If Bezos loses/breaks his phone and needs to restore a backup onto a new phone, the backup needs to have the keys used to decrypt the WhatsApp messages. Thus, the forensics image taken by the investigators had the necessary keys to decrypt the video -- the investigators simply didn't know about them. In a previous blogpost I explain these magical WhatsApp keys and where to find them so that anybody, even you at home, can forensics their own iPhone, retrieve these keys, and decrypt their own videos. | Hack | Uber | ||

| 2020-01-28 14:24:42 | How to decrypt WhatsApp end-to-end media files (direct link) | At the center of the "Saudis hacked Bezos" story is a mysterious video file investigators couldn't decrypt, sent by Saudi Crown Prince MBS to Bezos via WhatsApp. In this blog post, I show how to decrypt it. Once decrypted, we'll either have a smoking gun proving the Saudis' guilt, or exoneration showing that nothing in the report implicated the Saudis. I show how everyone can replicate this on their own iPhones. The steps are simple: (1) back up the phone to your computer (macOS or Windows), using one of many freely available tools, such as Apple's own iTunes app; (2) extract the database containing WhatsApp messages from that backup, using one of many freely available tools, or just hunt for the specific file yourself; (3) grab the .enc file and decryption key from that database, using one of many freely available SQL tools; (4) decrypt the video, using a tool I just created on GitHub. End-to-end encrypted downloader: The FTI report says that within hours of receiving a suspicious video, Bezos's iPhone began behaving strangely. The report says: "...analysis revealed that the suspect video had been delivered via an encrypted downloader host on WhatsApp's media server. Due to WhatsApp's end-to-end encryption, the contents of the downloader cannot be practically determined." The phrase "encrypted downloader" is not a technical term but something the investigators invented. It sounds like a term we use in malware/viruses, where a first stage downloads later stages using encryption. But that's not what happened here. Instead, the file in question is simply the video itself, encrypted, with a few extra bytes due to encryption overhead (10 bytes of checksum at the start, up to 15 bytes of padding at the end). Now let's talk about "end-to-end encryption". This only means that those in the middle can't decrypt the file, not even WhatsApp's servers. 
But those on the ends can -- and that's what we have here, one of the ends. Bezos can upgrade his old iPhone X to a new iPhone XS by backing up the old phone and restoring onto the new phone and still decrypt the video. That means the decryption key is somewhere in the backup. Specifically, the decryption key is in the file named 7c7fba66680ef796b916b067077cc246adacf01d in the backup, in the table named ZWAMDIAITEM, as the first protobuf field in the field named ZMEDIAKEY. These details are explained below. WhatsApp end-to-end encryption of video: Let's discuss how videos are transmitted using text messages. We'll start with SMS, the old messaging system built into the phone system that predates modern apps. It can only send short text messages of a few hundred bytes at a time. These messages are too small to hold a complete video many megabytes in size. They are sent through the phone system itself, not via the Internet. When you send a video via SMS, what happens is that the video is uploaded to the phone company's servers via HTTP. Then, a text message is sent with a URL link to the video. When the recipient gets the message, their phone downloads the video from the URL. The text messages going through the phone system just contain the URL; an Internet connection is used to transfer the video. This happens transparently to the user. The user just sees the video and not the URL. They'll only notice a difference when using ancient 2G mobile phones that can get the SMS messages but which can't actually connect to the Internet. A similar thing happens with WhatsApp, only with encryption added. The sender first encryp | Malware Hack Tool | |||
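The entry above says the key sits in "the first protobuf field" of the ZMEDIAKEY value. A minimal sketch of what reading that first field could look like follows, assuming the value is a standard protobuf message whose first field is length-delimited (wire type 2). The sample bytes are invented for illustration and are not a real key; how the extracted bytes are then used for decryption is not covered by the excerpt and is not shown here.

```python
from typing import Tuple


def read_varint(buf: bytes, pos: int) -> Tuple[int, int]:
    """Decode a protobuf varint at pos; return (value, next position)."""
    result = 0
    shift = 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift  # low 7 bits carry data
        if not byte & 0x80:               # high bit clear: last byte
            return result, pos
        shift += 7


def first_bytes_field(blob: bytes) -> Tuple[int, bytes]:
    """Return (field number, payload) of the first field in blob.

    Assumes that field is length-delimited (wire type 2).
    """
    tag, pos = read_varint(blob, 0)
    field_number, wire_type = tag >> 3, tag & 0x07
    if wire_type != 2:
        raise ValueError("first field is not length-delimited")
    length, pos = read_varint(blob, pos)
    if pos + length > len(blob):
        raise ValueError("blob is truncated")
    return field_number, blob[pos:pos + length]


# Made-up blob: field 1 holds 4 bytes, field 2 holds the bytes b"hi".
SAMPLE_BLOB = b"\x0a\x04\xde\xad\xbe\xef\x12\x02hi"
number, payload = first_bytes_field(SAMPLE_BLOB)
print(number, payload.hex())
```

In practice the blob would be read out of the backup's database with any SQL tool (Python's built-in `sqlite3` module is one option) before being passed to a parser like this.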

| 2019-09-26 13:24:44 | CrowdStrike-Ukraine Explained (direct link) | Trump's conversation with the President of Ukraine mentions "CrowdStrike". I thought I'd explain this. What was said? This is the text from the conversation: “I would like you to find out what happened with this whole situation with Ukraine, they say Crowdstrike... I guess you have one of your wealthy people... The server, they say Ukraine has it.” Personally, I occasionally interrupt myself while speaking, so I'm not sure I'd criticize Trump here for his incoherence. But at the same time, we aren't quite sure what was meant. It's only meaningful in the greater context. Trump has talked before about CrowdStrike's investigation being wrong, a rich Ukrainian owning CrowdStrike, and a "server". He's talked a lot about these topics before. Who is CrowdStrike? They are a cybersecurity firm that, among other things, investigates hacker attacks. If you've been hacked by a nation state, then CrowdStrike is the sort of firm you'd hire to come and investigate what happened, and help prevent it from happening again. Why is CrowdStrike mentioned? Because they were the lead investigators in the DNC hack who came to the conclusion that Russia was responsible. The pro-Trump crowd believes this conclusion is false. If the conclusion is false, then it must mean CrowdStrike is part of the anti-Trump conspiracy. Trump has always had a thing for CrowdStrike since their first investigation. It's intensified since the Mueller report, which solidified the ties between Trump-Russia, and Russia-DNC-Hack. Personally, I'm always suspicious of such investigations. Politics, either grand (on this scale) or small (internal company politics), seems to drive investigations, creating firm conclusions based on flimsy evidence. But CrowdStrike has made public some pretty solid information, such as BitLy accounts used both in the DNC hacks and other (known) targets of state-sponsored Russian hackers. 
Likewise, the Mueller report had good data on Bitcoin accounts. I'm sure if I looked at all the evidence, I'd have more doubts, but at the same time, of the politicized hacking incidents out there, this seems to have the best (public) support for the conclusion. What's the conspiracy? The basis of the conspiracy is that the DNC hack was actually an inside job. Some former intelligence officials led by Bill Binney claim they looked at some data and found that the files were copied "locally" instead of across the Internet, and therefore, it was an insider who did it and not a remote hacker. I debunk the claim here, but the short explanation is: of course the files were copied "locally", the hacker was inside the network. In my long experience investigating hacker intrusions, and performing them myself, I know this is how it's normally done. I mention my own experience because I'm technical and know these things, in contrast with Bill Binney and those other intelligence officials who have no experience with such things. It sounds impressive that he's formerly of the NSA, but he was a mid-level manager in charge of budgets. Binney has never performed a data breach investigation, has never performed a pentest. There are other parts to the conspiracy. In the middle of all this, a DNC staffer was murdered on the street, possibly due to a mugging. Naturally this gets included as part of the conspiracy, this guy ("Seth Rich") must've been the "insider" in this attack, and mus | Data Breach Hack Guideline | NotPetya | ||

| 2019-08-04 18:52:45 | Securing devices for DEFCON (direct link) | There's been much debate whether you should get burner devices for hacking conventions like DEF CON (phones or laptops). A better discussion would be to list those things you should do to secure yourself before going, just in case. These are the things I worry about: backup before you go; update before you go; correctly locking your devices with full disk encryption; correctly configuring WiFi; Bluetooth devices; mobile phone vs. Stingrays; USB. Backup: Traveling means a higher chance of losing your device. In my review of crime statistics, theft seems less of a threat than in whatever city you are coming from. My guess is that while thieves may want to target tourists, the police want even more to target gangs of thieves, to protect the cash cow that is the tourist industry. But you are still more likely to accidentally leave a phone in a taxi or have your laptop crushed in the overhead bin. If you haven't recently backed up your device, now would be an extra useful time to do this. Anything I want backed up on my laptop is already in Microsoft's OneDrive, so I don't pay attention to this. However, I have a lot of pictures on my iPhone that I don't have in iCloud, so I copy those off before I go. Update: Like most of you, I put off updates unless they are really important, updating every few months rather than every month. Now is a great time to make sure you have the latest updates. Back up before you update, but then, I already mentioned that above. Full disk encryption: This is enabled by default on phones, but not the default for laptops. It means that if you lose your device, adversaries can't read any data from it. You are at risk if you have a simple unlock code, like a predictable pattern or a 4-digit code. The longer and less predictable your unlock code, the more secure you are. I use iPhone's "face id" on my phone so that people looking over my shoulder can't figure out my passcode when I need to unlock the phone. 
However, because this enables the police to easily unlock my phone, by putting it in front of my face, I also remember how to quickly disable face id (by holding the buttons on both sides for 2 seconds). As for laptops, it's usually easy to enable full disk encryption. However, there are some gotchas. Microsoft requires a TPM for its BitLocker full disk encryption, which your laptop might not support. I don't know why all laptops don't just have TPMs, but they don't. You may be able to use some tricks to get around this. There are also third party full disk encryption products that use simple passwords. If you don't have a TPM, then hackers can brute-force crack your password, trying billions per second. This applies to my MacBook Air, which is the 2017 model before Apple started adding their "T2" chip to all their laptops. Therefore, I need a strong login password. I deal with this on my MacBook by having two accounts. When I power on the device, I log into an account using a long/complicated password. I then switch to an account with a simpler password for going in/out of sleep mode. This second account can't be used to decrypt the drive. On Linux, my password to decrypt the drive is similarly long, while the user account password is pretty short. I ignore the "evil maid" threat, because my devices are always with me rather than in | Hack Threat Guideline | |||

| 2019-04-11 20:22:14 | Assange indicted for breaking a password (lien direct) | In today's news, after 9 years holed up in the Ecuadorian embassy, Julian Assange has finally been arrested. The US DoJ accuses Assange for trying to break a password. I thought I'd write up a technical explainer what this means.According to the US DoJ's press release:Julian P. Assange, 47, the founder of WikiLeaks, was arrested today in the United Kingdom pursuant to the U.S./UK Extradition Treaty, in connection with a federal charge of conspiracy to commit computer intrusion for agreeing to break a password to a classified U.S. government computer.The full indictment is here.It seems the indictment is based on already public information that came out during Manning's trial, namely this log of chats between Assange and Manning, specifically this section where Assange appears to agree to break a password: What this says is that Manning hacked a DoD computer and found the hash "80c11049faebf441d524fb3c4cd5351c" and asked Assange to crack it. Assange appears to agree.So what is a "hash", what can Assange do with it, and how did Manning grab it?Computers store passwords in an encrypted (sic) form called a "one way hash". Since it's "one way", it can never be decrypted. However, each time you log into a computer, it again performs the one way hash on what you typed in, and compares it with the stored version to see if they match. Thus, a computer can verify you've entered the right password, without knowing the password itself, or storing it in a form hackers can easily grab. Hackers can only steal the encrypted form, the hash.When they get the hash, while it can't be decrypted, hackers can keep guessing passwords, performing the one way algorithm on them, and see if they match. With an average desktop computer, they can test a billion guesses per second. 
This may seem like a lot, but if you've chosen a sufficiently long and complex password (more than 12 characters with letters, numbers, and punctuation), then hackers can't guess it. It's unclear what format this password is in, whether "LM" or "NTLM". Using my notebook computer, I could attempt to crack the LM format with the hashcat password cracker, using the command "hashcat -m 3000 -a 3 80c11049faebf441d524fb3c4cd5351c ?a?a?a?a?a?a?a". It'll take about 22 hours on my laptop to crack this. However, this doesn't succeed, so it seems that this isn't in the LM format. Unlike other password formats, the "LM" format can only be 7 characters in length, so we can completely crack it. |

Hack | |||
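The guess-and-compare process described in the Assange entry above can be sketched in a few lines. This is a minimal illustration, not how Windows or hashcat actually work: SHA-256 stands in for the real password format (an assumption, chosen because it ships with Python), and all function names are hypothetical. The last function reproduces the arithmetic behind the timing claims, assuming the 95 printable ASCII characters covered by hashcat's ?a mask and the billion guesses per second quoted in the text.

```python
import hashlib
from itertools import product
from typing import Optional


def one_way_hash(password: str) -> str:
    """Hash a password. SHA-256 is a stand-in here, not the Windows format."""
    return hashlib.sha256(password.encode("utf-8")).hexdigest()


def verify(stored_hash: str, typed_password: str) -> bool:
    """Login check: re-hash what was typed and compare with the stored hash."""
    return one_way_hash(typed_password) == stored_hash


def brute_force(stored_hash: str, alphabet: str, max_len: int) -> Optional[str]:
    """Try every string over `alphabet` up to `max_len` characters long."""
    for length in range(1, max_len + 1):
        for combo in product(alphabet, repeat=length):
            guess = "".join(combo)
            if one_way_hash(guess) == stored_hash:
                return guess
    return None  # the whole search space was exhausted without a match


def hours_to_exhaust(charset_size: int, length: int, guesses_per_second: float) -> float:
    """Hours needed to try every password of exactly `length` characters."""
    return charset_size ** length / guesses_per_second / 3600


if __name__ == "__main__":
    stolen = one_way_hash("cab")            # what an attacker would steal
    print(brute_force(stolen, "abc", 3))    # tiny search space, found quickly
    print(hours_to_exhaust(95, 7, 1e9))     # 7 characters from 95 symbols
    print(hours_to_exhaust(95, 13, 1e9))    # 13 characters from 95 symbols
```

At a billion guesses per second, the full 7-character space (95**7 candidates) is exhausted in about 19 hours, which is in line with the roughly 22 hours quoted for a laptop. A 13-character password over the same alphabet would take more than a billion years at that rate, which is why length matters so much.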

| 2019-03-12 18:43:41 | Some notes on the Raspberry Pi (direct link) | I keep seeing this article in my timeline today about the Raspberry Pi. I thought I'd write up some notes about it. The Raspberry Pi costs $35 for the board, but to achieve a fully functional system, you'll need to add a power supply, storage, and heatsink, which ends up costing around $70 for the full system. At that price range, there are lots of alternatives. For example, you can get a fully functional $99 Windows x86 PC that's just as small and consumes less electrical power. There are a ton of Raspberry Pi competitors, often cheaper with better hardware, such as the Odroid-C2, Rock64, Nano Pi, Orange Pi, and so on. There are also a bunch of "Android TV boxes" running roughly the same hardware for cheaper prices, that you can wipe and reinstall Linux on. You can also acquire Android phones for $40. However, while "better" technically, the alternatives all suffer from the fact that the Raspberry Pi is better supported -- vastly better supported. The ecosystem of ARM products focuses on getting Android to work, and does poorly at getting generic Linux working. The Raspberry Pi has the worst, most out-of-date hardware of any of its competitors, but I'm not sure I can wholly recommend any competitor, as they simply don't have the level of support the Raspberry Pi does. The defining feature of the Raspberry Pi isn't that it's a small/cheap computer, but that it's a computer with a bunch of GPIO pins. When you look at the board, it doesn't just have the recognizable HDMI, Ethernet, and USB connectors, but also has 40 raw pins strung out across the top of the board. There are also a couple of extra connectors for cameras. The concept wasn't simply that of a generic computer, but a maker device, for robot servos, temperature and weather measurements, cameras for a telescope, controlling Christmas light displays, and so on. I think this is underemphasized in the above story. 
The reason it finds use in factories is that they have the same sorts of needs for controlling things that maker kids do. A lot of industrial needs can be satisfied by a teenager buying $50 of hardware off Adafruit and writing a few Python scripts. On the other hand, support for industrial uses is nearly nonexistent. The reason commercial products cost $1000 is because somebody will answer your phone call, unlike the teenager who's currently out at the movies with their friends. However, with more and more people having experience with the Raspberry Pi, presumably you'll be able to hire generic consultants soon that can maintain th |

Hack | |||

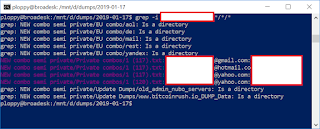

| 2019-02-08 10:08:18 | How Bezos' dick pics might've been exposed (direct link) | In the news, the National Enquirer has extorted Amazon CEO Jeff Bezos by threatening to publish the sext-messages/dick-pics he sent to his mistress. How did the National Enquirer get them? There are rumors that maybe Trump's government agents or the "deep state" were involved in this sordid mess. The more likely explanation is that it was a simple hack. Teenage hackers regularly do such hacks -- they aren't hard. This post is a description of how such hacks might've been done. To start with, from which end were they stolen? As a billionaire, I'm guessing Bezos himself has pretty good security, so I'm going to assume it was the recipient, his girlfriend, who was hacked. The hack starts by finding the email address she uses. People use the same email address for both public and private purposes. There are lots of "people finder" services on the Internet that you can use to track this information down. These services are partly scams, using "dark patterns" to get you to spend tons of money on them without realizing it, so be careful. Using one of these sites, I quickly found a couple of email accounts she's used, one at HotMail, another at GMail. I've blocked out her address. I want to describe how easy the process is; I'm not trying to doxx her. Next, I enter those email addresses into the website http://haveibeenpwned.com to see if hackers have ever stolen her account password. When hackers break into websites, they steal the account passwords, and then exchange them on the dark web with other hackers. The above website tracks this, helping you discover if one of your accounts has been so compromised. You should take this opportunity to enter your email address in this site to see if it's been so "pwned". I find that her email addresses have been included in that recent dump of 770 million accounts called "Collection#1". 
The http://haveibeenpwned.com site won't disclose the passwords, only the fact they've been pwned. However, I have a copy of that huge Collection#1 dump, so I can search it myself to get her password. I get a few hits, all with the same password. At this point, I have a password, but not necessarily the password to access any useful accounts. For all I know, this was the |

Hack | |||

| 2019-01-28 22:21:56 | Passwords in a file (direct link) | My dad is on some sort of committee for his local home owners association. He asked about saving all the passwords in a file stored on Microsoft's cloud OneDrive, along with policy/procedures for the association. I assumed he called because I'm an internationally recognized cyberexpert. Or maybe he just wanted to chat with me*. Anyway, I thought I'd write up a response. The most important rule of cybersecurity is that it depends upon the risks/costs. That means if what you want to do is write down the procedures for operating a garden pump, including the passwords, then that's fine. This is because there's not much danger of hackers exploiting this. On the other hand, if the question is passwords for the association's bank account, then DON'T DO THIS. Such passwords should never be online. Instead, write them down and store the pieces of paper in a secure place. OneDrive is secure, as much as anything is. The problem is that people aren't secure. There's probably one member of the home owner's association who is constantly infecting themselves with viruses or falling victim to scams. This is the person who you are giving OneDrive access to. This is fine for the meaningless passwords, but very much not fine for bank accounts. OneDrive also has some useful backup features. Thus, when one of your members infects themselves with ransomware, which will encrypt all the OneDrive's contents, you can retrieve the old versions of the documents. I highly recommend groups like the home owner's association use OneDrive. I use it as part of my Office 365 subscription for $99/year. Just don't do this for banking passwords. In fact, not only should you not store such a password online, you should strongly consider getting "two factor authentication" set up for the account. 
This is a system where you need an additional hardware device/token in addition to a password (in some cases, your phone can be used as the additional device). This may not work if multiple people need to access a common account, but then, you should have multiple passwords, one for each individual, in such cases. Your bank should have descriptions of how to set this up. If your bank doesn't offer two factor authentication for its websites, then you really need to switch banks. For individuals, write your passwords down on paper. For elderly parents, write down a copy and give it to your kids. It should go without saying: store that paper in a safe place, ideally a safe, not a post-it note glued to your monitor. Again, this is for your important passwords, like for bank accounts and e-mail. For your Spotify or Pandora accounts (music services), security really doesn't matter. Lastly, the way hackers most often break into things like bank accounts is because people use the same password everywhere. When one site gets hacked, those passwords are then used to hack accounts on other websites. Thus, for important accounts, don't reuse passwords; make them unique for just that account. Since you can't remember unique passwords for every account, write them down. You can check if your password has been hacked this way by checking http://haveibeenpwned.com and entering your email address. Entering my dad's email address, I find that his accounts at Adobe, LinkedIn, and Disqus have been discovered by hackers (due to hacks of those websites) and published. I sure hope whatever these passwords were that they are not the same or similar to his passwords for GMail or his bank account. * The lame joke at the top was my dad's, so don't blame me :-) |

Hack | |||

| 2018-11-02 02:57:36 | Why no cyber 9/11 for 15 years? (direct link) | This article in The Atlantic asks why there hasn't been a cyber-terrorist attack for the last 15 years, or as it phrases it: "National-security experts have been warning of terrorist cyberattacks for 15 years. Why hasn't one happened yet?" As a pen-tester who's broken into power grids and found 0day exploits in control center systems, I thought I'd write up some comments. Instead of asking why one hasn't happened yet, maybe we should instead ask why national-security experts keep warning about them. One possible answer is that national-security experts are ignorant. I get the sense that "national security experts" have very little expertise in cyber. That's why I include a brief resume at the top of this article: I've actually broken into a power grid and found 0days in critical power grid products (specifically, the ABB implementation of ICCP on AIX -- it's rather an obvious buffer-overflow, *cough* ASN.1 *cough*, I don't know if they ever fixed it). Another possibility is that they are fear mongering in order to support their agenda. That's the problem with "experts": they get their expertise by being employed to achieve some goal. The ones who know most about an issue are simultaneously the ones most biased about an issue. They have every incentive to make people be afraid, and little incentive to tell the truth. The most likely answer, though, is simply because they can. Anybody can warn of "digital 9/11" and be taken seriously, regardless of expertise. They'll get all the press. It's always the Morally Right thing to say. You never have to back it up with evidence. Conversely, those who say the opposite don't get the same level of press, and are frequently challenged to defend their abnormal stance. Indeed, that's how this article by The Atlantic works. 
Its entire premise is that the national security experts are still "right" even though their predictions haven't happened, and it's reality that's "wrong". Now let's consider the original question. One good answer in the article is "cause certain types of fear and terror, that garner certain media attention, that galvanize followers". Blowing something up causes more fear in the target population than deleting some data. But the same is true of the terrorists themselves, that they prefer violence. In other words, what motivates terrorists, the ends or the means? Is it the need to achieve a political goal? Or is it simply about looking for an excuse to commit violence? I suspect that it's the latter issue. It's not that terrorists are violent so much as violent people are attracted to terrorism. This can explain a lot, such as why they have such poor op-sec and encryption, as I've written about before. They enjoy learning how to shoot guns and trigger bombs, but they don't enjoy learning how to use a computer correctly. I've explored the cyber Islamic dark web and come to a couple conclusions about it. The primary motivation of these hackers is gay porn. A frequent initiation rite to gain access to these forums is to post pictures of your, well, equipment. Such things are repressed in their native countries and societies, so hacking becomes a necessary skill in order to get it. It's hard for us to understand their motivations. From our western perspective, we'd think gay young men would be on our side, motivated to fight against their own governments in defense of gay rights, in order to achieve marriage equality. None of them want that. Their goal is to get married and have children. Sure, they want gay sex and intimate relationships with men, but they also want a subservient wife who manages the household, and the deep family ties that | Hack | |||

| 2018-10-22 16:33:56 | Some notes for journalists about cybersecurity (direct link) | The recent Bloomberg article about Chinese hacking motherboards is a great opportunity to talk about problems with journalism. Journalism is about telling the truth, not a close approximation of the truth, but the true truth. They don't do a good job at this in cybersecurity. Take, for example, a recent incident where the Associated Press fired a photographer for photoshopping his shadow out of a photo. The AP took a scorched-earth approach, not simply firing the photographer, but removing all his photographs from their library. That's because there is a difference between truth and near truth. Now consider Bloomberg's story, such as a photograph of a tiny chip. Is that a photograph of the actual chip the Chinese inserted into the motherboard? Or is it another chip, representing the size of the real chip? Is it truth or near truth? Or consider the technical details in Bloomberg's story. They are garbled, as this discussion shows. Something like what Bloomberg describes is certainly plausible; something exactly like what Bloomberg describes is impossible. Again there is the question of truth vs. near truth. There are other near truths involved. For example, we know that supply chains often replace high-quality expensive components with cheaper, lower-quality knockoffs. It's perfectly plausible that some of the incidents Bloomberg describes are that known issue, which they are then hyping as being hacker chips. This demonstrates how truth and near truth can be quite far apart, telling very different stories. Another example is a NYTimes story about a terrorist's use of encryption. As I've discussed before, the story has numerous "near truth" errors. The NYTimes story is based upon a transcript of an interrogation of the hacker. 
The French newspaper Le Monde published excerpts from that interrogation, with details that differ slightly from the NYTimes article. One of the justifications journalists use is that near truth is easier for their readers to understand. First of all, that's not justification for falsehoods. If the words mean something else, then it's false. It doesn't matter if it's simpler. Secondly, I'm not sure they actually are easier to understand. It's still techy gobbledygook. In the Bloomberg article, if I as an expert can't figure out what actually happened, then I know that the average reader can't, either, no matter how much you've "simplified" the language. Stories can solve this by giving the actual technical terms that experts can understand, then explaining them. Yes, it eats up space, but if you care about the truth, it's necessary. In groundbreaking stories like Bloomberg's, the length is already enough that the average reader won't slog through it. Instead, it becomes a seed for lots of other coverage that explains the story. In such cases, you want to get the techy details, the actual truth, correct, so that we experts can stand behind the story and explain it. Otherwise, going for the simpler near truth means that all us experts simply question the veracity of the story. The companies mentioned in the Bloomberg story have called it an out |

Hack | |||

| 2018-10-16 17:06:57 | Notes on the UK IoT cybersec "Code of Practice" (direct link) | The British government has released a voluntary "Code of Practice" for securing IoT devices. I thought I'd write some notes on it. First, the good parts. Before I criticize the individual points, I want to praise it for having a clue. So many of these sorts of things are written by the clueless, those who want to be involved in telling people what to do, but who don't really understand the problem. The first part of the clue is restricting the scope. Consumer IoT is so vastly different from things like cars, medical devices, industrial control systems, or mobile phones that they should never really be talked about in the same guide. The next part of the clue is understanding the players. It's not just the device that's a problem, but also the cloud and mobile app part that relates to the device. Though they do go too far and include the "retailer", which is a bit nonsensical. Lastly, while I'm critical of most all the points on the list and how they are described, it's probably a complete list. There's not much missing, and at the same time, it includes little that isn't necessary. In contrast, a lot of other IoT security guides lack important things, or take the "kitchen sink" approach and try to include everything conceivable. 1) No default passwords. Since the Mirai botnet of 2016 famously exploited default passwords, this has been at the top of everyone's list. It's the most prominent feature of the recent California IoT law. It's the major feature of federal proposals. But this is only a superficial understanding of what really happened. The issue wasn't default passwords so much as Internet-exposed Telnet. IoT devices are generally based on Linux, which maintains operating-system passwords in the /etc/passwd file. However, devices almost never use that. Instead, the web-based management interface maintains its own password database. 
The underlying Linux system is vestigial like an appendix and not really used. But these devices exposed Telnet, providing a path to this otherwise unused functionality. I bought several of the Mirai-vulnerable devices, and none of them used /etc/passwd for anything other than Telnet. Another way default passwords get exposed in IoT devices is through debugging interfaces. Manufacturers configure the system one way for easy development, and then ship a separate "release" version. Sometimes they make a mistake and ship the development backdoors as well. Programmers often insert secret backdoor accounts into products for development purposes without realizing how easy it is for hackers to discover those passwords. The point is that this focus on backdoor passwords is misunderstanding the problem. Device makers can easily believe they are compliant with this directive while still having backdoor passwords. As for the web management interface, saying "no default passwords" is useless. Users have to be able to set up the device the first time, so there has to be some means to connect to the device without passwords initially. Device makers don't know how to do this without default passwords. Instead of mindless guidance of what not to do, a document needs to be written that explains how devices can do this both securely and easily enough for users to use. Humorously, the footnotes in this section do reference external documents that might explain this, but they are the wrong documents, appropriate for things like website password policies, but inappropriate for IoT web interfaces. This again demonstrates how they have only a superficial understanding of the problem. 2) Implement a vulnerability disclosure policy. This is a clueful item, and it should be the #1 item on every list. | Hack | |||
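Since the entry above argues that the real Mirai issue was exposed Telnet rather than default passwords as such, a quick way to see whether a device you own listens on the Telnet port (TCP 23) can be sketched as follows. This is only a reachability check under simple assumptions: the function name is hypothetical, the address in the usage line is a placeholder for your own device, and a successful connection says nothing about which passwords the device accepts.

```python
import socket


def tcp_port_is_open(host: str, port: int = 23, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        # create_connection performs the TCP handshake and raises OSError
        # (including timeouts and "connection refused") on failure.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # Placeholder address: substitute a device on your own network.
    print(tcp_port_is_open("192.168.1.1"))
```

Checking from inside your home network only shows that the service is running; whether it is exposed to the Internet also depends on the router's port forwarding and firewall rules.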

| 2018-10-14 04:57:46 | How to irregular cyber warfare (direct link) | Somebody (@thegrugq) pointed me to this article on "Lessons on Irregular Cyber Warfare", citing the masters like Sun Tzu, von Clausewitz, Mao, Che, and the usual characters. It tries to answer: "...as an insurgent, which is in a weaker power position vis-a-vis a stronger nation state; how does cyber warfare plays an integral part in the irregular cyber conflicts in the twenty-first century between nation-states and violent non-state actors or insurgencies". I thought I'd write a rebuttal. None of these people provide any value. If you want to figure out cyber insurgency, then you want to focus on the technical "cyber" aspects, not "insurgency". I regularly read military articles about cyber written by those who, like the author of the above article, demonstrate little experience in cyber. The chief technical lesson for the cyber insurgent is the Birthday Paradox. Let's say, hypothetically, you go to a party with 23 people total. What's the chance that any two people at the party have the same birthday? The answer is 50.7%. With a party of 75 people, the chance rises to 99.9% that two will have the same birthday. The paradox is that your intuitive way of calculating the odds is wrong. You are thinking the odds are like those of somebody having the same birthday as yourself, which is indeed roughly 23 out of 365. But we aren't talking about you vs. the remainder of the party, we are talking about any possible combination of two people. This dramatically changes how we do the math. In cryptography, this is known as the "Birthday Attack". One crypto task is to uniquely fingerprint documents. Historically, the most popular way of doing this was with an algorithm known as "MD5" which produces 128-bit fingerprints. Given a document with an MD5 fingerprint, it's impossible to create a second document with the same fingerprint. However, with MD5, it's possible to create two documents with the same fingerprint. 
In other words, we can't modify only one document to get a match, but we can keep modifying two documents until their fingerprints match. As with the party, finding somebody with your birthday is hard, but finding any two people with the same birthday is easier. The same principle works with insurgencies. Accomplishing one specific goal is hard, but accomplishing any goal is easy. Trying to do a narrowly defined task to disrupt the enemy is hard, but it's easy to support a group of motivated hackers and let them do any sort of disruption they can come up with. The above article suggests a means of using cyber to disrupt a carrier attack group. This is an example of something hard, a narrowly defined attack that is unlikely to actually work in the real world. Conversely, consider the attacks attributed to North Korea, like those against Sony or the Wannacry virus. These aren't the careful planning of a small state actor trying to accomplish specific goals. These are the actions of an actor that supports hacker groups, and lets them loose without a lot of oversight and direction. Wannacry in particular is an example of an undirected cyber attack. We know from our experience with network worms that its effects were impossible to predict. Somebody just stuck the newly discovered NSA EternalBlue payload into an existing virus framework and let it run to see what happens. As we worm experts know, nobody could have predicted the results of doing so, not even its creators. Another example is the DNC election hacks. The reason we can attribute them to Russia is because it wasn't their narrow goal. Instead, by looking at things like their URL shortener, we can see that they flailed around broadly all over cyberspace. The DNC was just one of thei | Hack Guideline | Wannacry | ||
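The birthday arithmetic in the entry above is easy to check, and the "any two match" versus "match this specific one" distinction can be shown with a deliberately tiny fingerprint. The sketch below is illustrative only: the 16-bit fingerprint is the first two bytes of SHA-256 (an assumption made so the searches finish instantly; it is not MD5 and not an attack on any real algorithm), and the function names and document strings are hypothetical.

```python
import hashlib
from itertools import count


def birthday_collision_probability(people: int, days: int = 365) -> float:
    """Chance that at least two of `people` share a birthday."""
    p_all_distinct = 1.0
    for i in range(people):
        p_all_distinct *= (days - i) / days
    return 1.0 - p_all_distinct


def tiny_fingerprint(data: bytes) -> bytes:
    """A toy 16-bit fingerprint: the first two bytes of SHA-256."""
    return hashlib.sha256(data).digest()[:2]


def find_any_collision():
    """Birthday-style search: any two documents with the same fingerprint."""
    seen = {}
    for n in count():
        doc = b"doc-%d" % n
        fp = tiny_fingerprint(doc)
        if fp in seen:
            return seen[fp], doc, n + 1   # the pair and how many docs were tried
        seen[fp] = doc


def find_match_for(target: bytes):
    """Harder search: a document matching one specific fingerprint."""
    wanted = tiny_fingerprint(target)
    for n in count():
        doc = b"forgery-%d" % n
        if tiny_fingerprint(doc) == wanted:
            return doc, n + 1             # the match and how many docs were tried


if __name__ == "__main__":
    print(birthday_collision_probability(23))   # about 0.507
    print(birthday_collision_probability(75))   # above 0.999
    print(find_any_collision()[2])              # tries needed for any pair
    print(find_match_for(b"original")[1])       # tries needed for a specific match
```

With 65,536 possible fingerprints, the first search typically needs a few hundred documents while the second typically needs tens of thousands. That gap is the same one the entry describes between accomplishing any goal and accomplishing one specific goal.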

| 2018-10-04 16:36:51 | Notes on the Bloomberg Supermicro supply chain hack story (direct link) | Bloomberg has a story about how Chinese intelligence inserted secret chips into servers bound for America. There are a couple issues with the story I wanted to address. The story is based on anonymous sources, and not even good anonymous sources. An example is this attribution: "a person briefed on evidence gathered during the probe says". That means somebody not even involved, but somebody who heard a rumor. It also doesn't mean the person even had sufficient expertise to understand what they were being briefed about. The technical detail that's missing from the story is that the supply chain is already messed up with fake chips rather than malicious chips. Reputable vendors spend a lot of time ensuring quality, reliability, tolerances, ability to withstand harsh environments, and so on. Even the simplest of chips can command a price premium when they are well made. What happens is that other companies make clones that are cheaper and lower quality. They are just good enough to pass testing, but fail in the real world. They may not even be completely fake chips. They may be bad chips the original manufacturer discarded, or chips the night shift at the factory secretly ran through on the equipment -- but with less quality control. The supply chain description in the Bloomberg story is accurate, except that it fails to discuss how these cheap, bad chips frequently replace the more expensive chips, with contract manufacturers or managers skimming off the profits. Replacement chips are real, but whether they are for malicious hacking or just theft is the sticking point. For example, consider this listing for a USB-to-serial converter using the well-known FTDI chip. The word "genuine" is in the title, because fake FTDI chips are common within the supply chain. 
As you can see from the $11 price, the amount of money you can make with fake chips is low -- these contract manufacturers hope to make it up in volume. The story implies that Apple is lying in its denials of malicious hacking, and deliberately avoids this other supply chain issue. It's perfectly reasonable for Apple to have rejected Supermicro servers because of bad chips that have nothing to do with hacking. If there's hacking going on, it may not even be Chinese intelligence -- the manufacturing process is so lax that any intelligence agency could be responsible. Just because most manufacturing of server motherboards happens in China doesn't point the finger to Chinese intelligence as being the ones responsible. Finally, I want to point out the sensationalism of the story. It spends much effort focusing on the invisible nature of small chips, as evidence that somebody is trying to hide something. That the chips are so small means nothing: except for the major chips, all the chips on a motherboard are small. It's hard to have large chips, except for the big things like the CPU and DRAM. Serial ROMs containing firmware are never going to be big, because they just don't hold that much information. A fake serial ROM is the focus here not so much because that's the chip they found by accident, but because that's the chip they'd look for. The chips contain the firmware for other hardware devices on the motherboard. Thus, instead of designing complex hardware to do malicious things, a hacker simply has to make simple changes t |

Hack | |||

| 2018-09-10 17:33:17 | California's bad IoT law (direct link) | California has passed an IoT security bill, awaiting the governor's signature/veto. It's a typically bad bill based on a superficial understanding of cybersecurity/hacking that will do little to improve security, while doing a lot to impose costs and harm innovation. It's based on the misconception of adding security features. It's like dieting, where people insist you should eat more kale, which does little to address the problem that you are pigging out on potato chips. The key to dieting is not eating more but eating less. The same is true of cybersecurity, where the point is not to add “security features” but to remove “insecure features”. For IoT devices, that means removing listening ports and cross-site/injection issues in web management. Adding features is typical “magic pill” or “silver bullet” thinking that we spend much of our time in infosec fighting against. We don't want arbitrary features like firewall and anti-virus added to these products. It'll just increase the attack surface, making things worse. The one possible exception to this is “patchability”: some IoT devices can't be patched, and that is a problem. But even here, it's complicated. Even if IoT devices are patchable in theory, there is no guarantee vendors will supply such patches, or worse, that users will apply them. Users overwhelmingly forget about devices once they are installed. These devices aren't like phones/laptops, which notify users about patching. You might think a good solution to this is automated patching, but only if you ignore history. Many rate “NotPetya” as the worst, most costly cyberattack ever. That was launched by subverting an automated patch. Most IoT devices exist behind firewalls, and are thus very difficult to hack. Automated patching gets beyond firewalls; it makes it much more likely mass infections will result from hackers targeting the vendor. The Mirai worm infected fewer than 200,000 devices. 
A hack of a tiny IoT vendor can gain control of more devices than that in one fell swoop. The bill does target one insecure feature that should be removed: hardcoded passwords. But they get the language wrong. A device doesn't have a single password, but many things that may or may not be called passwords. A typical IoT device has one system for creating accounts on the web management interface, a wholly separate authentication system for services like Telnet (based on /etc/passwd), and yet another wholly separate system for things like debugging interfaces. Just because a device does the prescribed thing of using a unique or user-generated password in the user interface doesn't mean it doesn't also have a bug in Telnet. That was the problem with devices infected by Mirai. The description that these were hardcoded passwords is only a superficial understanding of the problem. The real problem was that there were different authentication systems in the web interface and in other services like Telnet. Most of the devices vulnerable to Mirai did the right thing on the web interfaces (meeting the language of this law), requiring the user to create new passwords before operating. They just did the wrong thing elsewhere. People aren't really paying attention to what happened with Mirai. They look at the 20 billion new IoT devices that are going to be connected to the Internet by 2020 and believe Mirai is just the tip of the iceberg. But it isn't. The IPv4 Internet has only 4 billion addresses, which are pretty much already used up. This means those 20 billion won't be exposed to the public Internet like Mirai devices, but hidden behind firewalls that translate addresses. Thus, rather than Mirai presaging the future, it represents the last gasp of the past that is unlikely to come again. This law is backwards looking rather than forward looking. Forward looking, by far the most important t | Hack Threat Patching Guideline | NotPetya Tesla | ||

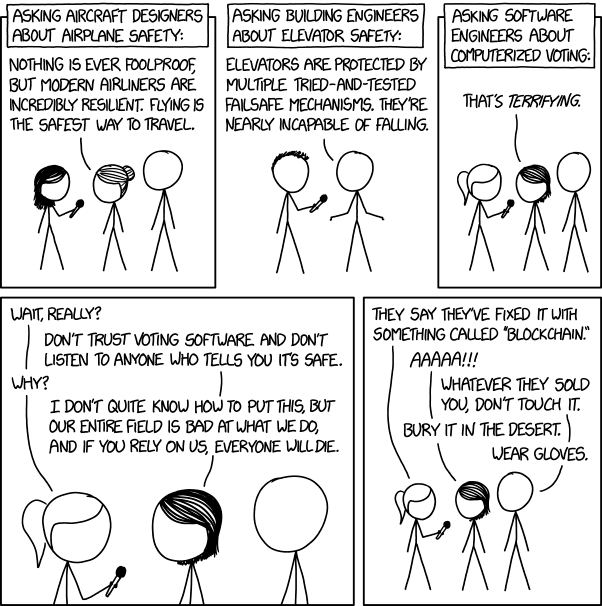

| 2018-08-08 20:09:17 | That XKCD on voting machine software is wrong (direct link) | The latest XKCD comic on voting machine software is wrong, profoundly so. It's the sort of thing that appeals to our prejudices, but mistakes the details. Accidents vs. attack: The biggest flaw is that the comic confuses accidents vs. intentional attack. Airplanes and elevators are designed to avoid accidental failures. If that's the measure, then voting machine software is fine and perfectly trustworthy. Such machines are no more likely to accidentally record a wrong vote than the paper voting systems they replaced -- indeed less likely. The reason we have electronic voting machines in the first place was due to the "hanging chad" problem in the Bush v. Gore election of the year 2000. After that election, a wave of new, software-based voting machines replaced the older inaccurate paper machines. The question is whether software voting machines can be attacked. Well, if that's the measure, then airplanes aren't safe at all. Security against human attack consists of the entire infrastructure outside the plane, from TSA forcing us to take off our shoes, to trade restrictions to prevent the proliferation of Stinger missiles. Confusing the two, accidents vs. attack, is used here because it makes the reader feel superior. We get to mock and feel superior to those stupid software engineers for not living up to what's essentially a fictional standard of reliability. To repeat: software-based machines are better than the mechanical machines they replaced, which is why there are so many software-based machines in the United States. The issue isn't normal accuracy, but their robustness against a different standard, against attack -- a standard which airplanes and elevators suck at. The problems are as much hardware as software: Last year at the DEF CON hacking conference they had an "Election Hacking Village" where they hacked a number of electronic voting machines. 
Most of those "hacks" were against the hardware, such as soldering on a JTAG device or accessing USB ports. Other errors have been voting machines being sold on eBay whose data wasn't wiped, allowing voter records to be recovered. What we want to see is hardware designed more like an iPhone, where the FBI can't decrypt a phone even when they really really want to. This requires special chips, such as secure enclaves, signed boot loaders, and so on. Only once we get the hardware right can we complain about the software being deficient. To be fair, software problems were also found at DEF CON, like an exploit over WiFi. Though, for a lot of problems, it is questionable whether the fault lies in the software design or the hardware design; they are fixable in either one. The situation is better described as the entire design being flawed, from the "requirements", to the high-level system "architecture", and lastly to the actual "software" code. It's lack of accountability/fail-safes: We imagine the threat is that votes can be changed in the voting machine, but it's more profound than that. The problem is that votes can be changed invisibly. The first change experts want to see is adding a paper trail, rather than fixing bugs. Consider "recounts". With many of today's electronic voting machines, this is meaningless, with nothing to recount. The machine produces a number, and we have nothing else to test against whether that number is correct or fa |

Hack Threat | |||