What's new around the internet

| Src | Date (GMT) | Title | Description | Tags | Stories | Notes |

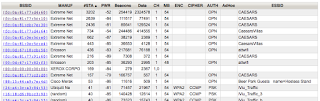

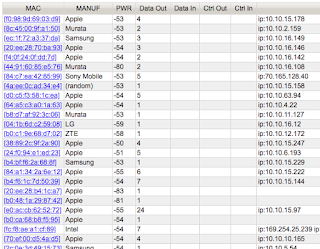

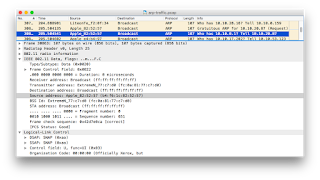

| 2019-08-04 18:52:45 | Securing devices for DEFCON (direct link) | There's been much debate whether you should get burner devices for hacking conventions like DEF CON (phones or laptops). A better discussion would be to list those things you should do to secure yourself before going, just in case. These are the things I worry about: backup before you go; update before you go; correctly locking your devices with full disk encryption; correctly configuring WiFi; Bluetooth devices; mobile phone vs. Stingrays; USB. Backup: Traveling means a higher chance of losing your device. In my review of crime statistics, theft seems less of a threat than in whatever city you are coming from. My guess is that while thieves may want to target tourists, the police want even more to target gangs of thieves, to protect the cash cow that is the tourist industry. But you are still more likely to accidentally leave a phone in a taxi or have your laptop crushed in the overhead bin. If you haven't recently backed up your device, now would be an extra useful time to do this. Anything I want backed up on my laptop is already in Microsoft's OneDrive, so I don't pay attention to this. However, I have a lot of pictures on my iPhone that I don't have in iCloud, so I copy those off before I go. Update: Like most of you, I put off updates unless they are really important, updating every few months rather than every month. Now is a great time to make sure you have the latest updates. Backup before you update, but then, I already mentioned that above. Full disk encryption: This is enabled by default on phones, but not the default for laptops. It means that if you lose your device, adversaries can't read any data from it. You are at risk if you have a simple unlock code, like a predictable pattern or a 4-digit code. The longer and less predictable your unlock code, the more secure you are. I use iPhone's "face id" on my phone so that people looking over my shoulder can't figure out my passcode when I need to unlock the phone. However, because this enables the police to easily unlock my phone, by putting it in front of my face, I also remember how to quickly disable face id (by holding the buttons on both sides for 2 seconds). As for laptops, it's usually easy to enable full disk encryption. However, there are some gotchas. Microsoft requires a TPM for its BitLocker full disk encryption, which your laptop might not support. I don't know why all laptops don't just have TPMs, but they don't. You may be able to use some tricks to get around this. There are also third party full disk encryption products that use simple passwords. If you don't have a TPM, then hackers can brute-force crack your password, trying billions per second. This applies to my MacBook Air, which is the 2017 model before Apple started adding their "T2" chip to all their laptops. Therefore, I need a strong login password. I deal with this on my MacBook by having two accounts. When I power on the device, I log into an account using a long/complicated password. I then switch to an account with a simpler password for going in/out of sleep mode. This second account can't be used to decrypt the drive. On Linux, my password to decrypt the drive is similarly long, while the user account password is pretty short. I ignore the "evil maid" threat, because my devices are always with me rather than in | Hack Threat Guideline | |||
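The point above about unlock-code length and offline brute-force cracking can be made concrete with some rough arithmetic. This is only a sketch: the guess rate of one billion per second is taken from the post's "billions per second" figure, the 95-character alphabet (printable ASCII) is an assumption, and the function names are hypothetical. It applies to offline guessing without a TPM, not to a phone that rate-limits attempts.

```python
# Rough brute-force arithmetic for offline password guessing.
# ASSUMPTION: one billion guesses per second, per the post's "billions per second".
GUESSES_PER_SECOND = 1_000_000_000
SECONDS_PER_YEAR = 365 * 24 * 3600


def keyspace(alphabet_size: int, length: int) -> int:
    """Number of distinct codes of exactly `length` symbols."""
    return alphabet_size ** length


def seconds_to_exhaust(alphabet_size: int, length: int,
                       rate: int = GUESSES_PER_SECOND) -> float:
    """Worst-case time to try every code at the assumed guess rate."""
    return keyspace(alphabet_size, length) / rate


# A 4-digit code: 10 symbols, 4 positions.
pin_seconds = seconds_to_exhaust(10, 4)

# A 12-character password over printable ASCII (95 symbols, an assumption).
long_years = seconds_to_exhaust(95, 12) / SECONDS_PER_YEAR

print(f"4-digit code: {pin_seconds:.6f} seconds")
print(f"12-char password: {long_years:.2e} years")
```

Under these assumptions the short code falls instantly while the long password is out of reach, which is why the disk-decryption password is the one worth making long.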

| 2019-07-28 15:21:32 | Why we fight for crypto (direct link) | This last week, the Attorney General William Barr called for crypto backdoors. His speech is a fair summary of law-enforcement's side of the argument. In this post, I'm going to address many of his arguments. The tl;dr version of this blog post is this: Their claims of mounting crime are unsubstantiated, based on emotional anecdotes rather than statistics. We live in a Golden Age of Surveillance where, if any balancing is to be done in the privacy vs. security tradeoff, it should be in favor of more privacy. But we aren't talking about a tradeoff with privacy, but other rights. In particular, it's every bit as important to protect the rights of political dissidents to keep some communications private (encryption) as it is to allow them to make other communications public (free speech). In addition, there is no solution to their "going dark" problem that doesn't restrict the freedom to run arbitrary software of the user's choice on their computers/phones. Thirdly, there is the problem of technical feasibility. We don't know how to make backdoors available for law enforcement access that don't enormously reduce security for users. Balance: The crux of his argument is balancing civil rights vs. safety, also described as privacy vs. security. This balance is expressed in the constitution by the Fourth Amendment. The 4th doesn't express an absolute right to privacy, but allows for police to invade your privacy if they can show an independent judge that they have "probable cause". By making communications "warrant proof", encryption is creating a "law free zone" enabling crime to be conducted without the ability of the police to investigate. It's a reasonable argument. If your child gets kidnapped by sex traffickers, you'll be demanding the police do something, anything to get your child back safe. If a phone is found at the scene, you'll definitely want them to have the ability to decrypt the phone, as long as a judge gives them a search warrant to balance civil liberty concerns. However, this argument is wrong, as I'll discuss below. Law free zones: Barr claims encryption creates a new "law free zone ... giving criminals the means to operate free of lawful scrutiny". He pretends that such zones never existed before. Of course they've existed before. Attorney-client privilege is one example, which is definitely abused to further crime. Barr's own boss has committed obstruction of justice, hiding behind the law-free zone of Article II of the constitution. We are surrounded by legal loopholes that criminals exploit in order to commit crimes, where the cost of closing the loophole is greater than the benefit. The biggest "law free zone" that exists is just the fact that we don't live in a universal surveillance state. I think impure thoughts without the police being able to read my mind. I can whisper quietly in your ear at a bar without the government overhearing. I can invite you over to my house to plot nefarious deeds in my living room. Technology didn't create these zones. However, technological advances are allowing police to defeat them. Businesses have security cameras everywhere. Neighborhood associations are installing license plate readers. We are putting Echo/OkGoogle/Cortana/Siri devices in our homes listening to us. Our phones and computers have microphones and cameras. Our TVs increasingly have cameras and mics, too, in case we want to use them for video conferencing, or give them voice commands. Every argument Barr makes about crypto backdoors applies to backdoor access to microphones, every argument applies to forcing TVs to have a backdoor allowing police armed with a warrant to turn on the camera in your living room. These | Guideline | |||

| 2019-06-14 19:45:51 | Censorship vs. the memes (direct link) | The most annoying thing in any conversation is when people drop a meme bomb, some simple concept they've heard elsewhere in a nice package that they really haven't thought through, which takes time and nuance to rebut. These memes are often bankrupt of any meaning. When discussing censorship, which is wildly popular these days, people keep repeating these same memes to justify it: you can't yell fire in a crowded movie theater; but this speech is harmful; Karl Popper's Paradox of Tolerance; censorship/free-speech don't apply to private organizations; Twitter blocks and free speech. This post takes some time to discuss these memes, so I can refer back to it later, instead of repeating the argument every time some new person repeats the same old meme. You can't yell fire in a crowded movie theater: This phrase was first used in the Supreme Court decision Schenck v. United States to justify outlawing protests against the draft. Unless you also believe the government can jail you for protesting the draft, then the phrase is bankrupt of all meaning. In other words, how can it be used to justify the thing you are trying to censor and yet be an invalid justification for censoring those things (like draft protests) you don't want censored? What this phrase actually means is that because it's okay to suppress one type of speech, it justifies censoring any speech you want. Which means all censorship is valid. If that's what you believe, just come out and say "all censorship is valid". But this speech is harmful or invalid: That's what everyone says. In the history of censorship, nobody has ever wanted to censor good speech, only speech they claimed was objectively bad, invalid, unreasonable, malicious, or otherwise harmful. It's just that everybody has different definitions of what actually is bad, harmful, or invalid. It's like the movie theater quote. For example, China's constitution proclaims freedom of speech, yet the government blocks all mention of the Tiananmen Square massacre because it's harmful. Its "Great Firewall of China" is famous for blocking most of the content of the Internet that the government claims harms its citizens. I put some photos of the Tiananmen anniversary mass vigil in #Hongkong last night onto Wechat and my account has been suspended for “spreading malicious rumours”. The #China of today... pic.twitter.com/F6e2exsgGE - Stephen McDonell (@StephenMcDonell) June 5, 2019. At least in the case of movie theaters, the harm of shouting "fire" is immediate and direct. In all these other cases, the harm is many steps removed. Many want to censor anti-vaxxers, because their speech kills children. But the speech doesn't, the virus does. By extension, those not getting vaccinations may harm peopl | ||||

| 2019-05-31 20:15:34 | Some Raspberry Pi compatible computers (direct link) | I noticed this spreadsheet over at the r/raspberry_pi subreddit. I thought I'd write up some additional notes. https://docs.google.com/spreadsheets/d/1jWMaK-26EEAKMhmp6SLhjScWW2WKH4eKD-93hjpmm_s/edit#gid=0 Consider the Upboard, an x86 computer in the Raspberry Pi form factor for $99. When you include storage, power supplies, heatsinks, cases, and so on, it's actually pretty competitive. It's not ARM, so many things built for the Raspberry Pi won't necessarily work. But on the other hand, most of the software built for the Raspberry Pi was originally developed for x86 anyway, so sometimes it'll work better. Consider the quasi-RPi boards that support the same GPIO headers, but in a form factor that's not the same as a RPi. A good example would be the ODroid-N2. These aren't listed in the above spreadsheet, but there's a ton of them. There are only two Nano Pis listed in the spreadsheet having the same form factor as the RPi, but there are around 20 different actual boards with all sorts of different form factors and capabilities. Consider the heatsink, which can make a big difference in the performance and stability of the board. You can put a small heatsink on any board, but you really need larger heatsinks and possibly fans. Some boards, like the ODroid-C2, come with a nice large heatsink. Other boards have a custom designed large heatsink you can purchase along with the board for around $10. The Raspberry Pi, of course, has numerous third party heatsinks available. Whether or not there's a nice large heatsink available is an important buying criterion. That spreadsheet should have a column for "Large Heatsink", whether one is "Integrated" or "Available". Consider power consumption and heat dissipation as buying criteria. Uniquely among the competing devices, the Raspberry Pi itself uses a CPU fabbed on a 40nm process, whereas most of the competitors use 28nm or even 14nm. That means it consumes more power and produces more heat than any of its competitors, by a large margin. The Intel Atom CPU mentioned above is actually one of the most power efficient, being fabbed on a 14nm process. Ideally, that spreadsheet would have two additional columns for power consumption (and hence heat production) at "Idle" and "Load". You shouldn't really care about CPU speed. But if you do, there are basically two classes of speed: in-order and out-of-order. For the same GHz, out-of-order CPUs are roughly twice as fast as in-order. The Cortex A5, A7, and A53 are in-order. The Cortex A17, A72, and A73 (and Intel Atom) are out-of-order. The spreadsheet also lists some NXP i.MX series processors, but those are actually ARM Cortex designs. I don't know which, though. The spreadsheet lists memory, like LPDDR3 or DDR4, but it's unclear as to speed. There are two things that determine speed: the number of MHz/GHz and the width, typically either 32-bits or 64-bits. By "64-bits" we can mean a single channel that's 64-bits wide, as in the case of the Intel Atom processors, or two channels that are each 32-bits wide, as in the case of some ARM processors. The Raspberry Pi has an incredibly anemic 32-bit 400-MHz memory, whereas some competitors have 64-bit 1600-MHz memory, or roughly 8 times the speed. For CPU-bound tasks, this isn't so important, but a lot of tasks are in fact bound by memory speed. As for GPUs, most are not OpenCL programmable, but some are. The VideoCore and Mali 4xx (Utgard) GPUs are not programmable. The Mali Txxx (Midgard) are programmable. The "MP2" suffix means two GPU processors, whereas "MP4" means four GPU processors. For a lot of tasks, such as "SDR" (software defined radio), offloading onto GPU simultaneously reduce | ||||
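The "roughly 8 times the speed" memory comparison above follows from multiplying bus width by clock rate. Here is a minimal sketch of that arithmetic; the function name is hypothetical, and it assumes one transfer per quoted MHz, which ignores double-data-rate effects and real-world efficiency.

```python
def peak_bandwidth_mb_per_s(bus_width_bits: int, mega_transfers_per_s: int) -> float:
    """Theoretical peak bandwidth in MB/s: bytes per transfer times transfers per second.

    ASSUMPTION: one transfer per quoted MHz; real memory controllers differ.
    """
    return (bus_width_bits / 8) * mega_transfers_per_s


# Figures quoted in the post.
raspberry_pi = peak_bandwidth_mb_per_s(32, 400)    # 32-bit, 400 MHz
competitor = peak_bandwidth_mb_per_s(64, 1600)     # 64-bit, 1600 MHz

ratio = competitor / raspberry_pi
print(f"Raspberry Pi: {raspberry_pi:.0f} MB/s, competitor: {competitor:.0f} MB/s")
print(f"ratio: {ratio:.0f}x")
```

Doubling the width and quadrupling the clock multiply together, which is where the factor of 8 comes from.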

| 2019-05-29 20:16:09 | Your threat model is wrong (direct link) | Several subjects have come up in the past week that all come down to the same thing: your threat model is wrong. Instead of addressing the threat that exists, you've morphed the threat into something else that you'd rather deal with, or which is easier to understand. Phishing: An example is this question that misunderstands the threat of "phishing": Should failing multiple phishing tests be grounds for firing? I ran into a guy at a recent conference, said his employer fired people for repeatedly falling for (simulated) phishing attacks. I talked to experts, who weren't wild about this disincentive. https://t.co/eRYPZ9qkzB pic.twitter.com/Q1aqCmkrWL - briankrebs (@briankrebs) May 29, 2019. The (wrong) threat model here is that phishing is an email that smart users with training can identify and avoid. This isn't true. Good phishing messages are indistinguishable from legitimate messages. Said another way, a lot of legitimate messages are in fact phishing messages, such as when HR sends out a message saying "log into this website with your organization username/password". Recently, my university sent me an email for mandatory Title IX training, not digitally signed, with an external link to the training, that requested my university login creds for access, that was sent from an external address but from the Title IX coordinator. - Tyler Pieron (@tyler_pieron) May 29, 2019. Yes, it's amazing how easily stupid employees are tricked by the most obvious of phishing messages, and you want to point and laugh at them. But frankly, you want the idiot employees doing this. The more obvious phishing attempts are the least harmful and a good test of the rest of your security -- which should be based on the assumption that users will frequently fall for phishing. In other words, if you paid attention to the threat model, you'd be mitigating the threat in other ways and not even bother training employees. You'd be firing HR idiots for phishing employees, not punishing employees for getting tricked. Your systems would be resilient against successful phishes, such as using two-factor authentication. IoT security: After the Mirai worm, government types pushed for laws to secure IoT devices, as billions of insecure devices like TVs, cars, security cameras, and toasters are added to the Internet. Everyone is afraid of the next Mirai-type worm. For example, they are pushing for devices to be auto-updated. But auto-updates are a bigger threat than worms. Since Mirai, roughly 10-billion new IoT devices have been added to the Internet, yet there hasn't been a Mirai-sized worm. Why is that? After 10-billion new IoT devices, it's still Windows and not IoT that is the main problem. The answer is that number, 10-billion. Internet worms work by guessing IPv4 addresses, of which there are only 4-billion. You can't have 10-billion new devices on the public IPv4 addresses because there simply aren't enough addresses. Instead, those 10-billion devices are almost entirely being put on private ne | Ransomware Tool Vulnerability Threat Guideline | FedEx NotPetya | ||
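The address-space argument above can be checked with a few lines of arithmetic. This is a sketch using Python's standard `ipaddress` module; treating the truncated sentence as referring to the RFC 1918 private ranges is an assumption on my part, and the helper name `is_rfc1918` is hypothetical.

```python
import ipaddress

# IPv4 addresses are 32 bits, so the whole space is 2**32 (about 4.3 billion).
TOTAL_IPV4 = 2 ** 32
NEW_IOT_DEVICES = 10_000_000_000  # the post's "10-billion" figure

# ASSUMPTION: "private" means the RFC 1918 ranges, which are not routable
# from the public Internet and so cannot be reached by a worm guessing addresses.
RFC1918_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_rfc1918(address: str) -> bool:
    """True if the address falls inside one of the RFC 1918 private ranges."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in RFC1918_NETWORKS)


print(f"IPv4 space: {TOTAL_IPV4:,} addresses")
print(f"devices exceed address space: {NEW_IOT_DEVICES > TOTAL_IPV4}")
print(f"192.168.1.10 private: {is_rfc1918('192.168.1.10')}")
print(f"8.8.8.8 private: {is_rfc1918('8.8.8.8')}")
```

A device behind a home router typically has an address like the first example, so a worm scanning public addresses never sees it directly.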

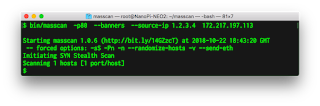

| 2019-05-28 06:20:06 | Almost One Million Vulnerable to BlueKeep Vuln (CVE-2019-0708) (direct link) | Microsoft announced a vulnerability in its "Remote Desktop" product that can lead to robust, wormable exploits. I scanned the Internet to assess the danger. I find nearly 1-million devices on the public Internet that are vulnerable to the bug. That means when the worm hits, it'll likely compromise those million devices. This will likely lead to an event as damaging as WannaCry and notPetya from 2017 -- potentially worse, as hackers have since honed their skills exploiting these things for ransomware and other nastiness. To scan the Internet, I started with masscan, my Internet-scale port scanner, looking for port 3389, the one used by Remote Desktop. This takes a couple hours, and lists all the devices running Remote Desktop -- in theory. This returned 7,629,102 results (over 7-million). However, there is a lot of junk out there that'll respond on this port. Only about half are actually Remote Desktop. Masscan only finds the open ports, but is not complex enough to check for the vulnerability. Remote Desktop is a complicated protocol. A project was posted that could connect to an address and test it, to see if it was patched or vulnerable. I took that project, optimized it a bit as rdpscan, then used it to scan the results from masscan. It's a thousand times slower, but it's only scanning the results from masscan instead of the entire Internet. The table of results is as follows: 1447579 UNKNOWN - receive timeout; 1414793 SAFE - Target appears patched; 1294719 UNKNOWN - connection reset by peer; 1235448 SAFE - CredSSP/NLA required; 923671 VULNERABLE -- got appid; 651545 UNKNOWN - FIN received; 438480 UNKNOWN - connect timeout; 105721 UNKNOWN - connect failed 9; 82836 SAFE - not RDP but HTTP; 24833 UNKNOWN - connection reset on connect; 3098 UNKNOWN - network error; 2576 UNKNOWN - connection terminated. The various UNKNOWN things fail for various reasons. A lot of them are because the protocol isn't actually Remote Desktop and the device responds weirdly when we try to talk Remote Desktop. A lot of others are Windows machines, sometimes vulnerable and sometimes not, but for some reason return errors sometimes. The important results are those marked VULNERABLE. There are 923,671 vulnerable machines in this result. That means we've confirmed the vulnerability really does exist, though it's possible a small number of these are "honeypots" deliberately pretending to be vulnerable in order to monitor hacker activity on the Internet. The next results are those marked SAFE due to probably being "patched". Actually, it doesn't necessarily mean they are patched Windows boxes. They could instead be non-Windows systems that appear the same as patched Windows boxes. But either way, they are safe from this vulnerability. There are 1,414,793 of them. The next results to look at are those marked SAFE due to CredSSP/NLA failures, of which there are 1,235,448. This doesn't mean they are patched, but only that we can't exploit them. They require "network level authentication" first before we can talk Remote Desktop to them. That means we can't test whether they are patched or vulnerable -- but neither can the hackers. They may still be exploitable via an insider threat who knows a valid username/password, but they aren't exploitable by anonymous hackers or worms. The next category is marked as SAFE because they aren't Remote Desktop at all, but HTTP servers. In other words, in response to o | Ransomware Vulnerability Threat Patching Guideline | NotPetya Wannacry | ||
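The result table above is easier to reason about once it is grouped by verdict. The sketch below tallies the counts exactly as quoted in the post; the parsing approach and the `tally` helper are illustrative assumptions, not part of rdpscan or masscan.

```python
import re
from collections import Counter

# Counts copied from the post's table of rdpscan results.
RESULTS = """\
1447579 UNKNOWN - receive timeout
1414793 SAFE - Target appears patched
1294719 UNKNOWN - connection reset by peer
1235448 SAFE - CredSSP/NLA required
923671 VULNERABLE -- got appid
651545 UNKNOWN - FIN received
438480 UNKNOWN - connect timeout
105721 UNKNOWN - connect failed 9
82836 SAFE - not RDP but HTTP
24833 UNKNOWN - connection reset on connect
3098 UNKNOWN - network error
2576 UNKNOWN - connection terminated
"""

LINE_PATTERN = re.compile(r"\s*(\d+)\s+(UNKNOWN|SAFE|VULNERABLE)\b")


def tally(text: str) -> Counter:
    """Sum the per-reason counts into one total per verdict."""
    totals = Counter()
    for line in text.splitlines():
        match = LINE_PATTERN.match(line)
        if match:
            totals[match.group(2)] += int(match.group(1))
    return totals


totals = tally(RESULTS)
grand_total = sum(totals.values())
for verdict, count in totals.most_common():
    print(f"{verdict:<10} {count:>9,}  ({count / grand_total:.1%})")
```

The listed rows sum to slightly less than the 7,629,102 masscan hits quoted in the post, so the percentages are relative to the rows shown rather than to the full scan.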

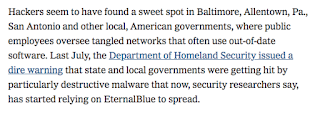

| 2019-05-27 19:59:38 | A lesson in journalism vs. cybersecurity (direct link) | A recent NYTimes article blaming the NSA for a ransomware attack on Baltimore is typical bad journalism. It's an op-ed masquerading as a news article. It cites many to support the conclusion the NSA is to be blamed, but only a single quote, from the NSA director, from the opposing side. Yet many experts oppose this conclusion, such as @dave_maynor, @beauwoods, @daveaitel, @riskybusiness, @shpantzer, @todb, @hrbrmst, ... It's not as if these people are hard to find, it's that the story's authors didn't look. The main reason experts disagree is that the NSA's EternalBlue isn't actually responsible for most ransomware infections. It's almost never used to start the initial infection -- that's almost always phishing or website vulns. Once inside, it's almost never used to spread laterally -- that's almost always done with Windows networking and stolen credentials. Yes, ransomware increasingly includes EternalBlue as part of their arsenal of attacks, but this doesn't mean EternalBlue is responsible for ransomware. The NYTimes story takes extraordinary effort to jump around this fact, deliberately misleading the reader to conflate one with the other. A good example is this paragraph: That link is a warning from last July about the "Emotet" ransomware and makes no mention of EternalBlue. Instead, the story is citing anonymous researchers claiming that EternalBlue has been added to Emotet since that DHS warning. Who are these anonymous researchers? The NYTimes article doesn't say. This is bad journalism. The principles of journalism are that you are supposed to attribute where you got such information, so that the reader can verify for themselves whether the information is true or false, or at least, credible. And in this case, it's probably false. The likely source for that claim is this article from Malwarebytes about Emotet. They have since retracted this claim, as the latest version of their article points out. In any event, the NYTimes article claims that Emotet is now "relying" on the NSA's EternalBlue to spread. That's not the same thing as "using", not even close. Yes, lots of ransomware has been updated to also use EternalBlue to spread. However, what ransomware is relying upon is still the Wind | Ransomware Malware Patching Guideline | NotPetya Wannacry | ||

| 2019-04-23 00:18:02 | Programming languages infosec professionals should learn (direct link) | Code is an essential skill of the infosec professional, but there are so many languages to choose from. What language should you learn? As a heavy coder, I thought I'd answer that question, or at least give some perspective. The tl;dr is JavaScript. Whatever other language you learn, you'll also need to learn JavaScript. It's the language of browsers, Word macros, JSON, NodeJS server side, scripting on the command-line, and Electron apps. You'll also need a bit of bash and/or PowerShell scripting skills, SQL for database queries, and regex for extracting data from text files. Other languages are important as well; Python is very popular, for example. Actively avoid C++ and PHP as they are obsolete. Also tl;dr: whatever language you decide to learn, also learn how to use an IDE with visual debugging, rather than just a text editor. That probably means Visual Studio Code from Microsoft. Also, whatever language you learn, stash your code at GitHub. Let's talk in general terms. Here are some types of languages. Unavoidable. As mentioned above, familiarity with JavaScript, bash/PowerShell, and SQL is unavoidable. If you are avoiding them, you are doing something wrong. Small scripts. You need to learn at least one language for writing quick-and-dirty command-line scripts to automate tasks or process data. As a tool-using animal, this is your basic tool. You are a monkey, this is the stick you use to knock down the banana. Good choices are JavaScript, Python, and Ruby. Some domain-specific languages can also work, like PHP and Lua. Those skilled in bash/PowerShell can do a surprising amount of "programming" tasks in those languages. Old timers use things like PERL or TCL. Sometimes the choice of which language to learn depends upon the vast libraries that come with the languages, especially Python and JavaScript libraries. Development languages. Those scripting languages have grown up into real programming languages, but for the most part, "software development" means languages designed for that task like C, C++, Java, C#, Rust, Go, or Swift. Domain-specific languages. The language Lua is built into nmap, snort, Wireshark, and many games. Ruby is the language of Metasploit. Further afield, you may end up learning languages like R or Matlab. PHP is incredibly important for web development. Mobile apps may need Java, C#, Kotlin, Swift, or Objective-C. As an experienced developer, here are my comments on the various languages, sorted in alphabetic order. bash (and other Unix shells): You have to learn some bash for dealing with the command-line. But it's also a fairly complete programming language. Peruse the scripts in an average Linux distribution, especially some of the older ones, and you'll find that bash makes up a substantial amount of what we think of as the Linux operating system. Actually, it's called bash/Linux. In the Unix world, there are lots of other related shells that aren't bash, which have slightly different syntax. A good example is BusyBox which has "ash". I mention this because my bash skills are rather poor partly because I originally learned "csh" and get my syntax variants confused. As a hard-core developer, I end up just programming in JavaScript or even C rather than trying to create complex bash scripts. But you shouldn't look down on complex bash scripts, because they can do great things. In particular, if you are a pentester, the shell is often the only language you'll get when hacking into a system, so good bash language skills are a must. C: This is the development language I use the most, simply because I'm an old-time "systems" developer. What "systems programming" means i | Guideline | |||

| 2019-04-21 17:16:36 | Was it a Chinese spy or confused tourist? (direct link) | Politico has an article from a former spy analyzing whether the "spy" they caught at Mar-a-Lago (Trump's Florida vacation spot) was actually a "spy". I thought I'd add to it from a technical perspective about her malware, USB drives, phones, cash, and so on. The part that has gotten the most press is that she had a USB drive with evil malware. We've belittled the Secret Service agents who infected themselves, and we've used this as the most important reason to suspect she was a spy. But it's nonsense. It could be something significant, but we can't know that based on the details that have been reported. What the Secret Service reported was that it "started installing software". That's a symptom of a USB device installing drivers, not malware. Common USB devices, such as WiFi adapters, Bluetooth adapters, microSD readers, and 2FA keys look identical to flash drives, and when inserted into a computer, cause Windows to install drivers. Visual "installing files" is not a symptom of malware. When malware does its job right, there are no symptoms. It installs invisibly in the background. That's the entire point of malware, that you don't know it's there. It's not to say there would be no visible evidence. A popular way of hacking desktops with USB drives is by emulating a keyboard/mouse that quickly types commands, which will cause some visual artifacts on the screen. It's just that "installing files" does not lend itself to malware as being the most likely explanation. That it was "malware" instead of something normal is just the standard trope that anything unexplained is proof of hackers/viruses. We have no evidence it was actually malware, and the evidence we do have suggests something other than malware. Lots of travelers carry wads of cash. I carry ten $100 bills with me, hidden in my luggage, for emergencies. I've been caught before when the credit card company fraud detection triggers in a foreign country leaving me with nothing. It's very distressing, hence cash. The Politico story mentioned the "spy" also has a U.S. bank account, and thus cash wasn't needed. Well, I carry that cash, too, for domestic travel. It's just not for international travel. In any case, the U.S. may have been just one stop on a multi-country itinerary. I've taken several "round the world" trips where I've just flown one direction, such as east, before getting back home. $8k is in the range of cash that such travelers carry. The same is true of phones and SIMs. Different countries have different frequencies and technologies. In the past, I've traveled with as many as three phones (US, Japan, Europe). It's gotten better with modern 4G phones, where my iPhone Xs should work everywhere. (Though it's likely going to diverge again with 5G, as the U.S. goes on a different path from the rest of the world.) The same is true with SIMs. In the past, you pretty much needed a different SIM for each country. Arrival in the airport meant going to the kiosk to get a SIM for $10. At the end of a long itinerary, I'd arrive home with several SIMs. These days, however, with so many "MVNOs", such as Google Fi, this is radically less necessary. However, the fact that the latest high-end phones all support dual-SIMs proves it's still an issue. Thus, the evidence so far is that of a normal traveler. If these SIMs/phones are indeed because of spying, we would need additional evidence. A quick analysis of the accounts associated with the SIMs and of the contents of the phones should tell us if she's a traveler or spy. Normal travelers may be concerned about hidden cameras. There's this story about Korean hotels filming guests, and this other one about | Malware | |||

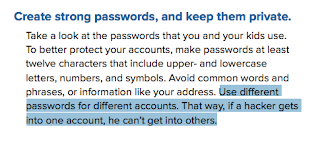

| 2019-04-11 20:22:14 | Assange indicted for breaking a password (direct link) | In today's news, after 9 years holed up in the Ecuadorian embassy, Julian Assange has finally been arrested. The US DoJ accuses Assange of trying to break a password. I thought I'd write up a technical explainer of what this means. According to the US DoJ's press release: Julian P. Assange, 47, the founder of WikiLeaks, was arrested today in the United Kingdom pursuant to the U.S./UK Extradition Treaty, in connection with a federal charge of conspiracy to commit computer intrusion for agreeing to break a password to a classified U.S. government computer. The full indictment is here. It seems the indictment is based on already public information that came out during Manning's trial, namely this log of chats between Assange and Manning, specifically this section where Assange appears to agree to break a password: What this says is that Manning hacked a DoD computer and found the hash "80c11049faebf441d524fb3c4cd5351c" and asked Assange to crack it. Assange appears to agree. So what is a "hash", what can Assange do with it, and how did Manning grab it? Computers store passwords in an encrypted (sic) form called a "one way hash". Since it's "one way", it can never be decrypted. However, each time you log into a computer, it again performs the one way hash on what you typed in, and compares it with the stored version to see if they match. Thus, a computer can verify you've entered the right password, without knowing the password itself, or storing it in a form hackers can easily grab. Hackers can only steal the encrypted form, the hash. When they get the hash, while it can't be decrypted, hackers can keep guessing passwords, performing the one way algorithm on them, and seeing if they match. With an average desktop computer, they can test a billion guesses per second. 
This may seem like a lot, but if you've chosen a sufficiently long and complex password (more than 12 characters with letters, numbers, and punctuation), then hackers can't guess it. It's unclear what format this password is in, whether "LM" or "NTLM". Using my notebook computer, I could attempt to crack the LM format using the hashcat password cracker with the following command: `hashcat -m 3000 -a 3 80c11049faebf441d524fb3c4cd5351c ?a?a?a?a?a?a?a` As this image shows, it'll take about 22 hours on my laptop to crack this. However, this doesn't succeed, so it seems that this isn't in the LM format. Unlike other password formats, the "LM" format hashes passwords in chunks of at most 7 characters, so we can search it completely. |

Hack | |||
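
The verify-by-rehashing and guess-and-compare steps described in the Assange entry can be sketched in a few lines of Python. This is only an illustration: it uses SHA-256 as a stand-in for the Windows hash formats the post discusses, and the password, wordlist, and function names are all made up for the example. The last line reuses the post's figure of a billion guesses per second against a 7-character password drawn from the 95 printable ASCII characters (what hashcat's `?a` covers).

```python
import hashlib


def one_way_hash(password: str) -> str:
    """Stand-in one-way hash (SHA-256); NOT the format used by Windows."""
    return hashlib.sha256(password.encode("utf-8")).hexdigest()


def verify_login(typed: str, stored_hash: str) -> bool:
    """Login check: re-hash what was typed and compare with the stored hash."""
    return one_way_hash(typed) == stored_hash


def guess_password(stored_hash: str, candidates):
    """Attacker's view: hash each guess until one matches the stolen hash."""
    for guess in candidates:
        if one_way_hash(guess) == stored_hash:
            return guess
    return None


def exhaustive_search_hours(alphabet_size: int, length: int, guesses_per_second: float) -> float:
    """Worst-case hours needed to try every password of exactly `length` characters."""
    return alphabet_size ** length / guesses_per_second / 3600


# Hypothetical stolen hash and wordlist, for illustration only.
stolen = one_way_hash("hunter2")
wordlist = ["password", "letmein", "hunter2", "qwerty"]

print(guess_password(stolen, wordlist))
print(verify_login("hunter2", stolen))
print(round(exhaustive_search_hours(95, 7, 1e9), 1), "hours")
```

The point of the arithmetic helper is that the search time grows as alphabet size raised to the password length, which is why a short fixed length can be exhausted while a long password cannot.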

| 2019-03-12 18:43:41 | Some notes on the Raspberry Pi (direct link) | I keep seeing this article in my timeline today about the Raspberry Pi. I thought I'd write up some notes about it. The Raspberry Pi costs $35 for the board, but to achieve a fully functional system, you'll need to add a power supply, storage, and heatsink, which ends up costing around $70 for the full system. At that price range, there are lots of alternatives. For example, you can get a fully functional $99 Windows x86 PC that's just as small and consumes less electrical power. There are a ton of Raspberry Pi competitors, often cheaper with better hardware, such as an Odroid-C2, Rock64, Nano Pi, Orange Pi, and so on. There are also a bunch of "Android TV boxes" running roughly the same hardware for cheaper prices, that you can wipe and reinstall Linux on. You can also acquire Android phones for $40. However, while "better" technically, the alternatives all suffer from the fact that the Raspberry Pi is better supported -- vastly better supported. The ecosystem of ARM products focuses on getting Android to work, and does poorly at getting generic Linux working. The Raspberry Pi has the worst, most out-of-date hardware of any of its competitors, but I'm not sure I can wholly recommend any competitor, as they simply don't have the level of support the Raspberry Pi does. The defining feature of the Raspberry Pi isn't that it's a small/cheap computer, but that it's a computer with a bunch of GPIO pins. When you look at the board, it doesn't just have the recognizable HDMI, Ethernet, and USB connectors, but also has 40 raw pins strung out across the top of the board. There are also a couple of extra connectors for cameras. The concept wasn't simply that of a generic computer, but a maker device, for robot servos, temperature and weather measurements, cameras for a telescope, controlling Christmas light displays, and so on. I think this is underemphasized in the above story. 
The reason it finds use in factories is that they have the same sorts of needs for controlling things that maker kids do. A lot of industrial needs can be satisfied by a teenager buying $50 of hardware off Adafruit and writing a few Python scripts. On the other hand, support for industrial uses is nearly nonexistent. The reason commercial products cost $1000 is because somebody will answer your phone call, unlike the teenager who's currently out at the movies with their friends. However, with more and more people having experience with the Raspberry Pi, presumably you'll be able to hire generic consultants soon that can maintain th |

Hack | |||

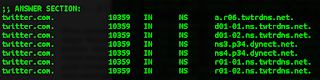

| 2019-03-09 15:39:51 | A quick lesson in confirmation bias (direct link) | In my experience, hacking investigations are driven by ignorance and confirmation bias. We regularly see things we cannot explain. We respond by coming up with a story where our pet theory explains it. Since there is no alternative explanation, this then becomes evidence of our theory, where this otherwise inexplicable thing becomes proof. For example, take that "Trump-AlfaBank" theory. One of the oddities noted by researchers is lookups for "trump-email.com.moscow.alfaintra.net". One of the conspiracy theorists explains this as proof of human error: somebody "fat fingered" the wrong name when typing it in, thus proving humans were involved in trying to communicate between the two entities, as opposed to simple automated systems. But that's because this "expert" doesn't know how DNS works. Your computer is configured to automatically put local suffixes on the end of names, so that you only have to look up "2ndfloorprinter" instead of a full name like "2ndfloorprinter.engineering.example.com". When looking up a DNS name, your computer may try to look up the name both with and without the suffix. Thus, sometimes your computer looks up "www.google.com.engineering.example.com" when it wants simply "www.google.com". Apparently, Alfabank configures its Moscow computers to have a suffix "moscow.alfaintra.net". That means any DNS name that gets resolved will sometimes get this appended, so we'll sometimes see "www.google.com.moscow.alfaintra.net". Since we already know there were lookups from that organization for "trump-email.com", the fact that we also see "trump-email.com.moscow.alfaintra.net" tells us nothing new. In other words, the conspiracy theorists didn't understand it, so came up with their own explanation, and this confirmed their biases. In fact, there is a simpler explanation that neither confirms nor refutes anything. The reason for the DNS lookups for "trump-email.com" is still unexplained. 
Maybe they are because of something nefarious. The Trump organizations had all sorts of questionable relationships with Russian banks, so such a relationship wouldn't be surprising. But here's the thing: just because we can't come up with a simpler explanation doesn't make them proof of a Trump-Alfabank conspiracy. Until we know why those lookups were generated, they are an "unknown" and not "evidence". The reason I write this post is because of this story about a student expelled due to "grade hacking". It sounds like this sort of situation, where the IT department saw anomalies it couldn't explain, so the anomalies became proof of the theory they'd created to explain them. Unexplained phenomena are unexplained. They are not evidence confirming your theory that explains them. |

||||
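
The suffix behavior described in the confirmation-bias entry can be sketched as a small function. This is a simplified model, not how any particular resolver is implemented: real resolvers decide the order (and whether to try the bare name at all) based on settings such as the number of dots in the name. The function name and the ordering are assumptions for illustration; the suffix is the one quoted in the post.

```python
def candidate_lookups(name: str, search_suffixes: list) -> list:
    """Return the names a resolver with a search list might query for `name`.

    Simplified: try the name with each configured suffix appended,
    then the bare name itself.
    """
    name = name.rstrip(".")
    candidates = [f"{name}.{suffix.strip('.')}" for suffix in search_suffixes]
    candidates.append(name)
    return candidates


# The suffix the post says Alfabank's Moscow machines appear to use.
for query in candidate_lookups("trump-email.com", ["moscow.alfaintra.net"]):
    print(query)
```

Under this model the odd-looking "trump-email.com.moscow.alfaintra.net" falls out mechanically from any lookup of "trump-email.com" on such a machine, with no typing involved.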

| 2019-02-25 18:20:47 | A basic question about TCP (direct link) | So on Twitter, somebody asked this question: I have a very basic computer networking question: when sending a TCP packet, is the packet ACK'ed at every node in the route between the sender and the recipient, or just by the final recipient? This isn't just a basic question, it is the basic question, the defining aspect of TCP/IP that makes the Internet different from the telephone network that predated it. Remember that the telephone network was already a cyberspace before the Internet came around. It allowed anybody to create a connection to anybody else. Most circuits/connections were 56 kilobits per second. Using the "T" system, these could be aggregated into faster circuits/connections. The "T1" line, at 1.544 Mbps, was an important standard back in the day. In the phone system, when a connection is established, resources must be allocated in every switch along the path between the source and destination. When the phone system is overloaded, such as when you call loved ones when there's been an earthquake/tornado in their area, you'll sometimes get a message "No circuits are available". Due to congestion, it can't reserve the necessary resources in one of the switches along the route, so the call can't be established. "Congestion" is important. Keep that in mind. We'll get to it a bit further down. The idea that each router needs to ACK a TCP packet means that the router needs to know about the TCP connection, that it needs to reserve resources for it. This was actually the original design of the OSI Network Layer. Let's rewind a bit and discuss "OSI". Back in the 1970s, the major computer companies of the time all had their own proprietary network stacks. IBM computers couldn't talk to DEC computers, and neither could talk to Xerox computers. They all worked differently. 
The need for a standard protocol stack was obvious. To do this, the "Open Systems Interconnect" or "OSI" group was established under the auspices of the ISO, the international standards organization. The first thing the OSI did was create a model for how protocol stacks would work. That's because different parts of the stack need to be independent from each other. For example, consider the local/physical link between two nodes, such as between your computer and the local router, or your router to the next router. You use Ethernet or WiFi to talk to your router. You may use 802.11n WiFi in the 2.4GHz band, or 802.11ac in the 5GHz band. However you do this, it doesn't matter as far as the TCP/IP packets are concerned. This is just between you and your router, and all the information is stripped out of the packets before they are forwarded across the Internet. Likewise, your ISP may use cable modems (DOCSIS) to connect your router to their routers, or they may use xDSL. This information is likewise stripped off before packets go further into the Internet. When your packets reach the other end, like at Google's servers, they contain no traces of this. There are 7 layers to the OSI model. The one we are most interested in is layer 3, the "Network Layer". This is the layer at which IPv4 and IPv6 operate. TCP will be layer 4, the "Transport Layer". The original idea for the network layer was that it would be connection oriented, modeled after the phone system. The phone system was already offering such a service, called X.25, which the OSI model was built around. X.25 was important in the pre-Internet era for creating long-distance computer connections, allowing cheaper connections than renting a full T1 circuit from the phone company. Normal telephone circuits are designed for a continuous flow of data, whereas computer communication is bursty. 
X.25 was especially popular for terminals, because it only needed to send packets from the terminal when users were typing. Layer 3 also included | ||||
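
The question that opens the TCP entry (does every node ACK, or only the final recipient?) can be illustrated with a toy model. In TCP/IP as deployed, routers forward packets without keeping per-connection state, and only the receiving endpoint acknowledges. The classes and field names below are invented for this sketch and do not correspond to any real networking library; the packet is a plain dictionary with a sequence number and a payload.

```python
from dataclasses import dataclass, field


@dataclass
class Router:
    """Toy router: forwards packets, keeps no connection state, sends no ACKs."""
    name: str
    forwarded: int = 0

    def forward(self, packet: dict) -> dict:
        self.forwarded += 1
        return packet


@dataclass
class Receiver:
    """Toy endpoint: the only party that acknowledges data."""
    acks_sent: list = field(default_factory=list)

    def receive(self, packet: dict) -> dict:
        # A TCP ACK carries the next sequence number the receiver expects.
        ack = {"ack": packet["seq"] + len(packet["data"])}
        self.acks_sent.append(ack)
        return ack


def send(packet: dict, path: list, receiver: Receiver) -> dict:
    """Pass the packet through every router, then let the endpoint ACK it."""
    for router in path:
        packet = router.forward(packet)
    return receiver.receive(packet)


path = [Router("home"), Router("isp"), Router("backbone")]
receiver = Receiver()
print(send({"seq": 100, "data": b"hello"}, path, receiver))
print(len(receiver.acks_sent), "ACK sent,", sum(r.forwarded for r in path), "hops")
```

Contrast this with the phone-system design described above, where each switch on the path would have to hold state for the connection.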

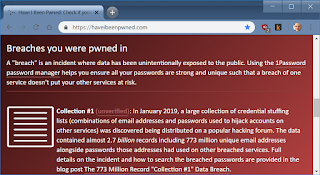

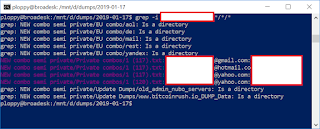

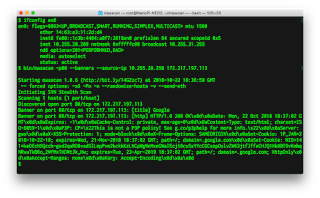

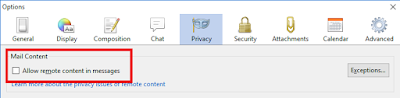

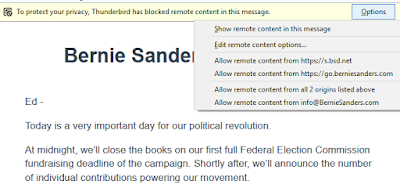

| 2019-02-08 10:08:18 | How Bezo's dick pics might've been exposed (direct link) | In the news, the National Enquirer has extorted Amazon CEO Jeff Bezos by threatening to publish the sext-messages/dick-pics he sent to his mistress. How did the National Enquirer get them? There are rumors that maybe Trump's government agents or the "deep state" were involved in this sordid mess. The more likely explanation is that it was a simple hack. Teenage hackers regularly do such hacks -- they aren't hard. This post is a description of how such hacks might've been done. To start with, from which end were they stolen? As a billionaire, I'm guessing Bezos himself has pretty good security, so I'm going to assume it was the recipient, his girlfriend, who was hacked. The hack starts by finding the email address she uses. People use the same email address for both public and private purposes. There are lots of "people finder" services on the Internet that you can use to track this information down. These services are partly scams, using "dark patterns" to get you to spend tons of money on them without realizing it, so be careful. Using one of these sites, I quickly found a couple of email accounts she's used, one at HotMail, another at GMail. I've blocked out her address. I want to describe how easy the process is; I'm not trying to doxx her. Next, I enter those email addresses into the website http://haveibeenpwned.com to see if hackers have ever stolen her account password. When hackers break into websites, they steal the account passwords, and then exchange them on the dark web with other hackers. The above website tracks this, helping you discover if one of your accounts has been so compromised. You should take this opportunity to enter your email address in this site to see if it's been so "pwned". I find that her email addresses have been included in that recent dump of 770 million accounts called "Collection#1". 
The http://haveibeenpwned.com site won't disclose the passwords, only the fact they've been pwned. However, I have a copy of that huge Collection#1 dump, so I can search it myself to get her password. As this output shows, I get a few hits, all with the same password. At this point, I have a password, but not necessarily the password to access any useful accounts. For all I know, this was the |

Hack | |||

| 2019-01-28 22:21:56 | Passwords in a file (direct link) | My dad is on some sort of committee for his local homeowners association. He asked about saving all the passwords in a file stored on Microsoft's cloud OneDrive, along with policy/procedures for the association. I assumed he called because I'm an internationally recognized cyberexpert. Or maybe he just wanted to chat with me*. Anyway, I thought I'd write up a response. The most important rule of cybersecurity is that it depends upon the risks/costs. That means if what you want to do is write down the procedures for operating a garden pump, including the passwords, then that's fine. This is because there's not much danger of hackers exploiting this. On the other hand, if the question is passwords for the association's bank account, then DON'T DO THIS. Such passwords should never be online. Instead, write them down and store the pieces of paper in a secure place. OneDrive is secure, as much as anything is. The problem is that people aren't secure. There's probably one member of the homeowners association who is constantly infecting themselves with viruses or falling victim to scams. This is the person who you are giving OneDrive access to. This is fine for the meaningless passwords, but very much not fine for bank accounts. OneDrive also has some useful backup features. Thus, when one of your members infects themselves with ransomware, which will encrypt all the OneDrive's contents, you can retrieve the old versions of the documents. I highly recommend groups like the homeowners association use OneDrive. I use it as part of my Office 365 subscription for $99/year. Just don't do this for banking passwords. In fact, not only should you not store such a password online, you should strongly consider getting "two factor authentication" set up for the account. 
This is a system where you need an additional hardware device/token in addition to a password (in some cases, your phone can be used as the additional device). This may not work if multiple people need to access a common account, but then, you should have multiple passwords, one for each individual, in such cases. Your bank should have descriptions of how to set this up. If your bank doesn't offer two factor authentication for its websites, then you really need to switch banks. For individuals, write your passwords down on paper. For elderly parents, write down a copy and give it to your kids. It should go without saying: store that paper in a safe place, ideally a safe, not a post-it note glued to your monitor. Again, this is for your important passwords, like for bank accounts and e-mail. For your Spotify or Pandora accounts (music services), security really doesn't matter. Lastly, the way hackers most often break into things like bank accounts is because people use the same password everywhere. When one site gets hacked, those passwords are then used to hack accounts on other websites. Thus, for important accounts, don't reuse passwords; make them unique for just that account. Since you can't remember unique passwords for every account, write them down. You can check if your password has been hacked this way by going to http://haveibeenpwned.com and entering your email address. Entering my dad's email address, I find that his accounts at Adobe, LinkedIn, and Disqus have been discovered by hackers (due to hacks of those websites) and published. I sure hope that whatever these passwords were, they are not the same as or similar to his passwords for GMail or his bank account. * the lame joke at the top was my dad's, so don't blame me :-) |

Hack | |||

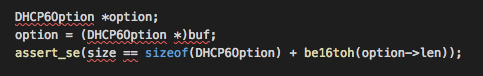

| 2018-12-15 22:40:22 | Notes on Build Hardening (direct link) | I thought I'd comment on a paper about "build safety" in consumer products, describing how software is built to harden it against hackers trying to exploit bugs. What is build safety? Modern languages (Java, C#, Go, Rust, JavaScript, Python, etc.) are inherently "safe", meaning they don't have "buffer-overflows" or related problems. However, C/C++ is "unsafe", and is the most popular language for building stuff that interacts with the network. In other cases, while the language itself may be safe, it'll use underlying infrastructure ("libraries") written in C/C++. When we are talking about hardening builds, making them safe or secure, we are talking about C/C++. In the last two decades, we've improved both hardware and operating systems around C/C++ in order to impose safety on it from the outside. We do this with options when the software is built (compiled and linked), and then when the software is run. That's what the paper above looks at: how consumer devices are built using these options, thereby measuring the security of these devices. In particular, we are talking about the Linux operating system here and the GNU compiler gcc. Consumer products almost always use Linux these days, though a few also use embedded Windows or QNX. They are almost always built using gcc, though some are built using a clone known as clang (or llvm). How software is built: Software is first compiled then linked. Compiling means translating the human-readable source code into machine code. Linking means combining multiple compiled files into a single executable. Consider a program hello.c. We might compile it using the following command: `gcc -o hello hello.c` This command takes the file hello.c, compiles it, then outputs (`-o`) an executable with the name hello. We can set additional compilation options on the command-line here. 
For example, to enable stack guards, we'd compile with a command that looks like the following: `gcc -o hello -fstack-protector hello.c` In the following sections, we are going to look at specific options and what they do. Stack guards: A running program has various kinds of memory, optimized for different use cases. One chunk of memory is known as the stack. This is the scratch pad for functions. When a function in the code is called, the stack grows with the additional scratchpad needs of that function, then shrinks back when the function exits. As functions call other functions, which call other functions, the stack keeps growing larger and larger. When they return, it then shrinks back again. The scratch pad for each function is known as the stack frame. Among the things stored in the stack frame is the return address, where the function was called from, so that when it exits, the caller of the function can continue executing where it left off. The way stack guards work is to stick a carefully constructed value, known as a canary, in between each stack frame. Right before the function exits, it'll check this canary in order to validate it hasn't been corrupted. If corruption is detected, the program exits, or crashes, to prevent worse things from happening. This solves the most commonly exploited vulnerability in C/C++ code, the stack buffer-overflow. This is the bug described in that famous paper Smashing the Stack for Fun and Profit | Guideline | |||
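
The canary check described in the build-hardening entry can be mimicked in Python as a conceptual simulation. Python itself is memory-safe, so this is not how gcc implements `-fstack-protector`; it only models a frame laid out as buffer, then canary, then return address, with an unchecked copy into the buffer. The sizes, the made-up return address, and the function name are all assumptions for the sketch.

```python
import secrets
from typing import Optional

BUFFER_SIZE = 8   # bytes reserved for the function's local buffer (arbitrary)
CANARY_SIZE = 4   # bytes of canary placed just after the buffer (arbitrary)


def call_function(user_input: bytes, canary: Optional[bytes] = None) -> str:
    """Simulate one function call with a canary guarding the return address."""
    if canary is None:
        canary = secrets.token_bytes(CANARY_SIZE)  # unpredictable per call
    return_address = b"\x00\x40\x11\x36"           # made-up address

    # Frame layout: [ local buffer ][ canary ][ return address ]
    frame = bytearray(BUFFER_SIZE) + bytearray(canary) + bytearray(return_address)

    # Unchecked copy, in the spirit of C's strcpy: nothing stops the input
    # from running past the buffer into the canary and return address.
    frame[0:len(user_input)] = user_input

    # The check inserted before the function returns.
    if bytes(frame[BUFFER_SIZE:BUFFER_SIZE + CANARY_SIZE]) != canary:
        return "abort: canary corrupted"
    return "returned normally"


print(call_function(b"hi"))        # fits inside the buffer
print(call_function(b"A" * 16))    # overruns the buffer and the canary
```

Because the canary sits between the buffer and the return address, an overflow that reaches the return address has to pass through the canary first, which is what makes the corruption detectable.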

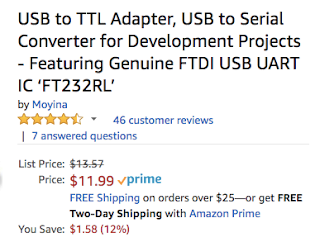

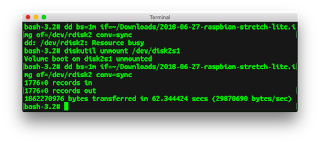

| 2018-12-11 22:59:55 | Notes about hacking with drop tools (direct link) | In this report, Kaspersky found Eastern European banks hacked with Raspberry Pis and "Bash Bunnies" (DarkVishnya). I thought I'd write up some more detailed notes on this. Drop tools: A common hacking/pen-testing technique is to drop a box physically on the local network. On this blog, there are articles going back 10 years discussing this. In the old days, this was done with $200 "netbooks" (cheap notebook computers). These days, it can be done with $50 "Raspberry Pi" computers, or even $25 consumer devices reflashed with Linux. A "Raspberry Pi" is a $35 single board computer, for which you'll need to add about another $15 worth of stuff to get it running (power supply, flash drive, and cables). These are extremely popular hobbyist computers that are used for everything from home servers to robotics to hacking. They have spawned a large number of clones, like the ODROID, Orange Pi, NanoPi, and so on. With a quad-core, 1.4 GHz, single-issue processor, 2 gigs of RAM, and typically at least 8 gigs of flash, these are pretty powerful computers. Typically what you'd do is install Kali Linux. This is a Linux "distro" that contains all the tools hackers want to use. You then drop this box physically on the victim's network. We often called these "dropboxes" in the past, but now that there's a cloud service called "Dropbox", this becomes confusing, so I guess we can call them "drop tools". The advantage of using something like a Raspberry Pi is that it's cheap: once dropped on a victim's network, you probably won't ever get it back again. Gaining physical access to even secure banks isn't that hard. Sure, getting to the money is tightly controlled, but other parts of the bank aren't nearly as secure. One good trick is to pretend to be a banking inspector. At least in the United States, they'll quickly bend over and spread them if they think you are a regulator. 
Or, you can pretend to be a maintenance worker there to fix the plumbing. All it takes is a uniform with a logo and what appears to be a valid work order. If questioned, whip out the clipboard and ask them to sign off on the work. Or, if all else fails, just walk in brazenly as if you belong. Once inside the physical network, you need to find a place to plug something in. Ethernet and power plugs are often underneath/behind furniture, so that's not hard. You might find access to a wiring closet somewhere, as Aaron Swartz famously did. You'll usually have to connect via Ethernet, as it requires no authentication/authorization. If you could connect via WiFi, you could probably do it outside the building using directional antennas without going through all this. Now that you've got your evil box installed, there is the question of how you remotely access it. It's almost certainly firewalled, preventing any inbound connection. One choice is to configure it for outbound connections. When doing pentests, I configure reverse SSH command-prompts to a command-and-control server. Another alternative is to create an SSH Tor hidden service. There are a myriad of other ways you might do this. They all suffer the problem that anybody looking at the organization's outbound traffic can notice these connections. Another alternative is to use the WiFi. This allows you to physically sit outside in the parking lot and connect to the box. This can sometimes be detected using WiFi intrusion prevention systems, though it's not hard to get around that. The downside is that it puts you in some physical jeopardy, because you have to be physically near the building. However, you can mitigate this in some cases, such as by sticking a second Raspberry Pi in a nearby bar that is close enough to connect, and then using the bar's Internet connection to hop-scotch on in. | Vulnerability | |||

| 2018-11-18 19:51:36 | Some notes about HTTP/3 (direct link) | HTTP/3 is going to be standardized. As an old protocol guy, I thought I'd write up some comments. Google (pbuh) has both the most popular web browser (Chrome) and the two most popular websites (#1 Google.com #2 Youtube.com). Therefore, they are in control of future web protocol development. Their first upgrade they called SPDY (pronounced "speedy"), which was eventually standardized as the second version of HTTP, or HTTP/2. Their second upgrade they called QUIC (pronounced "quick"), which is being standardized as HTTP/3. SPDY (HTTP/2) is already supported by the major web browsers (Chrome, Firefox, Edge, Safari) and major web servers (Apache, Nginx, IIS, CloudFlare). Many of the most popular websites support it (even non-Google ones), though you are unlikely to ever see it on the wire (sniffing with Wireshark or tcpdump), because it's always encrypted with SSL. While the standard allows for HTTP/2 to run raw over TCP, all the implementations only use it over SSL. There is a good lesson here about standards. Outside the Internet, standards are often de jure, run by government, driven by getting all major stakeholders in a room and hashing it out, then using rules to force people to adopt it. On the Internet, people implement things first, and then if others like it, they'll start using it, too. Standards are often de facto, with RFCs being written for what is already working well on the Internet, documenting what people are already using. SPDY was adopted by browsers/servers not because it was standardized, but because the major players simply started adding it. The same is happening with QUIC: the fact that it's being standardized as HTTP/3 is a reflection that it's already being used, rather than a milestone after which people can start using it. QUIC is really more of a new version of TCP (TCP/2???) than a new version of HTTP (HTTP/3). 
It doesn't really change what HTTP/2 does so much as change how the transport works. Therefore, my comments below are focused on transport issues rather than HTTP issues. The major headline feature is faster connection setup and lower latency. TCP requires a number of packets being sent back-and-forth before the connection is established. SSL again requires a number of packets sent back-and-forth before encryption is established. If there is a lot of network delay, such as when people use satellite Internet with half-second ping times, it can take quite a long time for a connection to be established. By reducing round-trips, connections get set up faster, so that when you click on a link, the linked resource pops up immediately. The next headline feature is bandwidth. There is always a bandwidth limitation between source and destination of a network connection, which is almost always due to congestion. Both sides need to discover this speed so that they can send packets at just the right rate. Sending packets too fast, so that they'll get dropped, causes even more congestion for others without improving transfer rate. Sending packets too slowly means suboptimal use of the network. How HTTP traditionally does this is bad. Using a single TCP connection didn't work for HTTP because interactions with websites require multiple things to be transferred simultaneously, so browsers opened multiple connections to the web server (typically 4). However, this breaks the bandwidth estimation, because each of your TCP connections is trying to do it independently as if the other connections don't exist. SPDY addressed this with its multiplexing feature, which combined multiple interactions between browser/server under a single bandwidth calculation. QUIC extends this multiplexing, making it even easier to handle multiple interactions between the browser/server, without any one interaction blocking another, but with a common bandwidth estimation. 
This will make interactions smoother from a user's perspective, while | ||||
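To put rough numbers on the connection-setup point above, here is a minimal sketch of how round trips translate into delay on a half-second satellite link. The round-trip counts are assumptions that do not come from the post itself: one round trip for the TCP handshake, two for a full TLS 1.2 handshake, one for TLS 1.3, one for a fresh QUIC connection, and zero for a resumed QUIC connection using 0-RTT. The function and table names are hypothetical.

```python
# Rough setup-delay arithmetic: delay before the first request can be sent.
# Round-trip counts below are illustrative assumptions, not measurements.

RTT_SECONDS = 0.5  # "half-second ping times" on a satellite link

ROUND_TRIPS_BEFORE_FIRST_REQUEST = {
    "TCP + TLS 1.2": 1 + 2,      # TCP handshake, then full TLS 1.2 handshake
    "TCP + TLS 1.3": 1 + 1,      # TCP handshake, then TLS 1.3 handshake
    "QUIC (new connection)": 1,  # transport and crypto handshakes combined
    "QUIC (resumed, 0-RTT)": 0,  # request sent in the first flight
}


def setup_delay(rtt_seconds: float, round_trips: int) -> float:
    """Time spent on handshakes before any request data can leave."""
    return rtt_seconds * round_trips


if __name__ == "__main__":
    for name, trips in ROUND_TRIPS_BEFORE_FIRST_REQUEST.items():
        print(f"{name}: {setup_delay(RTT_SECONDS, trips):.1f} s")
```

The model ignores server processing time and packet loss; it only shows why cutting round trips matters most on high-latency links.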

| 2018-11-04 18:22:46 | Brian Kemp is bad on cybersecurity (direct link) | I'd prefer a Republican governor, but as a cybersecurity expert, I have to point out how bad Brian Kemp (candidate for Georgia governor) is on cybersecurity. When notified about vulnerabilities in election systems, his response has been to shoot the messenger rather than fix the vulnerabilities. This was the premise behind the cybercrime bill earlier this year that was ultimately vetoed by the current governor after vocal opposition from cybersecurity companies. More recently, he just announced that he's investigating the Georgia State Democratic Party for a "failed hacking attempt". According to news stories, state elections websites are full of common vulnerabilities, those documented by the OWASP Top 10, such as "direct object references" that would allow any election registration information to be read or changed, such as allowing a hacker to cancel registrations of those of the other party. Testing for such weaknesses is not a crime. Indeed, it's desirable that people can test for security weaknesses. Systems that aren't open to test are insecure. This concept is the basis for many policy initiatives at the federal level, to not only protect researchers probing for weaknesses from prosecution, but to even provide bounties encouraging them to do so. The DoD has a "Hack the Pentagon" initiative encouraging exactly this. But the State of Georgia is stereotypically backwards and thuggish. Earlier this year, the legislature passed SB 315 that criminalized this activity of merely attempting to access a computer without permission, to probe for possible vulnerabilities. To the ignorant and backwards person, this seems reasonable: of course this bad activity should be outlawed. But as we in the cybersecurity community have learned over the last decades, this only outlaws your friends from finding security vulnerabilities, and does nothing to discourage your enemies. 
Russian election meddling hackers are not deterred by such laws, only Georgia residents concerned whether their government websites are secure. It's your own users, and well-meaning security researchers, who are the primary source for improving security. Unless you live under a rock (like Brian Kemp, apparently), you'll have noticed that every month you have your Windows desktop or iPhone nagging you about updating the software to fix security issues. If you look behind the scenes, you'll find that most of these security fixes come from outsiders. They come from technical experts who accidentally come across vulnerabilities. They come from security researchers who specifically look for vulnerabilities. It's because of this "research" that systems are mostly secure today. A few days ago was the 30th anniversary of the "Morris Worm" that took down the nascent Internet in 1988. The net of that time was hostile to security research, with major companies ignoring vulnerabilities. Systems then were laughably insecure, but vendors tried to address the problem by suppressing research. The Morris Worm exploited several vulnerabilities that were well-known at the time, but ignored by the vendor (in this case, primarily Sun Microsystems). Since then, with a culture of outsiders disclosing vulnerabilities, vendors have been pressured into fixing them. This has led to vast improvements in security. I'm posting this from a public WiFi hotspot in a bar, for example, because computers are secure enough for this to be safe. 10 years ago, such activity wasn't safe. The Georgia Democrats obviously have concerns about the integrity of election systems. They have every reason to thoroughly probe an elections website looking for vulnerabilities. This sort of activity should be encouraged, not supp | Vulnerability Threat Guideline | |||

| 2018-11-02 02:57:36 | Why no cyber 9/11 for 15 years? (direct link) | This article in The Atlantic asks why there hasn't been a cyber-terrorist attack in the last 15 years, or as it phrases it: "National-security experts have been warning of terrorist cyberattacks for 15 years. Why hasn't one happened yet?" As a pen-tester who's broken into power grids and found 0day exploits in control center systems, I thought I'd write up some comments. Instead of asking why one hasn't happened yet, maybe we should instead ask why national-security experts keep warning about them. One possible answer is that national-security experts are ignorant. I get the sense that "national security experts" have very little expertise in cyber. That's why I include a brief resume at the top of this article: I've actually broken into a power grid and found 0days in critical power grid products (specifically, the ABB implementation of ICCP on AIX -- it's rather an obvious buffer-overflow, *cough* ASN.1 *cough*, I don't know if they ever fixed it). Another possibility is that they are fear mongering in order to support their agenda. That's the problem with "experts": they get their expertise by being employed to achieve some goal. The ones who know most about an issue are simultaneously the ones most biased about an issue. They have every incentive to make people be afraid, and little incentive to tell the truth. The most likely answer, though, is simply because they can. Anybody can warn of "digital 9/11" and be taken seriously, regardless of expertise. They'll get all the press. It's always the Morally Right thing to say. You never have to back it up with evidence. Conversely, those who say the opposite don't get the same level of press, and are frequently challenged to defend their abnormal stance. Indeed, that's how this article by The Atlantic works. 
Its entire premise is that the national security experts are still "right" even though their predictions haven't happened, and it's reality that's "wrong". Now let's consider the original question. One good answer in the article is "cause certain types of fear and terror, that garner certain media attention, that galvanize followers". Blowing something up causes more fear in the target population than deleting some data. But the same is true of the terrorists themselves, that they prefer violence. In other words, what motivates terrorists, the ends or the means? Is it the need to achieve a political goal? Or is it simply about looking for an excuse to commit violence? I suspect that it's the latter issue. It's not that terrorists are violent so much as violent people are attracted to terrorism. This can explain a lot, such as why they have such poor op-sec and encryption, as I've written about before. They enjoy learning how to shoot guns and trigger bombs, but they don't enjoy learning how to use a computer correctly. I've explored the cyber Islamic dark web and come to a couple conclusions about it. The primary motivation of these hackers is gay porn. A frequent initiation rite to gain access to these forums is to post pictures of your, well, equipment. Such things are repressed in their native countries and societies, so hacking becomes a necessary skill in order to get it. It's hard for us to understand their motivations. From our western perspective, we'd think gay young men would be on our side, motivated to fight against their own governments in defense of gay rights, in order to achieve marriage equality. None of them want that. Their goal is to get married and have children. Sure, they want gay sex and intimate relationships with men, but they also want a subservient wife who manages the household, and the deep family ties that | Hack | |||

| 2018-11-01 02:03:51 | Masscan and massive address lists (direct link) | I saw this go by on my Twitter feed. I thought I'd blog on how masscan solves the same problem. "If you do @nmap scanning with big exclusion lists, things are about to get a lot faster. ;)" - Daniel Miller ✝ (@bonsaiviking), November 1, 2018. Both nmap and masscan are port scanners. The difference is that nmap does an intensive scan on a limited range of addresses, whereas masscan does a light scan on a massive range of addresses, including the range of 0.0.0.0 - 255.255.255.255 (all addresses). If you've got a 10-gbps link to the Internet, it can scan the entire thing in under 10 minutes, from a single desktop-class computer. How masscan deals with exclude ranges is probably its defining feature. That seems kinda strange, since it's a little used feature in nmap. But when you scan the entire list, people will complain, with nasty emails, so you are going to build up a list of hundreds, if not thousands, of addresses to exclude from your scans. Therefore, the first design choice is to combine the two lists, the list of targets to include and the list of targets to exclude. Other port scanners don't do this because they typically work from a large include list and a short exclude list, so they optimize for the larger thing. In mass scanning the Internet, the exclude list is the largest thing, so that's what we optimize for. It makes sense to just combine the two lists. So the performance question now isn't how to look up an address in an exclude list efficiently, it's how to quickly choose a random address from a large include target list. Moreover, the decision is how to do it with as little state as possible. That's the trick for sending massive numbers of packets at rates of 10 million packets-per-second: not keeping any bookkeeping of what was scanned. 
I'm not sure exactly how nmap randomizes its addresses, but the documentation implies that it does a block of addresses at a time, and randomizes that block, keeping state on which addresses it's scanned and which ones it hasn't. The way masscan works is not to randomly pick an IP address so much as to randomize the index. To start with, we created a sorted list of IP address ranges, the targets. The total number of IP addresses in all the ranges is target_count (not the number of ranges but the number of all IP addresses). We then define a function pick() that returns one of those IP addresses given the index: `ip = pick(targets, index);` where index is in the range [0..target_count). This function is just a binary search. After the ranges have been sorted, a start_index value is added to each range, which is the total number of IP addresses up to that point. Thus, given a random index, we search the list of start_index values to find which range we've chosen, and then which IP address within that range. The function is here, though reading it, I realize I need to refactor it to make it clearer. (I read the comments telling me to refactor it, and I realize I haven't gotten around to that yet :-). Given this system, we can now do an in-order (not randomized) port scan by doing the follow | ||||
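The index-to-address lookup described above can be sketched in a few lines. This is a minimal illustration in Python, not masscan's actual C code: the `Targets` class, the `build_targets()` helper, and the tuple layout are hypothetical, and the sketch assumes the ranges are inclusive, non-overlapping, and given as integers. Only the names `pick`, `targets`, `index`, `start_index`, and `target_count` come from the post.

```python
from bisect import bisect_right
from dataclasses import dataclass
from ipaddress import IPv4Address


@dataclass
class Targets:
    ranges: list        # sorted (first, last) inclusive integer ranges
    start_index: list   # addresses preceding each range
    target_count: int   # total addresses across all ranges


def build_targets(ranges):
    """Sort the ranges and record the running address count before each."""
    ordered = sorted(ranges)
    starts, total = [], 0
    for first, last in ordered:
        starts.append(total)
        total += last - first + 1
    return Targets(ordered, starts, total)


def pick(targets, index):
    """Map index in [0, target_count) to an address via binary search."""
    if not 0 <= index < targets.target_count:
        raise IndexError("index out of range")
    # Rightmost range whose start_index is <= index.
    i = bisect_right(targets.start_index, index) - 1
    first, _last = targets.ranges[i]
    return first + (index - targets.start_index[i])


if __name__ == "__main__":
    targets = build_targets([
        (int(IPv4Address("192.168.1.10")), int(IPv4Address("192.168.1.11"))),
        (int(IPv4Address("10.0.0.0")), int(IPv4Address("10.0.0.3"))),
    ])
    for index in range(targets.target_count):
        print(index, IPv4Address(pick(targets, index)))
```

Because the only per-scan state is the index, any permutation of `[0, target_count)` fed through `pick()` visits every target exactly once without tracking which addresses have been scanned.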